Chapter: Psychology: Sensation

From Sound Waves to Hearing

So far, our discussion has described only the physics of sound waves, the stimulus for hearing. What do our ear, and then our brain, do with this stimulus to produce the sensation of hearing?

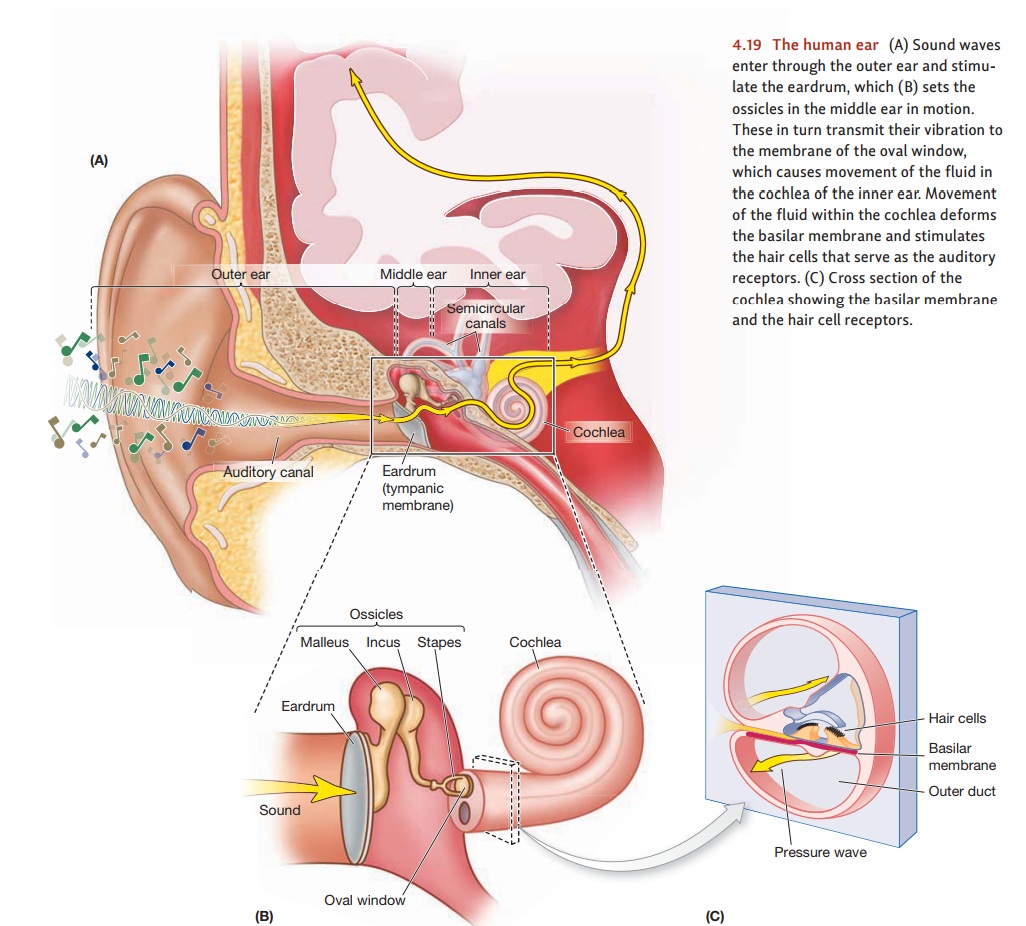

GATHERING THE SOUND WAVES

Mammals have their receptors for hearing deep within the ear, in a snail-shaped structure called the cochlea. To reach the cochlea, sounds must travel a complicated path (Figure 4.19). The outer ear collects the sound waves from the air and directs them toward the eardrum, a taut membrane at the end of the auditory canal. The sound waves make the eardrum vibrate, and these vibrations are then transmitted to the oval window, the membrane that separates the middle ear from the inner ear. This transmission is accomplished by a trio of tiny bones called the auditory ossicles, the smallest bones in the human body. The vibrations of the eardrum move the first ossicle (the malleus), which then moves the second (the incus), which in turn moves the third (the stapes). The stapes, which is attached to the oval window, completes the chain by passing the vibration pattern on to it. (The ossicles are sometimes referred to by the English translations of their names: the hammer, the anvil, and the stirrup, all references to the bones’ shapes.) The movements of the oval window then give rise to waves in the fluid that fills the cochlea, causing (at last) a response by the receptors.

Why do we have this roundabout method of sound transmission? The answer lies in the fact that these various components work together to create an entirely mechanical, but very high-fidelity, amplifier. The need for amplification arises because sound waves reach us through the air, and the proximal stimulus for hearing is made up of minute changes in the air pressure. As we just mentioned, though, the inner ear is (like most body parts) filled with fluid. Therefore, in order for us to hear, the changes in air pressure must cause changes in fluid pressure, and this poses a problem: fluid is harder to set in motion than air is.

To solve this problem, the pressure waves have to be amplified as they move toward the receptors, and several features of the ear’s organization accomplish this. For example, the outer ear itself is shaped like a “sound scoop” so it can funnel the pressure waves toward the auditory canal. Within the middle ear, the ossicles use mechanical leverage to increase the sound pressure. Finally, the eardrum is about 20 times larger than the portion of the oval window that’s moved by the ossicles. As a result, the fairly weak force provided by sound waves acting on the entire eardrum is transformed into a much stronger pressure concentrated on the (smaller) oval window.
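The area argument above can be made concrete with the definition of pressure as force per unit area. This is a simplified sketch resting on an idealization the text does not state: assume the whole force F collected by the eardrum reaches the oval window, and ignore the extra leverage of the ossicles. Writing A_ed for the eardrum's effective area and A_ow for the portion of the oval window moved by the stapes, with A_ed ≈ 20 A_ow:

```latex
P_{\text{ed}} = \frac{F}{A_{\text{ed}}}, \qquad
P_{\text{ow}} = \frac{F}{A_{\text{ow}}}
\quad\Longrightarrow\quad
\frac{P_{\text{ow}}}{P_{\text{ed}}} = \frac{A_{\text{ed}}}{A_{\text{ow}}} \approx 20
```

Under these assumptions, the same force produces roughly 20 times the pressure at the oval window. The ossicles' leverage would add a further gain on top of this, which is not quantified here.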

TRANSDUCTION IN THE COCHLEA

For most of its length, the cochlea is divided into an upper and lower section by several structures, including the basilar membrane. The actual auditory receptors—the 15,000 hair cells in each ear—are lodged between the basilar membrane and other membranes above it (Figure 4.19c).

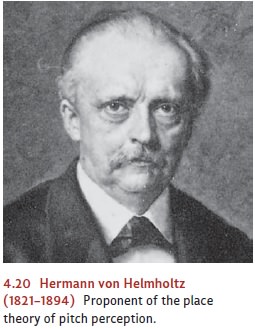

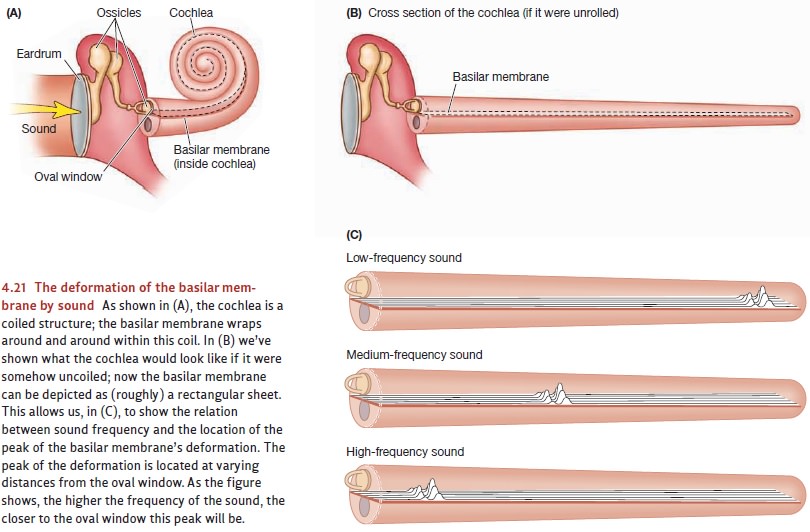

Motion of the oval window produces pressure changes in the cochlear fluid that, in turn, lead to vibrations of the basilar membrane. As the basilar membrane vibrates, its deformations bend the hair cells; this bending causes ion channels in the membranes of these cells to open, triggering the neural response. Sound waves arriving at the ear generally cause the entire basilar membrane to vibrate, but the vibration is not uniform. Some regions of the membrane actually move more than others, and the frequency of the incoming sound determines where the motion is greatest. For higher frequencies, the region of greatest movement is at the end of the basilar membrane closer to the oval window; for lower frequencies, the greatest movement occurs closer to the cochlear tip.

More than a century ago, these points led Hermann von Helmholtz (Figure 4.20) to propose the place theory of pitch perception. This theory asserts that the nervous system is able to identify a sound’s pitch simply by keeping track of where the movement is greatest along the length of the basilar membrane. More specifically, stimulation of hair cells at one end of the membrane leads to the experience of a high tone, while stimulation of hair cells at the other end leads to the sensation of a low tone (Figure 4.21).

There’s a problem with this theory, though. As the frequency of the stimulus gets lower and lower, the pattern of movement it produces on the basilar membrane gets broader and broader. At frequencies below 50 hertz, the movement produced by a sound stimulus deforms the entire membrane just about equally. Therefore, if we were using the location of the basilar membrane’s maximum movement as our cue to a sound’s frequency, we’d be unable to tell apart any of these low frequencies. But that’s not what happens; humans, in fact, can discriminate frequencies as low as 20 hertz. Apparently, then, the nervous system has another way of sensing pitch besides basilar location.
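Both the place idea and the low-frequency problem just described can be illustrated with a toy model. The sketch below is hypothetical. It maps frequency to a position between 0.0 (the end of the basilar membrane near the oval window) and 1.0 (the cochlear tip) on a logarithmic scale, which is an assumption chosen only for simplicity. It also uses the text's 50-hertz figure as the point below which no single region moves most. The function name `place_of_max` and the 20,000-hertz upper end of the map are illustrative choices, not measured values.

```python
import math

# Hypothetical toy parameters (not measurements of a real cochlea).
LOW_CUTOFF_HZ = 50.0      # from the text: below this, the membrane moves about equally everywhere
HIGH_LIMIT_HZ = 20000.0   # assumed upper end of the toy place map


def place_of_max(freq_hz):
    """Return where basilar-membrane movement peaks, from 0.0 (near the oval
    window) to 1.0 (cochlear tip), or None if no single region moves most."""
    if freq_hz < LOW_CUTOFF_HZ:
        return None  # broad, nearly uniform movement: no peak to locate
    f = min(freq_hz, HIGH_LIMIT_HZ)
    # Log-spaced toy mapping: high frequencies near 0.0, low frequencies near 1.0.
    return math.log(HIGH_LIMIT_HZ / f) / math.log(HIGH_LIMIT_HZ / LOW_CUTOFF_HZ)


if __name__ == "__main__":
    for hz in (8000, 1000, 100, 40, 20):
        print(hz, place_of_max(hz))
```

In this toy, 8,000, 1,000, and 100 hertz land at clearly different places. However, 40 and 20 hertz both return None, so a decoder that relied only on place could not tell them apart, even though listeners can.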

This other means of sensing pitch is tied to the firing rate of cells in the auditory nerve. For lower-pitched sounds, the firing of these cells is synchronized with the peaks of the incoming sound waves. Consequently, the rate of firing, measured in neural impulses per second, ends up matched to the frequency of the wave, measured in crests per second. This coding, based on the exact timing of the cells’ firing, is then relayed to higher neural centers that interpret this information as pitch.
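Here is a minimal sketch of this timing code. It rests on an idealized assumption that is not in the text: a nerve cell fires exactly once at every crest of a low-frequency wave and never misses a crest. The helper names `crest_locked_spikes` and `rate_from_spikes` are hypothetical. Under that assumption, counting impulses per second recovers the frequency directly, including for tones below 50 hertz.

```python
def crest_locked_spikes(freq_hz, duration_s):
    """Idealized spike times (seconds) in [0, duration_s): one impulse at each
    crest of a wave cos(2*pi*f*t), whose crests fall at t = 0, 1/f, 2/f, ..."""
    spikes = []
    k = 0
    while k / freq_hz < duration_s:
        spikes.append(k / freq_hz)
        k += 1
    return spikes


def rate_from_spikes(spike_times, duration_s):
    """Firing rate in impulses per second; with crest locking this equals
    the wave's frequency in crests per second."""
    return len(spike_times) / duration_s


if __name__ == "__main__":
    for hz in (20, 40):
        spikes = crest_locked_spikes(hz, duration_s=1.0)
        print(hz, "Hz ->", rate_from_spikes(spikes, 1.0), "impulses/s")
```

The 20-hertz and 40-hertz tones give different firing rates, which is exactly the distinction the place code could not make. This idealization is meant for low pitches only. For higher pitches, as the next paragraph notes, the location-based mechanism plays the larger role.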

Note, then, that the ear has two ways to encode pitch: based on the location of maximum movement on the basilar membrane, and based on the firing rate of cells in the auditory nerve. For higher-pitched sounds, the location-based mechanism plays a larger role; for lower-pitched sounds, the frequency of firing is more important (Goldstein, 1999).

FURTHER PROCESSING OF AUDITORY INFORMATION

Neurons carry the auditory signals from the cochlea to the midbrain. From there, the signals travel to the medial geniculate nucleus in the thalamus, an important subcortical structure in the forebrain. Other neurons then carry the signal to the primary projection areas for hearing, in the cortex of the temporal lobe. These neurons and those that follow have a lot of work to do. The auditory signal must be analyzed for its timbre, the sound quality that helps us distinguish a clarinet from an oboe, or one person’s voice from another’s. The signal must also be tracked across time, to evaluate the patterns of pitch change that define a melody or to distinguish an assertion (“I can have it”) from a question (“I can have it?”). The nervous system must also do the analysis that allows us to identify the sounds we hear, so we know that we heard our cell phone and not someone else’s, or so we can recognize the words someone is speaking to us. Finally, the nervous system draws one other type of information from the sound signal: we can tell fairly accurately where a sound is coming from, whether from the left or the right, for example. This localization relies on several cues, including a close comparison of the left ear’s signal with the right ear’s and tracking of how the arrival at the two ears changes when we turn our head slightly to the left or right.
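One way to picture the left/right comparison is to ask how far one ear’s signal must be shifted in time to line up best with the other’s. The sketch below answers that question with cross-correlation. This is a standard signal-processing technique swapped in here for illustration; the text does not claim that the auditory system computes it this way. The function name `best_lag`, the use of sample counts as time units, and the click signals are all hypothetical.

```python
def best_lag(left, right, max_lag):
    """Return the shift (in samples) by which `right` trails `left`, chosen as
    the shift that maximizes the cross-correlation of the two signals.
    A positive result means the sound reached the left ear first."""
    best, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        # Sum of products of left[i] with right[i + lag], where both exist.
        score = sum(
            left[i] * right[i + lag]
            for i in range(len(left))
            if 0 <= i + lag < len(right)
        )
        if score > best_score:
            best, best_score = lag, score
    return best


if __name__ == "__main__":
    # A brief click that arrives at the left ear 3 samples before the right ear.
    left = [0.0] * 20
    right = [0.0] * 20
    left[5], left[6] = 1.0, 0.5
    right[8], right[9] = 1.0, 0.5
    lag = best_lag(left, right, max_lag=5)
    print(lag, "-> source toward the", "left" if lag > 0 else "right" if lag < 0 else "center")
```

The ear nearer the source receives the sound first, so a positive lag points to the left and a negative lag to the right.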

Let’s keep our focus, though, on how we can detect a sound’s pitch. It turns out that each neuron along the auditory pathway responds to a wide range of pitches, but even so, each has a “preferred” pitch: a frequency of sound to which that neuron fires more vigorously than it fires to any other frequency. As in the other senses, this pattern of responding makes it impossible to interpret the activity of any individual neuron on its own. If, for example, the neuron is firing at a moderate rate, this might mean the neuron is responding to a soft presentation of its preferred pitch, or it might mean the neuron is responding to a louder version of a less preferred pitch.

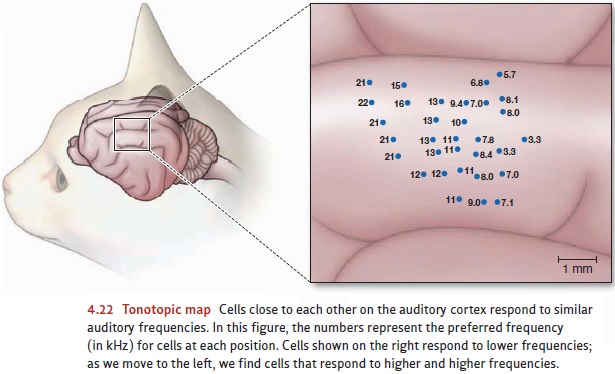

To resolve this ambiguity, the nervous system must compare the activity in each of these neurons to the level of activity in other neurons, to determine the overall pattern. So the detection of pitch, like most other sensory processing, relies on a “pattern code.” This process of comparison may be made easier by the fact that neurons with similar preferred pitches tend to be located close to each other on the cortex. This arrangement creates what’s known as a tonotopic map, a map organized on the basis of tone. For example, Figure 4.22 shows the results of a careful mapping of the auditory cortex of the cat. There’s an obvious ordering of preferred frequencies as we move across the surface of this brain area.
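A toy population can show both the ambiguity of a single neuron and how comparing across neurons resolves it. Everything here is a hypothetical model, not data, built on three assumptions. First, each neuron’s response falls off linearly with distance from its preferred pitch, measured in octaves. Second, response scales in proportion to loudness. Third, the neurons’ preferred pitches are laid out in order, loosely like a tonotopic map. The names `response`, `population`, `decode_pitch`, and `PREFERRED_HZ` are illustrative.

```python
import math

MAX_RATE = 100.0  # hypothetical peak firing rate (impulses per second)
# Hypothetical preferred pitches, ordered as along a tonotopic map.
PREFERRED_HZ = [250.0, 500.0, 1000.0, 2000.0, 4000.0]


def response(preferred_hz, freq_hz, loudness):
    """Toy tuning curve: full response at the preferred pitch, falling to
    zero two octaves away; loudness (0..1) scales the whole curve."""
    octaves_away = abs(math.log2(freq_hz / preferred_hz))
    tuning = max(0.0, 1.0 - octaves_away / 2.0)
    return MAX_RATE * loudness * tuning


def population(freq_hz, loudness):
    """Firing rates of every neuron in the toy map for one sound."""
    return [response(p, freq_hz, loudness) for p in PREFERRED_HZ]


def decode_pitch(rates):
    """Read pitch from the pattern: the preferred pitch of the most active
    neuron. Loudness scales all rates equally, so it does not move the peak."""
    peak_index = max(range(len(rates)), key=lambda i: rates[i])
    return PREFERRED_HZ[peak_index]


if __name__ == "__main__":
    soft_preferred = population(1000.0, loudness=0.5)
    loud_other = population(2000.0, loudness=1.0)
    # The 1000-Hz neuron (index 2) fires at the same rate in both cases...
    print(soft_preferred[2], loud_other[2])
    # ...but the pattern across the population differs, and so does the decoded pitch.
    print(decode_pitch(soft_preferred), decode_pitch(loud_other))
```

In this toy, the neuron preferring 1,000 hertz fires at 50 impulses per second to both a soft 1,000-hertz tone and a loud 2,000-hertz tone. The patterns across the whole population differ, however, so comparing neurons recovers the pitch. Picking the most active neuron is only one simple way to read a pattern and is used here for brevity.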
