Chapter: Embedded Systems

Embedded Software Development Tools


EMULATORS

In computing, an emulator is hardware, software, or both that duplicates (emulates) the functions of one computer system (the guest) on another computer system (the host), so that the emulated behavior closely resembles the behavior of the real guest system. This focus on exact reproduction of behavior contrasts with other forms of computer simulation, in which an abstract model of a system is simulated. For example, a computer simulation of a hurricane or a chemical reaction is not emulation.

Emulators in computing

Emulation refers to the ability of a computer program in an electronic device to emulate (imitate) another program or device. Many printers, for example, are designed to emulate Hewlett-Packard LaserJet printers because so much software is written for HP printers. If a non-HP printer emulates an HP printer, any software written for a real HP printer will also work with the non-HP printer in emulation mode and produce equivalent output.

A hardware emulator is an emulator that takes the form of a hardware device. Examples include FPGA-based hardware emulators and the DOS-compatible cards installed in some Old World Macintoshes, such as the Centris 610 or Performa 630, which allowed them to run PC programs.

In a theoretical sense, the Church-Turing thesis implies that any operating environment can be emulated within any other. In practice, however, it can be quite difficult, particularly when the exact behavior of the system to be emulated is not documented and has to be deduced through reverse engineering. The thesis also says nothing about timing constraints; if the emulator does not perform as quickly as the original hardware, the emulated software may run much more slowly than it would have on the original hardware, possibly triggering timer interrupts that alter its behavior.

Emulation in preservation

Emulation

is a strategy in digital preservation to combat obsolescence. Emulation focuses

on recreating an original computer environment, which can be time-consuming and

difficult to achieve, but valuable because of its ability to maintain a closer

connection to the authenticity of the digital object.

Emulation

addresses the original hardware and software environment of the digital object,

and recreates it on a current machine. The emulator allows the user to have

access to any kind of application or operating system on a current platform,

while the software runs as it did in its original environment. Jeffery

Rothenberg, an early proponent of emulation as a digital preservation strategy,

states, "the ideal approach would provide a single extensible, long-term

solution that can be designed once and for all and applied uniformly,

automatically, and in synchrony (for example, at every refresh cycle) to all

types of documents and media". He further states that this should not only

apply to out of date systems, but also be upwardly mobile to future unknown

systems. Practically speaking, when a certain application is released in a new

version, rather than address compatibility issues and migration for every

digital object created in the previous version of that application, one could

create an emulator for the application, allowing access to all of said digital

objects.

Benefits

Basilisk II emulates a Macintosh 68k using interpretation code and dynamic recompilation.

Emulators

allow users to play games for discontinued consoles.

Emulators maintain the original look, feel, and behavior of the digital object, which is just as important as the digital data itself.

Despite the original cost of developing an emulator, it may prove to be the more cost-efficient solution over time.

Reduces labor hours, because rather than continuing an ongoing task of continual data migration for every digital object, once the library of past and present operating systems and application software is established in an emulator, these same technologies are used for every document using those platforms.

Many emulators have already been developed and released under the GNU General Public License through the open source environment, allowing for wide-scale collaboration.

Emulators allow software exclusive to one system to be used on another. For example, a PlayStation 2 exclusive video game could (in theory) be played on a PC or Xbox 360 using an emulator. This is especially useful when the original system is difficult to obtain, or incompatible with modern equipment (e.g. old video game consoles may be unable to connect to modern TVs).

OBSTACLES

Intellectual property - Many technology vendors implemented non-standard features during program development in order to establish their niche in the market, while simultaneously applying ongoing upgrades to remain competitive. While this may have advanced the technology industry and increased vendors' market share, it has left users lost in a preservation nightmare with little supporting documentation due to the proprietary nature of the hardware and software.

Copyright laws are not yet in effect to address saving the documentation and specifications of proprietary software and hardware in an emulator module.

Emulators

are often used as a copyright infringement tool, since they allow users to play

video games without having to buy the console, and rarely make any attempt to

prevent the use of illegal copies. This leads to a number of legal

uncertainties regarding emulation, and leads to software being programmed to

refuse to work if it can tell the host is an emulator; some video games in

particular will continue to run, but not allow the player to progress beyond

some late stage in the game, often appearing to be faulty or just extremely

difficult. These protections make it more difficult to design emulators, since

they must be accurate enough to avoid triggering the protections, whose effects

may not be obvious.

EMULATORS IN NEW MEDIA ART

Because

of its primary use of digital formats, new media art relies heavily on

emulation as a preservation strategy. Artists such as Cory Arcangel specialize

in resurrecting obsolete technologies in their artwork and recognize the

importance of a decentralized and deinstitutionalized process for the

preservation of digital culture.

In many

cases, the goal of emulation in new media art is to preserve a digital medium

so that it can be saved indefinitely and reproduced without error, so that

there is no reliance on hardware that ages and becomes obsolete. The paradox is

that the emulation and the emulator have to be made to work on future

computers.

EMULATION IN FUTURE SYSTEMS DESIGN

Emulation techniques are commonly used during the design and development of new systems. They ease the development process by providing the ability to detect, recreate and repair flaws in the design even before the system is actually built. Emulation is particularly useful in the design of multi-core systems, where concurrency errors can be very difficult to detect and correct without the controlled environment provided by virtual hardware. It also allows software development to take place before the hardware is ready, thus helping to validate design decisions.

TYPES OF EMULATORS

Windows XP running an Acorn Archimedes emulator, which is in turn running a Sinclair ZX Spectrum emulator.

Most emulators just emulate a hardware architecture; if operating system firmware or software is required for the desired software, it must be provided as well (and may itself be emulated). Both the OS and the software will then be interpreted by the emulator, rather than being run by native hardware. Apart from this interpreter for the emulated binary machine's language, some other hardware (such as input or output devices) must be provided in virtual form as well; for example, if writing to a specific memory location should influence what is displayed on the screen, then this would need to be emulated.

While

emulation could, if taken to the extreme, go down to the atomic level, basing

its output on a simulation of the actual circuitry from a virtual power source,

this would be a highly unusual solution. Emulators typically stop at a

simulation of the documented hardware specifications and digital logic.

Sufficient emulation of some hardware platforms requires extreme accuracy, down to the level of individual clock cycles, undocumented features, unpredictable analog elements, and implementation bugs. This is particularly the case with classic home computers such as the Commodore 64, whose software often depends on highly sophisticated low-level programming tricks invented by game programmers and the demoscene.

In contrast, some other platforms have had very little use of direct hardware addressing. In these cases, a simple compatibility layer may suffice. This translates system calls for the emulated system into system calls for the host system, e.g., the Linux compatibility layer used on *BSD to run closed-source Linux native software on FreeBSD, NetBSD and OpenBSD. As another example, while the Nintendo 64 graphics processor was fully programmable, most games used one of a few pre-made programs, which were mostly self-contained and communicated with the game via FIFO; therefore, many emulators do not emulate the graphics processor at all, but simply interpret the commands received from the CPU as the original program would.

Developers of software for embedded systems or video game consoles often design their software on especially accurate emulators called simulators before trying it on the real hardware. This is so that software can be produced and tested before the final hardware exists in large quantities, so that it can be tested without taking the time to copy the program to be debugged at a low level and without introducing the side effects of a debugger. In many cases, the simulator is actually produced by the company providing the hardware, which theoretically increases its accuracy.

Math

coprocessor emulators allow programs compiled with math instructions to run on

machines that don't have the coprocessor installed, but the extra work done by

the CPU may slow the system down. If a math coprocessor isn't installed or present on the CPU, then whenever the CPU executes a coprocessor instruction it raises a specific interrupt (coprocessor not available), which calls the math

emulator routines. When the instruction is successfully emulated, the program

continues executing.

STRUCTURE OF AN EMULATOR

Typically,

an emulator is divided into modules that correspond roughly to the emulated

computer's subsystems. Most often, an emulator will be composed of the

following modules:

a CPU emulator or CPU simulator (the two terms are mostly interchangeable in this case)

a memory subsystem module

various I/O device emulators

Buses are

often not emulated, either for reasons of performance or simplicity, and

virtual peripherals communicate directly with the CPU or the memory subsystem.

MEMORY SUBSYSTEM

It is

possible for the memory subsystem emulation to be reduced to simply an array of

elements each sized like an emulated word; however, this model fails very

quickly as soon as any location in the computer's logical memory does not match

physical memory.

This

clearly is the case whenever the emulated hardware allows for advanced memory

management (in which case, the MMU logic can be embedded in the memory

emulator, made a module of its own, or sometimes integrated into the CPU

simulator).

Even if

the emulated computer does not feature an MMU, though, there are usually other

factors that break the equivalence between logical and physical memory: many

(if not most) architectures offer memory-mapped I/O; even those that do not

often have a block of logical memory mapped to ROM, which means that the memory-array

module must be discarded if the read-only nature of ROM is to be emulated.

Features such as bank switching or segmentation may also complicate memory

emulation.
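Bank switching, mentioned above, can be illustrated with a minimal sketch. The machine layout here is entirely hypothetical (a fixed RAM region, a 16 KiB window onto one of four ROM banks, and a bank-select register at 0xC000); the names `BankedMemory`, `banked_read` and `banked_write` are assumptions made for illustration, not taken from any particular emulator.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical layout: 0x0000-0x7FFF RAM, 0x8000-0xBFFF window onto one
   ROM bank, 0xC000 write-only bank-select register. */
enum { BANK_SIZE = 0x4000, NUM_BANKS = 4, WINDOW_BASE = 0x8000, BANK_SELECT = 0xC000 };

typedef struct {
    uint8_t  ram[WINDOW_BASE];
    uint8_t  rom[NUM_BANKS][BANK_SIZE];
    unsigned current_bank;            /* bank currently visible in the window */
} BankedMemory;

/* Read one byte from the logical address space. */
uint8_t banked_read(const BankedMemory *m, uint16_t addr) {
    assert(m != NULL);
    if (addr < WINDOW_BASE)
        return m->ram[addr];
    if (addr < WINDOW_BASE + BANK_SIZE)
        return m->rom[m->current_bank][addr - WINDOW_BASE];
    return 0xFF;                      /* unmapped space reads as 0xFF in this sketch */
}

/* Write one byte; ROM is read-only, so writes to the window are ignored. */
void banked_write(BankedMemory *m, uint16_t addr, uint8_t value) {
    assert(m != NULL);
    if (addr < WINDOW_BASE)
        m->ram[addr] = value;
    else if (addr == BANK_SELECT)
        m->current_bank = value % NUM_BANKS;
    /* all other writes (ROM window, unmapped space) have no effect */
}
```

The same logical address 0x8000 returns different bytes depending on the last value written to the bank-select register, which is why a flat array indexed by logical address cannot model such a machine.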

As a

result, most emulators implement at least two procedures for writing to and reading

from logical memory, and it is these procedures' duty to map every access to

the correct location of the correct object.

On a

base-limit addressing system where memory from address 0 to address ROMSIZE-1 is

read-only memory, while the rest is RAM, something along the lines of the

following procedures would be typical:

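    /* Write Value to a logical address. Only RAM is writable: the real
       address must be at or above ROMSIZE and below the limit. */
    void WriteMemory(word Address, word Value) {
        word RealAddress;
        RealAddress = Address + BaseRegister;
        if ((RealAddress < LimitRegister) &&
            (RealAddress >= ROMSIZE)) {
            Memory[RealAddress] = Value;
        } else {
            RaiseInterrupt(INT_SEGFAULT);
        }
    }

    /* Read from a logical address. ROM and RAM are both readable
       as long as the real address is below the limit. */
    word ReadMemory(word Address) {
        word RealAddress;
        RealAddress = Address + BaseRegister;
        if (RealAddress < LimitRegister) {
            return Memory[RealAddress];
        } else {
            RaiseInterrupt(INT_SEGFAULT);
            return 0;
        }
    }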

CPU SIMULATOR

The CPU

simulator is often the most complicated part of an emulator. Many emulators are

written using "pre-packaged" CPU simulators, in order to concentrate

on good and efficient emulation of a specific machine.

The

simplest form of a CPU simulator is an interpreter, which is a computer program

that follows the execution flow of the emulated program code and, for every

machine code instruction encountered, executes operations on the host processor

that are semantically equivalent to the original instructions.

This is

made possible by assigning a variable to each register and flag of the

simulated CPU. The logic of the simulated CPU can then more or less be directly

translated into software algorithms, creating a software re-implementation that

basically mirrors the original hardware implementation.

The

following example illustrates how CPU simulation can be accomplished by an

interpreter. In this case, interrupts are checked-for before every instruction

executed, though this behavior is rare in real emulators for performance

reasons (it is generally faster to use a subroutine to do the work of an

interrupt).

    void Execute(void) {
        if (Interrupt != INT_NONE) {
            SuperUser = TRUE;
            WriteMemory(++StackPointer, ProgramCounter);
            ProgramCounter = InterruptPointer;
        }
        switch (ReadMemory(ProgramCounter++)) {
            /*
             * Handling of every valid instruction
             * goes here...
             */
            default:
                Interrupt = INT_ILLEGAL;
        }
    }

Interpreters

are very popular as computer simulators, as they are much simpler to implement than

more time-efficient alternative solutions, and their speed is more than

adequate for emulating computers of more than roughly a decade ago on modern

machines.
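To make the interpreter approach concrete, the following is a minimal sketch of a complete fetch-decode-execute loop. The instruction set is invented for this example (a hypothetical 8-bit accumulator machine with five opcodes), and the names `Cpu`, `cpu_step` and `cpu_run` are assumptions; a real emulator would decode the guest CPU's actual opcodes in the same way.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Invented opcodes for a hypothetical 8-bit accumulator machine. */
enum { OP_HALT = 0x00, OP_LOAD_IMM = 0x01, OP_ADD_IMM = 0x02, OP_JNZ = 0x03, OP_DEC = 0x04 };

typedef struct {
    uint8_t acc;       /* accumulator register */
    uint8_t pc;        /* program counter (wraps at 256, so always a valid index) */
    int     zero;      /* zero flag, updated by LOAD/ADD/DEC */
    int     halted;    /* set by HALT or by an illegal opcode */
    int     illegal;   /* set when an unknown opcode is fetched */
    uint8_t mem[256];  /* the whole address space of the toy machine */
} Cpu;

/* Fetch one opcode, decode it, and perform the equivalent host operation. */
void cpu_step(Cpu *c) {
    assert(c != NULL);
    uint8_t op = c->mem[c->pc++];
    switch (op) {
    case OP_HALT:
        c->halted = 1;
        break;
    case OP_LOAD_IMM:
        c->acc = c->mem[c->pc++];
        c->zero = (c->acc == 0);
        break;
    case OP_ADD_IMM:
        c->acc = (uint8_t)(c->acc + c->mem[c->pc++]);
        c->zero = (c->acc == 0);
        break;
    case OP_DEC:
        c->acc = (uint8_t)(c->acc - 1);
        c->zero = (c->acc == 0);
        break;
    case OP_JNZ: {
        uint8_t target = c->mem[c->pc++];
        if (!c->zero)
            c->pc = target;
        break;
    }
    default:
        c->illegal = 1;
        c->halted = 1;
        break;
    }
}

/* Run until the CPU halts or max_steps is reached; returns steps executed. */
size_t cpu_run(Cpu *c, size_t max_steps) {
    size_t n = 0;
    assert(c != NULL);
    while (!c->halted && n < max_steps) {
        cpu_step(c);
        n++;
    }
    return n;
}
```

Each register and flag of the simulated CPU is an ordinary field of `Cpu`, as the text describes, and the `switch` plays the role of the instruction decoder.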

I/O

Most

emulators do not, as mentioned earlier, emulate the main system bus; each I/O

device is thus often treated as a special case, and no consistent interface for

virtual peripherals is provided.

This can

result in a performance advantage, since each I/O module can be tailored to the

characteristics of the emulated device; designs based on a standard, unified

I/O API can, however, rival such simpler models, if well thought-out, and they

have the additional advantage of "automatically" providing a plug-in

service through which third-party virtual devices can be used within the emulator.

A unified

I/O API may not necessarily mirror the structure of the real hardware bus: bus

design is limited by several electrical constraints and a need for hardware

concurrency management that can mostly be ignored in a software implementation.

Even in

emulators that treat each device as a special case, there is usually a common

basic infrastructure for:

managing interrupts, by means of a procedure that sets flags readable by the CPU simulator whenever an interrupt is raised, allowing the virtual CPU to "poll for (virtual) interrupts"

writing to and reading from physical memory, by means of two procedures similar to the ones dealing with logical memory (although, unlike the logical-memory procedures, these can often be left out, with direct references to the memory array employed instead)
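A unified I/O API and the interrupt-flag mechanism described above might be sketched as follows. Everything here is an assumption made for illustration: the `Device` structure, the `io_*` function names, the limit of eight devices, and the example latch device are hypothetical and do not correspond to any specific emulator.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical unified interface: every virtual peripheral claims an
   address range and exposes the same read/write callbacks. */
typedef struct Device {
    uint16_t base, size;
    uint8_t (*read)(struct Device *self, uint16_t offset);
    void    (*write)(struct Device *self, uint16_t offset, uint8_t value);
    void    *state;
} Device;

enum { MAX_DEVICES = 8 };
static Device  *devices[MAX_DEVICES];
static size_t   device_count;
static uint32_t pending_interrupts;   /* one flag bit per interrupt line */

/* Plug a device into the emulator; returns -1 if the table is full. */
int io_register(Device *d) {
    assert(d != NULL && d->read != NULL && d->write != NULL);
    if (device_count == MAX_DEVICES) return -1;
    devices[device_count++] = d;
    return 0;
}

static Device *io_find(uint16_t addr) {
    for (size_t i = 0; i < device_count; i++) {
        Device *d = devices[i];
        if (addr >= d->base && (uint32_t)addr < (uint32_t)d->base + d->size)
            return d;
    }
    return NULL;
}

uint8_t io_read(uint16_t addr) {
    Device *d = io_find(addr);
    return d ? d->read(d, (uint16_t)(addr - d->base)) : 0xFF;
}

void io_write(uint16_t addr, uint8_t value) {
    Device *d = io_find(addr);
    if (d) d->write(d, (uint16_t)(addr - d->base), value);
}

/* Called by devices; sets a flag the CPU simulator can poll. */
void io_raise_interrupt(unsigned line) {
    assert(line < 32);
    pending_interrupts |= (1u << line);
}

/* Called by the CPU simulator: returns and clears the lowest pending
   interrupt line, or -1 if none is pending. */
int io_poll_interrupt(void) {
    for (unsigned line = 0; line < 32; line++) {
        if (pending_interrupts & (1u << line)) {
            pending_interrupts &= ~(1u << line);
            return (int)line;
        }
    }
    return -1;
}

/* Example device: a one-byte latch that raises line 3 when written. */
uint8_t latch_read(Device *self, uint16_t offset) {
    (void)offset;
    return *(uint8_t *)self->state;
}

void latch_write(Device *self, uint16_t offset, uint8_t value) {
    (void)offset;
    *(uint8_t *)self->state = value;
    io_raise_interrupt(3);
}
```

Because third-party devices only need to fill in a `Device` structure and call `io_register`, this style gives the plug-in behavior the text mentions, at the cost of an address lookup and an indirect call on every access.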

VIDEO GAME CONSOLE EMULATORS

Video

game console emulators are programs that allow a personal computer or video

game console to emulate another video game console. They are most often used to

play older video games on personal computers and more contemporary video game

consoles, but they are also used to translate games into other languages, to

modify existing games, and in the development process of home brew demos and

new games for older systems. The internet has helped in the spread of console

emulators, as most - if not all - would be unavailable for sale in retail

outlets. Examples of console emulators that have been released in the last 2

decades are: Dolphin, Zsnes, Kega Fusion, Desmume, Epsxe, Project64, Visual Boy

Advance, NullDC and Nestopia.

TERMINAL EMULATORS

Terminal

emulators are software programs that provide modern computers and devices

interactive access to applications running on mainframe computer operating

systems or other host systems such as HP-UX or OpenVMS. Terminals such as the

IBM 3270 or VT100 and many others, are no longer produced as physical devices.

Instead, software running on modern operating systems simulates a

"dumb" terminal and is able to render the graphical and text elements

of the host application, send keystrokes and process commands using the

appropriate terminal protocol. Some terminal emulation applications include

Attachmate Reflection, IBM Personal Communications, Stromasys CHARON-VAX/AXP

and Micro Focus Rumba.

IN-CIRCUIT EMULATOR

An in-circuit emulator (ICE) is a hardware

device used to debug the software of an embedded system. Historically, it took the form of a bond-out processor, which has many internal signals brought out for

the purpose of debugging. These signals provide information about the state of

the processor.

More

recently the term also covers JTAG-based hardware debuggers, which provide

equivalent access using on-chip debugging hardware with standard production

chips. Using standard chips instead of custom bond-out versions makes the

technology ubiquitous and low cost, and eliminates most differences between the

development and runtime environments. In this common case, the in-circuit emulator term is a misnomer,

sometimes confusingly so, because emulation is no longer involved.

Embedded

systems present special problems for a programmer because they usually lack

keyboards, monitors, disk drives and other user interfaces that are present on

computers. These shortcomings make in-circuit software debugging tools

essential for many common development tasks.

In-circuit emulation can also

refer to the use of hardware emulation, when the emulator is plugged into a system (not always

embedded) in place of a yet-to-be-built chip (not always a processor). These

in-circuit emulators provide a way to run the system with "live" data

while still allowing relatively good debugging capabilities. It can be useful

to compare this with an in-target probe (ITP) sometimes used on enterprise

servers.

FUNCTION

An

in-circuit emulator provides a window into the embedded system. The programmer

uses the emulator to load programs into the embedded system, run them, step

through them slowly, and view and change data used by the system's software.

An

"emulator" gets its name because it emulates (imitates) the central

processing unit of the embedded system's computer. Traditionally it had a plug

that inserted into the socket where the CPU chip would normally be placed. Most

modern systems use the target system's CPU directly, with special JTAG-based

debug access. Emulating the processor, or direct JTAG access to it, lets the

ICE do anything that the processor can do, but under the control of a software

developer.

ICEs

attach a terminal or PC to the embedded system. The terminal or PC provides an

interactive user interface for the programmer to investigate and control the

embedded system. For example, it is routine to have a source code level

debugger with a graphical windowing interface that communicates through a JTAG

adapter ("emulator") to an embedded target system which has no

graphical user interface.

Notably,

when their program fails, most embedded systems simply become inert lumps of

nonfunctioning electronics. Embedded systems often lack basic functions to

detect signs of software failure, such as an MMU to catch memory access errors.

Without an ICE, the development of embedded systems can be extremely difficult,

because there is usually no way to tell what went wrong. With an ICE, the

programmer can usually test pieces of code, then isolate the fault to a

particular section of code, and then inspect the failing code and rewrite it to

solve the problem.

In usage,

an ICE provides the programmer with execution breakpoints, memory display and

monitoring, and input/output control. Beyond this, the ICE can be programmed to

look for any range of matching criteria to pause at, in an attempt to identify

the origin of the failure.
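The idea of pausing on programmable matching criteria can be sketched as a predicate evaluated on every bus access. This is only an illustrative model: the `Trigger` structure, its fields, and `trigger_matches` are hypothetical names, and real ICE hardware performs the comparison in logic rather than in software.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

typedef enum { ACCESS_READ = 1, ACCESS_WRITE = 2 } AccessType;

/* Hypothetical trigger: pause when an access falls inside an address
   range, has one of the selected access types, and the data bits
   selected by data_mask equal data_match. */
typedef struct {
    uint16_t addr_lo, addr_hi;   /* inclusive address range */
    unsigned access_mask;        /* OR of AccessType values to watch */
    uint8_t  data_mask;          /* which data bits to compare */
    uint8_t  data_match;         /* required value of those bits */
} Trigger;

/* Returns 1 if this bus access should pause execution, 0 otherwise. */
int trigger_matches(const Trigger *t, uint16_t addr, AccessType type, uint8_t data) {
    assert(t != NULL);
    return addr >= t->addr_lo && addr <= t->addr_hi
        && (t->access_mask & (unsigned)type) != 0
        && (data & t->data_mask) == t->data_match;
}
```

For example, a trigger of `{0x2000, 0x20FF, ACCESS_WRITE, 0x80, 0x80}` would pause only on writes into that 256-byte region whose top data bit is set, which narrows the search for the code that corrupts a particular variable.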

Most

modern microcontrollers utilize resources provided on the manufactured version

of the microcontroller for device programming, emulation and debugging

features, instead of needing another special emulation-version (that is,

bond-out) of the target microcontroller. Even though it is a cost-effective

method, since the ICE unit only manages the emulation instead of actually

emulating the target microcontroller, trade-offs have to be made in order to

keep the prices low at manufacture time, yet provide enough emulation features

for the (relatively few) emulation applications.

ADVANTAGES

Virtually

all embedded systems have a hardware element and a software element, which are

separate but tightly interdependent. The ICE allows the software element to be

run and tested on the actual hardware on which it is to run, but still allows

programmer conveniences to help isolate

faulty code, such as "source-level debugging" (which shows the

program the way the programmer wrote it) and single-stepping (which lets the

programmer run the program step-by-step to find errors).

Most ICEs

consist of an adaptor unit that sits between the ICE host computer and the

system to be tested. A header and cable assembly connects the adaptor to a

socket where the actual CPU or microcontroller mounts within the embedded

system. Recent ICEs enable a programmer to access the on-chip debug circuit

that is integrated into the CPU via JTAG or BDM (Background Debug Mode) in

order to debug the software of an embedded system. These systems often use a

standard version of the CPU chip, and can simply attach to a debug port on a

production system. They are sometimes called in-circuit debuggers or ICDs, to

distinguish the fact that they do not replicate the functionality of the CPU,

but instead control an already existing, standard CPU. Since the CPU does not

have to be replaced, they can operate on production units where the CPU is

soldered in and cannot be replaced. On x86 Pentiums, a special 'probe mode' is

used by ICEs to aid in debugging.[2]

In the

context of embedded systems, the ICE is not emulating hardware. Rather, it is

providing direct debug access to the actual CPU. The system under test is under

full control, allowing the developer to load, debug and test code directly.

Most host

systems are ordinary commercial computers unrelated to the CPU used for

development - for example, a Linux PC might be used to develop software for a

system using a Freescale 68HC11 chip, which itself could not run Linux.

The

programmer usually edits and compiles the embedded system's code on the host

system, as well. The host system will have special compilers that produce

executable code for the embedded system. These are called cross compilers/cross

assemblers.

SIMULATORS

Software

instruction simulators provide simulated program execution with read and write

access to the internal processor registers. Beyond that, the tools vary in capability.

Some are limited to simulation of instruction execution only. Most offer

breakpoints, which allow fast execution until a specified instruction is

executed. Many also offer trace capability, which shows instruction execution

history.
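Breakpoints and trace can both be implemented as a small hook called before each simulated instruction. The sketch below assumes a 16-bit program counter and a very short trace buffer; the names `DebugState`, `debug_before_execute` and `debug_trace_back` are hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

enum { TRACE_DEPTH = 4, MAX_BREAKPOINTS = 4 };

typedef struct {
    uint16_t trace[TRACE_DEPTH];          /* ring buffer of executed PCs */
    size_t   trace_count;                 /* total instructions recorded */
    uint16_t breakpoints[MAX_BREAKPOINTS];
    size_t   breakpoint_count;
} DebugState;

/* Register a breakpoint address; returns -1 if the table is full. */
int debug_add_breakpoint(DebugState *d, uint16_t pc) {
    assert(d != NULL);
    if (d->breakpoint_count == MAX_BREAKPOINTS) return -1;
    d->breakpoints[d->breakpoint_count++] = pc;
    return 0;
}

/* Call before executing the instruction at pc. Returns 1 if the simulator
   must stop (breakpoint hit); otherwise records pc in the trace. */
int debug_before_execute(DebugState *d, uint16_t pc) {
    assert(d != NULL);
    for (size_t i = 0; i < d->breakpoint_count; i++)
        if (d->breakpoints[i] == pc) return 1;
    d->trace[d->trace_count % TRACE_DEPTH] = pc;
    d->trace_count++;
    return 0;
}

/* Look back in the execution history: age 0 is the most recently executed
   instruction. Returns -1 if that entry is no longer (or not yet) available. */
int32_t debug_trace_back(const DebugState *d, size_t age) {
    assert(d != NULL);
    if (age >= d->trace_count || age >= TRACE_DEPTH) return -1;
    return d->trace[(d->trace_count - 1 - age) % TRACE_DEPTH];
}
```

The ring buffer keeps only the last `TRACE_DEPTH` addresses, so older history is overwritten; real tools make the same trade-off with much deeper buffers.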

While

instruction simulation is useful for algorithm development, embedded systems by

their very nature require access to peripherals such as I/O ports, timers,

A/Ds, and PWMs. Advanced simulators help verify timing and basic peripheral

operation, including I/O pins, interrupts, and status and control registers.

These

tools provide various stimulus inputs ranging from pushbuttons connected to I/O

pin inputs, logic vector I/O input stimulus files, regular clock inputs, and

internal register value injection for simulating A/D conversion data or serial

communication input. Many embedded systems can effectively be debugged using

proper peripheral stimulus.
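A stimulus file of the kind described above can be modeled as a list of timed events that force values into simulated registers. The sketch below is an assumption about how such a mechanism might look, not the format of any particular tool; `Stimulus`, `stimulus_apply` and the 16-register file are hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

enum { NUM_REGS = 16 };

/* One stimulus event: at simulated cycle `cycle`, force register `reg`
   (for example an input port or an A/D result register) to `value`. */
typedef struct {
    uint32_t cycle;
    uint8_t  reg;
    uint8_t  value;
} Stimulus;

/* Apply every event whose cycle has been reached. Events must be sorted
   by cycle; *next is the index of the first event not yet applied.
   Returns the number of events applied by this call. */
size_t stimulus_apply(const Stimulus *events, size_t count, size_t *next,
                      uint32_t now, uint8_t regs[NUM_REGS]) {
    size_t applied = 0;
    assert(events != NULL && next != NULL && regs != NULL);
    while (*next < count && events[*next].cycle <= now) {
        const Stimulus *e = &events[*next];
        if (e->reg < NUM_REGS)
            regs[e->reg] = e->value;   /* inject the value into the register */
        (*next)++;
        applied++;
    }
    return applied;
}
```

The simulator would call `stimulus_apply` once per simulated cycle (or per instruction) with its current cycle count, so that the program under test sees a button press or conversion result at a repeatable moment.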

Simulators

offer the lowest-cost development environment. However, many real-time systems

are difficult to debug with simulation only. Simulators also typically run at

speeds 100 to 1,000 times slower than the actual embedded processor, so long

timeout delays must be eliminated when simulating.

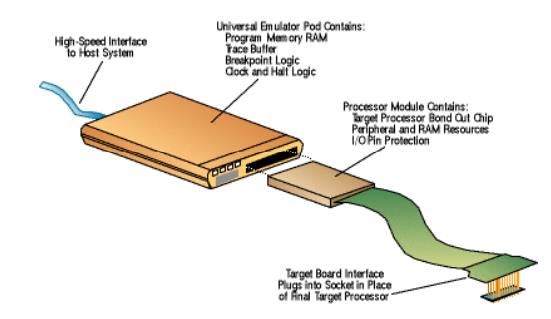

IN-CIRCUIT EMULATORS

In-circuit

emulators are plugged into a system in place of the embedded processor. They

offer real-time code execution, full peripheral implementation, and breakpoint

capability. High-end emulators also offer real-time trace buffers, and some

will time-stamp instruction execution for code profiling (see figure).

An in-circuit emulator is plugged into a target

system in place of the embedded processor.

Some

in-circuit emulators have special ASICs or FPGAs that imitate core processor

code execution and peripherals, but there may be behavioral differences between

the actual device and the emulator. The problem is sidestepped with

"bond-out" emulation devices that provide direct I/O and peripheral

access using the same circuit technology as the processor and that provide

access to the internal data registers, program memory, and peripherals.

This is

accomplished by using emulation RAM instead of the processor's internal program

memory. The microcontroller firmware is downloaded into the emulation RAM and

the bond-out processor executes instructions using the same data registers and

peripherals as the target processor. The I/Os of the bond-out silicon are made

available on a socket that is plugged into the system under development instead

of the target processor being emulated.

State-of-the-art

emulators provide multilevel conditional breakpoints and instruction trace,

including code coverage and timestamping. Some even allow code tracing without

halting the processor.

There is

a large gap in performance and price between software simulators and hardware

emulators. Between the two extremes, there is a lot of room for intermediate

solutions.

Burn-and-learn method

Simulation

is most often used with the burn-and-learn method of run-time firmware

development. A chip is burned with a device programmer; and after plugging it

into the hardware, the system crashes.

At this

point, an attempt is made to figure out what went wrong; the source code is

changed, the executable is rebuilt, and another chip is burned. This cycle is

repeated until the chip works properly.

Routines

can be added to dump vital debugging information to a serial port for display

on a terminal. I/O pins can be toggled to indicate program flow.
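The two debugging aids just mentioned might look like the sketch below. To keep it runnable on a host, the UART and output port are replaced by ordinary variables; on a real target they would be hardware registers, and all names here (`uart_log`, `debug_port`, `debug_dump16`, `debug_toggle_pin`) are hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Host-side stand-ins for hardware: on a real target these would be a
   UART transmit register and an output port latch. */
static char    uart_log[64];   /* bytes "transmitted" so far */
static size_t  uart_len;
static uint8_t debug_port;     /* bit 0 is used as the debug pin */

static void uart_putc(char ch) {
    if (uart_len < sizeof uart_log - 1)
        uart_log[uart_len++] = ch;
}

/* Send "TAG=HHHH\n" so a terminal shows the value of a 16-bit variable. */
void debug_dump16(const char *tag, uint16_t value) {
    static const char hex[] = "0123456789ABCDEF";
    assert(tag != NULL);
    while (*tag)
        uart_putc(*tag++);
    uart_putc('=');
    for (int shift = 12; shift >= 0; shift -= 4)
        uart_putc(hex[(value >> shift) & 0xF]);
    uart_putc('\n');
}

/* Flip the debug pin; an oscilloscope or logic analyzer then shows when
   (and how often) execution passes this point. */
void debug_toggle_pin(void) {
    debug_port ^= 0x01;
}
```

Calling `debug_dump16("SP", 0x1A2F)` queues the text `SP=1A2F` followed by a newline. Printing in hexadecimal avoids pulling a full `printf` implementation into a small firmware image.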

If more

symptoms are provided by the system as it runs, then more logical changes to

the source code can be made. Overall, this method of debugging is inefficient,

slow, and tedious.

In-circuit simulators

When

simulating code where branches are conditional on the state of an input pin or

other hardware condition, a simulator can get information on that state from

the hardware using simulator stimulus. This is the first step away from

software-only simulation, allowing a certain amount of hardware debug.

This

approach is taken by integrating the capability of a simulator with a

communication module that acts as the target processor. Stimulus is provided to

the simulation directly from the processor's digital input pins, which allows

the simulator to set binary values on the output pins.

However,

such tools run at the speed of a simulator, so they can't set and clear output

pins fast enough to implement timing-critical features like a software UART.

They often don't support complex peripheral features such as A/Ds and PWMs.

Run-time monitors

In-circuit

simulators provide a communication channel between the host development system

and the hardware, with something like a UART in the hardware to provide the

communication. The host can then issue commands to the processor to get it to

perform debug functions such as setting or reading memory contents.

Some code

execution control is also possible. This multiplexed execution of code under

development and communication of debug information with a cross-development

host is usually referred to as a run-time monitor.
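The target-side half of a run-time monitor is essentially a small command handler. The following sketch assumes an invented two-command protocol ('R' to read a byte, 'W' to write one) and a 256-byte RAM; the command codes, reply codes and the name `monitor_handle` are hypothetical, and the transport (for example a UART) is left out.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

enum { TARGET_RAM_SIZE = 256 };
static uint8_t target_ram[TARGET_RAM_SIZE];   /* stand-in for target memory */

/* Invented one-byte command codes sent by the host. */
enum { CMD_READ = 'R', CMD_WRITE = 'W' };
enum { REPLY_OK = 0, REPLY_BAD_ADDR = -1, REPLY_BAD_CMD = -2 };

/* Handle one decoded host command. For CMD_READ the byte read is stored
   in *out; for CMD_WRITE `value` is stored at `addr`. */
int monitor_handle(uint8_t cmd, uint16_t addr, uint8_t value, uint8_t *out) {
    assert(out != NULL);
    if (addr >= TARGET_RAM_SIZE)
        return REPLY_BAD_ADDR;
    switch (cmd) {
    case CMD_READ:
        *out = target_ram[addr];
        return REPLY_OK;
    case CMD_WRITE:
        target_ram[addr] = value;
        return REPLY_OK;
    default:
        return REPLY_BAD_CMD;
    }
}
```

On a real target this handler would run whenever the application yields to the monitor, which is the multiplexing of application code and debug communication that the text describes.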

Software

breakpoints can be built in at compile time. Other features, such as hooking

into a periodic timer interrupt to copy data of interest from the target to the

host, can also be included when building the executable. Since these techniques

don't require writing to program memory at run time, they can be used with

burn-and-learn debug using one-time-programmable devices.

The

routines required to transfer debug data to the host or to download a new

executable--the monitor code itself--occupy a certain amount of program memory

on the target device. They also use data memory and bandwidth in addition to

the UART or other communication device. However, most of this overhead occurs

when code execution is not in progress.

Use of a

software run-time monitor is a great step forward from simulation in isolation

from the target hardware. Adding just a few simple support features in the

silicon of the target processor can turn the monitor into a system that

provides all the basic features of an emulator.

In-circuit debuggers

The

system becomes more than a software monitor when custom silicon features are

added to the target processor. Development tools with special silicon features

to support code debug and having serial communication between host and target

are typically referred to as "debuggers."

In the

past, some systems have used external RAM program memory to support this

feature. Now reprogrammable flash memory has made in-circuit debuggers (ICDs)

practical for single-chip embedded microcontrollers. ICDs allow the embedded

processor to "self-emulate."

A good

example of an in-circuit debugger is the MPLAB-ICD, a powerful, low-cost,

run-time development tool. MPLAB-ICD uses the in-circuit debugging capability

built into the PIC16F87X or PIC18Fxxx microcontrollers.

This

feature, along with the In-Circuit Serial Programming (ICSP) protocol, offers

cost-effective in-circuit flash program load and debugging from the graphical

user interface of the MPLAB Integrated Development Environment. The designer

can develop and debug source code by watching variables, single-stepping, and

setting breakpoints. Running the device at full speed enables testing hardware

in real time.

The

in-circuit debugger consists of three basic components: the ICD module, ICD

header, and ICD demo board. The MPLAB software environment is connected to the

ICD module via a serial or full-speed USB port.

When

instructed by MPLAB, the ICD module programs and issues debug commands to the

target microcontroller using the ICSP protocol, which is communicated via a

five-conductor cable using a modular RJ-12 plug and jack. A modular jack can be

designed into a target circuit board to support direct connection to the ICD

module, or the ICD header can be used to plug into a DIP socket.

The ICD

header contains a target microcontroller and a modular jack to connect to the

ICD module. Male 40- and 28-pin DIP headers are provided to plug into a target

circuit board. The ICD header may be plugged into either the included ICD demo

board or the user's custom hardware.

The demo

board provides 40- and 28-pin DIP sockets that will accept either a

microcontroller device or the ICD header. It also offers LEDs, DIP switches, an

analog potentiometer, and a prototyping area.

Immediate

prototype development and evaluation are feasible even if hardware isn't yet

available. The complete hardware development system, along with the host

software, provides a powerful run-time development tool at a very reasonable

price.

Run-time operation

The debug

kernel is downloaded along with the target firmware via the ICSP interface. A

nonmaskable interrupt vectors execution to the kernel under three conditions:

when the program counter equals a preselected hardware breakpoint address,

after a single step, or when a halt command is received from MPLAB.

As with

all interrupts, this pushes the return address onto the stack. On reset, the

breakpoint register is set equal to the reset vector, so the kernel is entered

immediately when the device comes out of any reset.
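The three kernel-entry conditions and the reset behavior can be modeled in a few lines. This is a simplified software model for illustration only, not a description of the actual silicon: `DebugCore`, `core_reset` and `core_step` are hypothetical names, and "executing an instruction" is reduced to incrementing the program counter.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

enum { RESET_VECTOR = 0x0000 };

typedef enum { ENTRY_NONE, ENTRY_BREAKPOINT, ENTRY_SINGLE_STEP, ENTRY_HALT } EntryReason;

typedef struct {
    uint16_t pc;            /* program counter */
    uint16_t breakpoint;    /* breakpoint address register */
    int      single_step;   /* host asked to stop after one instruction */
    int      halt_request;  /* asynchronous halt command from the host */
    uint16_t saved_pc;      /* return address recorded on kernel entry */
} DebugCore;

/* Evaluate the three entry conditions at an instruction boundary. */
static EntryReason check_entry(DebugCore *c) {
    EntryReason r = ENTRY_NONE;
    if (c->halt_request)
        r = ENTRY_HALT;
    else if (c->single_step)
        r = ENTRY_SINGLE_STEP;
    else if (c->pc == c->breakpoint)   /* the comparator */
        r = ENTRY_BREAKPOINT;
    if (r != ENTRY_NONE) {
        c->saved_pc = c->pc;           /* models the pushed return address */
        c->halt_request = 0;
        c->single_step = 0;
    }
    return r;
}

/* On reset the breakpoint register equals the reset vector, so the
   kernel is entered immediately. */
EntryReason core_reset(DebugCore *c) {
    assert(c != NULL);
    c->pc = RESET_VECTOR;
    c->breakpoint = RESET_VECTOR;
    c->single_step = 0;
    c->halt_request = 0;
    return check_entry(c);
}

/* Stand-in for executing one instruction: advance pc, then check. */
EntryReason core_step(DebugCore *c) {
    assert(c != NULL);
    c->pc++;
    return check_entry(c);
}
```

In this model the host regains control right after `core_reset`, sets a new breakpoint address or the single-step flag, and then lets `core_step` run until the next entry condition fires.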

The ICD

module issues a reset to the target microcontroller immediately after a

download. Thus, after a download the kernel is entered, and control is passed

to the host.

The user

can now issue commands to the target processor and modify or interrogate all

RAM registers, including the PC and other special function registers. The user

can single-step, set a breakpoint, animate, or start full-speed execution.

Once

started, a halt of program execution causes the PC address prior to kernel

entry to be stored, which allows the software to display where execution halted

in the source code. When the host is commanded to run again, the kernel code

executes a return from interrupt instruction, and execution continues at the

address that pops off the hardware stack.

Silicon support requirements

The

breakpoint address register and comparator make up most of what is needed in

silicon, along with some logic to single-step and recognize asynchronous

commands from the host. Since the ICSP programming interface is already in

place to support programming of the device, this doesn't constitute an

additional silicon requirement. When this channel is used for in-circuit debug,

the two I/O pins used by ICSP (clock and data) may not be used for other run-time functions.
