Chapter: Distributed and Cloud Computing: From Parallel Processing to the Internet of Things : Virtual Machines and Virtualization of Clusters and Data Centers

Implementation Levels of Virtualization

Virtualization is a computer architecture technology by which multiple virtual machines (VMs) are multiplexed on the same hardware machine. The idea of VMs dates back to the 1960s [53]. The purpose of a VM is to enhance resource sharing by many users and improve computer performance in terms of resource utilization and application flexibility. Hardware resources (CPU, memory, I/O devices, etc.) or software resources (operating system and software libraries) can be virtualized in various functional layers. This virtualization technology has been revitalized as the demand for distributed and cloud computing has increased sharply in recent years.

The idea is to separate the hardware from the software to yield better system efficiency. For example, computer users gained access to a much enlarged memory space when the concept of virtual memory was introduced. Similarly, virtualization techniques can be applied to enhance the use of compute engines, networks, and storage. In this chapter we will discuss VMs and their applications for building distributed systems. According to a 2009 Gartner Report, virtualization was the top strategic technology poised to change the computer industry. With sufficient storage, any computer platform can be installed in another host computer, even if they use processors with different instruction sets and run with distinct operating systems on the same hardware.

1. Levels of Virtualization Implementation

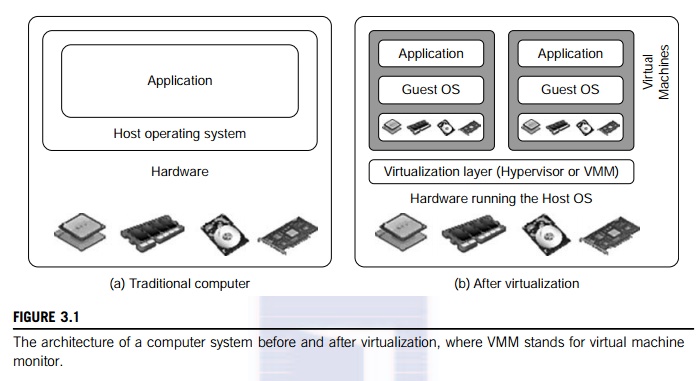

A traditional computer runs with a host operating system specially tailored for its hardware architecture, as shown in Figure 3.1(a). After virtualization, different user applications managed by their own operating systems (guest OS) can run on the same hardware, independent of the host OS. This is often done by adding additional software, called a virtualization layer, as shown in Figure 3.1(b). This virtualization layer is known as a hypervisor or virtual machine monitor (VMM) [54]. The VMs are shown in the upper boxes, where applications run with their own guest OS over the virtualized CPU, memory, and I/O resources.

The main function of the software layer for

virtualization is to virtualize the physical hardware of a host machine into

virtual resources to be used by the VMs, exclusively. This can be implemented

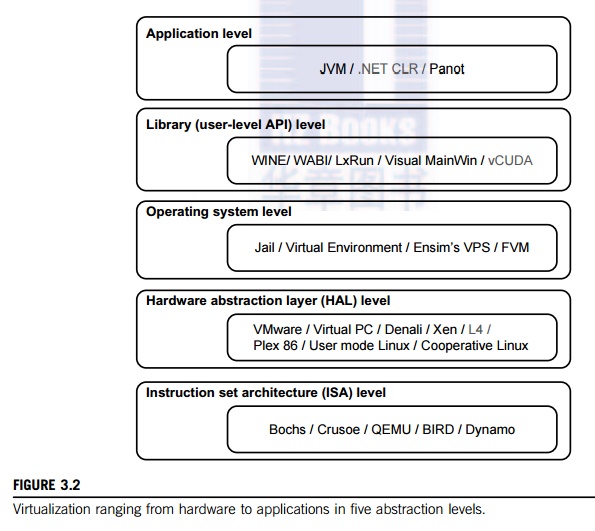

at various operational levels, as we will discuss shortly. The virtualization

software creates the abstraction of VMs by interposing a virtualization layer

at various levels of a computer system. Common virtualization layers include

the instruction set architecture

(ISA) level,

hardware level, operating system level, library support level, and application

level (see Figure 3.2).

1.1 Instruction Set Architecture Level

At the ISA level, virtualization is performed by emulating a given ISA by the ISA of the host machine. For example, MIPS binary code can run on an x86-based host machine with the help of ISA emulation. With this approach, it is possible to run a large amount of legacy binary code written for various processors on any given new hardware host machine. Instruction set emulation leads to virtual ISAs created on any hardware machine.

The basic emulation method is through code interpretation. An interpreter program interprets the source instructions to target instructions one by one. One source instruction may require tens or hundreds of native target instructions to perform its function. Obviously, this process is relatively slow. For better performance, dynamic binary translation is desired. This approach translates basic blocks of dynamic source instructions to target instructions. The basic blocks can also be extended to program traces or super blocks to increase translation efficiency. Instruction set emulation requires binary translation and optimization. A virtual instruction set architecture (V-ISA) thus requires adding a processor-specific software translation layer to the compiler.
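The difference between interpretation and dynamic binary translation can be illustrated with a minimal Python sketch. The three-opcode source ISA (`li`, `add`, `bnez`) is invented for this illustration and is not MIPS or x86; the "target instructions" are simply generated Python statements. The interpreter decodes every instruction each time it executes, whereas the translator converts each block once, caches it by its entry address, and reuses the cached host code on every later visit.

```python
# Toy source ISA (hypothetical): instructions are tuples (opcode, operands...).

def interpret(program, regs):
    """Interpretation: decode and execute one source instruction at a time."""
    pc = 0
    while pc < len(program):
        op, *args = program[pc]
        if op == "li":                      # rd <- immediate
            regs[args[0]] = args[1]
        elif op == "add":                   # rd <- rs + rt
            regs[args[0]] = regs[args[1]] + regs[args[2]]
        elif op == "bnez":                  # branch to target if rs != 0
            if regs[args[0]] != 0:
                pc = args[1]
                continue
        else:
            raise ValueError(f"unknown opcode {op}")
        pc += 1
    return regs


def translate_block(program, start):
    """Translate the block entered at `start` into one host (Python) function.

    The generated function updates `regs` and returns the next source pc.
    """
    lines = ["def block(regs):"]
    pc = start
    while pc < len(program):
        op, *args = program[pc]
        if op == "li":
            lines.append(f"    regs[{args[0]!r}] = {args[1]!r}")
        elif op == "add":
            lines.append(
                f"    regs[{args[0]!r}] = regs[{args[1]!r}] + regs[{args[2]!r}]")
        elif op == "bnez":
            # A branch ends the block.
            lines.append(
                f"    return {args[1]} if regs[{args[0]!r}] != 0 else {pc + 1}")
            break
        else:
            raise ValueError(f"unknown opcode {op}")
        pc += 1
    else:
        lines.append(f"    return {len(program)}")   # ran off the end
    namespace = {}
    exec("\n".join(lines), namespace)
    return namespace["block"]


def run_translated(program, regs):
    """Dynamic translation: translate each block on first use, then reuse it."""
    cache = {}                              # source pc -> translated function
    pc = 0
    while pc < len(program):
        if pc not in cache:
            cache[pc] = translate_block(program, pc)
        pc = cache[pc](regs)
    return regs, len(cache)


# Sum 5 + 4 + 3 + 2 + 1 with a countdown loop.
program = [
    ("li", "n", 5),
    ("li", "sum", 0),
    ("li", "minus1", -1),
    ("add", "sum", "sum", "n"),             # loop body starts at pc 3
    ("add", "n", "n", "minus1"),
    ("bnez", "n", 3),
]
```

In this sketch the loop body is translated only once even though it executes several times, which is where the saving over interpretation comes from. A production translator would additionally optimize the generated code and chain blocks together into traces or super blocks.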

1.2 Hardware Abstraction Level

Hardware-level virtualization is performed right on top of the bare hardware. On the one hand, this approach generates a virtual hardware environment for a VM. On the other hand, the process manages the underlying hardware through virtualization. The idea is to virtualize a computer’s resources, such as its processors, memory, and I/O devices. The intention is to raise the hardware utilization rate by serving multiple users concurrently. The idea goes back to IBM's CP-67 in the 1960s and was carried forward in the IBM VM/370, released in 1972. More recently, the Xen hypervisor has been applied to virtualize x86-based machines to run Linux or other guest OS applications. We will discuss hardware virtualization approaches in more detail in Section 3.3.

1.3 Operating System Level

This refers to an abstraction layer between the traditional OS and user applications. OS-level virtualization creates isolated containers on a single physical server, together with the OS instances that utilize the hardware and software in data centers. The containers behave like real servers. OS-level virtualization is commonly used in creating virtual hosting environments to allocate hardware resources among a large number of mutually distrusting users. It is also used, to a lesser extent, in consolidating server hardware by moving services on separate hosts into containers or VMs on one server. OS-level virtualization is described in Section 3.1.3.

1.4 Library Support Level

Most applications use APIs exported by user-level libraries rather than making lengthy system calls into the OS. Since most systems provide well-documented APIs, such an interface becomes another candidate for virtualization. Virtualization with library interfaces is possible by controlling the communication link between applications and the rest of a system through API hooks. The software tool WINE has implemented this approach to support Windows applications on top of UNIX hosts. Another example is vCUDA, which allows applications executing within VMs to leverage GPU hardware acceleration. This approach is detailed in Section 3.1.4.

1.5 User-Application Level

Virtualization at the application level virtualizes an application as a VM. On a traditional OS, an application often runs as a process. Therefore, application-level virtualization is also known as process-level virtualization. The most popular approach is to deploy high-level language (HLL) VMs. In this scenario, the virtualization layer sits as an application program on top of the operating system, and the layer exports an abstraction of a VM that can run programs written and compiled to a particular abstract machine definition. Any program written in the HLL and compiled for this VM will be able to run on it. The Microsoft .NET CLR and Java Virtual Machine (JVM) are two good examples of this class of VM.

Other forms of application-level virtualization are known as application isolation, application sandboxing, or application streaming. The process involves wrapping the application in a layer that is isolated from the host OS and other applications. The result is an application that is much easier to distribute and remove from user workstations. An example is the LANDesk application virtualization platform, which deploys software applications as self-contained, executable files in an isolated environment without requiring installation, system modifications, or elevated security privileges.

1.6 Relative Merits of Different Approaches

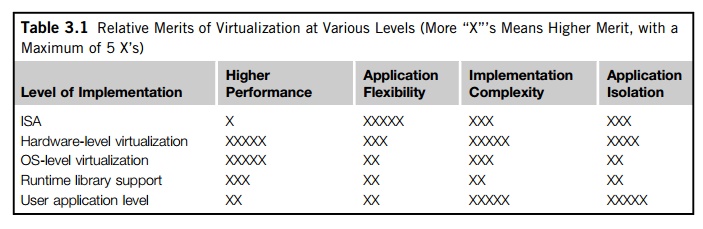

Table 3.1 compares the relative merits of implementing virtualization at various levels. The column headings correspond to four technical merits. “Higher Performance” and “Application Flexibility” are self-explanatory. “Implementation Complexity” implies the cost to implement that particular virtualization level. “Application Isolation” refers to the effort required to isolate resources committed to different VMs. Each row corresponds to a particular level of virtualization.

The number of X’s in the table cells reflects the advantage points of each implementation level. Five X’s imply the best case and one X implies the worst case. Overall, hardware and OS support will yield the highest performance. However, the hardware and application levels are also the most expensive to implement. User isolation is the most difficult to achieve. ISA implementation offers the best application flexibility.

2. VMM Design Requirements and Providers

As mentioned earlier, hardware-level virtualization inserts a layer between real hardware and traditional operating systems. This layer is commonly called the Virtual Machine Monitor (VMM) and it manages the hardware resources of a computing system. Each time a program accesses the hardware, the VMM captures the access. In this sense, the VMM acts as a traditional OS. One hardware component, such as the CPU, can be virtualized as several virtual copies. Therefore, several traditional operating systems, which may be the same or different, can sit on the same set of hardware simultaneously.

There are three requirements for a VMM. First, a VMM should provide an environment for programs which is essentially identical to the original machine. Second, programs run in this environment should show, at worst, only minor decreases in speed. Third, a VMM should be in complete control of the system resources. Any program run under a VMM should behave identically to the way it behaves when run directly on the original machine. Two possible exceptions in terms of differences are permitted with this requirement: differences caused by the availability of system resources and differences caused by timing dependencies. The former arises when more than one VM is running on the same machine. The hardware resource requirements, such as memory, of each VM are reduced, but the sum of them is greater than that of the real machine installed. The latter qualification is required because of the intervening level of software and the effect of any other VMs concurrently existing on the same hardware. Obviously, these two differences pertain to performance, while the function a VMM provides stays the same as that of a real machine. However, the identical environment requirement excludes the behavior of the usual time-sharing operating system from being classed as a VMM.

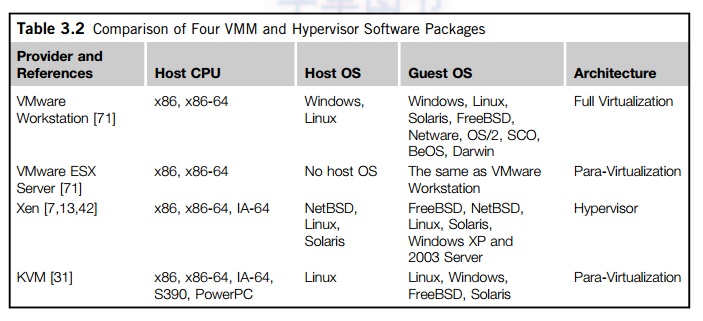

A VMM should demonstrate efficiency in using the VMs. Compared with a physical machine, no one prefers a VMM if its efficiency is too low. Traditional emulators and complete software interpreters (simulators) emulate each instruction by means of functions or macros. Such a method provides the most flexible solutions for VMMs. However, emulators or simulators are too slow to be used as real machines. To guarantee the efficiency of a VMM, a statistically dominant subset of the virtual processor’s instructions needs to be executed directly by the real processor, with no software intervention by the VMM. Table 3.2 compares four hypervisors and VMMs that are in use today.

Complete control of these resources by a VMM includes the following aspects: (1) The VMM is responsible for allocating hardware resources for programs; (2) it is not possible for a program to access any resource not explicitly allocated to it; and (3) it is possible under certain circumstances for a VMM to regain control of resources already allocated. Not all processors satisfy these requirements for a VMM. A VMM is tightly related to the architectures of processors. It is difficult to implement a VMM for some types of processors, such as the x86. Specific limitations include the inability to trap on some privileged instructions. If a processor was not originally designed to support virtualization, it is necessary to modify the hardware to satisfy the three requirements for a VMM. This is known as hardware-assisted virtualization.
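The efficiency and resource-control requirements can be pictured as a trap-and-emulate loop. The sketch below is a simplified Python model, not the design of any real hypervisor: the opcodes (`inc`, `nop`, `out`, `set_timer`), the `Trap` exception, and the `VMM` class are all hypothetical names. It assumes that every privileged instruction traps, which is exactly the property the text says some processors, such as the x86, do not provide.

```python
PRIVILEGED = {"out", "set_timer"}          # hypothetical privileged opcodes


class Trap(Exception):
    """Raised when guest code issues a privileged instruction."""

    def __init__(self, op, arg):
        super().__init__(f"privileged instruction: {op}")
        self.op = op
        self.arg = arg


def cpu_execute(instr, regs):
    """Stand-in for the real processor running guest code directly."""
    op, arg = instr
    if op in PRIVILEGED:
        raise Trap(op, arg)                # control passes to the VMM
    if op == "inc":
        regs[arg] = regs.get(arg, 0) + 1
    elif op != "nop":
        raise ValueError(f"unknown opcode {op}")


class VMM:
    def __init__(self, allowed_ports):
        self.allowed_ports = set(allowed_ports)   # resources given to this VM
        self.log = []                             # emulated device activity
        self.direct = 0                           # instructions run directly
        self.trapped = 0                          # instructions emulated

    def run(self, guest_code):
        regs = {}
        for instr in guest_code:
            try:
                cpu_execute(instr, regs)          # common case: no VMM work
                self.direct += 1
            except Trap as trap:
                self.trapped += 1
                self.emulate(trap.op, trap.arg, regs)
        return regs

    def emulate(self, op, arg, regs):
        """Emulate a privileged instruction against the VM's virtual state."""
        if op == "out":
            # A guest may only touch resources explicitly allocated to it.
            if arg not in self.allowed_ports:
                raise PermissionError(f"port {arg} not allocated to this VM")
            self.log.append(("out", arg, regs.get("r0", 0)))
        elif op == "set_timer":
            self.log.append(("set_timer", arg))


guest = [("inc", "r0"), ("inc", "r0"), ("out", 1), ("nop", None),
         ("set_timer", 10)]
```

Running `VMM([1]).run(guest)` executes three of the five instructions without VMM involvement and emulates the other two, while an `out` to a port that was never allocated is refused. The model leaves out the third aspect of control, reclaiming resources that were already allocated.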

3. Virtualization Support at the OS Level

With the help of VM technology, a new computing mode known as cloud computing is emerging. Cloud computing is transforming the computing landscape by shifting the hardware and staffing costs of managing a computational center to third parties, just like banks. However, cloud computing has at least two challenges. The first is the ability to use a variable number of physical machines and VM instances depending on the needs of a problem. For example, a task may need only a single CPU during some phases of execution but may need hundreds of CPUs at other times. The second challenge concerns the slow operation of instantiating new VMs. Currently, new VMs originate either as fresh boots or as replicates of a template VM, unaware of the current application state. Therefore, to better support cloud computing, a large amount of research and development should be done.

3.1 Why OS-Level Virtualization?

As mentioned earlier, it is slow to initialize a hardware-level VM because each VM creates its own image from scratch. In a cloud computing environment, perhaps thousands of VMs need to be initialized simultaneously. Besides slow operation, storing the VM images also becomes an issue. As a matter of fact, there is considerable repeated content among VM images. Moreover, full virtualization at the hardware level also has the disadvantages of slow performance and low density, and the need for para-virtualization to modify the guest OS. Reducing the performance overhead of hardware-level virtualization may even require hardware modification. OS-level virtualization provides a feasible solution for these hardware-level virtualization issues.

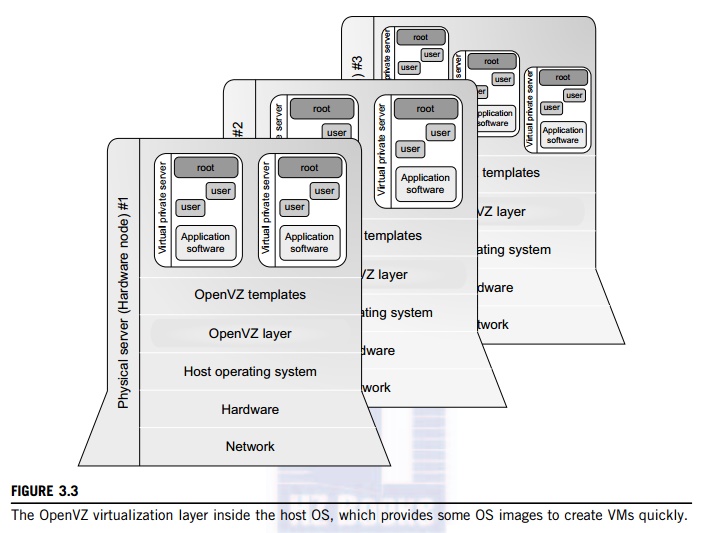

Operating system virtualization inserts a virtualization layer inside an operating system to partition a machine’s physical resources. It enables multiple isolated VMs within a single operating system kernel. This kind of VM is often called a virtual execution environment (VE), Virtual Private System (VPS), or simply container. From the user’s point of view, VEs look like real servers. This means a VE has its own set of processes, file system, user accounts, network interfaces with IP addresses, routing tables, firewall rules, and other personal settings. Although VEs can be customized for different people, they share the same operating system kernel. Therefore, OS-level virtualization is also called single-OS image virtualization. Figure 3.3 illustrates operating system virtualization from the point of view of a machine stack.

3.2 Advantages of OS Extensions

Compared to hardware-level virtualization, the benefits of OS extensions are twofold: (1) VMs at the operating system level have minimal startup/shutdown costs, low resource requirements, and high scalability; and (2) for an OS-level VM, it is possible for a VM and its host environment to synchronize state changes when necessary. These benefits can be achieved via two mechanisms of OS-level virtualization: (1) All OS-level VMs on the same physical machine share a single operating system kernel; and (2) the virtualization layer can be designed in a way that allows processes in VMs to access as many resources of the host machine as possible, but never to modify them. In cloud computing, the first and second benefits can be used to overcome the defects of slow initialization of VMs at the hardware level, and being unaware of the current application state, respectively.

3.3 Disadvantages of OS Extensions

The main disadvantage of OS extensions is that all the VMs at the operating system level on a single container must have the same kind of guest operating system. That is, although different OS-level VMs may have different operating system distributions, they must pertain to the same operating system family. For example, a Windows distribution such as Windows XP cannot run on a Linux-based container. However, users of cloud computing have various preferences. Some prefer Windows and others prefer Linux or other operating systems. Therefore, there is a challenge for OS-level virtualization in such cases.

Figure 3.3 illustrates the concept of OS-level virtualization. The virtualization layer is inserted inside the OS to partition the hardware resources for multiple VMs to run their applications in multiple virtual environments. To implement OS-level virtualization, isolated execution environments (VMs) should be created based on a single OS kernel. Furthermore, the access requests from a VM need to be redirected to the VM’s local resource partition on the physical machine. For example, the chroot command in a UNIX system can create several virtual root directories within a host OS. These virtual root directories are the root directories of all VMs created.

There are two ways to implement virtual root directories: duplicating common resources to each VM partition; or sharing most resources with the host environment and only creating private resource copies on the VM on demand. The first way incurs significant resource costs and overhead on a physical machine. This issue neutralizes the benefits of OS-level virtualization, compared with hardware-assisted virtualization. Therefore, OS-level virtualization is often a second choice.
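The second strategy, sharing host resources and creating private copies only on demand, can be sketched in a few lines of Python. The model below uses plain dictionaries in place of a real file system, and the `VirtualRoot` class is a hypothetical name introduced for illustration; an actual implementation would combine chroot with a kernel-level redirection mechanism rather than in-memory maps.

```python
class VirtualRoot:
    """One VM's view of the file system: shared host files plus private copies."""

    def __init__(self, host_files):
        self.host = host_files      # shared with the host, read-only via this view
        self.private = {}           # per-VM copies, created on first write

    def read(self, path):
        # A private copy, if one exists, shadows the shared host file.
        if path in self.private:
            return self.private[path]
        return self.host[path]

    def write(self, path, data):
        # Copy on demand: the write lands in the VM's own partition,
        # so the host file and every other VM's view stay untouched.
        self.private[path] = data


host_files = {"/etc/hostname": "host", "/bin/sh": "shell-binary"}
vm_a = VirtualRoot(host_files)
vm_b = VirtualRoot(host_files)
vm_a.write("/etc/hostname", "vm-a")
```

After the write, `vm_a` sees its own hostname while `vm_b` and the host still see the original, and only one file has been duplicated. Duplicating everything up front, the first strategy, would instead copy both files into every VM before any of them ran.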

3.4 Virtualization on Linux or Windows Platforms

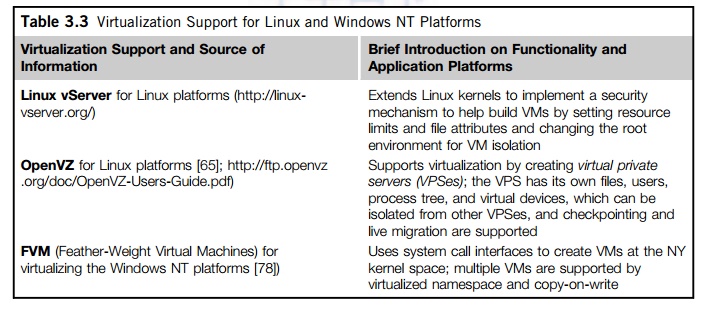

By far, most reported OS-level virtualization systems are Linux-based. Virtualization support on the Windows-based platform is still in the research stage. The Linux kernel offers an abstraction layer to allow software processes to work with and operate on resources without knowing the hardware details. New hardware may need a new Linux kernel to support it. Therefore, different Linux platforms use patched kernels to provide special support for extended functionality.

However, most Linux platforms are not tied to a special kernel. In such a case, a host can run several VMs simultaneously on the same hardware. Table 3.3 summarizes several examples of OS-level virtualization tools that have been developed in recent years. Two OS tools (Linux vServer and OpenVZ) support Linux platforms to run other platform-based applications through virtualization. These two OS-level tools are illustrated in Example 3.1. The third tool, FVM, is an attempt specifically developed for virtualization on the Windows NT platform.

Example 3.1 Virtualization Support for the Linux Platform

OpenVZ is an OS-level tool designed to support Linux platforms to create virtual environments for running VMs under different guest OSes. OpenVZ is an open source container-based virtualization solution built on Linux. To support virtualization and isolation of various subsystems, limited resource management, and checkpointing, OpenVZ modifies the Linux kernel. The overall picture of the OpenVZ system is illustrated in Figure 3.3. Several VPSes can run simultaneously on a physical machine. These VPSes look like normal Linux servers. Each VPS has its own files, users and groups, process tree, virtual network, virtual devices, and IPC through semaphores and messages.

Table 3.3 Virtualization Support for Linux and Windows NT Platforms

The resource management subsystem of OpenVZ consists of three components: two-level disk allocation, a two-level CPU scheduler, and a resource controller. The amount of disk space a VM can use is set by the OpenVZ server administrator. This is the first level of disk allocation. Each VM acts as a standard Linux system. Hence, the VM administrator is responsible for allocating disk space for each user and group. This is the second-level disk quota. The first-level CPU scheduler of OpenVZ decides which VM to give the time slice to, taking into account the virtual CPU priority and limit settings.

The second-level CPU scheduler is the same as that of Linux. OpenVZ has a set of about 20 parameters which are carefully chosen to cover all aspects of VM operation. Therefore, the resources that a VM can use are well controlled. OpenVZ also supports checkpointing and live migration. The complete state of a VM can quickly be saved to a disk file. This file can then be transferred to another physical machine and the VM can be restored there. It only takes a few seconds to complete the whole process. However, there is still a delay in processing because the established network connections are also migrated.
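The two-level disk allocation described in this example can be modeled as two nested checks. The Python sketch below is an illustration of the idea only and does not reproduce OpenVZ's implementation or its parameter names; the `Container` class, its methods, and the choice to deny users without a quota are all assumptions made for the example.

```python
class Container:
    def __init__(self, name, disk_limit):
        self.name = name
        self.disk_limit = disk_limit   # first level: set by the host administrator
        self.user_limits = {}          # second level: set by the VM administrator
        self.usage = {}                # space currently used, per user

    def set_user_quota(self, user, limit):
        self.user_limits[user] = limit

    def total_usage(self):
        return sum(self.usage.values())

    def allocate(self, user, amount):
        """Grant `amount` units of disk space only if both levels allow it."""
        used = self.usage.get(user, 0)
        # Second-level check: the user's quota inside the container.
        # Assumption: a user with no quota entry gets no space.
        if used + amount > self.user_limits.get(user, 0):
            return False
        # First-level check: the container's overall limit on the host.
        if self.total_usage() + amount > self.disk_limit:
            return False
        self.usage[user] = used + amount
        return True


ve = Container("ve101", disk_limit=100)
ve.set_user_quota("alice", 80)
ve.set_user_quota("bob", 80)
```

With these settings the two user quotas add up to more than the container's own limit, so a request can pass the user-level check and still be refused at the container level. That is the situation the two levels are meant to handle.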

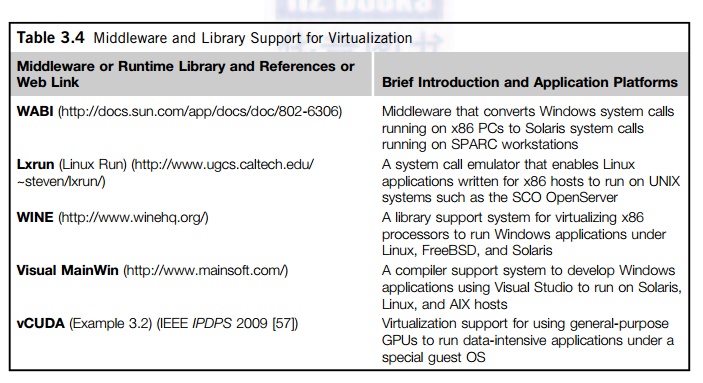

4. Middleware Support for Virtualization

Library-level virtualization is also known as user-level Application Binary Interface (ABI) or API emulation. This type of virtualization can create execution environments for running alien programs on a platform rather than creating a VM to run the entire operating system. API call interception and remapping are the key functions performed. This section provides an overview of several library-level virtualization systems: namely the Windows Application Binary Interface (WABI), lxrun, WINE, Visual MainWin, and vCUDA, which are summarized in Table 3.4.

The WABI offers middleware to convert Windows system calls to Solaris system calls. Lxrun is really a system call emulator that enables Linux applications written for x86 hosts to run on UNIX systems. Similarly, WINE offers library support for virtualizing x86 processors to run Windows applications on UNIX hosts. Visual MainWin offers a compiler support system to develop Windows applications using Visual Studio to run on some UNIX hosts. The vCUDA is explained in Example 3.2 with a graphical illustration in Figure 3.4.

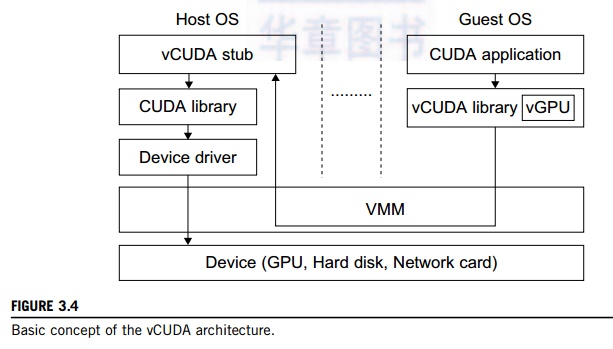

Example 3.2 The vCUDA for Virtualization of General-Purpose GPUs

CUDA is a programming model and library for general-purpose GPUs. It leverages the high performance of GPUs to run compute-intensive applications on host operating systems. However, it is difficult to run CUDA applications on hardware-level VMs directly. vCUDA virtualizes the CUDA library and can be installed on guest OSes. When CUDA applications run on a guest OS and issue a call to the CUDA API, vCUDA intercepts the call and redirects it to the CUDA API running on the host OS. Figure 3.4 shows the basic concept of the vCUDA architecture [57].

The vCUDA employs a client-server model to implement CUDA virtualization. It consists of three user space components: the vCUDA library, a virtual GPU (vGPU) in the guest OS (which acts as a client), and the vCUDA stub in the host OS (which acts as a server). The vCUDA library resides in the guest OS as a substitute for the standard CUDA library. It is responsible for intercepting and redirecting API calls from the client to the stub. Besides these tasks, vCUDA also creates vGPUs and manages them.

The functionality of a vGPU is threefold: (1) it abstracts the GPU structure and gives applications a uniform view of the underlying hardware; (2) when a CUDA application in the guest OS allocates device memory, the vGPU can return a local virtual address to the application and notify the remote stub to allocate the real device memory; and (3) the vGPU is responsible for storing the CUDA API flow. The vCUDA stub receives and interprets remote requests and creates a corresponding execution context for the API calls from the guest OS, then returns the results to the guest OS. The vCUDA stub also manages actual physical resource allocation.
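The division of labor among the library, the vGPU, and the stub can be sketched as a small client-server model in Python. This is an illustration of the interception pattern only: the class names and the calls `malloc`, `memcpy_to_device`, and `free` are hypothetical stand-ins, not the real CUDA or vCUDA API, and the stub is called in-process where a real system would cross the VM boundary.

```python
import itertools


class Stub:
    """Host-side server: owns the real device resources (simulated here)."""

    def __init__(self):
        self._handles = itertools.count(0x1000)
        self.device_memory = {}                 # real handle -> buffer

    def handle(self, call, *args):
        if call == "malloc":
            (size,) = args
            real = next(self._handles)
            self.device_memory[real] = bytearray(size)
            return real
        if call == "memcpy_to_device":
            real, data = args
            buf = self.device_memory[real]
            if len(data) > len(buf):
                raise ValueError("copy larger than allocation")
            buf[:len(data)] = data
            return len(data)
        if call == "free":
            (real,) = args
            del self.device_memory[real]
            return None
        raise ValueError(f"unsupported call {call}")


class VGPU:
    """Guest-side virtual GPU: local addresses, real handles, and API flow."""

    def __init__(self):
        self._addresses = itertools.count(1)
        self.mapping = {}                       # local virtual address -> real handle
        self.api_log = []                       # record of the API flow

    def new_address(self, real):
        virt = next(self._addresses)
        self.mapping[virt] = real
        return virt


class VCudaLibrary:
    """Guest-side substitute library: intercepts calls and forwards them."""

    def __init__(self, stub):
        self.stub = stub                        # stands in for the guest-host channel
        self.vgpu = VGPU()

    def _remote(self, call, *args):
        self.vgpu.api_log.append(call)
        return self.stub.handle(call, *args)

    def malloc(self, size):
        real = self._remote("malloc", size)
        return self.vgpu.new_address(real)      # application sees a local address

    def memcpy_to_device(self, virt, data):
        return self._remote("memcpy_to_device", self.vgpu.mapping[virt], data)

    def free(self, virt):
        self._remote("free", self.vgpu.mapping.pop(virt))
```

An application in the guest would call `lib.malloc(4)` and receive a local address such as `1`, while the stub on the host records the real allocation under its own handle. The application never sees the real handle, which matches the vGPU's role of giving a uniform local view of the device.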
