Chapter: Psychology: Thinking

Decision Making: Framing Effects

Framing Effects

Two factors are obviously crucial for any decision, and these factors are central to utility theory, a conception of decision making endorsed by many economists. According to this theory, you should, first, always consider the possible outcomes of a decision and choose the most desirable one. Would you rather have $10 or $100? Would you rather work for 2 weeks to earn a paycheck or work for 1 week to earn the same paycheck? In each case, it seems obvious that you should choose the option with the greatest benefit ($100) or the lowest cost (working for just 1 week).

Second, you should consider the risks. Would you rather buy a lottery ticket with 1 chance in 100 of winning, or a lottery ticket—offered at the same price and with the same prize—with just 1 chance in 1,000 of winning? If one of your friends liked a movie and another didn’t, would you want to go see it? If five of your friends had seen the movie and all liked it, would you want to see it then? In these cases, you should (and probably would) choose the options that give the greatest likelihood of achieving the things you value (increasing your odds of winning the lottery or seeing a movie you’ll enjoy).
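The way these two factors combine can be sketched as a simple expected-value calculation. This is only an illustration: the prize amount and the function name are hypothetical (the text says only that both tickets carry the same prize), and utility theory itself deals in subjective utility rather than raw dollar amounts.

```python
from fractions import Fraction


def expected_value(prize, probability):
    """Expected payoff of a gamble that pays `prize` with the given probability."""
    return prize * probability


# Hypothetical prize: the text states only that both tickets offer the same one.
PRIZE = 1000

ticket_1_in_100 = expected_value(PRIZE, Fraction(1, 100))
ticket_1_in_1000 = expected_value(PRIZE, Fraction(1, 1000))

print(ticket_1_in_100)   # 10
print(ticket_1_in_1000)  # 1
```

With the same price and the same prize, the ticket with the higher probability of winning has ten times the expected payoff, which is why it is the obvious choice.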

It’s no surprise, therefore, that our decisions are influenced by both of these factors: the attractiveness of the outcome and the likelihood of achieving that outcome. But our decisions are also influenced by something else that seems trivial and irrelevant, namely, how a question is phrased or how our options are described. In many cases, these changes in the framing of a decision can reverse our decisions, turning a strong preference in one direction into an equally strong preference in the opposite direction. Take, for example, the following problem:

Imagine that the United States is preparing for the outbreak of an unusual disease, which is expected to kill 600 people. Two alternative programs to combat the disease have been proposed. Assume that the exact scientific estimate of the consequences of the two programs is as follows:

· If Program A is adopted, 400 people will die.

· If Program B is adopted, there’s a one-third probability that nobody will die and a two-thirds probability that 600 people will die.

Which of the two programs would you favor?

With these alternatives, a clear majority of participants (78%) opted for Program B—presumably in the hope that, in this way, they could avoid any deaths. But now consider what happens when participants are given exactly the same problem but with the options framed differently. In this case, participants were again told that if no action is taken, the disease will kill 600 people. They were then asked to choose between the following options:

· If Program A is adopted, 200 of these people will be saved.

· If Program B is adopted, there’s a one-third probability that 600 people will be saved and a two-thirds probability that no people will be saved.

Given this formulation, a clear majority of participants (72%) opted for Program A. To them, the certainty of saving 200 people was clearly preferable to a one-third probability of saving everybody (Tversky & Kahneman, 1981). But of course the options here are identical to the options in the first version of this problem: 400 dead out of 600 is equivalent to 200 saved out of 600. The only difference between the problems lies in how the alternatives are phrased, but this shift in framing has an enormous impact (Kahneman & Tversky, 1984). Indeed, with one framing, the vote is almost 4 to 1 in favor of B; with the other framing, the vote is almost 3 to 1 in the opposite direction!
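The equivalence of the two versions can be checked with a little arithmetic. The sketch below restates both framings as (probability, deaths) pairs, using the figures given in the problem; the variable and function names are illustrative choices, not part of the original study.

```python
from fractions import Fraction

TOTAL_AT_RISK = 600  # people expected to die if no action is taken


def expected_deaths(outcomes):
    """Expected number of deaths for a list of (probability, deaths) pairs."""
    return sum(p * deaths for p, deaths in outcomes)


# Version framed in terms of deaths.
loss_frame = {
    "A": [(Fraction(1), 400)],
    "B": [(Fraction(1, 3), 0), (Fraction(2, 3), 600)],
}

# Version framed in terms of lives saved, converted to deaths for comparison.
gain_frame_saved = {
    "A": [(Fraction(1), 200)],
    "B": [(Fraction(1, 3), 600), (Fraction(2, 3), 0)],
}
gain_frame = {
    name: [(p, TOTAL_AT_RISK - saved) for p, saved in outcomes]
    for name, outcomes in gain_frame_saved.items()
}

for name in ("A", "B"):
    print(name, expected_deaths(loss_frame[name]), expected_deaths(gain_frame[name]))
# A 400 400
# B 400 400
```

Once both versions are expressed in the same units, each program has exactly the same outcomes and probabilities under either description. The two programs also share the same expected number of deaths (400); they differ only in whether that outcome is certain or a gamble.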

It’s important to realize that neither of these framings is “better” than the other since, after all, both frames present the same information. Nor is the selection of one of these options the “correct choice”; one can defend either the choice of Program A or the choice of B. What’s troubling, though, is the inconsistency in people’s choices: their preferences flip-flop based on the framing of the problem. It’s also troubling that people’s choices are so easily manipulated by a factor that seems irrelevant to the options being considered.

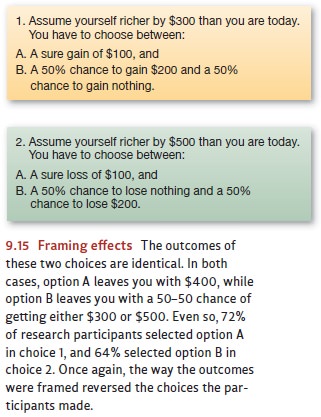

Framing effects are quite widespread and can easily be observed in settings both inside and outside of the laboratory. For example, physicians are more willing to endorse a program of treatment that has a 50% success rate than they are to endorse a program with a 50% failure rate, because they’re put off by a frame that emphasizes the negative outcome. Likewise, research participants make one choice in the problem shown in the top half of Figure 9.15 and the opposite choice in the problem shown in the bottom half of the figure, even though the outcomes are the same in the two versions of this problem; the only thing that’s changed is the framing (Levin & Gaeth, 1988; Mellers, Chang, Birnbaum, & Ordóñez, 1992; also Schwarz, 1999).

In all cases, though, framing effects follow a simple pattern: In general, people try to make choices that will minimize or avoid losses; that is, they show a tendency called loss aversion. Thus, returning to our earlier example, if the “disease problem” is framed in terms of losses (people dying), participants are put off by this, and so they reject Program A, the option that makes the loss seem certain. They instead choose the gamble inherent in Program B, presumably in the hope that the gamble will pay off and the loss will be avoided. Loss aversion also leads people to cling tightly to what they already have, to make sure they won’t lose it. If, therefore, the disease problem is framed in terms of gains (lives saved), people want to take no chances with this gain and so reject the gamble and choose the sure bet (Program A).

Loss aversion thus explains both halves of the data in the disease problem: a tendency to take risks when considering a potential loss, but an equally strong tendency to avoid risk when considering a potential gain. And, again, let’s be clear that both of these tendencies are sensible; surely it’s a good idea to hold tight to sure gains and avoid sure losses. The problem, though, is that what counts as a loss or gain depends on one’s reference point. (Compared to the best possible outcome, everything looks like a loss; compared to the worst outcome, everything looks like a gain.) This is the key to framing effects. By recasting our options, the shift in framing changes our reference point, and the consequence is that people are left open to manipulation by whoever chooses the frame.
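One way to see how a shifted reference point can reverse a choice is a toy model in which outcomes are valued as changes from the reference point rather than as absolute totals. Everything in this sketch is an assumption made for illustration: the shape of the value function, the two parameter values, and all the names are hypothetical and are not taken from this chapter.

```python
# Illustrative assumptions (not from the text):
CURVATURE = 0.88    # each additional life counts a little less than the last
LOSS_WEIGHT = 2.25  # losses weigh more heavily than equal-sized gains


def subjective_value(change):
    """Value of a change relative to the reference point (lives gained or lost)."""
    if change >= 0:
        return change ** CURVATURE
    return -LOSS_WEIGHT * (-change) ** CURVATURE


def prospect_value(outcomes):
    """Probability-weighted subjective value of (probability, change) pairs."""
    return sum(p * subjective_value(change) for p, change in outcomes)


def preferred(reference_deaths):
    """Return the program with the higher value, given a reference point in deaths."""
    # Program A: 400 deaths for sure. Program B: 1/3 chance of 0 deaths, 2/3 of 600.
    programs = {
        "A": [(1.0, 400)],
        "B": [(1 / 3, 0), (2 / 3, 600)],
    }
    values = {
        name: prospect_value([(p, reference_deaths - deaths) for p, deaths in outcomes])
        for name, outcomes in programs.items()
    }
    return max(values, key=values.get)


print(preferred(reference_deaths=0))    # reference "nobody dies": outcomes are losses
print(preferred(reference_deaths=600))  # reference "everyone dies": outcomes are gains
```

Under these assumed parameters the model picks the gamble (B) when every outcome is a loss and the sure thing (A) when every outcome is a gain, even though the programs themselves never change. In this sketch the reversal is produced by the curvature around the reference point; the loss weight only scales how bad the losses feel.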
