Chapter: Psychology: Thinking

Mental Representations


Common sense tells us that we’re able to think about objects or events not currently in view. Thus, you can decide whether you want the chocolate ice cream or the strawberry before either is delivered. Likewise, you can draw conclusions about George by remembering how he acted at the party, even though the party was two weeks ago and a hundred miles away. In these (and many other) cases, our thoughts must involve mental representations—contents in the mind that stand for some object or event or state of affairs, allowing us to think about those objects or events even in their absence.

Mental representations can also stand for objects or events that exist only in our minds—including fantasy objects, like unicorns or Hogwarts School, or impossible objects, like the precise value of pi. And even when we’re thinking about objects in plain view, mental representations still have a role to play. If you’re thinking about this page, for example, you might represent it for yourself in many ways: “a page from the Thinking chapter,” or “a piece of paper,” or “something colored white,” and so on. These different ideas all refer to the same physical object—but it’s the ideas (the mental representations), not the physical object, that matter for thought. (Imagine, for example, that you were hunting for something to start a fire with; for that, it might be helpful to think of this page as a piece of paper rather than a carrier of information.)

Mental representations of all sorts provide the content for our thoughts. Said differently, what we call “thinking” is just the set of operations we apply to our mental representations—analyzing them, contemplating them, and comparing them in order to draw conclusions, solve problems, and more. However, the nature of the operations applied to our mental representations varies—in part because mental representations come in different forms, and each form requires its own type of operations.

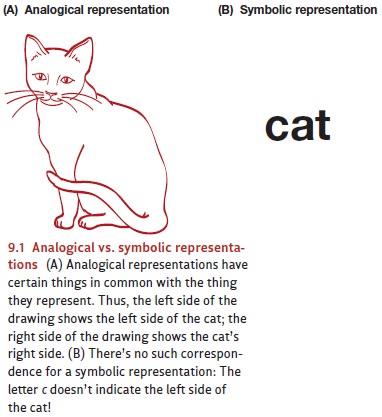

Distinguishing Images and Symbols

Some of our mental representations are analogical—they capture some of the actual characteristics of (and so are analogous to) what they represent. Analogical representations usually take the form of mental images. In contrast, other representations are symbolic and don’t in any way resemble the item they stand for.

To illustrate the difference between images and symbols, consider a drawing of a cat (Figure 9.1). The picture consists of marks on paper, but the actual cat is flesh and blood. Clearly, therefore, the picture is not equivalent to a cat; it’s merely a representation of one. Even so, the picture has many similarities to the creature it represents, so that, in general, the picture looks in some ways like a cat: The cat’s eyes are side by side in reality, and they’re side by side in the picture; the cat’s ears and tail are at opposite ends of the creature, and they’re at opposite ends in the picture. It’s properties like these that make the picture a type of analogical representation.

In contrast, consider the word cat. Unlike a picture, the word in no way resembles the cat. The letter c doesn’t represent the left-hand edge of the cat, nor does the overall shape of the word in any way indicate the shape of this feline. The word, therefore, is an entirely abstract representation, and the relation between the three letters c-a-t and the animal they represent is essentially arbitrary.

For some thoughts, mental images seem crucial. (Try thinking about a particular shade of blue, or try to recall whether a horse’s ears are rounded at the top or slightly pointed. The odds are good that these thoughts will call mental images to mind.) For other thoughts, you probably need a symbolic representation. (Think about the causes of global warming; this thought may call images to mind—perhaps smoke pouring out of a car’s exhaust pipes—but it’s likely that your thought specifies relationships and issues not captured in the images at all.) For still other thoughts, it’s largely up to you how to represent the thought, and the form of representation is often consequential. If you form a mental image of a cat, for example, you may be reminded of other animals that look like the cat—and so you may find yourself thinking about lions or tigers. If you think about cats without a mental image, this may call a different set of ideas to mind—perhaps thoughts about other types of pet. In this way, the type of representation can shape the flow of your thoughts—and thus can influence your judgments, your decisions, and more.

Mental Images

People often refer to their mental images as “mental pictures” and comment that they inspect these “pictures” with the “mind’s eye.” In fact, references to a mind’s eye have been part of our language at least since the days of Shakespeare, who used the phrase in Act 1 of Hamlet. But, of course, there is no (literal) mind’s eye—no tiny eye somewhere inside the brain. Likewise, mental pictures cannot be actual pictures: With no eye deep inside the brain, who or what would inspect such pictures?

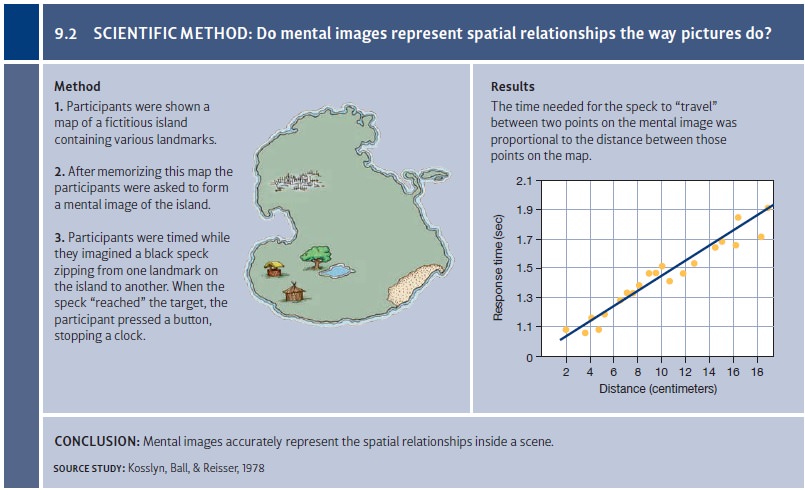

Why, then, do people describe their images as mental pictures? This usage presumably reflects the fact that images resemble pictures in some ways, but that simply invites the next question: What is this resemblance? A key part of the answer involves spatial layout. In a classic study, research participants were first shown the map of a fictitious island containing various objects: a hut, a well, a tree, and so on (Kosslyn, Ball, & Reiser, 1978; Figure 9.2). After memorizing this map, the participants were asked to form a mental image of the island. The experimenters then named two objects on the map (e.g., the hut and the tree), and participants had to imagine a black speck zipping from the first location to the second; when the speck “reached” the target, the participant pressed a button, stopping a clock. Then the experimenters did the same for another pair of objects—say the tree and the well—and so on for all the various pairs of objects on the island.

The results showed that the time needed for the speck to “travel” across the image was directly proportional to the distance between the two points on the original map. Thus, participants needed little time to scan from the pond to the tree; scanning from the pond to the hut (roughly four times the distance) took roughly four times as long; scanning from the hut to the patch of grass took even longer. Apparently, then, the image accurately depicted the map’s geometric arrangement: Points close together on the map were somehow close to each other in the image; points farther apart on the map were more distant in the image. In this way, the image is unmistakably picture-like, even if it’s not literally a picture.
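The proportionality described above can be written compactly. This is only a sketch: the symbols are assumptions introduced here, with t standing for scanning time, d for the distance between two points on the original map, and k for an unspecified constant (time per unit of map distance) whose value the text does not report.

```latex
t(d) \approx k\,d
\qquad\Longrightarrow\qquad
\frac{t(4d)}{t(d)} \approx \frac{4kd}{kd} = 4
```

Read this way, a path four times as long (pond to hut versus pond to tree) should take roughly four times as long to scan, which is the pattern the participants showed.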

Related evidence indicates enormous overlap between the brain areas crucial for creating and examining mental images and the brain areas crucial for visual perception. Specifically, neuroimaging studies show that many of the same brain structures (primarily in the occipital lobe) are active during both visual perception and visual imagery (Figure 9.3). In fact, the parallels between these two activities are quite precise: When people imagine movement patterns, high levels of activation are observed in brain areas that are sensitive to motion in ordinary perception. Likewise, for very detailed images, the brain areas that are especially activated tend to be those crucial for perceiving fine detail in a stimulus (Behrmann, 2000; Thompson & Kosslyn, 2000).

Further evidence comes from studies using transcranial magnetic stimulation. Using this technique, researchers have produced temporary disruptions in the visual cortex of healthy volunteers—and, as expected, this causes problems in seeing. What’s important here is that this procedure also causes parallel problems in visual imagery—consistent with the idea that this brain region is crucial both for the processing of visual inputs and for the creation and inspection of images (Kosslyn, Pascual-Leone, Felician, Camposano, Keenan et al., 1999).

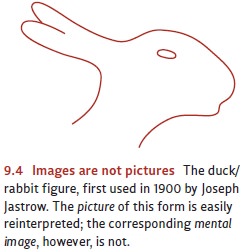

All of these results powerfully confirm that visual images are indeed picture-like; and they lend credence to the often-heard report that people can “think in pictures.” Be aware, though, that visual images are picture-like, but not pictures. In one study, participants were shown the drawing in Figure 9.4 and asked to memorize it (Chambers & Reisberg, 1985; also Reisberg & Heuer, 2005). The figure was then removed, and participants were asked to form a mental image of this now absent figure and to describe their image. Some participants reported that they could vividly see a duck facing to the left; others reported seeing a rabbit facing to the right. The participants were then told there was another way to perceive the figure and asked if they could reinterpret the image, just as they had reinterpreted a series of practice figures a few moments earlier. Given this task, not one of the participants was able to reinterpret the form. Even with hints and considerable coaxing, none were able to find a duck in a “rabbit image” or a rabbit in a “duck image.” The participants were then given a piece of paper and asked to draw the figure they had just been imagining; every participant was now able to come up with the perceptual alternative.

These findings make it clear that a visual image is different from a picture. The picture of the duck/rabbit is easily reinterpreted; the corresponding image is not. This is because the image is already organized and interpreted to some extent (e.g., facing “to the left” or “to the right”), and this interpretation shapes what the imaged form seems to resemble and what the imaged form will call to mind.

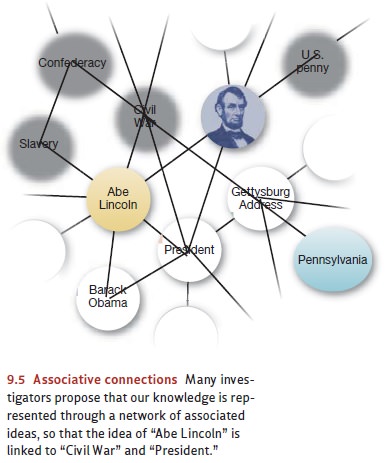

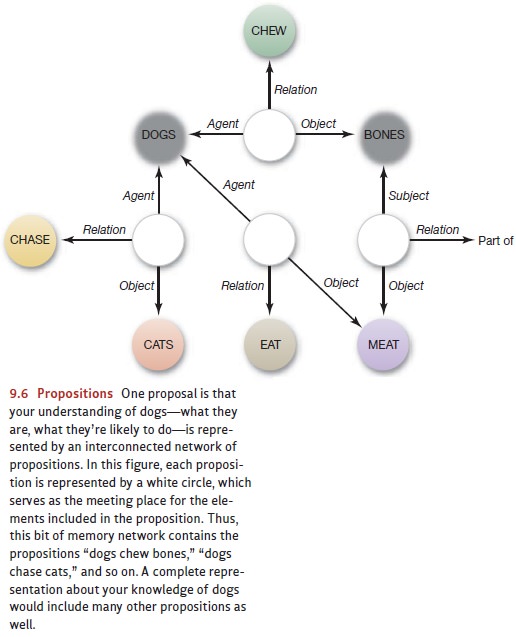

Propositions

As we’ve seen, mental images—and analogical representations in general—are essential for representing some types of information. Other information, in contrast, requires a symbolic representation. This type of mental representation is more flexible because symbols can represent any content we choose, thanks to the fact that it’s entirely up to us what each symbol stands for. Thus, we can use the word mole to stand for an animal that digs in the ground, or we could use the word (as Spanish speakers do) to refer to a type of sauce used in cooking. Likewise, we can use the word cat to refer to your pet, Snowflake; but, if we wished, we could instead use the Romanian word pisică as the symbol representing your pet, or we could use the arbitrary designation X2$. (Of course, for communicating with others, it’s important that we use the same terms they do. This is not an issue, however, when we’re representing thoughts in our own minds.)

Crucially, symbols can also be combined with each other to represent more complex contents—such as “San Diego is in California,” or “cigarette smoking is bad for your health.” There is debate about the exact nature of these combinations, but many scholars propose that symbols can be assembled into propositions—statements that relate a subject (the item about which the statement is being made) and a predicate (what’s being asserted about the subject). For example, “Solomon loves to blow glass,” “Jacob lived in Poland,” and “Squirrels eat burritos” are all propositions (although the first two are true, and the last is false). But just the word Susan or the phrase “is squeamish” aren’t propositions—the first is a subject without a predicate; the second is a predicate without a subject. (For more on how propositions are structured and the role they play in our thoughts, see J. Anderson, 1993, 1996.)

It’s easy to express propositions as sentences, but this is just a convenience; many other formats are possible. In the mind, propositions are probably expressed via network structures, related to the network models. Individual symbols serve as nodes within the network—meeting places for various links—so if we were to draw a picture of the network, the nodes would look like knots in a fisherman’s net, and this is the origin of the term node (derived from the Latin nodus, meaning “knot”). The individual nodes are connected to each other by associative links (Figure 9.5). Thus, in this system there might be a node representing Abe Lincoln and another node representing President, and the link between them represents part of our knowledge about Lincoln—namely, that he was a president. Other links have labels on them, as shown in Figure 9.6; these labels allow us to specify other relationships among nodes, and in this way we can use the network to express any proposition at all (after J. Anderson, 1993, 1996).
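To make the idea of nodes and labeled links concrete, here is a minimal sketch in Python. It is an illustration only, not a model taken from Anderson's work: the class name `PropositionNetwork`, its methods, and the particular link labels ("was a", "is in") are all assumptions invented for this example.

```python
from collections import defaultdict


class PropositionNetwork:
    """Toy network: nodes are symbols, links carry a relation label.

    Hypothetical structure for illustration; not a published model.
    """

    def __init__(self):
        # Maps each node to a list of (label, other_node) links.
        self.links = defaultdict(list)

    def add(self, subject, label, obj):
        """Store one proposition as a labeled link between two nodes."""
        self.links[subject].append((label, obj))
        # Also record the link at the other end, so that the same
        # knowledge can be reached starting from either node.
        self.links[obj].append((f"inverse of {label}", subject))

    def about(self, node):
        """Return every labeled link that touches the given node."""
        return sorted(self.links[node])


net = PropositionNetwork()
net.add("Abe Lincoln", "was a", "President")
net.add("San Diego", "is in", "California")

print(net.about("Abe Lincoln"))
print(net.about("California"))
```

The point of the sketch is that each node is just a meeting place for links: the symbol "President" carries no content by itself, and what we know about it is whatever can be reached by following its links.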

The various nodes representing a proposition are activated whenever a person is thinking about that proposition. This activation then spreads to neighboring nodes, through the associative links, much as electric current spreads through a network of wires. However, this spread of activation will be weaker (and will occur more slowly) between nodes that are only weakly associated. The spreading activation will also dissipate as it spreads outward, so that little or no activation will reach the nodes more distant from the activation’s source.
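The three properties just described (activation spreads along links, weak links pass less of it, and it fades with distance from the source) can be sketched in a few lines of Python. Everything specific here is an assumption made for illustration: the function name `spread_activation`, the `decay` and `floor` parameters, and the link strengths are invented, not values from any study.

```python
def spread_activation(links, source, decay=0.5, floor=0.05):
    """Spread activation outward from `source` over weighted links.

    links: dict mapping node -> {neighbor: strength}, strength in (0, 1].
    decay: fraction of activation that survives each step (assumed value).
    floor: activation below this level is treated as having dissipated.
    """
    activation = {source: 1.0}
    frontier = [source]
    while frontier:
        next_frontier = []
        for node in frontier:
            for neighbor, strength in links.get(node, {}).items():
                # Weak links and long paths both shrink what gets through.
                passed = activation[node] * strength * decay
                if passed < floor:
                    continue
                # Keep only the strongest activation reaching each node.
                if passed > activation.get(neighbor, 0.0):
                    activation[neighbor] = passed
                    next_frontier.append(neighbor)
        frontier = next_frontier
    return activation


# Hypothetical association strengths, chosen only for the example.
links = {
    "NURSE": {"DOCTOR": 0.9, "HOSPITAL": 0.8},
    "DOCTOR": {"NURSE": 0.9, "HOSPITAL": 0.8, "LAWYER": 0.3},
    "HOSPITAL": {"AMBULANCE": 0.6},
    "GARDEN": {"FLOWER": 0.9},
}

act = spread_activation(links, "NURSE")
for node, level in sorted(act.items(), key=lambda item: -item[1]):
    print(f"{node:10s} {level:.3f}")
```

In this toy network, a strongly associated neighbor such as DOCTOR ends up with far more activation than a distant, weakly linked node such as LAWYER, and an unconnected node such as GARDEN receives none.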

In fact, we can follow the spread of activation directly. In a classic study, participants were presented with two strings of letters, like NARDE–DOCTOR, or GARDEN–DOCTOR, or NURSE–DOCTOR (Meyer & Schvaneveldt, 1971). The participants’ job was to press a “yes” button if both sequences were real words (as in the second and third examples here), and a “no” button if either was not a word (the first example). Our interest here is only in the two pairs that required a yes response. (In these tasks, the no items serve only as catch trials, ensuring that participants really are doing the task as they were instructed.)

Let’s consider a trial in which participants see a related pair, like NURSE–DOCTOR. In choosing a response, they first need to confirm that, yes, NURSE is a real word in English. To do this, they presumably need to locate the word NURSE in their mental dictionary; once they find it, they can be sure that these letters do form a legitimate word. What this means, though, is that they will have searched for, and activated, the node in memory that represents this word—and this, we have hypothesized, will trigger a spread of activation outward from the node, bringing activation to other, nearby nodes. These nearby nodes will surely include the node for DOCTOR, since there’s a strong association between “nurse” and “doctor.” Therefore, once the node for NURSE is activated, some activation should also spread to the node for DOCTOR.

Once they’ve dealt with NURSE, the participants can turn their attention to the second word in the pair. To make a decision about DOCTOR (is this string a word or not?), the participants must locate the node for this word in memory. If they find the relevant node, then they know that this string, too, is a word and can hit the “yes” button. But of course the process of activating the node for DOCTOR has already begun, thanks to the activation this node just received from the node for NURSE. This should accelerate the process of bringing the DOCTOR node to threshold (since it’s already partway there), and so it will take less time to activate. Hence, we expect quicker responses to DOCTOR in this context, compared to a context in which it was preceded by some unrelated word and therefore not primed. This prediction is correct. Participants’ lexical decision responses are faster by almost 100 milliseconds if the stimulus words are related, so that the first word can prime the second in the way we just described.
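The logic of this prediction (a node that is already partway to threshold needs less time to get there) can be shown with a small arithmetic sketch. All of the numbers are assumptions: the function `time_to_threshold`, the activation rate, and the amount of priming are made up, and they were chosen only so that the difference falls near the roughly 100-millisecond advantage mentioned above.

```python
def time_to_threshold(resting, threshold=1.0, rate=0.002):
    """Milliseconds for a node to climb from `resting` to `threshold`.

    Assumes activation rises at a constant `rate` per millisecond,
    a deliberate simplification for illustration.
    """
    return (threshold - resting) / rate


# GARDEN-DOCTOR: the DOCTOR node starts from zero (no priming).
unprimed_ms = time_to_threshold(resting=0.0)

# NURSE-DOCTOR: the DOCTOR node has already received some activation.
primed_ms = time_to_threshold(resting=0.2)

print(f"unprimed: {unprimed_ms:.0f} ms")
print(f"primed:   {primed_ms:.0f} ms")
print(f"saving:   {unprimed_ms - primed_ms:.0f} ms")
```

The exact figures mean nothing in themselves; what matters is the direction of the effect, which follows from the head start that spreading activation gives to the primed node.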

We’ve described this sequence of events within a relatively uninteresting task—participants merely deciding whether letter strings are words in English or not. But the same dynamic—with one node priming other, nearby nodes—plays a role in, and can shape, the flow of our thoughts. For example, we mentioned that the sequence of ideas in a dream is shaped by which nodes are primed. Likewise, in problem solving, we sometimes have to hunt through memory, looking for ideas about how to tackle the problem we’re confronting. In this process, we’re plainly guided by the pattern of which nodes are activated (and so more available) and which nodes aren’t. This pattern of activation in turn depends on how the nodes are connected to each other—and so the arrangement of our knowledge within long-term memory can have a powerful impact on whether we’ll locate a problem’s solution.
