Chapter: Embedded Systems Design : Memory systems

Bus snooping

With bus snooping, a memory cache monitors the external bus for any access to main memory data that it already holds. If the cached copy is more recent than main memory, the cache can either act as memory and supply the data directly, or force the other master off the bus, update main memory and then let the original master retry, so that it obtains valid data. As previously discussed, bus snooping is essential in any multimaster system to ensure cache coherency.
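The choice a snooping cache makes when it sees another master read data it holds can be sketched in C. This is a minimal sketch under stated assumptions: the names `snoop_policy`, `snoop_response` and `snoop_read` are hypothetical, and a real cache makes this decision in hardware on every snooped bus cycle.

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical names: a model of the decision, not a real bus interface. */
typedef enum {
    SNOOP_POLICY_RETRY,     /* force the master off, update memory, master retries */
    SNOOP_POLICY_INTERVENE  /* cache acts as memory and supplies the data itself */
} snoop_policy;

typedef enum {
    RESPONSE_NONE,          /* main memory answers the access as normal */
    RESPONSE_RETRY,
    RESPONSE_SUPPLY_DIRECT
} snoop_response;

/* How a snooping cache reacts to another master reading main memory.
 * hit:         the cache already holds the addressed data
 * more_recent: the cached copy is newer than the copy in main memory */
snoop_response snoop_read(bool hit, bool more_recent, snoop_policy policy)
{
    assert(hit || !more_recent);   /* a copy can only be newer if it exists */

    if (!more_recent)
        return RESPONSE_NONE;      /* main memory already holds valid data */
    return policy == SNOOP_POLICY_RETRY ? RESPONSE_RETRY : RESPONSE_SUPPLY_DIRECT;
}
```

Only a cache holding newer data ever interferes with the access; in every other case main memory is left to answer.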

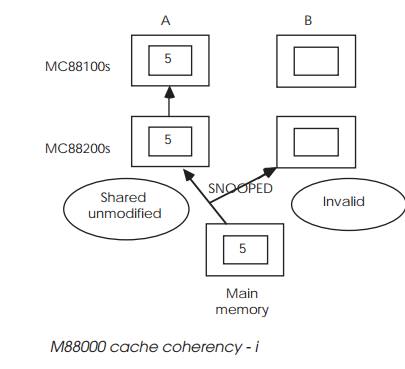

The bus snooping mechanism used by the MC88100/MC88200 combines write policies, cache tag status and bus monitoring to ensure coherency. Nine diagrams show a typical sequence. In the first figure, processor A reads data from main memory and this data is cached. The main memory is declared global and is shared by processors A and B, and both caches have bus snooping enabled for this global memory. This causes the cached data to be tagged as shared unmodified; i.e. another master may need it and the data is identical to that in main memory. A's access is snooped by processor B, which does nothing because its cache entry is invalid. Note that snooping does not require any direct processor or software intervention and is entirely automatic.
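The first step can be sketched in C. This is a minimal sketch, assuming a single one-word cache line per processor; `cache_tag`, `cache_line` and `fill_from_global_memory` are hypothetical names chosen for illustration, not part of any MC88200 programming interface.

```c
#include <assert.h>
#include <stdint.h>

/* Tag states named as in the text; the enum itself is illustrative. */
typedef enum {
    TAG_INVALID,
    TAG_SHARED_UNMODIFIED,
    TAG_EXCLUSIVE_UNMODIFIED,
    TAG_EXCLUSIVE_MODIFIED
} cache_tag;

/* Hypothetical one-word cache line. */
typedef struct {
    cache_tag tag;
    uint32_t data;
} cache_line;

/* Read miss on global (snooped) memory: fill the line from main memory and
 * tag it shared unmodified, since another master may need the data and the
 * cached copy is identical to main memory. */
void fill_from_global_memory(cache_line *line, uint32_t memory_word)
{
    assert(line->tag == TAG_INVALID);   /* only called on a miss */
    line->data = memory_word;
    line->tag = TAG_SHARED_UNMODIFIED;
}
```

Processor B's cache needs no code at this step: its entry is invalid, so the snooped read leaves it untouched.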

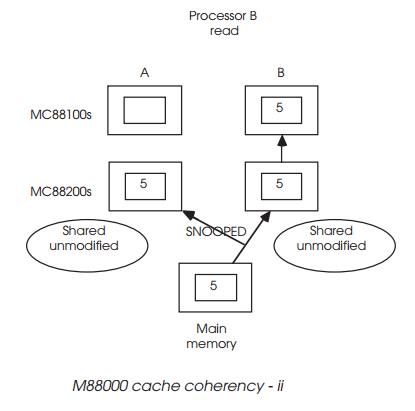

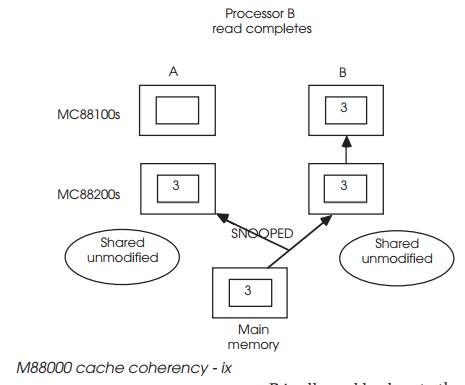

Processor B then accesses main memory, as shown in the next diagram, and updates its cache as well. This access is snooped by A, but its current tag of shared unmodified is still correct, so nothing is done.

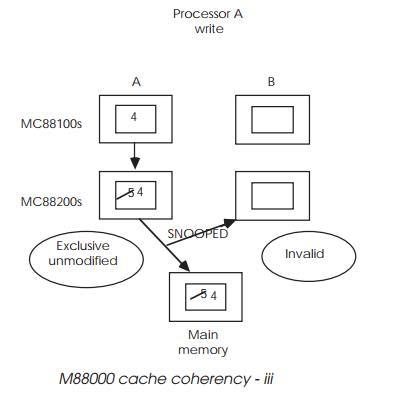

Processor A then modifies its data, as shown in diagram (iii), and by virtue of a first write-allocate policy writes through to main memory. It changes the tag to exclusive unmodified; i.e. the data is cached exclusively by A and is coherent with main memory. Processor B snoops the access and immediately invalidates the old copy in its cache.
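The write-through of this first write, and the invalidation it triggers in the other cache, might be modelled as follows. The names are hypothetical and each cache line holds a single word; the sketch covers only the first-write behaviour described above, not a complete MC88200 model.

```c
#include <assert.h>
#include <stdint.h>

typedef enum {
    TAG_INVALID,
    TAG_SHARED_UNMODIFIED,
    TAG_EXCLUSIVE_UNMODIFIED,
    TAG_EXCLUSIVE_MODIFIED
} cache_tag;

typedef struct {
    cache_tag tag;
    uint32_t data;
} cache_line;

/* Processor write hit on a shared unmodified line (the first write).
 * The write goes through to main memory, where other caches can snoop it;
 * afterwards this cache holds the only copy, still equal to memory. */
void write_through_first(cache_line *line, uint32_t *memory_word, uint32_t value)
{
    assert(line->tag == TAG_SHARED_UNMODIFIED);
    line->data = value;
    *memory_word = value;                  /* bus write, visible to snoopers */
    line->tag = TAG_EXCLUSIVE_UNMODIFIED;
}

/* A snooping cache that sees another master write data it holds
 * discards its now-stale copy. */
void snoop_external_write(cache_line *line)
{
    /* A modified copy would need writing back first; not modelled here. */
    assert(line->tag != TAG_EXCLUSIVE_MODIFIED);
    line->tag = TAG_INVALID;
}
```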

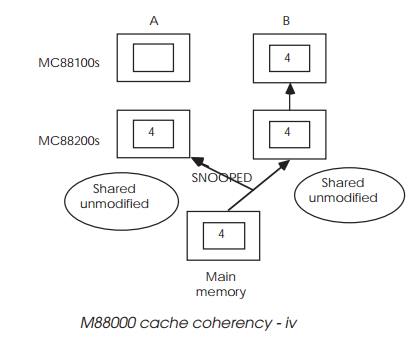

When processor B next needs the data, it is read from main memory and written into B's cache as shared unmodified data. This access is snooped by A, which changes its own entry to the same status. Both processors now know that the data they hold is coherent with main memory and is shared.
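Both snooped reads described so far (A snooping B's first read, and A snooping B's later read of its exclusive unmodified data) reduce to a small tag update in the snooping cache. A minimal sketch, with a hypothetical function name; the exclusive modified case is deliberately excluded because it needs a retry and copyback instead.

```c
#include <assert.h>

typedef enum {
    TAG_INVALID,
    TAG_SHARED_UNMODIFIED,
    TAG_EXCLUSIVE_UNMODIFIED,
    TAG_EXCLUSIVE_MODIFIED
} cache_tag;

/* New tag for a cache that snoops another master's read of data it holds.
 * Exclusive modified lines take the retry-and-copyback path instead. */
cache_tag snoop_external_read(cache_tag tag)
{
    assert(tag != TAG_EXCLUSIVE_MODIFIED);
    if (tag == TAG_EXCLUSIVE_UNMODIFIED)
        return TAG_SHARED_UNMODIFIED;   /* the data is now shared */
    return tag;                         /* invalid stays invalid; shared stays shared */
}
```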

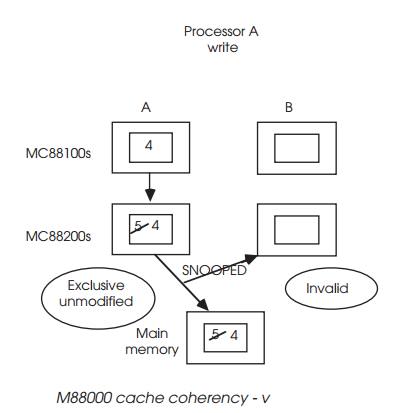

Processor A now modifies the data. The write goes out to main memory and is snooped by B, which marks its cache entry as invalid. Again, the first write-allocate policy is in effect.

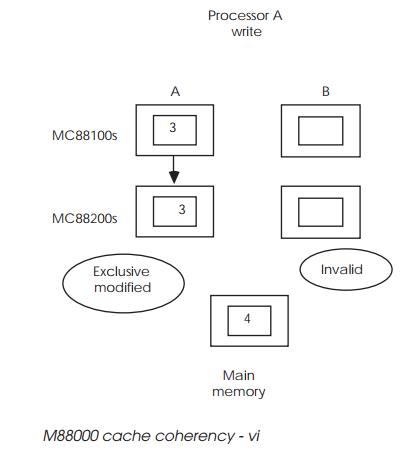

Processor A modifies the data again but, because copyback has been selected, the data is not written out to main memory. Its cache entry is now tagged as exclusive modified; i.e. this may be the only valid copy in the system.
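The copyback write can be sketched as a tag change with no bus activity at all. The function name `write_copyback` and the one-word line are assumptions for illustration.

```c
#include <assert.h>
#include <stdint.h>

typedef enum {
    TAG_INVALID,
    TAG_SHARED_UNMODIFIED,
    TAG_EXCLUSIVE_UNMODIFIED,
    TAG_EXCLUSIVE_MODIFIED
} cache_tag;

typedef struct {
    cache_tag tag;
    uint32_t data;
} cache_line;

/* Processor write hit on a line this cache holds exclusively. With copyback
 * selected, only the cache is updated: main memory is now stale and this
 * may be the only valid copy in the system. */
void write_copyback(cache_line *line, uint32_t value)
{
    assert(line->tag == TAG_EXCLUSIVE_UNMODIFIED ||
           line->tag == TAG_EXCLUSIVE_MODIFIED);
    line->data = value;                 /* no bus cycle, nothing to snoop */
    line->tag = TAG_EXCLUSIVE_MODIFIED;
}
```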

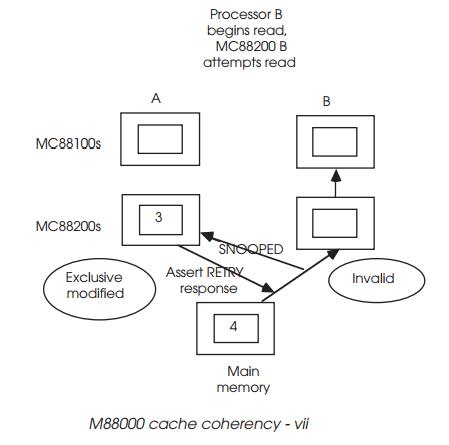

Processor B tries to get the data and starts an external memory access, as shown. Processor A snoops this access, recognises that it holds the valid copy and asserts a retry response to processor B. B comes off the bus, allowing processor A to update main memory and change its cache tag status to shared unmodified.
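Processor A's side of this exchange could be sketched as below, assuming a hypothetical `bus_response` type with just an acknowledge and a retry. Only the exclusive modified case is handled; any other tag is left to the ordinary snooped-read path and is not changed here.

```c
#include <assert.h>
#include <stdint.h>

typedef enum {
    TAG_INVALID,
    TAG_SHARED_UNMODIFIED,
    TAG_EXCLUSIVE_UNMODIFIED,
    TAG_EXCLUSIVE_MODIFIED
} cache_tag;

typedef struct {
    cache_tag tag;
    uint32_t data;
} cache_line;

typedef enum { BUS_ACK, BUS_RETRY } bus_response;

/* A snooped read hits a line held as exclusive modified: respond with a
 * retry, copy the line back to main memory while the other master is off
 * the bus, and drop to shared unmodified. */
bus_response snoop_read_modified(cache_line *line, uint32_t *memory_word)
{
    assert(line && memory_word);
    if (line->tag != TAG_EXCLUSIVE_MODIFIED)
        return BUS_ACK;                 /* memory already holds valid data */
    *memory_word = line->data;          /* copyback */
    line->tag = TAG_SHARED_UNMODIFIED;
    return BUS_RETRY;
}
```

When the other master retries, the same function returns `BUS_ACK`, because the line is no longer modified and main memory is now correct.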

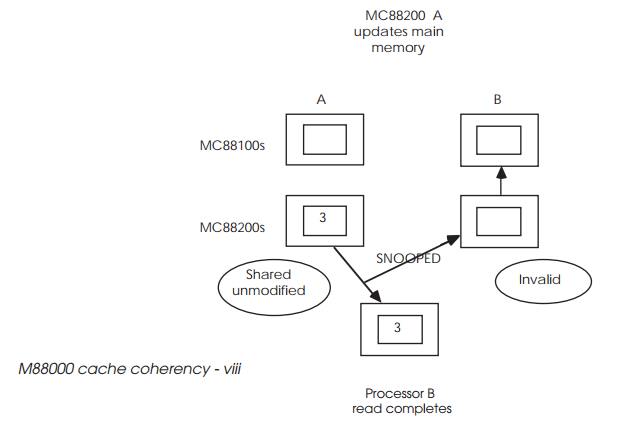

Once this is complete, processor B is allowed back onto the bus to complete its original access, this time with main memory containing the correct data.
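The whole sequence can be replayed with a small two-processor model. This is a sketch under simplifying assumptions (one global memory word, one line per cache, write misses not modelled, hypothetical names throughout); it reproduces the tag changes described in the text rather than the MC88200's actual control logic.

```c
#include <assert.h>
#include <stdint.h>

typedef enum {
    TAG_INVALID,
    TAG_SHARED_UNMODIFIED,
    TAG_EXCLUSIVE_UNMODIFIED,
    TAG_EXCLUSIVE_MODIFIED
} cache_tag;

typedef struct {
    cache_tag tag;
    uint32_t data;
} cache_line;

enum { CPU_A = 0, CPU_B = 1 };

/* Hypothetical two-processor model: one global memory word, one line per cache. */
typedef struct {
    uint32_t memory;
    cache_line cache[2];
    int retries;                            /* retry responses issued */
} two_cpu_system;

/* Processor `cpu` reads the word; the other cache snoops any bus access. */
uint32_t cpu_read(two_cpu_system *s, int cpu)
{
    cache_line *self = &s->cache[cpu];
    cache_line *other = &s->cache[1 - cpu];

    if (self->tag != TAG_INVALID)
        return self->data;                  /* cache hit: no bus access */

    if (other->tag == TAG_EXCLUSIVE_MODIFIED) {
        s->retries++;                       /* other cache asserts retry, */
        s->memory = other->data;            /* copies its line back,      */
        other->tag = TAG_SHARED_UNMODIFIED; /* and now shares the data    */
    } else if (other->tag == TAG_EXCLUSIVE_UNMODIFIED) {
        other->tag = TAG_SHARED_UNMODIFIED;
    }
    /* The (re)tried access completes from main memory, which is now valid. */
    self->data = s->memory;
    self->tag = TAG_SHARED_UNMODIFIED;
    return self->data;
}

/* Processor `cpu` writes the word it already holds in its cache. */
void cpu_write(two_cpu_system *s, int cpu, uint32_t value)
{
    cache_line *self = &s->cache[cpu];
    cache_line *other = &s->cache[1 - cpu];

    assert(self->tag != TAG_INVALID);       /* write misses are not modelled */
    self->data = value;
    if (self->tag == TAG_SHARED_UNMODIFIED) {
        s->memory = value;                  /* first write goes through to memory */
        other->tag = TAG_INVALID;           /* snooped: other copy is now stale */
        self->tag = TAG_EXCLUSIVE_UNMODIFIED;
    } else {
        self->tag = TAG_EXCLUSIVE_MODIFIED; /* copyback: memory left stale */
    }
}
```

Driving `cpu_read` and `cpu_write` in the order of the walkthrough (A reads, B reads, A writes, B reads, A writes twice, B reads) leaves both caches shared unmodified, main memory holding A's last value, and exactly one retry issued.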

This sequence is relatively simple compared with those encountered in real systems, where page faults, cache flushing and similar events further complicate the state diagrams. The control logic of the CMMU (the MC88200 cache/memory management unit) is far more complex than that of the MC88100 processor itself, which demonstrates the complexity involved in ensuring cache coherency in multiprocessor systems.

The problem of maintaining cache coherency has led to the development of two standard mechanisms: MESI and MEI. The MC88100 sequence just discussed is similar to the MESI protocol, while the MC68040 cache coherency scheme is similar to the MEI protocol.
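Assuming the usual correspondence between the tag names used in the walkthrough and the MESI states, the mapping can be written down directly; MEI simply has no shared state. The function names are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>

typedef enum {
    TAG_INVALID,
    TAG_SHARED_UNMODIFIED,
    TAG_EXCLUSIVE_UNMODIFIED,
    TAG_EXCLUSIVE_MODIFIED
} cache_tag;

/* MESI letter for each tag name used in the walkthrough. */
char mesi_letter(cache_tag tag)
{
    switch (tag) {
    case TAG_EXCLUSIVE_MODIFIED:   return 'M';
    case TAG_EXCLUSIVE_UNMODIFIED: return 'E';
    case TAG_SHARED_UNMODIFIED:    return 'S';
    case TAG_INVALID:              return 'I';
    }
    assert(!"unknown tag");
    return '?';
}

/* MEI keeps modified, exclusive and invalid but has no shared state. */
bool representable_in_mei(cache_tag tag)
{
    return mesi_letter(tag) != 'S';
}
```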
