Chapter: Security in Computing : Security in Networks


Encryption

Encryption is probably the most important and versatile tool for a network security expert. We have seen in earlier chapters that encryption is powerful for providing privacy, authenticity, integrity, and limited access to data. Because networks often involve even greater risks, they often secure data with encryption, perhaps in combination with other controls.

Before we begin to study the use of encryption to counter network security threats, let us consider three points. First, remember that encryption is not a panacea or silver bullet. A flawed system design with encryption is still a flawed system design. Second, notice that encryption protects only what is encrypted (which should be obvious but isn't). Data are exposed between a user's fingertips and the encryption process before they are transmitted, and they are exposed again once they have been decrypted on the remote end. The best encryption cannot protect against a malicious Trojan horse that intercepts data before the point of encryption. Finally, encryption is no more secure than its key management. If an attacker can guess or deduce a weak encryption key, the game is over. People who do not understand encryption sometimes mistake it for fairy dust to sprinkle on a system for magic protection. This book would not be needed if such fairy dust existed.
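The following sketch makes the key-management point concrete. It assumes a hypothetical scheme in which the key is simply a hash of a four-digit PIN, and it uses a toy SHA-256 keystream in place of a real cipher. There are only 10,000 possible PINs. An attacker who knows how a message begins can therefore try every one in a fraction of a second, however good the cipher is.

```python
import hashlib


def key_from_pin(pin: str) -> bytes:
    # Hypothetical weak key derivation: the key is just a hash of a 4-digit PIN
    return hashlib.sha256(pin.encode()).digest()


def toy_crypt(key: bytes, data: bytes) -> bytes:
    # Toy stream cipher (illustration only): XOR the data with SHA-256(key || counter)
    # blocks. Applying it twice with the same key returns the original data.
    out = bytearray()
    for offset in range(0, len(data), 32):
        block = hashlib.sha256(key + offset.to_bytes(8, "big")).digest()
        out.extend(b ^ k for b, k in zip(data[offset:offset + 32], block))
    return bytes(out)


def brute_force(ciphertext: bytes, known_prefix: bytes):
    # Try every possible PIN; a known-plaintext prefix tells us when we have found it.
    for n in range(10_000):
        pin = f"{n:04d}"
        guess = toy_crypt(key_from_pin(pin), ciphertext)
        if guess.startswith(known_prefix):
            return pin, guess
    return None


secret_pin = "4831"
ciphertext = toy_crypt(key_from_pin(secret_pin), b"TRANSFER 500 TO ACCOUNT 12345")
recovered_pin, recovered_text = brute_force(ciphertext, b"TRANSFER")
print(recovered_pin, recovered_text)
```

A randomly chosen 128-bit key would offer 2^128 possibilities instead of 10^4. The weakness shown here lies in how the key was chosen, not in the cipher.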

In network applications, encryption can be applied either between two hosts (called link encryption) or between two applications (called end-to-end encryption). We consider each below. With either form of encryption, key distribution is always a problem. Encryption keys must be delivered to the sender and receiver in a secure manner. In this section, we also investigate techniques for safe key distribution in networks. Finally, we study a cryptographic facility for a network computing environment.

Link Encryption

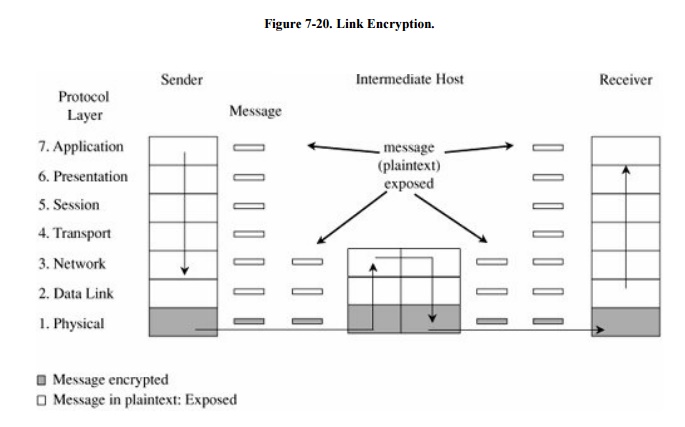

In link encryption, data are encrypted just before the system places them on the physical communications link. In this case, encryption occurs at layer 1 or 2 in the OSI model. (A similar situation occurs with TCP/IP protocols.) Similarly, decryption occurs just as the communication arrives at and enters the receiving computer. A model of link encryption is shown in Figure 7-20.

Encryption protects the message in transit between two computers, but the message is in plaintext inside the hosts. (A message in plaintext is said to be "in the clear.") Notice that because the encryption is added at the bottom protocol layer, the message is exposed in all other layers of the sender and receiver. If we have good physical security, we may not be too concerned about this exposure; the exposure occurs on the sender's or receiver's host or workstation, protected by alarms or locked doors, for example. Nevertheless, you should notice that the message is exposed in two layers of all intermediate hosts through which the message may pass. This exposure occurs because routing and addressing are not read at the bottom layer, but only at higher layers. The message is in the clear in the intermediate hosts, and one of these hosts may not be especially trustworthy.
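The exposure at intermediate hosts can be simulated in a few lines. This minimal sketch assumes a hypothetical path from A through C to B in which each physical link has its own key. A toy SHA-256 keystream stands in for a real link cipher, and the node names and keys are illustrative only.

```python
import hashlib


def toy_crypt(key: bytes, data: bytes) -> bytes:
    # Toy stream cipher (illustration only): XOR with SHA-256(key || counter) blocks.
    out = bytearray()
    for offset in range(0, len(data), 32):
        block = hashlib.sha256(key + offset.to_bytes(8, "big")).digest()
        out.extend(b ^ k for b, k in zip(data[offset:offset + 32], block))
    return bytes(out)


# Hypothetical per-link keys: each link has its own key, shared by its two endpoints.
LINK_KEYS = {("A", "C"): b"key for link A-C", ("C", "B"): b"key for link C-B"}


def route_with_link_encryption(frame: bytes, path: list):
    on_the_wire = []      # what a wiretapper on each link observes
    clear_in_host = {}    # what each receiving host holds in plaintext
    for src, dst in zip(path, path[1:]):
        key = LINK_KEYS[(src, dst)]
        wire = toy_crypt(key, frame)     # encrypted just before leaving src
        on_the_wire.append(wire)
        frame = toy_crypt(key, wire)     # decrypted as it enters dst
        clear_in_host[dst] = frame       # dst must read the header to route it onward
    return frame, on_the_wire, clear_in_host


message = b"to=B|body=quarterly figures"
delivered, wire_traffic, exposed = route_with_link_encryption(message, ["A", "C", "B"])
print(exposed["C"])   # the intermediate host C holds the whole message in the clear
```

Each link is protected on the wire. Host C, however, must decrypt the frame to read the routing information, so the message sits in the clear inside C.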

Link encryption is invisible to the user. The encryption becomes a transmission service performed by a low-level network protocol layer, just like message routing or transmission error detection. Figure 7-21 shows a typical link-encrypted message, with the shaded fields encrypted. Because some of the data link header and trailer is applied before the block is encrypted, part of each of those blocks is shaded. As the message M is handled at each layer, header and control information is added on the sending side and removed on the receiving side. Hardware encryption devices operate quickly and reliably; in this case, link encryption is invisible to the operating system as well as to the operator.

Link encryption is especially appropriate when the transmission line is the point of greatest vulnerability. If all hosts on a network are reasonably secure but the communications medium is shared with other users or is not secure, link encryption is an easy control to use.

End-to-End Encryption

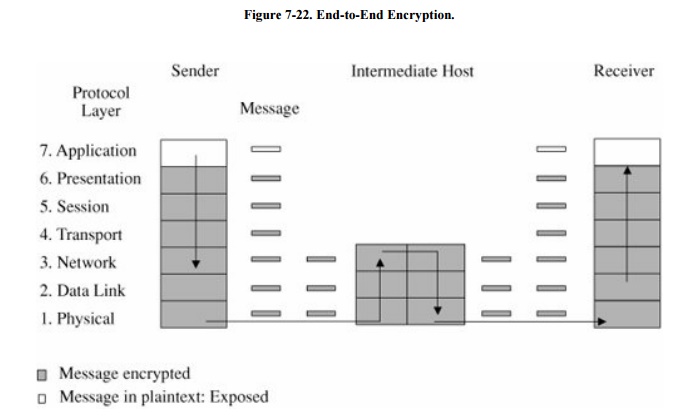

As its name implies, end-to-end encryption provides security from one end of a transmission to the other. The encryption can be applied by a hardware device between the user and the host. Alternatively, the encryption can be done by software running on the host computer. In either case, the encryption is performed at the highest levels (layer 7, application, or perhaps at layer 6, presentation) of the OSI model. A model of end-to-end encryption is shown in Figure 7-22.

Since the encryption precedes

all the routing and transmission processing of the layer, the message is

transmitted in encrypted form throughout the network. The encryption addresses

potential flaws in lower layers in the transfer model. If a lower layer should

fail to preserve security and reveal data it has received, the data's

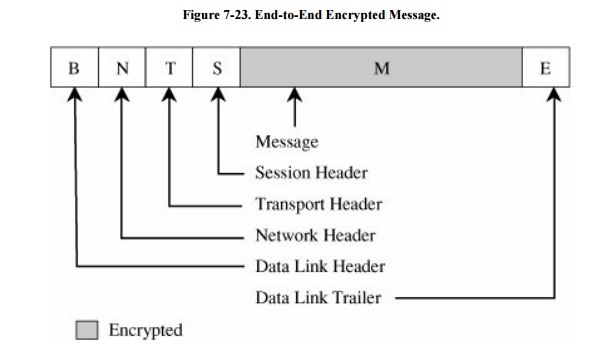

confidentiality is not endangered. Figure 7-23

shows a typical message with end-to-end encryption, again with the encrypted

field shaded.

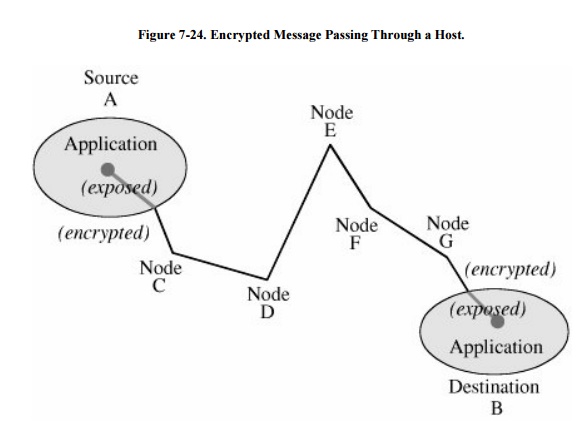

When end-to-end encryption is used, messages sent through several hosts are protected. The data content of the message remains encrypted at every intermediate host, as shown in Figure 7-24. Therefore, even though a message must pass through potentially insecure nodes (such as C through G) on the path between A and B, the message is protected against disclosure while in transit.
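For contrast, here is a similar toy simulation with end-to-end encryption. It assumes a key known only to A and B, a dictionary standing in for a packet, and the same illustrative SHA-256 keystream in place of a real cipher. Nodes C through G only forward the packet, so all they can record is the destination and the ciphertext.

```python
import hashlib


def toy_crypt(key: bytes, data: bytes) -> bytes:
    # Toy stream cipher (illustration only): XOR with SHA-256(key || counter) blocks.
    out = bytearray()
    for offset in range(0, len(data), 32):
        block = hashlib.sha256(key + offset.to_bytes(8, "big")).digest()
        out.extend(b ^ k for b, k in zip(data[offset:offset + 32], block))
    return bytes(out)


# Hypothetical key shared only by the two endpoints, A and B.
END_TO_END_KEY = b"key shared by A and B only"


def make_packet(dest: str, payload: bytes) -> dict:
    # Routing information stays readable; only the application data is encrypted.
    return {"dest": dest, "body": toy_crypt(END_TO_END_KEY, payload)}


def forward(node: str, packet: dict, log: list) -> dict:
    # An intermediate node reads the header to route, but holds no key for the body.
    log.append((node, packet["dest"], packet["body"]))
    return packet


message = b"quarterly figures"
packet = make_packet("B", message)
observed = []
for node in ["C", "D", "E", "F", "G"]:   # potentially untrustworthy intermediate nodes
    packet = forward(node, packet, observed)
received = toy_crypt(END_TO_END_KEY, packet["body"])   # only B holds the key to do this
```

The intermediate nodes need no cryptographic facility at all. The price is that A and B must already share a key.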

Comparison of Encryption Methods

Simply encrypting a message is not absolute assurance that it will not be revealed during or after transmission. In many instances, however, the strength of encryption is adequate protection, considering the likelihood of the interceptor's breaking the encryption and the timeliness of the message. As with many aspects of security, we must balance the strength of protection with the likelihood of attack. (You will learn more about managing these risks in Chapter 8.)

With link encryption, encryption is invoked for all transmissions along a particular link. Typically, a given host has only one link into a network, meaning that all network traffic initiated on that host will be encrypted by that host. But this encryption scheme implies that every other host receiving these communications must also have a cryptographic facility to decrypt the messages. Furthermore, all hosts must share keys. A message may pass through one or more intermediate hosts on the way to its final destination. If the message is encrypted along some links of a network but not others, then part of the advantage of encryption is lost. Therefore, link encryption is usually performed on all links of a network if it is performed at all.

By contrast, end-to-end encryption is applied to "logical links," which are channels between two processes, at a level well above the physical path. Since the intermediate hosts along a transmission path do not need to encrypt or decrypt a message, they have no need for cryptographic facilities. Thus, encryption is used only for those messages and applications for which it is needed. Furthermore, the encryption can be done with software, so we can apply it selectively, one application at a time or even to one message within a given application.

The selective advantage of end-to-end encryption is also a disadvantage regarding encryption keys. Under end-to-end encryption, there is a virtual cryptographic channel between each pair of users. To provide proper security, each pair of users should share a unique cryptographic key. The number of keys required is thus equal to the number of pairs of users, which is n(n - 1)/2 for n users. This number increases rapidly as the number of users increases. However, this count assumes that single-key (symmetric) encryption is used. With a public key system, only one pair of keys is needed per recipient, so n users need only n key pairs.
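The arithmetic is easy to check. The short calculation below describes no particular product. It compares the n(n - 1)/2 pairwise secret keys of symmetric end-to-end encryption with the single key pair per user that a public key system needs.

```python
def symmetric_keys(n: int) -> int:
    # One shared secret key per pair of users: n(n - 1)/2
    return n * (n - 1) // 2


def public_key_pairs(n: int) -> int:
    # One (public, private) key pair per user
    return n


for users in (10, 100, 1000):
    print(f"{users:>5} users: {symmetric_keys(users):>7} secret keys "
          f"vs {public_key_pairs(users):>5} key pairs")
```

For 1,000 users, that is 499,500 secret keys against 1,000 key pairs.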

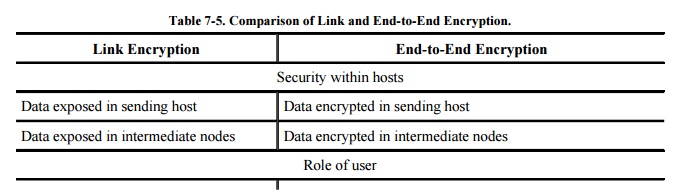

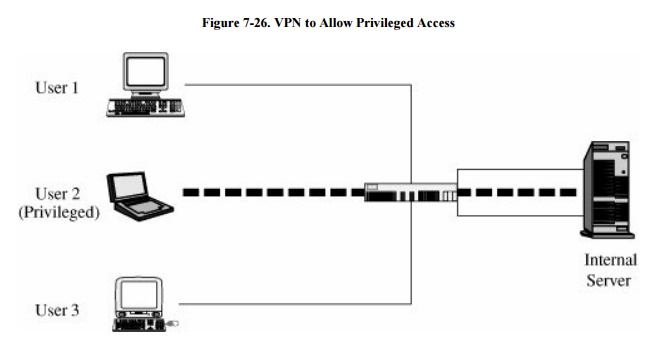

As shown in Table 7-5, link encryption is faster, easier for the user, and uses fewer keys. End-to-end encryption is more flexible, can be used selectively, is done at the user level, and can be integrated with the application. Neither form is right for all situations.

In some cases, both forms of encryption can be applied. A user who does not trust the quality of the link encryption provided by a system can apply end-to-end encryption as well. A system administrator who is concerned about the security of an end-to-end encryption scheme applied by an application program can also install a link encryption device. If both encryptions are relatively fast, this duplication of security has little negative effect.

Virtual Private Networks

Link encryption can be used to give a network's users the sense that they are on a private network, even when it is part of a public network. For this reason, the approach is called a virtual private network (or VPN).

Typically, physical security and administrative security are strong enough to protect transmission inside the perimeter of a network. Thus, the greatest exposure for a user is between the user's workstation or client and the perimeter of the host network or server.

A firewall is an access control device that sits between two networks or two network segments. It filters all traffic between the protected or "inside" network and a less trustworthy or "outside" network or segment. (We examine firewalls in detail later in this chapter.)

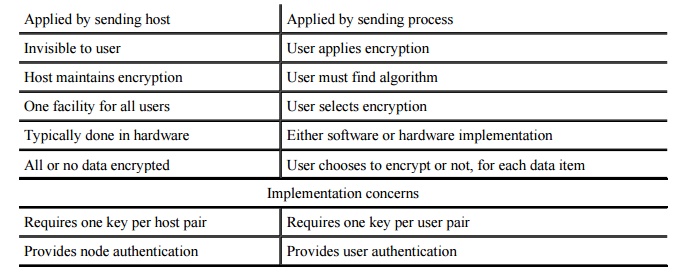

Many firewalls can be used to implement a VPN. When a user first establishes a communication session with the firewall, the user can request a VPN session with the firewall. The user's client and the firewall negotiate a session encryption key, and the firewall and the client subsequently use that key to encrypt all traffic between the two. In this way, the larger network is restricted only to those given special access by the VPN. In other words, it feels to the user that the network is private, even though it is not. With the VPN, we say that the communication passes through an encrypted tunnel, or simply a tunnel. Establishment of a VPN is shown in Figure 7-25.

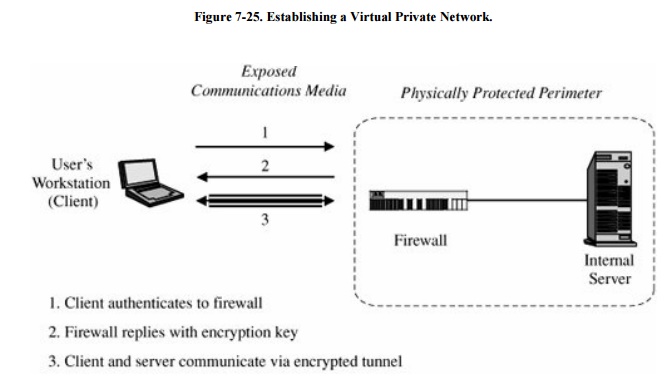

Virtual private networks are created when the firewall interacts with an authentication service inside the perimeter. The firewall may pass user authentication data to the authentication server and, upon confirmation of the authenticated identity, the firewall provides the user with appropriate security privileges. For example, a known trusted person, such as an employee or a system administrator, may be allowed to access resources not available to general users. The firewall implements this access control on the basis of the VPN. A VPN with privileged access is shown in Figure 7-26. In that figure, the firewall passes to the internal server the (privileged) identity of User 2.

PKI and Certificates

A public key infrastructure, or PKI, is a process created to enable users to implement public key cryptography, usually in a large (and frequently, distributed) setting. PKI offers each user a set of services, related to identification and access control, as follows:

· Create certificates associating a user's identity with a (public) cryptographic key
· Give out certificates from its database
· Sign certificates, adding its credibility to the authenticity of the certificate
· Confirm (or deny) that a certificate is valid
· Invalidate certificates for users who no longer are allowed access or whose private key has been exposed

PKI is often considered to be a standard, but in fact it is a set of policies, products, and procedures that leave some room for interpretation. (Housley and Polk [HOU01b] describe both the technical parts and the procedural issues in developing a PKI.) The policies define the rules under which the cryptographic systems should operate. In particular, the policies specify how to handle keys and valuable information and how to match level of control to level of risk. The procedures dictate how the keys should be generated, managed, and used. Finally, the products actually implement the policies, and they generate, store, and manage the keys.

PKI sets up entities, called certificate authorities, that implement the PKI policy on certificates. The general idea is that a certificate authority is trusted, so users can delegate the construction, issuance, acceptance, and revocation of certificates to the authority, much as one would use a trusted bouncer to allow only some people to enter a restricted nightclub. The specific actions of a certificate authority include the following:

· managing public key certificates for their whole life cycle
· issuing certificates by binding a user's or system's identity to a public key with a digital signature
· scheduling expiration dates for certificates
· ensuring that certificates are revoked when necessary by publishing certificate revocation lists
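The certificate authority's duties listed above (binding identity to key with a signature, scheduling expiration, and publishing revocations) can be sketched as a toy. The sketch rests on loudly stated assumptions:

· textbook RSA with fixed, publicly known primes stands in for a real signature scheme
· an integer stands in for the subject's public key
· an in-memory set stands in for a published certificate revocation list

Nothing here follows the actual X.509 certificate format.

```python
import hashlib
from dataclasses import dataclass, replace
from datetime import date

# Toy CA signing key: textbook RSA with fixed, publicly known primes.
# Insecure by design; real CAs use standardized signature schemes and protected keys.
P, Q = 2**61 - 1, 2**89 - 1
N, E = P * Q, 65537
D = pow(E, -1, (P - 1) * (Q - 1))   # CA's private exponent (needs Python 3.8+)


def digest(data: bytes) -> int:
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % N


@dataclass(frozen=True)
class Certificate:
    serial: int
    subject: str
    subject_key: int      # hypothetical stand-in for the subject's public key
    not_after: date       # expiration date scheduled by the CA
    signature: int = 0

    def to_be_signed(self) -> bytes:
        return f"{self.serial}|{self.subject}|{self.subject_key}|{self.not_after}".encode()


class ToyCertificateAuthority:
    def __init__(self):
        self.next_serial = 1
        self.revoked = set()   # serial numbers "published" on the revocation list

    def issue(self, subject: str, subject_key: int, not_after: date) -> Certificate:
        cert = Certificate(self.next_serial, subject, subject_key, not_after)
        self.next_serial += 1
        # Bind identity to key by signing the certificate contents with the CA's private key
        return replace(cert, signature=pow(digest(cert.to_be_signed()), D, N))

    def revoke(self, cert: Certificate) -> None:
        self.revoked.add(cert.serial)

    def is_valid(self, cert: Certificate, today: date) -> bool:
        signature_ok = pow(cert.signature, E, N) == digest(cert.to_be_signed())
        return signature_ok and today <= cert.not_after and cert.serial not in self.revoked


ca = ToyCertificateAuthority()
alice = ca.issue("alice@example.com", subject_key=123456789, not_after=date(2030, 1, 1))
print(ca.is_valid(alice, date(2025, 6, 1)))   # genuine, unexpired, unrevoked
print(ca.is_valid(replace(alice, subject="mallory@example.com"), date(2025, 6, 1)))  # altered
print(ca.is_valid(alice, date(2031, 1, 1)))   # expired
ca.revoke(alice)
print(ca.is_valid(alice, date(2025, 6, 1)))   # revoked
```

Anyone holding the CA's public values (N, E) can check the signature. Expiration and revocation, however, are judged against the CA's schedule and list. This is why a relying party must consult the revocation list and not trust the signature alone.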

The functions of a certificate authority can be done in-house or by a commercial service or a trusted third party.

PKI also involves a registration authority that acts as an interface between a user and a certificate authority. The registration authority captures and authenticates the identity of a user and then submits a certificate request to the appropriate certificate authority. In this sense, the registration authority is much like the U.S. Postal Service; the postal service acts as an agent of the U.S. State Department to enable U.S. citizens to obtain passports (official U.S. authentication) by providing the appropriate forms, verifying identity, and requesting the actual passport (akin to a certificate) from the appropriate passport-issuing office (the certificate authority). As with passports, the quality of the registration authority determines the level of trust that can be placed in the certificates that are issued. PKI fits most naturally in a hierarchically organized, centrally controlled organization, such as a government agency.

PKI efforts are under way in many countries to enable companies and government agencies to implement PKI and interoperate. For example, a Federal PKI Initiative in the United States will eventually allow any U.S. government agency to send secure communication to any other U.S. government agency, when appropriate. The initiative also specifies how commercial PKI-enabled tools should operate, so agencies can buy ready-made PKI products rather than build their own. The European Union has a similar initiative (see www.europepki.org for more information). Sidebar 7-8 describes the commercial use of PKI in a major U.K. bank. Major vendors of PKI solutions include Baltimore Technologies, Northern Telecom/Entrust, and Identrus.

Sidebar 7-8: Using PKI at Lloyd's Bank

Lloyd's TSB is a savings bank based in the United Kingdom. With 16 million customers and over 2,000 branches, Lloyd's has 1.2 million registered Internet customers. In fact, lloydstsb.com is the most visited financial web site in the United Kingdom [ACT02]. In 2002, Lloyd's implemented a pilot project using smart cards for online banking services. Called the Key Online Banking (KOB) program, it is the first large-scale deployment of smart-card-based PKI for Internet banking. Market research revealed that 75 percent of the bank's clients found the enhanced security offered by KOB appealing.

To use KOB, customers insert the smart card into an ATM-like device and then supply a unique PIN. Thus, authentication is a two-step approach required before any financial transaction can be conducted. The smart card contains PKI key pairs and digital certificates. When the customer is finished, he or she logs out and removes the smart card to end the banking session.

According to Alan Woods, Lloyd's TSB's business banking director of distribution, "The beauty of the Key Online Banking solution is that it reduces the risk of a business' digital identity credentials from being exposed. This is because, unlike standard PKI systems, the user's private key is not kept on their desktop but is issued, stored, and revoked on the smart card itself. This Key Online Banking smart card is kept with the user at all times."

The benefits of this system are clear to customers, who can transact their business more securely. But the bank has an added benefit as well. The use of PKI protects issuing banks and financial institutions against liability under U.K. law.

Most PKI processes use certificates that bind identity to a key. But research is being done to expand the notion of certificate to a broader characterization of credentials. For instance, a credit card company may be more interested in verifying your financial status than your identity; a PKI scheme may involve a certificate that is based on binding the financial status with a key. The Simple Distributed Security Infrastructure (SDSI) takes this approach, including identity certificates, group membership certificates, and name-binding certificates. As of this writing, there are drafts of two related standards: ANSI standard X9.45 and the Simple Public Key Infrastructure (SPKI); the latter has only a set of requirements and a certificate format.

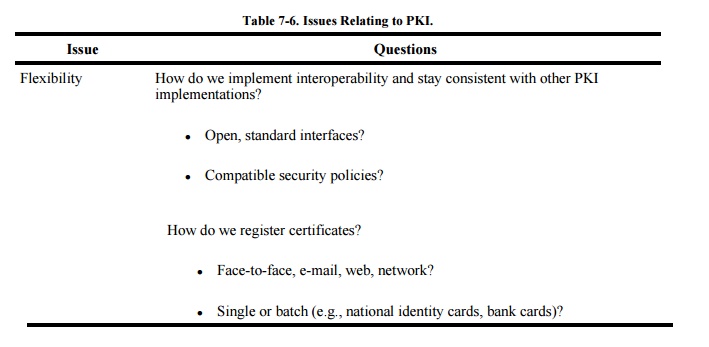

PKI is close to, but not yet, a mature process. Many issues must be resolved, especially since PKI has yet to be implemented commercially on a large scale. Table 7-6 lists several issues to be addressed as we learn more about PKI. However, some things have become clear. First, the certificate authority should be approved and verified by an independent body. The certificate authority's private key should be stored in a tamper-resistant security module. Then, access to the certificate and registration authorities should be tightly controlled, by means of strong user authentication such as smart cards.

Protecting the certificates also involves administrative procedures. For example, more than one operator should be required to authorize certification requests. Controls should be put in place to detect hackers and prevent them from issuing bogus certificate requests. These controls might include digital signatures and strong encryption. Finally, a secure audit trail is necessary for reconstructing certificate information should the system fail and for recovering if a hacking attack does indeed corrupt the authentication process.

SSH Encryption

SSH (secure shell) is a pair of protocols (versions 1 and 2), originally defined for Unix but also available under Windows 2000, that provides an authenticated and encrypted path to the shell or operating system command interpreter. Both SSH versions replace Unix utilities such as Telnet, rlogin, and rsh for remote access. SSH protects against spoofing attacks and modification of data in communication.

The SSH protocol involves negotiation between local and remote sites for the encryption algorithm (for example, DES, IDEA, or AES) and the authentication method (including public key and Kerberos).
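As a minimal sketch of that negotiation, the function below applies the rule that SSH version 2 uses to choose the encryption algorithm for each direction (RFC 4253). The rule is that the first algorithm on the client's preference list that the server also supports wins. The algorithm names are real SSH identifiers, but the two lists are made up for illustration.

```python
def negotiate(client_prefs: list, server_supported: list) -> str:
    # SSH-2 rule for encryption algorithms: pick the first algorithm on the
    # client's list that also appears on the server's list.
    for algorithm in client_prefs:
        if algorithm in server_supported:
            return algorithm
    # With no common algorithm, both sides must disconnect.
    raise ValueError("no algorithm in common; the connection must be closed")


client_ciphers = ["aes256-ctr", "aes128-ctr", "3des-cbc"]
server_ciphers = ["3des-cbc", "aes128-ctr"]
print(negotiate(client_ciphers, server_ciphers))
```

Because the client's order decides, a client can steer the session toward its strongest acceptable algorithm. It still cannot force one the server lacks.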

SSL Encryption

The SSL (Secure Sockets Layer) protocol was originally designed by Netscape to protect communication between a web browser and server. It is now also known as TLS, for transport layer security. SSL interfaces between applications (such as browsers) and the TCP/IP protocols to provide server authentication, optional client authentication, and an encrypted communications channel between client and server. Client and server negotiate a mutually supported suite of algorithms for session encryption and hashing; possibilities include triple DES and SHA1, or RC4 with a 128-bit key and MD5.

To use SSL, the client requests an SSL session. The server responds with its public key certificate so that the client can determine the authenticity of the server. The client returns part of a symmetric session key encrypted under the server's public key. Both the server and client compute the session key, and then they switch to encrypted communication, using the shared session key.
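The key-exchange step can be sketched in the style of SSL's RSA key transport. This minimal sketch rests on three assumptions:

· textbook RSA with fixed primes replaces a real certificate and padded RSA
· checking the certificate is omitted
· a single SHA-256 hash replaces the protocol's actual key-derivation function

```python
import hashlib
import secrets

# Toy server key pair: textbook RSA with fixed, publicly known primes (insecure).
P, Q = 2**61 - 1, 2**89 - 1
N, E = P * Q, 65537                  # server's public key, as sent in its certificate
D = pow(E, -1, (P - 1) * (Q - 1))    # server's private key, never leaves the server
N_BYTES = (N.bit_length() + 7) // 8


def derive_session_key(secret: int, client_random: bytes, server_random: bytes) -> bytes:
    # Simplified stand-in for the protocol's key derivation (the real one uses a PRF).
    return hashlib.sha256(secret.to_bytes(N_BYTES, "big") + client_random + server_random).digest()


# 1. Client and server exchange fresh random values in their hello messages.
client_random = secrets.token_bytes(32)
server_random = secrets.token_bytes(32)

# 2. The client picks a secret and sends it encrypted under the server's public key.
pre_master = secrets.randbelow(N - 2) + 2
encrypted_pre_master = pow(pre_master, E, N)

# 3. Each side computes the session key independently.
client_key = derive_session_key(pre_master, client_random, server_random)
server_key = derive_session_key(pow(encrypted_pre_master, D, N), client_random, server_random)
print(client_key == server_key)
```

Only the holder of the server's private key can recover the client's secret. A session key that matches on both sides therefore also tells the client that it is talking to the key's owner.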

The protocol is simple but effective, and it is the most widely used secure communication protocol on the Internet. However, remember that SSL protects only from the client's browser to the server's decryption point (which is often only to the server's firewall or, slightly stronger, to the computer that runs the web application). Data are exposed from the user's keyboard to the browser and throughout the recipient's company. Blue Gem Security has developed a product called LocalSSL that encrypts data after it has been typed until the operating system delivers it to the client's browser, thus thwarting any keylogging Trojan horse that has become implanted in the user's computer to reveal everything the user types.

IPSec

As noted previously, the address space for the Internet is running out. As domain names and equipment proliferate, the original, 30-year-old, 32-bit address structure of the Internet is filling up. A new structure, called IPv6 (version 6 of the IP protocol suite), solves the addressing problem. This restructuring also offered an excellent opportunity for the Internet Engineering Task Force (IETF) to address serious security requirements.

As a part of the IPv6 suite, the IETF adopted IPSec, or the IP Security Protocol Suite. Designed to address fundamental shortcomings such as being subject to spoofing, eavesdropping, and session hijacking, the IPSec protocol defines a standard means for handling encrypted data. IPSec is implemented at the IP layer, so it affects all layers above it, in particular TCP and UDP. Therefore, IPSec requires no change to the existing large number of TCP and UDP protocols.

IPSec is somewhat similar to SSL, in that it supports authentication and confidentiality in a way that does not necessitate significant change either above it (in TCP, UDP, and the applications that use them) or below it (in the data link layer). Like SSL, it was designed to be independent of specific cryptographic protocols and to allow the two communicating parties to agree on a mutually supported set of protocols.

The basis of IPSec is what is called a security association, which is essentially the set of security parameters for a secured communication channel. It is roughly comparable to an SSL session. A security association includes

· encryption algorithm and mode (for example, DES in cipher block chaining mode)
· encryption key
· encryption parameters, such as the initialization vector
· authentication protocol and key
· lifespan of the association, to permit long-running sessions to select a new cryptographic key as often as needed
· address of the opposite end of the association
· sensitivity level of protected data (usable for classified data)

A host, such as a network server or a firewall, might have several security associations in effect for concurrent communications with different remote hosts. A security association is selected by a security parameter index (SPI), a data element that is essentially a pointer into a table of security associations.
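A table of security associations keyed by SPI can be sketched as a dictionary of records whose fields mirror the list above. This is a simplified sketch. Real IPSec implementations identify an inbound association by the SPI together with the destination address and protocol. The keys and all-zero IVs below are placeholders, never to be used in practice. The addresses come from documentation-only ranges.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SecurityAssociation:
    # Fields mirror the list of security association parameters; values are illustrative.
    spi: int
    peer_address: str
    cipher: str
    encryption_key: bytes
    iv: bytes
    auth_algorithm: str
    auth_key: bytes
    lifetime_seconds: int
    sensitivity: str = "unclassified"


class SADatabase:
    def __init__(self):
        self._by_spi = {}

    def add(self, sa: SecurityAssociation) -> None:
        if sa.spi in self._by_spi:
            raise ValueError(f"SPI {sa.spi:#x} already in use")
        self._by_spi[sa.spi] = sa

    def lookup(self, spi: int) -> SecurityAssociation:
        # An unknown SPI means there is no agreed way to process the packet, so drop it.
        sa = self._by_spi.get(spi)
        if sa is None:
            raise LookupError(f"no security association for SPI {spi:#x}")
        return sa


sad = SADatabase()
sad.add(SecurityAssociation(0x1001, "192.0.2.10", "3des-cbc", b"k" * 24, b"\x00" * 8,
                            "hmac-sha1", b"a" * 20, lifetime_seconds=3600))
sad.add(SecurityAssociation(0x1002, "198.51.100.7", "aes-cbc", b"k" * 16, b"\x00" * 16,
                            "hmac-sha1", b"a" * 20, lifetime_seconds=28800))
print(sad.lookup(0x1002).peer_address)
```

Because each packet carries only the SPI, the bulky parameters never travel with the data. Both ends simply index the same agreed-upon record.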

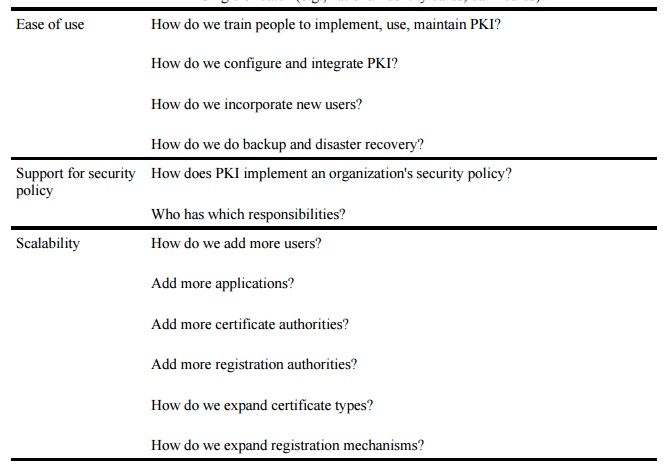

The fundamental data structures of IPSec are the AH (authentication header) and the ESP (encapsulated security payload). The ESP replaces (includes) the conventional TCP header and data portion of a packet, as shown in Figure 7-27. The physical header and trailer depend on the data link and physical layer communications medium, such as Ethernet.

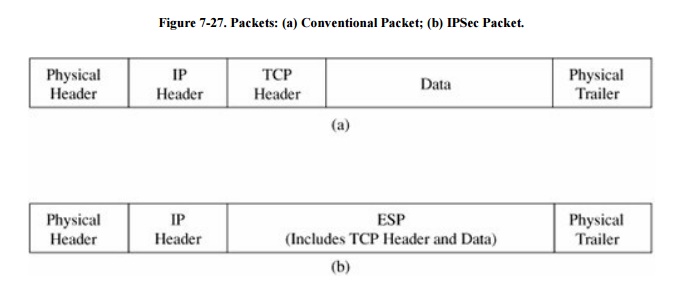

The ESP contains both an authenticated portion and an encrypted portion, as shown in Figure 7-28. The sequence number is incremented by one for each packet transmitted to the same address using the same SPI, to preclude packet replay attacks. The payload data is the actual data of the packet. Because some encryption or other security mechanisms require blocks of certain sizes, the padding and padding length fields contain the padding and the amount of padding needed to bring the payload data to an appropriate length. The next header field indicates the type of payload data. The authentication field is used for authentication of the entire object.
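The replay check enabled by the sequence number can be sketched with the sliding-window technique described for ESP in RFC 4303. The sketch is simplified in two ways. First, it assumes the packet's authentication has already been verified; a real receiver updates the window only after the integrity check passes. Second, it ignores extended 64-bit sequence numbers.

```python
class ReplayWindow:
    """Receiver-side anti-replay check over the most recent `size` sequence numbers."""

    def __init__(self, size: int = 64):
        self.size = size
        self.highest = 0   # highest sequence number accepted so far
        self.bitmap = 0    # bit i set => sequence number (highest - i) already accepted

    def accept(self, seq: int) -> bool:
        if seq == 0:
            return False                     # ESP sequence numbers start at 1
        if seq > self.highest:               # newer than anything seen: slide the window
            shift = seq - self.highest
            self.bitmap = ((self.bitmap << shift) | 1) & ((1 << self.size) - 1)
            self.highest = seq
            return True
        offset = self.highest - seq
        if offset >= self.size:
            return False                     # too old to check: reject
        if self.bitmap & (1 << offset):
            return False                     # already seen: a replay
        self.bitmap |= 1 << offset           # late but new: accept and remember
        return True


window = ReplayWindow()
results = [window.accept(seq) for seq in (1, 3, 2, 2, 3, 200, 100, 150)]
print(results)
```

The window lets packets that arrive out of order (such as 2 after 3) through. Duplicates, and packets too old to be checked, are rejected.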

As with most cryptographic applications, the critical element is key management. IPSec addresses this need with ISAKMP, the Internet Security Association and Key Management Protocol. Like SSL, ISAKMP requires that a distinct key be generated for each security association. The ISAKMP protocol is simple, flexible, and scalable. In IPSec, ISAKMP is implemented through IKE, the Internet Key Exchange. IKE provides a way to agree on and manage protocols, algorithms, and keys. For key exchange between unrelated parties, IKE uses the Diffie-Hellman scheme (also described in Chapter 2).

In Diffie-Hellman, the two parties, X and Y, publicly agree on a large prime $p$ and a base $g$. Each party then chooses a secret exponent, $x$ for X and $y$ for Y, and sends $g$ raised to that exponent to the other. That is, X sends $g^x$ and Y sends $g^y$. Each then raises what it receives to the power it kept: Y computes $(g^x)^y$ and X computes $(g^y)^x$. These are the same value, so, voilà, they share a secret: $(g^x)^y = (g^y)^x = g^{xy}$. (The computation is slightly more complicated, being done modulo $p$, so that an attacker who sees $g^x$ and $g^y$ cannot easily recover $x$, $y$, or the shared secret; doing so requires solving the discrete logarithm problem.) With their shared secret, the two parties now exchange identities and certificates to authenticate those identities. Finally, they derive a shared cryptographic key and enter a security association.
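The exchange is short enough to run directly. This minimal sketch follows the description above with toy parameters. The prime 2^127 - 1 and base 3 were chosen for readability, not security; IKE uses standardized groups with much larger, carefully chosen primes. The final hashing step is also a simplification of how IKE actually derives keys.

```python
import hashlib
import secrets

# Public parameters known to everyone (toy values; not a standardized IKE group).
p = 2**127 - 1    # a Mersenne prime
g = 3


def make_keypair():
    exponent = secrets.randbelow(p - 3) + 2   # private exponent, kept secret
    return exponent, pow(g, exponent, p)     # only g^exponent mod p is sent


x, gx = make_keypair()    # X keeps x and sends gx to Y
y, gy = make_keypair()    # Y keeps y and sends gy to X

shared_at_x = pow(gy, x, p)    # X computes (g^y)^x mod p
shared_at_y = pow(gx, y, p)    # Y computes (g^x)^y mod p

# Both sides hash the shared secret into a symmetric key (simplified; IKE's real
# derivation also mixes in nonces and uses a negotiated pseudorandom function).
session_key = hashlib.sha256(shared_at_x.to_bytes(16, "big")).digest()
print(shared_at_x == shared_at_y)
```

Plain Diffie-Hellman establishes a shared secret but says nothing about who is on the other end. An active attacker could run one exchange with each party. This is why the parties go on to exchange identities and certificates before trusting the key.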

The key exchange is very efficient: The exchange can be accomplished in two messages, with an optional two more messages for authentication. Because this is a public key method, only two keys are needed for each pair of communicating parties. IKE has submodes for authentication (initiation) and for establishing new keys in an existing security association.

IPSec can establish cryptographic sessions with many purposes, including VPNs, applications, and lower-level network management (such as routing). The protocols of IPSec have been published and extensively scrutinized. Work on the protocols began in 1992. They were first published in 1995, and they were finalized in 1998 (RFCs 2401-2409) [KEN98].

Signed Code

As we have seen, someone can place malicious active code on a web site to be downloaded by unsuspecting users. Running with the privilege of whoever downloads it, such active code can do serious damage, from deleting files to sending e-mail messages to fetching Trojan horses to performing subtle and hard-to-detect mischief. Today's trend is to allow applications and updates to be downloaded from central sites, so the risk of downloading something malicious is growing.

A partial (not complete) approach to reducing this risk is to use signed code. A trustworthy third party appends a digital signature to a piece of code, supposedly connoting more trustworthy code. A signature structure in a PKI helps to validate the signature.
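The mechanics of checking a signature on downloaded code can be sketched as follows. The sketch again assumes textbook RSA with fixed primes in place of a real signature scheme. It leaves out the certificate chain that would tell the user whose key this is. The driver bytes are a hypothetical placeholder.

```python
import hashlib

# Toy signer key: textbook RSA with fixed, publicly known primes (insecure).
P, Q = 2**61 - 1, 2**89 - 1
N, E = P * Q, 65537                  # signer's public key, published via a certificate
D = pow(E, -1, (P - 1) * (Q - 1))    # signer's private key


def code_digest(code: bytes) -> int:
    # Sign a hash of the code rather than the code itself
    return int.from_bytes(hashlib.sha256(code).digest(), "big") % N


def sign_code(code: bytes) -> int:
    return pow(code_digest(code), D, N)


def verify_code(code: bytes, signature: int) -> bool:
    return pow(signature, E, N) == code_digest(code)


driver = b"hypothetical device driver, version 1.0"
signature = sign_code(driver)
print(verify_code(driver, signature))              # unmodified code
print(verify_code(driver + b"\x90", signature))    # one appended byte
```

A valid signature establishes only that the code is unchanged since the holder of the private key signed it. Whether that signer deserves trust is a separate question.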

Who might the trustworthy party be? A well-known manufacturer would be recognizable as a code signer. But what of the small and virtually unknown manufacturer of a device driver or a code add-in? If the code vendor is unknown, it does not help that the vendor signs its own code; miscreants can post their own signed code, too.

In March 2001, Verisign announced it had erroneously issued two code-signing certificates under the name of Microsoft Corp. to someone who purported to be, but was not, a Microsoft employee. These certificates were in circulation for almost two months before the error was detected. Even after Verisign detected the error and canceled the certificates, someone would know the certificates had been revoked only by checking Verisign's list. Most people would not question a code download signed by Microsoft.

Encrypted E-mail

An electronic mail message is much like the back of a postcard. The mail carrier (and everyone in the postal system through whose hands the card passes) can read not just the address but also everything in the message field. To protect the privacy of the message and routing information, we can use encryption to protect the confidentiality of the message and perhaps its integrity.

As we have seen in several other applications, the encryption is the easy part; key management is the more difficult issue. The two dominant approaches to key management are the use of a hierarchical, certificate-based PKI solution for key exchange and the use of a flat, individual-to-individual exchange method. The hierarchical method is called S/MIME and is employed by many commercial mail-handling programs, such as Microsoft Exchange or Eudora. The individual method is called PGP and is a commercial add-on. We look more carefully at encrypted e-mail in a later section of this chapter.
