Chapter: Security in Computing : Designing Trusted Operating Systems

Evaluation

Most system consumers (that

is, users or system purchasers) are not security experts. They need the

security functions, but they are not usually capable of verifying the accuracy

or adequacy of test coverage, checking the validity of a proof of correctness,

or determining in any other way that a system correctly implements a security

policy. Thus, it is useful (and sometimes essential) to have an independent third

party evaluate an operating system's security. Independent experts can review

the requirements, design, implementation, and evidence of assurance for a

system. Because it is helpful to have a standard approach for an evaluation,

several schemes have been devised for structuring an independent review. In

this section, we examine three different approaches: from the United States,

from Europe, and a scheme that combines several known approaches.

U.S. "Orange Book" Evaluation

In the late 1970s, the U.S.

Department of Defense (DoD) defined a set of distinct, hierarchical levels of

trust in operating systems. Published in a document [DOD85]

that has become known informally as the "Orange Book," the Trusted

Computer System Evaluation Criteria (TCSEC) provides the criteria for an

independent evaluation. The National Computer Security Center (NCSC), an

organization within the National Security Agency, guided and sanctioned the

actual evaluations.

The levels of trust are described as four

divisions, A, B, C, and D, where A has the most comprehensive degree of

security. Within a division, additional distinctions are denoted with numbers;

the higher numbers indicate tighter security requirements. Thus, the complete

set of ratings ranging from lowest to highest assurance is D, C1, C2, B1, B2,

B3, and A1. Table 5-7 (from Appendix D

of [DOD85]) shows the security

requirements for each of the seven evaluated classes of NCSC certification.

(Class D has no requirements because it denotes minimal protection.)

The requirements reveal four clusters of ratings:

D, with no requirements

C1/C2/B1, requiring security

features common to many commercial operating systems

B2, requiring a precise proof

of security of the underlying model and a narrative specification of the

trusted computing base

B3/A1, requiring more

precisely proven descriptive and formal designs of the trusted computing base

These clusters do not imply

that classes C1, C2, and B1 are equivalent. However, there are substantial

increases of stringency between B1 and B2, and between B2 and B3 (especially in

the assurance area). To see why, consider the requirements for C1, C2, and B1.

An operating system developer might be able to add security measures to an

existing operating system in order to qualify for these ratings. However, security

must be included in the design of the operating system for a B2 rating.

Furthermore, the design of a B3 or A1 system must begin with construction and

proof of a formal model of security. Thus, the distinctions between B1 and B2

and between B2 and B3 are significant.
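Because the seven ratings form a strict hierarchy, they can be modeled as an ordered type. The following is a minimal sketch, not part of the TCSEC itself; the names `TcsecClass`, `CLUSTERS`, `meets`, and `cluster_index` are hypothetical and chosen only for illustration.

```python
from enum import IntEnum


class TcsecClass(IntEnum):
    """TCSEC ratings, ordered from lowest to highest assurance."""
    D = 0
    C1 = 1
    C2 = 2
    B1 = 3
    B2 = 4
    B3 = 5
    A1 = 6


# The four clusters of ratings described in the text.
CLUSTERS = [
    {TcsecClass.D},
    {TcsecClass.C1, TcsecClass.C2, TcsecClass.B1},
    {TcsecClass.B2},
    {TcsecClass.B3, TcsecClass.A1},
]


def meets(achieved: TcsecClass, required: TcsecClass) -> bool:
    """Return True if the achieved rating is at least the required rating."""
    return achieved >= required


def cluster_index(rating: TcsecClass) -> int:
    """Return the position (0 to 3) of the cluster containing the rating."""
    for index, cluster in enumerate(CLUSTERS):
        if rating in cluster:
            return index
    raise ValueError(f"unknown rating: {rating!r}")
```

Under this sketch, a purchaser requiring C2 would accept a B2 product, since `meets(TcsecClass.B2, TcsecClass.C2)` holds, while the cluster index makes the larger jumps in stringency (B1 to B2, B2 to B3) visible as a change of cluster.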

Let us look at each class of

security described in the TCSEC. In our descriptions, terms in quotation marks

have been taken directly from the Orange Book to convey the spirit of the

evaluation criteria.

Class D: Minimal Protection

This class is applied to

systems that have been evaluated for a higher category but have failed the

evaluation. No security characteristics are needed for a D rating.

Class C1: Discretionary Security Protection

C1 is intended for an

environment of cooperating users processing data at the same level of

sensitivity. A system evaluated as C1 separates users from data. Controls must

appear sufficient to implement access limitation, allowing users to

protect their own data. The controls of a C1 system may not have been

stringently evaluated; the evaluation may be based more on the presence of

certain features. To qualify for a C1 rating, a system must have a domain that

includes security functions and that is protected against tampering. A keyword

in the classification is "discretionary." A user is

"allowed" to decide when the controls apply, when they do not, and

which named individuals or groups are allowed access.

Class C2: Controlled Access Protection

A C2 system still implements

discretionary access control, although the granularity of control is finer. The

audit trail must be capable of tracking each individual's access (or attempted

access) to each object.

Class B1: Labeled Security Protection

All certifications in the B

division include nondiscretionary access control. At the B1 level, each

controlled subject and object must be assigned a security level. (For class B1,

the protection system does not need to control every object.)

Each controlled object must

be individually labeled for security level, and these labels must be used as

the basis for access control decisions. The access control must be based on a

model employing both hierarchical levels and nonhierarchical categories. (The

military model is an example of such a system: its hierarchical levels are

unclassified, classified, secret, and top secret, and its nonhierarchical

categories are need-to-know category sets.) The mandatory access policy is the

Bell-La Padula model. Thus, a B1 system must implement Bell-La Padula controls

for all accesses, with user discretionary access controls to further limit access.
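The label-based decision described for class B1 can be sketched in a few lines. This is an illustrative model only, assuming the four military levels named in the text and treating the discretionary check as a boolean supplied by the caller; `Label`, `may_read`, `may_write`, and `access_allowed` are hypothetical names, not TCSEC terminology.

```python
from dataclasses import dataclass

# Hierarchical levels from the military example, lowest to highest.
LEVELS = ["unclassified", "classified", "secret", "top secret"]


@dataclass(frozen=True)
class Label:
    """Security label: one hierarchical level plus a set of categories."""
    level: str
    categories: frozenset = frozenset()

    def dominates(self, other: "Label") -> bool:
        """True if this label's level is at least the other's and its
        categories include all of the other's categories."""
        return (LEVELS.index(self.level) >= LEVELS.index(other.level)
                and self.categories >= other.categories)


def may_read(subject: Label, obj: Label) -> bool:
    """Simple security property: a subject may not read up."""
    return subject.dominates(obj)


def may_write(subject: Label, obj: Label) -> bool:
    """*-property: a subject may not write down."""
    return obj.dominates(subject)


def access_allowed(subject: Label, obj: Label, mode: str,
                   discretionary_ok: bool) -> bool:
    """Mandatory check first; discretionary controls can only restrict further."""
    if mode == "read":
        mandatory_ok = may_read(subject, obj)
    elif mode == "write":
        mandatory_ok = may_write(subject, obj)
    else:
        raise ValueError(f"unsupported mode: {mode}")
    return mandatory_ok and discretionary_ok
```

For example, a subject labeled secret with category `crypto` could read a classified `crypto` object but not a top secret one, and a discretionary denial would block the read even when the mandatory check passes.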

Class B2: Structured Protection

The major enhancement for B2

is a design requirement: The design and implementation of a B2 system must

enable a more thorough testing and review. A verifiable top-level design must be

presented, and testing must confirm that the system implements this design. The

system must be internally structured into "well-defined largely

independent modules." The principle of least privilege is to be enforced

in the design. Access control policies must be enforced on all objects and

subjects, including devices. Analysis of covert channels is required.

Class B3: Security Domains

The security functions of a B3 system must be

small enough for extensive testing. A high-level design must be complete and

conceptually simple, and a "convincing argument" must exist that the

system implements this design. The implementation of the design must

"incorporate significant use of layering, abstraction, and information

hiding."

The security functions must

be tamperproof. Furthermore, the system must be "highly resistant to

penetration." There is also a requirement that the system audit facility

be able to identify when a violation of security is imminent.

Class A1: Verified Design

Class A1 requires a formally verified

system design. The capabilities of the system are the same as for class B3. But

in addition there are five important criteria for class A1 certification: (1) a

formal model of the protection system and a proof of its consistency and

adequacy,

(2) a formal top-level

specification of the protection system, (3) a demonstration that the top-level

specification corresponds to the model,

(4) an implementation

"informally" shown to be consistent with the specification, and (5)

formal analysis of covert channels.

European ITSEC Evaluation

The TCSEC was developed in

the United States, but representatives from several European countries also

recognized the need for criteria and a methodology for evaluating

security-enforcing products. The European efforts culminated in the ITSEC, the

Information Technology Security Evaluation Criteria [ITS91b].

Origins of the ITSEC

England, Germany, and France

independently began work on evaluation criteria at approximately the same time.

Both England and Germany published their first drafts in 1989; France had its

criteria in limited review when these three nations, joined by the Netherlands,

decided to work together to develop a common criteria document. We examine

the British and German efforts separately, followed by their combined output.

German Green Book

The (then West) German

Information Security Agency (GISA) produced a catalog of criteria [GIS88] five years after the first use of the

U.S. TCSEC. Keeping with tradition, the security community began to call the

document the German Green Book because of its green cover. The German criteria

identified eight basic security functions, deemed sufficient to enforce a broad

spectrum of security policies:

identification and

authentication: unique and certain association of an identity with a subject or

object

administration of rights: the

ability to control the assignment and revocation of access rights between

subjects and objects

verification of rights:

mediation of the attempt of a subject to exercise rights with respect to an

object

audit: a record of

information on the successful or attempted unsuccessful exercise of rights

object reuse: reusable

resources reset in such a way that no information flow occurs in contradiction

to the security policy

error recovery: identification

of situations from which recovery is necessary and invocation of an appropriate

action

continuity of service:

identification of functionality that must be available in the system and what

degree of delay or loss (if any) can be tolerated

data communication security:

peer entity authentication, control of access to communications resources, data

confidentiality, data integrity, data origin authentication, and nonrepudiation

Note that the first five of

these eight functions closely resemble the U.S. TCSEC, but the last three move

into entirely new areas: integrity of data, availability, and a range of

communications concerns.

Like the U.S. DoD, GISA did

not expect ordinary users (that is, those who were not security experts) to

select appropriate sets of security functions, so ten functional classes were

defined. Classes F1 through F5 corresponded closely to the functionality

requirements of U.S. classes C1 through B3. (Recall that the functionality

requirements of class A1 are identical to those of B3.) Class F6 was for high

data and program integrity requirements, class F7 was appropriate for high

availability, and classes F8 through F10 relate to data communications

situations. The German method addressed assurance by defining eight quality levels,

Q0 through Q7, corresponding roughly to the assurance requirements of U.S.

TCSEC levels D through A1, respectively. For example,

The evaluation of a Q1 system is merely

intended to ensure that the implementation more or less enforces the security

policy and that no major errors exist.

The goal of a Q3 evaluation

is to show that the system is largely resistant to simple penetration attempts.

To achieve assurance level

Q6, it must be formally proven that the highest specification level meets all

the requirements of the formal security policy model. In addition, the source

code is analyzed precisely.

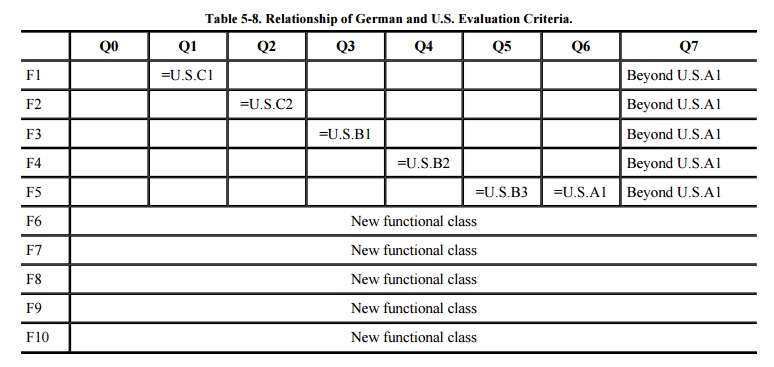

These functionality classes and assurance

levels can be combined in any way, producing potentially 80 different

evaluation results, as shown in Table 5-8.

The region in the upper-right portion of the table represents requirements in

excess of U.S. TCSEC requirements, showing higher assurance requirements for a

given functionality class. Even though assurance and functionality can be

combined in any way, a low-assurance multilevel system (for example, F5, Q1)

may have limited practical applicability. The Germans did not assert

that all possibilities would necessarily be useful, however.
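The figure of 80 follows from pairing each of the ten functionality classes with each of the eight quality levels. A short sketch of that arithmetic, using hypothetical variable names:

```python
from itertools import product

# Ten functionality classes (F1..F10) and eight quality levels (Q0..Q7).
FUNCTIONALITY_CLASSES = [f"F{i}" for i in range(1, 11)]
QUALITY_LEVELS = [f"Q{i}" for i in range(0, 8)]

# Every (functionality, quality) pairing is a possible evaluation result.
COMBINATIONS = list(product(FUNCTIONALITY_CLASSES, QUALITY_LEVELS))

print(len(COMBINATIONS))  # 10 * 8 = 80
```

The low-assurance multilevel pairing mentioned in the text, `("F5", "Q1")`, is one of these 80 entries, even though it may be of little practical use.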

Another significant

contribution of the German approach was to support evaluations by independent,

commercial evaluation facilities.

British Criteria

The British criteria

development was a joint activity between the U.K. Department of Trade and

Industry (DTI) and the Ministry of Defence (MoD). The first public version,

published in 1989 [DTI89a], was issued

in several volumes.

The original U.K. criteria

were based on the "claims" language, a metalanguage by which a vendor

could make claims about functionality in a product. The claims language

consisted of lists of action phrases

and target phrases with parameters.

For example, a typical action phrase might look like this:

This

product can [not] determine … [using the mechanism described in paragraph n of

this document] …

The parameters product and n

are, obviously, replaced with specific references to the product to be

evaluated. An example of a target phrase is

… the

access-type granted to a [user, process] in respect of a(n) object.

These two phrases can be

combined and parameters replaced to produce a claim about a product.

This

access control subsystem can determine the read access granted to all subjects

in respect to system files.
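The way an action phrase and a target phrase combine can be illustrated with simple string templates. This is only a sketch: the templates are simplified paraphrases of the phrases quoted above (the optional mechanism reference is omitted), and `ACTION_PHRASE`, `TARGET_PHRASE`, and `make_claim` are hypothetical names, not part of the U.K. criteria.

```python
# Simplified templates modeled on the example phrases in the text.
ACTION_PHRASE = "This {product} can{negation} determine {target}."
TARGET_PHRASE = "the {access_type} access granted to {subject} in respect to {obj}"


def make_claim(product: str, access_type: str, subject: str, obj: str,
               negated: bool = False) -> str:
    """Fill in the target phrase, then embed it in the action phrase."""
    target = TARGET_PHRASE.format(access_type=access_type,
                                  subject=subject, obj=obj)
    negation = " not" if negated else ""
    return ACTION_PHRASE.format(product=product, negation=negation,
                                target=target)


claim = make_claim("access control subsystem", "read",
                   "all subjects", "system files")
print(claim)
```

With these parameters the sketch reproduces the sample claim given in the text. An evaluator's job would then be to verify that the product actually behaves as the filled-in sentence asserts.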

The claims language was

intended to provide an open-ended structure by which a vendor could assert

qualities of a product and independent evaluators could verify the truth of

those claims. Because of the generality of the claims language, there was no

direct correlation of U.K. and U.S. evaluation levels.

In addition to the claims language, there were

six levels of assurance evaluation, numbered L1 through L6, corresponding

roughly to U.S. assurance C1 through A1 or German Q1 through Q6.

The claims language was

intentionally open-ended because the British felt it was impossible to predict

which functionality manufacturers would choose to put in their products. In

this regard, the British differed from Germany and the United States, who

thought manufacturers needed to be guided to include specific functions with

precise functionality requirements. The British envisioned certain popular

groups of claims being combined into bundles that could be reused by many

manufacturers.

The British defined and

documented a scheme for Commercial Licensed Evaluation Facilities (CLEFs) [DTI89b], with precise requirements for the

conduct and process of evaluation by independent commercial organizations.

Other Activities

As if these two efforts were

not enough, Canada, Australia, and France were also working on evaluation

criteria. The similarities among these efforts were far greater than their

differences. It was as if each profited by building upon the predecessors'

successes.

Three difficulties, which

were really different aspects of the same problem, became immediately apparent.

Comparability. It was not

clear how the different evaluation criteria related. A German F2/E2 evaluation

was structurally quite similar to a U.S. C2 evaluation, but an F4/E7 or F6/E3

evaluation had no direct U.S. counterpart. It was not obvious which U.K. claims

would correspond to a particular U.S. evaluation level.

Transferability. Would a

vendor get credit for a German F2/E2 evaluation in a context requiring a U.S.

C2? Would the stronger F2/E3 or F3/E2 be accepted?

Marketability. Could a vendor

be expected to have a product evaluated independently in the United States,

Germany, Britain, Canada, and Australia? How many evaluations would a vendor

support? (Many vendors suggested that they would be interested in at most one

because the evaluations were costly and time consuming.)

For reasons including these

problems, Britain, Germany, France, and the Netherlands decided to pool their

knowledge and synthesize their work.

ITSEC: Information Technology Security Evaluation Criteria

In 1991 the Commission of the

European Communities sponsored the work of these four nations to produce a

harmonized version for use by all European Union member nations. The result was

a good amalgamation.

The ITSEC preserved the

German functionality classes F1-F10, while allowing the flexibility of the

British claims language. There is similarly an effectiveness component to the evaluation, corresponding roughly to

the U.S. notion of assurance and to the German E0-E7 effectiveness levels.

A vendor (or other

"sponsor" of an evaluation) has to define a target of evaluation (TOE), the item that is the evaluation's

focus. The TOE is considered in the context of an operational environment (that

is, an expected set of threats) and security enforcement requirements. An

evaluation can address either a product (in general distribution for use in a

variety of environments) or a system (designed and built for use in a specified

setting). The sponsor or vendor states the following information:

system security policy or

rationale: why this product (or system) was built

specification of security-enforcing functions: security

properties of the product (or system)

definition of the mechanisms of the product (or system) by

which security is enforced

a claim about the strength of the mechanisms

the target evaluation level in terms of

functionality and effectiveness

The evaluation proceeds to

determine the following aspects:

suitability of functionality: whether the chosen functions implement the

desired security features

binding of functionality: whether the chosen functions work together

synergistically

vulnerabilities: whether vulnerabilities exist either in the construction of the

TOE or how it will work in its intended environment

ease of use

strength of mechanism: the

ability of the TOE to withstand direct attack

The results of these

subjective evaluations determine whether the evaluators agree that the product

or system deserves its proposed functionality and effectiveness rating.

Significant Departures from the Orange Book

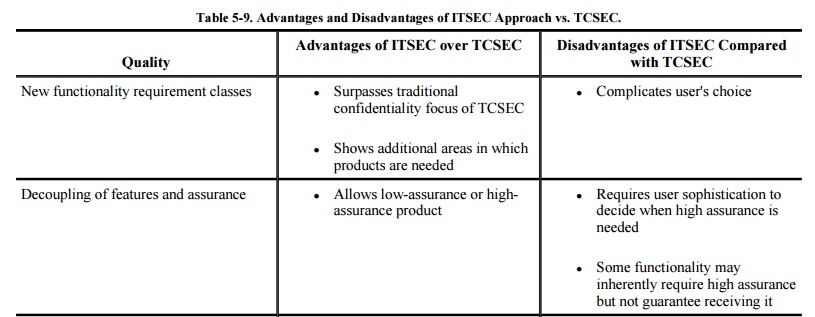

The European ITSEC offers the following significant

changes compared with the Orange Book. These variations have both advantages

and disadvantages, as listed in Table 5-9.

U.S. Combined Federal Criteria

In 1992, partly in response

to other international criteria efforts, the United States began a successor to

the TCSEC, which had been written over a decade earlier. This successor, the Combined Federal Criteria [NSA92], was produced jointly by the National

Institute of Standards and Technology (NIST) and the National Security Agency

(NSA) (which formerly handled criteria and evaluations through its National

Computer Security Center, the NCSC).

The team creating the Combined Federal Criteria

was strongly influenced by Canada's criteria [CSS93],

released in draft status just before the combined criteria effort began.

Although many of the issues addressed by other countries' criteria were the

same for the United States, there was a compatibility issue that did not affect

the Europeans, namely, the need to be fair to vendors that had already passed

U.S. evaluations at a particular level or that were planning for or in the

middle of evaluations. Within that context, the new U.S. evaluation model was

significantly different from the TCSEC. The combined criteria draft resembled

the European model, with some separation between features and assurance.

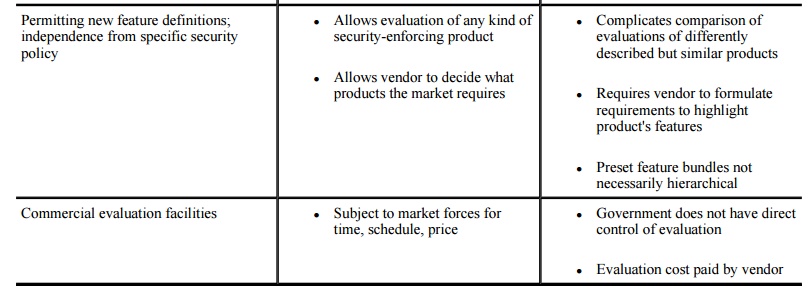

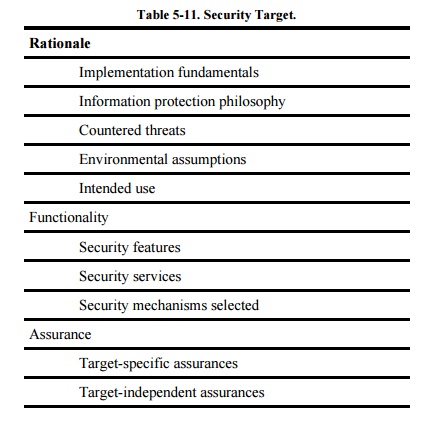

The Combined Federal Criteria introduced the

notions of security target (not to be confused with a target of evaluation, or

TOE) and protection profile. A user would generate a protection profile to detail the protection needs, both functional

and assurance, for a specific situation or a generic scenario. This user might

be a government sponsor, a commercial user, an organization representing many

similar users, a product vendor's marketing representative, or a product

inventor. The protection profile would be an abstract specification of the

security aspects needed in an information technology (IT) product. The

protection profile would contain the elements listed in Table 5-10.

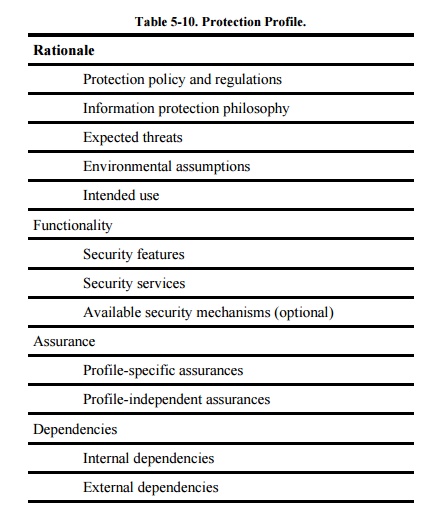

In response to a protection profile, a vendor

might produce a product that, the vendor would assert, met the requirements of

the profile. The vendor would then map the requirements of the protection

profile in the context of the specific product onto a statement called a security target. As shown in Table 5-11, the security target matches the

elements of the protection profile.

The security target then

becomes the basis for the evaluation. The target details which threats are

countered by which features, to what degree of assurance and using which

mechanisms. The security target outlines the convincing argument that the

product satisfies the requirements of the protection profile. Whereas the protection

profile is an abstract description of requirements, the security target is a

detailed specification of how each of those requirements is met in the specific

product.
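The relationship between the two documents can be pictured as a coverage check: every abstract requirement in the protection profile should be matched by a concrete mechanism in the security target. The sketch below assumes a deliberately simplified representation; `ProtectionProfile`, `SecurityTarget`, `unmet_requirements`, and the sample requirement names are hypothetical and do not reflect the actual document formats.

```python
from dataclasses import dataclass, field


@dataclass
class ProtectionProfile:
    """Abstract statement of what protection is needed."""
    name: str
    requirements: set  # requirement identifiers, e.g. "object reuse"


@dataclass
class SecurityTarget:
    """Product-specific statement of how each requirement is met."""
    product: str
    # requirement identifier -> description of the mechanism that meets it
    mechanisms: dict = field(default_factory=dict)


def unmet_requirements(profile: ProtectionProfile,
                       target: SecurityTarget) -> set:
    """Return profile requirements for which the target names no mechanism."""
    return {req for req in profile.requirements
            if not target.mechanisms.get(req)}


profile = ProtectionProfile("example profile", {"object reuse", "audit"})
target = SecurityTarget("example product",
                        {"object reuse": "storage is cleared before reallocation"})
print(unmet_requirements(profile, target))  # {'audit'}
```

An empty result would mean the security target at least claims to address every requirement; whether those claims hold is what the evaluation itself must establish.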

The criteria document also

included long lists of potential requirements (a subset of which could be

selected for a particular protection profile), covering topics from object

reuse to accountability and from covert channel analysis to fault tolerance.

Much of the work in specifying precise requirement statements came from the

draft version of the Canadian criteria.

The U.S. Combined Federal

Criteria was issued only once, in initial draft form. After receiving a round

of comments, the editorial team announced that the United States had decided to

join forces with the Canadians and the editorial board from the ITSEC to

produce the Common Criteria for the

entire world.

Common Criteria

The Common Criteria [CCE94, CCE98]

approach closely resembles the U.S. Federal Criteria (which, of course, was

heavily influenced by the ITSEC and Canadian efforts). It preserves the

concepts of security targets and protection profiles. The U.S. Federal

Criteria were intended to have packages of protection requirements that were

complete and consistent for a particular type of application, such as a network

communications switch, a local area network, or a stand-alone operating system.

The example packages received special attention in the Common Criteria.

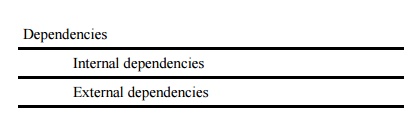

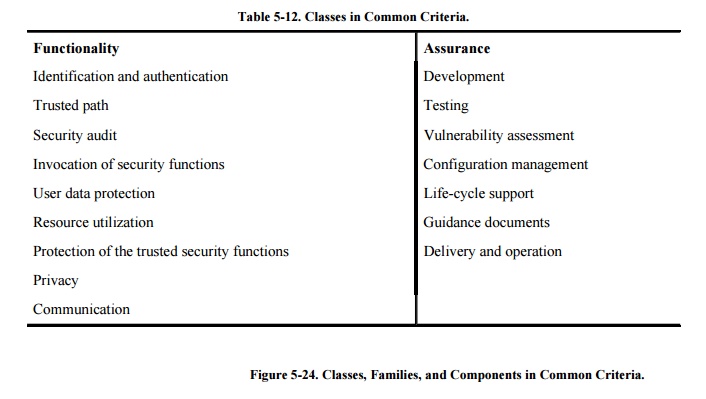

The Common Criteria defined topics of interest

to security, called classes, shown in Table 5-12. Under

each of these classes, the criteria defined families of functions or assurance needs,

and from those families, individual components, as shown in Figure 5-24.

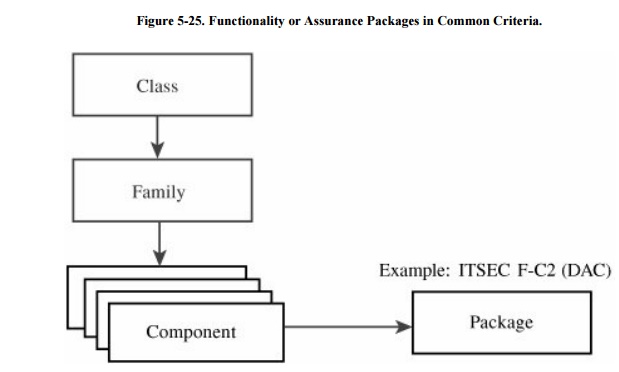

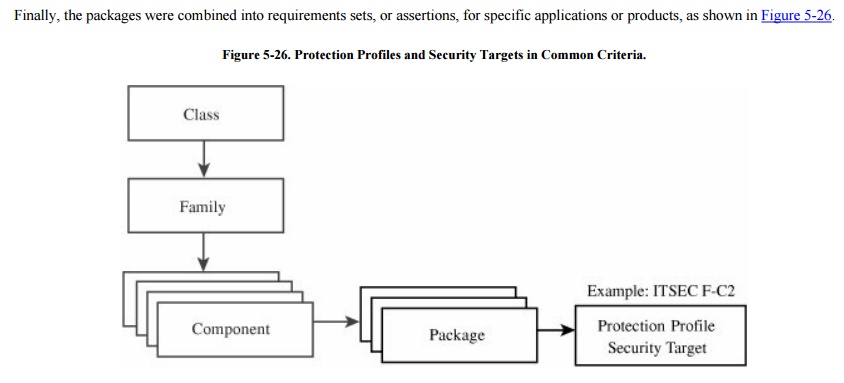

Individual components were then combined into

packages of components that met some comprehensive requirement (for

functionality) or some level of trust (for assurance), as shown in Figure 5-25.
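The class, family, component, and package hierarchy can be sketched as nested data structures. This is an illustration of the structure only; the type names and the placeholder entries ("Class X", "Family X1", and so on) are hypothetical and are not identifiers from the Common Criteria.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Component:
    """Smallest selectable requirement."""
    name: str


@dataclass
class Family:
    """Group of related components within a class."""
    name: str
    components: list = field(default_factory=list)


@dataclass
class SecurityClass:
    """Topic of interest to security, containing families."""
    name: str
    families: list = field(default_factory=list)


@dataclass
class Package:
    """Selection of components, possibly drawn from several classes."""
    name: str
    components: list = field(default_factory=list)


def all_components(classes: list) -> set:
    """Collect every component defined under the given classes."""
    return {c for cls in classes for fam in cls.families for c in fam.components}


def package_is_valid(package: Package, classes: list) -> bool:
    """A package may use only components that some class actually defines."""
    return set(package.components) <= all_components(classes)


# Placeholder hierarchy for illustration.
x1a, x1b, y1a = Component("X1.a"), Component("X1.b"), Component("Y1.a")
classes = [
    SecurityClass("Class X", [Family("Family X1", [x1a, x1b])]),
    SecurityClass("Class Y", [Family("Family Y1", [y1a])]),
]
package = Package("example package", [x1a, y1a])
print(package_is_valid(package, classes))  # True
```

Modeling a package as a flat selection of components, rather than as a subtree of one class, reflects the idea that a package cuts across classes to meet one comprehensive requirement or level of trust.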

Summary of Evaluation Criteria

The criteria were intended to

provide independent security assessments in which we could have some

confidence. Have the criteria development efforts been successful? For some, it

is too soon to tell. For others, the answer lies in the number and kinds of

products that have passed evaluation and how well the products have been

accepted in the marketplace.

Evaluation Process

We can examine the evaluation

process itself, using our own set of objective criteria. For instance, it is

fair to say that there are several desirable qualities we would like to see in

an evaluation, including the following:

Extensibility. Can the evaluation be extended as the product is enhanced?

Granularity. Does the evaluation look at the product at the right level of

detail?

Speed. Can the evaluation be done quickly enough to allow the product to

compete in the marketplace?

Thoroughness. Does the evaluation look at all relevant aspects of the product?

Objectivity. Is the evaluation independent of the reviewer's opinions? That is,

will two different reviewers give the same rating to a product?

Portability. Does the evaluation apply to the product no matter what platform

the product runs on?

Consistency. Do similar

products receive similar ratings? Would one product evaluated by different

teams receive the same results?

Compatibility. Could a

product be evaluated similarly under different criteria? That is, does one

evaluation have aspects that are not examined in another?

Exportability. Could an

evaluation under one scheme be accepted as meeting all or certain requirements

of another scheme?

Using these characteristics,

we can see that the applicability and extensibility of the TCSEC are somewhat

limited. Compatibility is being addressed by combination of criteria, although

the experience with the ITSEC has shown that simply combining the words of

criteria documents does not necessarily produce a consistent understanding of

them. Consistency has been an important issue, too. It was unacceptable for a

vendor to receive different results after bringing the same product to two

different evaluation facilities or to one facility at two different times. For

this reason, the British criteria documents stressed consistency of evaluation

results; this characteristic was carried through to the ITSEC and its companion

evaluation methodology, the ITSEM. Even though speed, thoroughness, and

objectivity are considered to be three essential qualities, in reality

evaluations still take a long time relative to a commercial computer product

delivery cycle of 6 to 18 months.

Criteria Development Activities

Evaluation criteria continue

to be developed and refined. If you are interested in doing evaluations, in

buying an evaluated product, or in submitting a product for evaluation, you

should follow events closely in the evaluation community. You can use the

evaluation goals listed above to help you decide whether an evaluation is

appropriate and which kind of evaluation it should be.

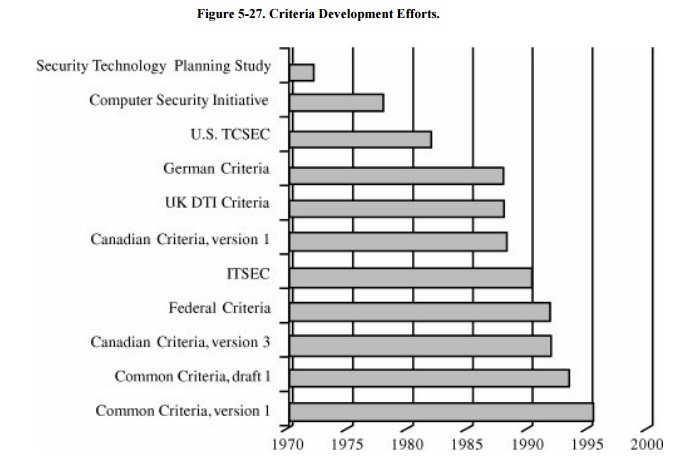

It is instructive to look back at the evolution

of evaluation criteria documents, too. Figure 5-27

shows the timeline for different criteria publications; remember that the

writing preceded the publication by one or more years. The figure begins with

Anderson's original Security Technology Planning Study [AND72], calling for methodical, independent

evaluation. To see whether progress is being made, look at the dates when

different criteria documents were published; earlier documents influenced the

contents and philosophy of later ones.

The criteria development

activities have made significant progress since 1983. The U.S. TCSEC was based

on the state of best practice known around 1980. For this reason, it draws

heavily from the structured programming paradigm that was popular throughout

the 1970s. Its major difficulty was its prescriptive manner; it forced its

model on all developments and all types of products. The TCSEC applied most

naturally to monolithic, stand-alone, multiuser operating systems, not to the

heterogeneous, distributed, networked environment based largely on individual

intelligent workstations that followed in the next decade.

Experience with Evaluation Criteria

To date, criteria efforts

have drawn the attention of the military, but they have not led to

much commercial acceptance of trusted products. The computer security research

community is heavily dominated by defense needs because much of the funding for

security research is derived from defense departments. Ware [WAR95] points out the following about the

initial TCSEC:

It was driven by the U.S.

Department of Defense.

It focused on threat as

perceived by the U.S. Department of Defense.

It was based on a U.S.

Department of Defense concept of operations, including cleared personnel,

strong respect for authority and management, and generally secure physical

environments.

It had little relevance to

networks, LANs, WANs, Internets, client-server distributed architectures, and

other more recent modes of computing.

When the TCSEC was

introduced, there was an implicit contract between the U.S. government and

vendors, saying that if vendors built products and had them evaluated, the

government would buy them. Anderson [AND82]

warned how important it was for the government to keep its end of this bargain.

The vendors did their part by building numerous products: KSOS, PSOS, Scomp,

KVM, and Multics. But unfortunately, the products are now only of historical

interest because the U.S. government did not follow through and create the

market that would encourage those vendors to continue and other vendors to

join. Had many evaluated products been on the market, support and usability

would have been more adequately addressed, and the chance for commercial

adoption would have been good. Without government support or perceived

commercial need, almost no commercial acceptance of any of these products has

occurred, even though they have been developed to some of the highest quality

standards.

Schaefer [SCH04a] gives a thorough description of the

development and use of the TCSEC. In his paper he explains how the higher

evaluation classes became virtually unreachable for several reasons, and thus

the world has been left with less trustworthy systems than before the start of

the evaluation process. The TCSEC's almost exclusive focus on confidentiality

would have permitted serious integrity failures (as obliquely described in [SCH89b]).

On the other hand, some major

vendors are actively embracing low and moderate assurance evaluations: As of

May 2006, there are 78 products at EAL2, 22 at EAL3, 36 at EAL4, 2 at EAL5 and

1 at EAL7. Product types include operating systems, firewalls, antivirus software, printers, and

intrusion detection products. (The current list of completed evaluations

(worldwide) is maintained at www.commoncriteriaportal.org.) Some vendors have announced corporate commitments to

evaluation, noting that independent evaluation is a mark of quality that will always be a stronger selling

point than so-called emphatic assertion

(when a vendor makes loud claims about the strength of a product, with no

independent evidence to substantiate those claims). Current efforts in

criteria-writing support objectives, such as integrity and availability, as

strongly as confidentiality. This approach can allow a vendor to identify a

market niche and build a product for it, rather than building a product for a paper

need (that is, the dictates of the evaluation criteria) not matched by

purchases. Thus, there is reason for optimism regarding criteria and

evaluations. But realism requires everyone to accept that the market, not a

criteria document, will dictate what is desired and delivered. As Sidebar 5-7 describes,

secure systems are sometimes seen as a marketing niche: not part of the

mainstream product line, and that can only be bad for security.

It is generally believed that the market will

eventually choose quality products. The evaluation principles described above

were derived over time; empirical evidence shows us that they can produce

high-quality, reliable products deserving our confidence. Thus, evaluation

criteria and related efforts have not been in vain, especially as we see

dramatic increases in security threats and the corresponding increased need for

trusted products. However, it is often easier and cheaper for product

proponents to speak loudly than to present clear evidence of trust. We caution

you to look for solid support for the trust you seek, whether that support be

in test and review results, evaluation ratings, or specialized assessment.
