Chapter: Computer Networks

Transport layer

1. DATA COMPRESSION

· Multimedia data, comprising audio, video, and still images, now makes up the majority of traffic on the Internet by many estimates. This is a relatively recent development: it may be hard to believe now, but there was no YouTube before 2005.
· Part of what has made the widespread transmission of multimedia across networks possible is advances in compression technology.
· Because multimedia data is consumed mostly by humans using their senses (vision and hearing) and processed by the human brain, there are unique challenges to compressing it.
· You want to keep the information that is most important to a human, while getting rid of anything that does not improve the human's perception of the visual or auditory experience.
· Hence, both computer science and the study of human perception come into play.
· In this section we'll look at some of the major efforts in representing and compressing multimedia data.
· To get a sense of how important compression has been to the spread of networked multimedia, consider the following example.
· A high-definition TV screen has something like 1080 × 1920 pixels, each of which has 24 bits of color information, so each frame is 1080 × 1920 × 24 ≈ 50 Mb. If you want to send 24 frames per second, that would be over 1 Gbps.
· That's a lot more than most Internet users can get access to, by a good margin.
· By contrast, modern compression techniques can get a reasonably high-quality HDTV signal down to the range of 10 Mbps, a reduction of two orders of magnitude, and well within the reach of many broadband users.
· Similar compression gains apply to lower-quality video such as YouTube clips: web video could never have reached its current popularity without compression to make all those entertaining videos fit within the bandwidth of today's networks.
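The arithmetic in the HDTV example is easy to check. The short Python sketch below recomputes it. The 10 Mbps compressed rate is the figure quoted above, and the variable names are only illustrative.

```python
# Back-of-the-envelope bit rate for uncompressed HDTV, using the figures above.
WIDTH, HEIGHT = 1920, 1080      # pixels
BITS_PER_PIXEL = 24             # color information per pixel
FRAMES_PER_SECOND = 24

bits_per_frame = WIDTH * HEIGHT * BITS_PER_PIXEL
raw_bps = bits_per_frame * FRAMES_PER_SECOND

COMPRESSED_BPS = 10_000_000     # the ~10 Mbps figure quoted for compressed HDTV

print(f"bits per frame: {bits_per_frame / 1e6:.1f} Mb")   # 49.8 Mb
print(f"raw bit rate:   {raw_bps / 1e9:.2f} Gbps")        # 1.19 Gbps
print(f"reduction:      about {raw_bps / COMPRESSED_BPS:.0f}x")
```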

1.1 Lossless Compression Techniques

In many ways, compression is inseparable from data encoding. That is, when we think about how to encode a piece of data in a set of bits, we might just as well think about how to encode the data in the smallest set of bits possible. For example, suppose a block of data is made up of the 26 symbols A through Z, and all of these symbols are equally likely to occur in the block. In that case, encoding each symbol in 5 bits is the best you can do with a fixed number of bits per symbol, since 2^5 = 32 is the lowest power of 2 above 26. If, however, the symbol R occurs 50% of the time, then it would be a good idea to use fewer bits to encode R than any of the other symbols.

· In general, if you know the relative probability that each symbol will occur in the data, then you can assign a different number of bits to each possible symbol in a way that minimizes the number of bits it takes to encode a given block of data.
· This is the essential idea of Huffman codes, one of the important early developments in data compression.
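Below is a minimal sketch of building a Huffman code in Python. It assumes that symbol frequencies are simply counted from the block itself. The sample block is made up so that R makes up half of it, mirroring the example above. The heapq-based construction is the textbook greedy algorithm, not the code of any particular compressor.

```python
import heapq
from collections import Counter
from itertools import count

def huffman_code(data):
    """Build a Huffman code (symbol -> bit string) from symbol frequencies in data."""
    freq = Counter(data)
    if len(freq) == 1:                      # degenerate case: one distinct symbol
        return {next(iter(freq)): "0"}
    tie = count()                           # tie-breaker so the heap never compares dicts
    heap = [(n, next(tie), {sym: ""}) for sym, n in freq.items()]
    heapq.heapify(heap)
    while len(heap) > 1:
        # Merge the two least frequent subtrees, prefixing their codes with 0 and 1.
        n1, _, left = heapq.heappop(heap)
        n2, _, right = heapq.heappop(heap)
        merged = {s: "0" + c for s, c in left.items()}
        merged.update({s: "1" + c for s, c in right.items()})
        heapq.heappush(heap, (n1 + n2, next(tie), merged))
    return heap[0][2]

block = "RRRRRRRRABCDEFGH"                  # R makes up half of the block
code = huffman_code(block)
encoded = "".join(code[s] for s in block)
print(len(code["R"]), len(encoded), "bits vs", 5 * len(block), "at 5 bits/symbol")
```

With 8 R's and one each of eight other letters, R gets a 1-bit code and the other letters get 4 bits each. The block therefore takes 40 bits instead of 80 at a fixed 5 bits per symbol.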

Run Length Encoding
· Run length encoding (RLE) is a compression technique with a brute-force simplicity.
· The idea is to replace consecutive occurrences of a given symbol with only one copy of the symbol, plus a count of how many times that symbol occurs; hence the name "run length."
· For example, the string AAABBCDDDD would be encoded as 3A2B1C4D.
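Here is a small Python sketch of the run length encoding just described, producing the count-then-symbol format of the example. The decoder assumes symbols are never digits. That is an assumption of this sketch, not part of RLE itself.

```python
from itertools import groupby

def rle_encode(s):
    """Replace each run of a repeated symbol with '<count><symbol>'."""
    return "".join(f"{len(list(run))}{sym}" for sym, run in groupby(s))

def rle_decode(encoded):
    """Inverse of rle_encode; counts may have several digits, symbols may not be digits."""
    out, digits = [], ""
    for ch in encoded:
        if ch.isdigit():
            digits += ch
        else:
            out.append(ch * int(digits))
            digits = ""
    return "".join(out)

print(rle_encode("AAABBCDDDD"))   # 3A2B1C4D
```

RLE can also expand data that has no runs. For example, ABCD becomes 1A1B1C1D.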

1.2 Differential Pulse Code Modulation
· Another simple lossless compression algorithm is Differential Pulse Code Modulation (DPCM).
· The idea here is to first output a reference symbol and then, for each symbol in the data, to output the difference between that symbol and the reference symbol.
· For example, using symbol A as the reference symbol, the string AAABBCDDDD would be encoded as A0001123333, since A is the same as the reference symbol, B has a difference of 1 from the reference symbol, and so on.
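The scheme as described above compares every symbol against a single reference symbol. It can be sketched in Python as follows. The decoder assumes that every difference is a single non-negative digit, as in the example. Practical DPCM works on numeric samples and usually takes the difference from a prediction, such as the previous sample, rather than from a fixed reference.

```python
def dpcm_encode(s):
    """Output the first symbol as the reference, then each symbol's difference from it."""
    ref = s[0]
    return ref + "".join(str(ord(ch) - ord(ref)) for ch in s)

def dpcm_decode(encoded):
    """Assumes each difference is one non-negative digit, as in the example."""
    ref = encoded[0]
    return "".join(chr(ord(ref) + int(d)) for d in encoded[1:])

print(dpcm_encode("AAABBCDDDD"))   # A0001123333
```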

Dictionary-Based Methods
· The final lossless compression method we consider is the dictionary-based approach, of which the Lempel-Ziv (LZ) compression algorithm is the best known.
· The Unix compress and gzip commands use variants of the LZ algorithm.
· The idea of a dictionary-based compression algorithm is to build a dictionary (table) of variable-length strings (think of them as common phrases) that you expect to find in the data. Each of these strings is then replaced, wherever it appears in the data, by the corresponding index into the dictionary.
· For example, instead of working with individual characters in text data, you could treat each word as a string and output the index in the dictionary for that word.
· To further elaborate on this example, the word "compression" has the index 4978 in one particular dictionary; it is the 4978th word in /usr/share/dict/words.
· To compress a body of text, each time the string "compression" appears, it would be replaced by 4978.
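The word-index example uses a fixed dictionary. LZ-family algorithms instead build the dictionary as they go. As one concrete instance, here is a sketch of LZW, the LZ variant used by Unix compress. It assumes single-byte (Latin-1) characters, and the sample message is just a classic test string.

```python
def lzw_compress(text):
    """LZW: grow a dictionary of strings seen so far and emit dictionary indices."""
    dictionary = {chr(i): i for i in range(256)}      # start with all single bytes
    current, out = "", []
    for ch in text:
        candidate = current + ch
        if candidate in dictionary:
            current = candidate                       # keep extending the match
        else:
            out.append(dictionary[current])           # emit the longest known string
            dictionary[candidate] = len(dictionary)   # learn the new phrase
            current = ch
    if current:
        out.append(dictionary[current])
    return out

def lzw_decompress(codes):
    """Rebuild the same dictionary on the fly from the code stream."""
    dictionary = {i: chr(i) for i in range(256)}
    prev = dictionary[codes[0]]
    out = [prev]
    for code in codes[1:]:
        if code in dictionary:
            entry = dictionary[code]
        else:                                         # code defined by this very step
            entry = prev + prev[0]
        out.append(entry)
        dictionary[len(dictionary)] = prev + entry[0]
        prev = entry
    return "".join(out)

msg = "TOBEORNOTTOBEORTOBEORNOT"
codes = lzw_compress(msg)
print(len(msg), "symbols ->", len(codes), "codes")
```

For this 24-character message the compressor emits 16 codes. The gain grows on longer, more repetitive input.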

1.3 Image Representation and Compression

The use of digital imagery has increased in recent years; this growth was spawned by the invention of graphical displays, not high-speed networks. As a result, the need for standard representation formats and compression algorithms for digital imagery data has grown more and more critical. In response to this need, the ISO defined a digital image format known as JPEG, named after the Joint Photographic Experts Group that designed it. (The "Joint" in JPEG stands for a joint ISO/ITU effort.)

· JPEG is the most widely used format for still images in use today.
· At the heart of the definition of the format is a compression algorithm, which we describe below.
· Many techniques used in JPEG also appear in MPEG, the set of standards for video compression and transmission created by the Moving Picture Experts Group.
· Digital images are made up of pixels (hence the megapixels quoted in digital camera advertisements).
· Each pixel represents one location in the two-dimensional grid that makes up the image, and for color images, each pixel has some numerical value representing a color.
· There are lots of ways to represent colors, referred to as color spaces; the one most people are familiar with is RGB (red, green, blue).

2.

INTRODUCTION TO JPEG

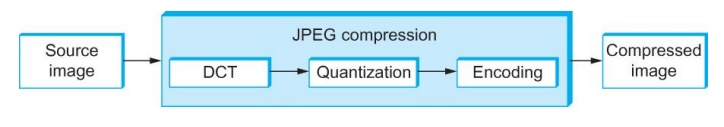

2.1 JPEG Compression

DCT Phase

DCT is a

transformation closely related to the fast Fourier transform (FFT). It takes an

8 ├Ś 8

matrix of pixel values as input and outputs an 8 ├Ś 8 matrix of frequency

coefficients.

You can

think of the input matrix as a 64-point signal that is defined in two spatial

dimensions (x and y); DCT breaks this signal into 64 spatial frequencies. DCT,

along with its inverse, which is performed during decompression, is defined by

the following formulas:

where

pixel(x, y) is the grayscale value of the pixel at position (x, y) in the 8├Ś8

block being compressed; N = 8 in this case
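To make the formulas concrete, here is a direct Python transcription of the forward and inverse DCT above for N = 8. It is deliberately simple and slow (O(N^4)); real JPEG codecs use fast factorizations. The sketch only shows two things: the transform is lossless up to floating-point rounding, and a flat block puts all of its energy into the DC coefficient.

```python
import math

N = 8

def c(k):
    """Normalization factor C(k) from the DCT definition."""
    return 1 / math.sqrt(2) if k == 0 else 1.0

def dct2(pixel):
    """Forward 2-D DCT of an N x N block, written directly from the formula."""
    return [[(1 / math.sqrt(2 * N)) * c(i) * c(j) * sum(
                pixel[x][y]
                * math.cos((2 * x + 1) * i * math.pi / (2 * N))
                * math.cos((2 * y + 1) * j * math.pi / (2 * N))
                for x in range(N) for y in range(N))
             for j in range(N)] for i in range(N)]

def idct2(coef):
    """Inverse 2-D DCT, reconstructing pixel values from the coefficients."""
    return [[(1 / math.sqrt(2 * N)) * sum(
                c(i) * c(j) * coef[i][j]
                * math.cos((2 * x + 1) * i * math.pi / (2 * N))
                * math.cos((2 * y + 1) * j * math.pi / (2 * N))
                for i in range(N) for j in range(N))
             for y in range(N)] for x in range(N)]

# A flat gray block has no spatial variation, so all energy lands in DCT(0, 0).
flat = [[128] * N for _ in range(N)]
coef = dct2(flat)
print(round(coef[0][0], 3))   # 1024.0
```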

Quantization Phase

The second phase of JPEG is where the compression becomes lossy. DCT does not itself lose information; it just transforms the image into a form that makes it easier to know what information to remove. Quantization is easy to understand: it is simply a matter of dropping the insignificant bits of the frequency coefficients.

· The basic quantization equation is

$$\mathrm{QuantizedValue}(i,j) = \mathrm{IntegerRound}\left(\frac{\mathrm{DCT}(i,j)}{\mathrm{Quantum}(i,j)}\right)$$

where IntegerRound rounds its argument to the nearest integer and Quantum(i, j) is the entry of the quantization table for coefficient (i, j).

· Decompression is then simply defined as

$$\mathrm{DCT}(i,j) = \mathrm{QuantizedValue}(i,j) \times \mathrm{Quantum}(i,j)$$
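Here is a sketch of the quantization and decompression equations in Python. IntegerRound is implemented as round-half-away-from-zero, which is an assumption about the intended tie-breaking rule. The 2 × 2 coefficient and quantum values are hypothetical, not taken from a JPEG quantization table.

```python
import math

def integer_round(x):
    """Nearest integer, halves rounded away from zero (assumed tie rule).
    Python's built-in round() would round halves to even instead."""
    return math.floor(x + 0.5) if x >= 0 else math.ceil(x - 0.5)

def quantize(dct, quantum):
    """QuantizedValue(i, j) = IntegerRound(DCT(i, j) / Quantum(i, j))."""
    return [[integer_round(d / q) for d, q in zip(drow, qrow)]
            for drow, qrow in zip(dct, quantum)]

def dequantize(values, quantum):
    """DCT(i, j) = QuantizedValue(i, j) * Quantum(i, j); rounding error is not recovered."""
    return [[v * q for v, q in zip(vrow, qrow)]
            for vrow, qrow in zip(values, quantum)]

dct = [[1024.0, -31.4], [22.7, -3.9]]   # hypothetical coefficients
quantum = [[16, 11], [12, 24]]          # hypothetical quanta
q = quantize(dct, quantum)
print(q)                                # [[64, -3], [2, 0]]
print(dequantize(q, quantum))           # [[1024, -33], [24, 0]]
```

The −31.4 coefficient comes back as −33, and the small −3.9 coefficient comes back as 0. That difference is exactly the information quantization throws away.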

Encoding Phase
§ The final phase of JPEG encodes the quantized frequency coefficients in a compact form.
§ This results in additional compression, but this compression is lossless.
§ Starting with the DC coefficient in position (0, 0), the coefficients are processed in zigzag sequence.
§ Along this zigzag, a form of run length encoding is used: RLE is applied only to the 0 coefficients, which is significant because many of the later coefficients are 0.
§ The individual coefficient values are then encoded using a Huffman code.
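The zigzag scan and the zero-run idea can be sketched as follows. This is a simplification: nonzero coefficients are coded as hypothetical (zeros_skipped, value) pairs followed by an "EOB" (end of block) marker. Real JPEG additionally groups values into size categories before Huffman coding them. The sample block is made up.

```python
def zigzag_order(n=8):
    """(row, col) positions of an n x n block in zigzag order, starting at DC (0, 0)."""
    order = []
    for s in range(2 * n - 1):                 # s = row + col picks an anti-diagonal
        diagonal = [(r, s - r) for r in range(n) if 0 <= s - r < n]
        # Alternate direction: odd diagonals run down-left, even ones up-right.
        order.extend(diagonal if s % 2 else reversed(diagonal))
    return order

def zero_runs(values):
    """Hypothetical (zeros_skipped, value) coding of AC coefficients, ending with 'EOB'."""
    out, zeros = [], 0
    for v in values:
        if v == 0:
            zeros += 1
        else:
            out.append((zeros, v))
            zeros = 0
    out.append("EOB")          # end of block: everything after the last pair is zero
    return out

block = [[0] * 8 for _ in range(8)]     # made-up quantized coefficients
block[0][0], block[0][1], block[2][0], block[1][1] = 64, -3, 2, 1
scan = [block[r][c] for r, c in zigzag_order()]
print(scan[0], zero_runs(scan[1:]))     # 64 [(0, -3), (1, 2), (0, 1), 'EOB']
```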

3. INTRODUCTION TO MPEG

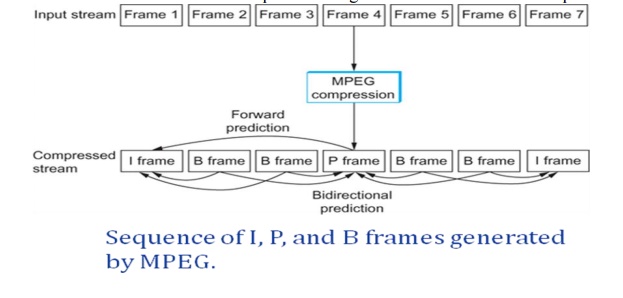

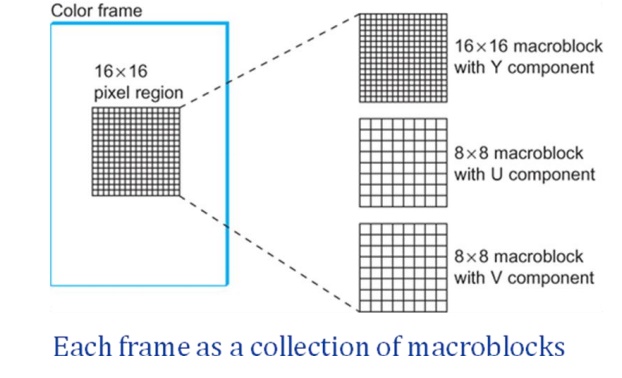

3.1Video Compression (MPEG)

We now turn our attention to the MPEG format,

named after the Moving Picture Experts Group that defined it.

To a

first approximation, a moving picture (i.e., video) is simply a succession of

still imagesŌĆöalso called frames or picturesŌĆödisplayed at some video rate.

Each of

these frames can be compressed using the same DCT-based technique used in JPEG

3.2 Frame Types

MPEG

takes a sequence of video frames as input and compresses them into three types

of frames, called I frames (intrapicture), P frames (predicted picture), and B

frames (bidirectional predicted picture).

Each

frame of input is compressed into one of these three frame types. I frames can

be thought of as reference frames; they are self-contained, depending on

neither earlier frames nor later frames.
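The text defines only I frames precisely. This sketch assumes the usual MPEG rules, which are not stated above: a P frame is predicted from the preceding I or P frame, and a B frame from the nearest I or P frame on each side. It lists those reference frames for a hypothetical frame pattern.

```python
def reference_frames(pattern):
    """For each frame (display order), the indices of the I/P frames it is predicted from."""
    anchors = [k for k, t in enumerate(pattern) if t in "IP"]
    deps = []
    for k, t in enumerate(pattern):
        before = [a for a in anchors if a < k]
        after = [a for a in anchors if a > k]
        if t == "I":
            deps.append([])                        # self-contained
        elif t == "P":
            deps.append(before[-1:])               # previous I or P frame
        else:
            deps.append(before[-1:] + after[:1])   # B: nearest anchor on each side
    return deps

gop = "IBBPBBPBBI"                                 # hypothetical frame pattern
for k, (t, d) in enumerate(zip(gop, reference_frames(gop))):
    print(k, t, d)
```

A B frame needs the following reference frame. The encoder therefore has to send that later I or P frame before the B frames that depend on it, so transmission order differs from display order.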

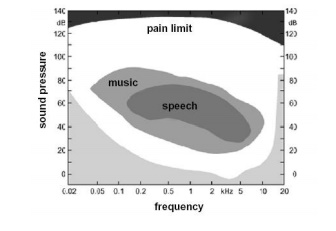

4. INTRODUCTION TO MP3

The most

common compression technique used to create CD-quality audio is based on the

perceptual encoding technique. This type of audio needs at least 1.411 Mbps,

which cannot be sent over the Internet without compression. MP3 (MPEG audio

layer 3) uses this technique.
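The 1.411 Mbps figure follows from the CD audio format: 44.1 kHz sampling, 16 bits per sample, and two channels. The comparison with a 128 kbps stream uses an assumed typical MP3 bit rate, not a number from the text.

```python
SAMPLE_RATE = 44_100     # samples per second, per channel
BITS_PER_SAMPLE = 16
CHANNELS = 2             # stereo

cd_bps = SAMPLE_RATE * BITS_PER_SAMPLE * CHANNELS
print(cd_bps)                                # 1411200, about 1.411 Mbps

TYPICAL_MP3_BPS = 128_000                    # assumed typical MP3 bit rate
print(f"about {cd_bps / TYPICAL_MP3_BPS:.0f}x smaller at 128 kbps")
```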

The basic

perceptual model used in MP3 is that louder frequencies mask out adjacent

quieter ones. People can not hear a quiet sound at one frequency if there is a

loud sound at another

This can

be explained better by the following figures presented by Rapha Depke

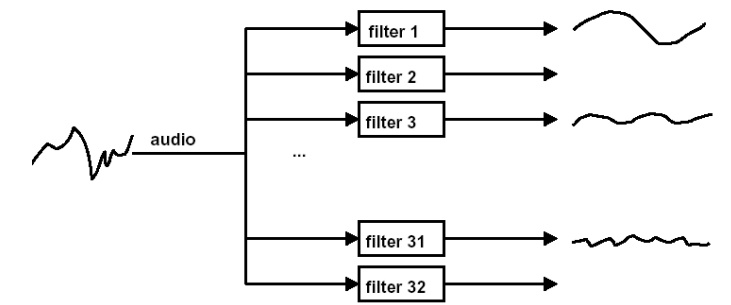

The audio

signal passes through 32 filters with different frequency

Joint stereo coding takes advantage of the fact that both channels of a

stereo channel pair contain similar information

These

stereophonic irrelevancies and redundancies are exploited to reduce the total

bitrate

Joint

stereo is used in cases where only low bitrates are available but stereo

signals are desired.
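MP3 can use two joint stereo techniques: mid/side (M/S) coding and intensity stereo. The Python sketch below covers M/S coding, using made-up sample values. When the two channels are similar, the side signal is small and needs few bits, yet the transform is exactly reversible.

```python
def ms_encode(left, right):
    """Mid/side transform: mid carries what the channels share, side what differs."""
    mid = [(lt + rt) / 2 for lt, rt in zip(left, right)]
    side = [(lt - rt) / 2 for lt, rt in zip(left, right)]
    return mid, side

def ms_decode(mid, side):
    """Exact inverse of ms_encode: L = M + S and R = M - S."""
    left = [m + s for m, s in zip(mid, side)]
    right = [m - s for m, s in zip(mid, side)]
    return left, right

# Made-up samples from two nearly identical channels.
left = [0.50, 0.62, 0.71, 0.40]
right = [0.48, 0.60, 0.70, 0.41]
mid, side = ms_encode(left, right)
print("mid: ", [round(m, 3) for m in mid])
print("side:", [round(s, 3) for s in side])
```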

Encoder

A typical solution has two nested iteration loops:
· Distortion/noise control loop (outer loop)
· Rate control loop (inner loop)

Rate control loop
· For a given bit rate allocation, adjust the quantization steps to achieve the bit rate.
· This loop checks whether the number of bits resulting from the coding operation exceeds the number of bits available to code a given block of data.
· If it does, the quantization step is increased to reduce the total number of bits. This can be achieved by adjusting the global gain.
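The inner rate control loop can be sketched as follows. Only the loop structure follows the description above. The bit-cost model `bits_needed` is hypothetical; a real encoder counts the actual Huffman-coded bits. The 2**0.25 growth per iteration is also an assumption, chosen to mimic quarter-power-of-two step sizes.

```python
import math

def bits_needed(coeffs, step):
    """Hypothetical bit-cost model: each nonzero quantized value costs about
    log2(|q| + 1) bits of magnitude plus 1 sign bit."""
    total = 0
    for c in coeffs:
        q = int(abs(c) / step)               # magnitude after quantization
        if q:
            total += math.ceil(math.log2(q + 1)) + 1
    return total

def rate_control(coeffs, bit_budget, step=1.0, growth=2 ** 0.25):
    """Inner loop: while the block needs more bits than are available,
    increase the quantization step (coarser quantization, fewer bits)."""
    while bits_needed(coeffs, step) > bit_budget:
        step *= growth
    return step, bits_needed(coeffs, step)

coeffs = [120.0, -64.5, 33.0, 8.2, -4.1, 2.0, 0.7, 0.3]   # made-up spectral values
step, used = rate_control(coeffs, bit_budget=20)
print(f"step = {step:.2f}, bits = {used}")
```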

5. CRYPTOGRAPHY

What Is Cryptography

Cryptography

is the science of hiding information in plain sight, in order to conceal it

from unauthorized parties.

Substitution

cipher first used by Caesar for battlefield communications

Encryption Terms and Operations

Plaintext

ŌĆō an original message

Ciphertext

ŌĆō an encrypted message

Encryption

ŌĆō the process of transforming plaintext into ciphertext (also encipher)

Decryption

ŌĆō the process of transforming ciphertext into plaintext (also decipher)

Encryption key ŌĆō the text value required to

encrypt and decrypt data

Encryption methodologies

Substitution Cipher

Plaintext characters are substituted to form

ciphertext

ŌĆ£AŌĆØ becomes ŌĆ£RŌĆØ, ŌĆ£BŌĆØ becomes ŌĆ£GŌĆØ, etc.

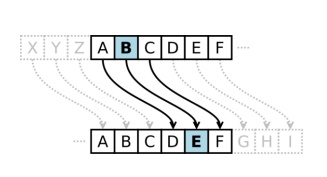

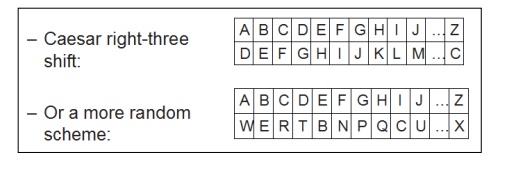

Character rotation

Caesar rotated three to the right (A > D,

B > E, C > F, etc.)

A table or formula is used

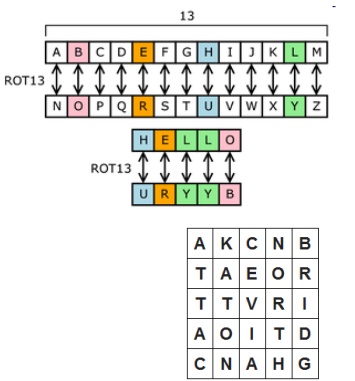

ROT13 is a Caesar cipher

Image from Wikipedia (link Ch 5a)

Subject to frequency analysis attack
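Character rotation is a one-line table lookup in Python. This sketch uses `str.maketrans`; the function name `caesar` is only illustrative.

```python
import string

def caesar(text, shift):
    """Rotate each letter `shift` places (negative shifts decrypt); other characters pass through."""
    shift %= 26
    up, low = string.ascii_uppercase, string.ascii_lowercase
    table = str.maketrans(up + low, up[shift:] + up[:shift] + low[shift:] + low[:shift])
    return text.translate(table)

print(caesar("ABC", 3))       # DEF
print(caesar("DEF", -3))      # ABC
print(caesar("Hello", 13))    # Uryyb
print(caesar("Uryyb", 13))    # Hello
```

A shift of 3 gives Caesar's cipher. A shift of 13 gives ROT13, which is its own inverse because 13 + 13 = 26.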

5.1 Transposition Cipher
· Plaintext messages are transposed into ciphertext
– Plaintext: ATTACK AT ONCE VIA NORTH BRIDGE
– Write into columns going down (five letters per column)
– Read across the rows, left to right
– Ciphertext: AKCNBETAEORTTVRIAOITDCNAHG
· Subject to frequency analysis attack

Monoalphabetic Cipher
· One alphabetic character is substituted for another
· Subject to frequency analysis attack
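Here is a sketch of the transposition example. It assumes five letters per column, which is the column height the example's ciphertext implies, and it drops spaces. Reading row r is simply taking every fifth letter, starting at position r.

```python
def transpose(plaintext, rows):
    """Write the letters down columns of `rows` letters, then read across the rows."""
    letters = "".join(ch for ch in plaintext.upper() if ch.isalpha())
    # Row r holds every rows-th letter starting at index r.
    return "".join(letters[r::rows] for r in range(rows))

def untranspose(ciphertext, rows):
    """Invert transpose() by refilling the rows in the same order they were read."""
    n = len(ciphertext)
    out = [""] * n
    pos = 0
    for r in range(rows):
        for idx in range(r, n, rows):
            out[idx] = ciphertext[pos]
            pos += 1
    return "".join(out)

ct = transpose("ATTACK AT ONCE VIA NORTH BRIDGE", 5)
print(ct)                      # AKCNBETAEORTTVRIAOITDCNAHG
print(untranspose(ct, 5))      # ATTACKATONCEVIANORTHBRIDGE
```

The ciphertext contains exactly the same letters as the plaintext, only reordered. Letter frequencies are therefore unchanged.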

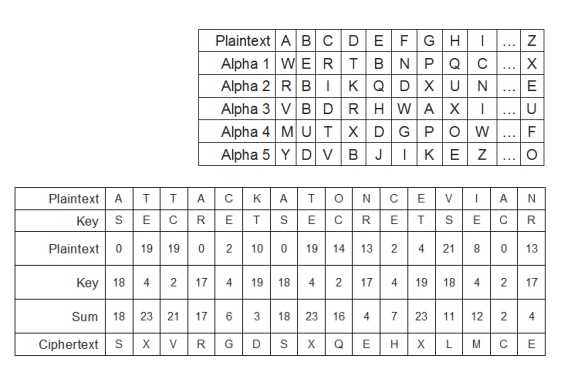

5.2 Polyalphabetic Cipher

Two or more substitution alphabets

CAGED becomes RRADB

Not subject to frequency attack

Running-key Cipher Plaintext letters

converted to numeric (A=0, B=1, etc.)

Plaintext values ŌĆ£addedŌĆØ to key values

giving ciphertext

Modulo arithmetic is used to keep results in

range 0-26

Add 26 if results < 0; subtract 26 if

results > 26 One-time Pad

Works like running key cipher, except that

key is length of plaintext, and is used only once

Highly resistant to cryptanalysis
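The running-key and one-time pad rules above can be sketched in Python as follows. The key LEMONLEMONLE is a made-up example; a real running key would be a long, non-repeating text. The `secrets` module stands in for a source of truly random pad letters.

```python
import secrets
import string

ALPHABET = string.ascii_uppercase   # A=0, B=1, ..., Z=25

def add_key(plaintext, key):
    """Encrypt: c = (p + k) mod 26, letter by letter; the key must be at least as long."""
    return "".join(ALPHABET[(ALPHABET.index(p) + ALPHABET.index(k)) % 26]
                   for p, k in zip(plaintext, key))

def sub_key(ciphertext, key):
    """Decrypt: p = (c - k) mod 26; Python's % already maps negatives into 0..25."""
    return "".join(ALPHABET[(ALPHABET.index(c) - ALPHABET.index(k)) % 26]
                   for c, k in zip(ciphertext, key))

print(add_key("ATTACKATDAWN", "LEMONLEMONLE"))   # LXFOPVEFRNHR

# One-time pad: a random key as long as the message, used for this message only.
pad = "".join(secrets.choice(ALPHABET) for _ in range(len("ATTACKATDAWN")))
ct = add_key("ATTACKATDAWN", pad)
print(sub_key(ct, pad))                          # ATTACKATDAWN
```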

5.3

Types of ecryption

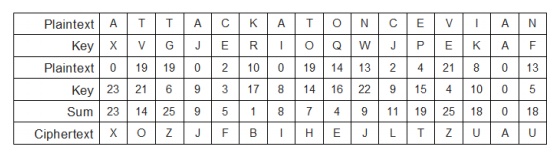

Block cipher

Encrypts blocks of data, often 128 bits

Stream cipher

Operates on a continuous stream of data

Block Ciphers

Encrypt and decrypt a block of data at a

time Typically 128 bits

Typical uses for block ciphers

Files, e-mail messages, text communications,

web Well known encryption algorithms

DES, 3DES, AES, CAST, Twofish, Blowfish,

Serpent

Block Cipher Modes of Operation

Electronic Code Book (ECB)

Cipher-block chaining (CBC)

Cipher feedback (CFB)

Output feedback (OFB)

Counter

(CTR)

5.4 Initialization Vector (IV)

Starting

block of information needed to encrypt the first block of data IV must be

random and should not be re-used

WEP wireless encryption is weak because it re-uses

the IV, in addition to making other errors

Block Cipher: Cipher-block Chaining (CBC)

Ciphertext output from each encrypted

plaintext block is used in the encryption for the next block

First block encrypted with IV (initialization

vector)

Block Cipher: Cipher Feedback (CFB)

Plaintext for block N is XORŌĆÖd with the

ciphertext from block N-1. In the first block, the plaintext XORŌĆÖd with the

encrypted IV
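To see why chaining matters, here is a sketch of ECB, CBC, and CFB in Python. The 4-byte `toy_encrypt_block` is a hypothetical stand-in for a real block cipher such as AES and offers no security. Messages are assumed to be a whole number of blocks, so no padding is shown.

```python
import os

BLOCK = 4   # toy block size in bytes (AES would use 16)

def toy_encrypt_block(block, key):
    """Hypothetical stand-in for a real block cipher (NOT secure):
    XOR with the key, then an invertible affine map on each byte."""
    return bytes(((b ^ k) * 5 + 3) % 256 for b, k in zip(block, key))

def toy_decrypt_block(block, key):
    """Inverse of toy_encrypt_block; 205 is the inverse of 5 modulo 256."""
    return bytes((((b - 3) * 205) % 256) ^ k for b, k in zip(block, key))

def xor(a, b):
    return bytes(x ^ y for x, y in zip(a, b))

def blocks(data):
    return [data[i:i + BLOCK] for i in range(0, len(data), BLOCK)]

def ecb_encrypt(data, key):
    # Each block is encrypted independently.
    return b"".join(toy_encrypt_block(p, key) for p in blocks(data))

def cbc_encrypt(data, key, iv):
    out, prev = [], iv
    for p in blocks(data):
        prev = toy_encrypt_block(xor(p, prev), key)   # chain previous ciphertext in
        out.append(prev)
    return b"".join(out)

def cbc_decrypt(data, key, iv):
    out, prev = [], iv
    for c in blocks(data):
        out.append(xor(toy_decrypt_block(c, key), prev))
        prev = c
    return b"".join(out)

def cfb_encrypt(data, key, iv):
    out, prev = [], iv
    for p in blocks(data):
        prev = xor(p, toy_encrypt_block(prev, key))   # XOR with E(previous ciphertext)
        out.append(prev)
    return b"".join(out)

def cfb_decrypt(data, key, iv):
    out, prev = [], iv
    for c in blocks(data):
        out.append(xor(c, toy_encrypt_block(prev, key)))  # CFB only uses E, never D
        prev = c
    return b"".join(out)

key, iv = os.urandom(BLOCK), os.urandom(BLOCK)   # fresh random IV per message
msg = b"SAMESAMESAME"                            # three identical blocks
print(blocks(ecb_encrypt(msg, key)))             # three equal ciphertext blocks
print(blocks(cbc_encrypt(msg, key, iv)))         # chained: blocks almost surely differ
```

Under ECB, the three identical plaintext blocks produce three identical ciphertext blocks, which leaks structure. CBC and CFB hide the repetition because each block depends on the previous ciphertext and the IV.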

5.5 Stream Ciphers
· Used to encrypt a continuous stream of data, such as an audio or video transmission
· A stream cipher is a substitution cipher that typically uses an exclusive-or (XOR) operation that can be performed very quickly by a computer.
· The most common stream cipher has historically been RC4, though it is now considered insecure.
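As an example of XOR-based stream encryption, here is a sketch of RC4's keystream generator in Python. It is checked against the widely published test vector (key "Key", plaintext "Plaintext"). It is for illustration only: RC4 has known biases and is prohibited in TLS by RFC 7465.

```python
def rc4_keystream(key):
    """RC4 key scheduling (KSA) and output generation (PRGA), yielding keystream bytes."""
    S = list(range(256))
    j = 0
    for i in range(256):                       # KSA: key-dependent permutation of S
        j = (j + S[i] + key[i % len(key)]) % 256
        S[i], S[j] = S[j], S[i]
    i = j = 0
    while True:                                # PRGA: one keystream byte per step
        i = (i + 1) % 256
        j = (j + S[i]) % 256
        S[i], S[j] = S[j], S[i]
        yield S[(S[i] + S[j]) % 256]

def xor_stream(data, key):
    """Encrypt or decrypt: XOR each byte with the next keystream byte."""
    return bytes(b ^ k for b, k in zip(data, rc4_keystream(key)))

ct = xor_stream(b"Plaintext", b"Key")
print(ct.hex().upper())                        # BBF316E8D940AF0AD3
print(xor_stream(ct, b"Key"))                  # b'Plaintext'
```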

5.6 Types of Encryption Keys

Symmetric key
· A common secret that all parties must know
· Difficult to distribute the key securely
· Used by DES, 3DES, AES, Twofish, Blowfish, IDEA, RC5

Asymmetric key
· Public/private key pair
· Openly distribute the public key to all parties
· Keep the private key secret
· Anyone can use your public key to send you a message
· Used by RSA, ElGamal, Elliptic Curve

Asymmetric Encryption Uses
· Encrypt message with recipient's public key
– Only the recipient can read it, using his or her private key
– Provides confidentiality
· Sign message
– Hash the message, then encrypt the hash with your private key
– Anyone can verify the signature using your public key
– Provides integrity and non-repudiation (the sender cannot deny authorship)
· Sign and encrypt
– Both of the above
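A textbook RSA sketch with tiny primes (61 and 53, a common classroom example) illustrates both uses above. It is not secure: real keys are thousands of bits long, and real systems add padding. SHA-256 is used as the hash here as an assumption, since the text does not name one.

```python
import hashlib

p, q = 61, 53
n = p * q                  # 3233: modulus, part of both keys
phi = (p - 1) * (q - 1)    # 3120
e = 17                     # public exponent, coprime with phi
d = pow(e, -1, phi)        # private exponent 2753 (modular inverse, Python 3.8+)

def encrypt(m):
    """Anyone can encrypt with the public key (e, n)."""
    return pow(m, e, n)

def decrypt(c):
    """Only the holder of d can decrypt."""
    return pow(c, d, n)

def digest_mod_n(message):
    """Hash the message and reduce it below n (needed only because n is tiny)."""
    return int.from_bytes(hashlib.sha256(message).digest(), "big") % n

def sign(message):
    return pow(digest_mod_n(message), d, n)                 # private key on the hash

def verify(message, signature):
    return pow(signature, e, n) == digest_mod_n(message)    # checked with public key

c = encrypt(65)
print(c, decrypt(c))                                   # 2790 65
print(verify(b"meet at noon", sign(b"meet at noon")))  # True
```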

6. SYMMETRIC KEY
· Most symmetric block ciphers are based on a Feistel cipher structure
· This structure is needed because we must be able to decrypt ciphertext to recover messages efficiently
· Block ciphers look like an extremely large substitution
· Such a substitution would need a table of 2^64 entries for a 64-bit block
· Instead, block ciphers are built from smaller building blocks
· They use the idea of a product cipher
· Horst Feistel devised the Feistel cipher
– Based on the concept of an invertible product cipher
· It partitions the input block into two halves
– and processes them through multiple rounds, which
– perform a substitution on the left data half
– based on a round function of the right half and the subkey
– then apply a permutation swapping the halves
· It implements Shannon's substitution-permutation network concept
· Design parameters:
– Block size: increasing the size improves security, but slows the cipher
– Key size: increasing the size improves security and makes exhaustive key searching harder, but may slow the cipher
– Number of rounds: increasing the number improves security, but slows the cipher
– Subkey generation: greater complexity can make analysis harder, but slows the cipher
– Round function: greater complexity can make analysis harder, but slows the cipher
· Fast software encryption/decryption and ease of analysis are more recent concerns for practical use and testing
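The Feistel structure can be sketched in a few lines of Python. The round function (built from SHA-256) and the key schedule are hypothetical. The point is that decryption runs the same network with the subkeys in reverse order, even though the round function itself is not invertible.

```python
import hashlib

MASK32 = 0xFFFFFFFF

def round_function(half, subkey):
    """Hypothetical round function F(R, K); it does not need to be invertible."""
    digest = hashlib.sha256(half.to_bytes(4, "big") + subkey).digest()
    return int.from_bytes(digest[:4], "big")

def feistel(block, subkeys):
    """Pass a 64-bit block through one Feistel round per subkey."""
    left, right = block >> 32, block & MASK32
    for k in subkeys:
        # Substitution on the left half using F(right half, subkey),
        # followed by the permutation that swaps the two halves.
        left, right = right, left ^ round_function(right, k)
    return (right << 32) | left          # undo the last swap

def encrypt(block, subkeys):
    return feistel(block, subkeys)

def decrypt(block, subkeys):
    return feistel(block, subkeys[::-1])  # same structure, subkeys reversed

subkeys = [bytes([r]) * 4 for r in range(16)]   # hypothetical key schedule: 16 rounds
ct = encrypt(0x0123456789ABCDEF, subkeys)
print(hex(ct), hex(decrypt(ct, subkeys)))
```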

7. PUBLIC-KEY

Rapidly increasing needs for flexible and secure transmission of information require new cryptographic methods.

The main disadvantage of classical cryptography is the need to send a (long) key through a super-secure channel before sending the message itself.

In secret-key (symmetric-key) cryptography, both sender and receiver share the same secret key.

In public-key cryptography there are two different keys: a public encryption key and a secret decryption key (at the receiver side).

Basic idea: if it is infeasible, from knowledge of an encryption algorithm e_k, to construct the corresponding decryption algorithm d_k, then e_k can be made public.

Toy example (telephone directory encryption):
· Start: Each user U makes public a unique telephone directory td_U to encrypt messages for U, and U is the only user to have an inverse telephone directory itd_U.
· Encryption: Each letter X of a plaintext w is replaced, using the telephone directory td_U of the intended receiver U, by the telephone number of a person whose name starts with letter X.
· Decryption: easy for U, who has the inverse telephone directory; infeasible for others.

Analogy:
· Secret-key cryptography: 1. Put the message into a box, lock it with a padlock, and send the box. 2. Send the key by a secure channel.
· Public-key cryptography: Open padlocks, a different one for each user, are freely available. Only the legitimate user has the key to his padlocks. Transmission: put the message into the box of the intended receiver and close the padlock.

Main problem of secret-key cryptography: the need for secure distribution (establishment) of secret keys ahead of transmissions.

Diffie and Hellman solved this problem in 1976 by designing a protocol for secure key establishment (distribution) over public channels.

Protocol: If two parties, Alice and Bob, want to create a common secret key, they first agree, somehow, on a large prime p and a primitive root q (mod p). They then perform the following activities through a public channel.
· Alice chooses, randomly, a large x with 1 ≤ x < p − 1 and computes X = q^x mod p.
· Bob also chooses, again randomly, a large y with 1 ≤ y < p − 1 and computes Y = q^y mod p.
· Alice and Bob exchange X and Y through a public channel, but keep x and y secret.
· Alice computes Y^x mod p and Bob computes X^y mod p, and then each of them has the key K = q^(xy) mod p.
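The protocol can be traced with small numbers. This sketch assumes toy parameters p = 23 and q = 5 (5 is a primitive root mod 23). Real deployments use primes of thousands of bits.

```python
import secrets

# Toy public parameters; real deployments use primes of 2048 bits or more.
p = 23   # prime modulus
q = 5    # primitive root mod 23 (the text's q)

def dh_exchange(x, y):
    """Return (X, Y, key computed by Alice, key computed by Bob)."""
    X = pow(q, x, p)          # Alice sends X = q^x mod p
    Y = pow(q, y, p)          # Bob sends   Y = q^y mod p
    return X, Y, pow(Y, x, p), pow(X, y, p)

print(dh_exchange(4, 3))      # (4, 10, 18, 18)

# With random secrets in the range 1 <= x, y < p - 1:
x = secrets.randbelow(p - 2) + 1
y = secrets.randbelow(p - 2) + 1
X, Y, k_alice, k_bob = dh_exchange(x, y)
print(k_alice == k_bob == pow(q, x * y, p))   # True
```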

The following man-in-the-middle attack is possible against the Diffie-Hellman key establishment protocol.
· Eve chooses an exponent z.
· Eve sends q^z to both Alice and Bob. (After that, Alice believes she has received q^y and Bob believes he has received q^x.)
· Eve intercepts q^x and q^y.
· Alice now shares the key K_A = q^(xz) mod p with Eve, and Bob shares K_B = q^(yz) mod p with Eve.
· When Alice sends a message to Bob, encrypted with K_A, Eve intercepts it, decrypts it, then encrypts it with K_B and sends it to Bob.

Without any need for secret key distribution (Shamir's no-key algorithm)

Basic assumption: Each user X has its own
· secret encryption function e_X
· secret decryption function d_X
and all these functions commute (to form a commutative cryptosystem).

Communication protocol with which Alice can send a message w to Bob:
1. Alice sends e_A(w) to Bob.
2. Bob sends e_B(e_A(w)) to Alice.
3. Alice sends d_A(e_B(e_A(w))) = e_B(w) to Bob.
4. Bob performs the decryption to get d_B(e_B(w)) = w.
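One way to get commuting encryption functions is modular exponentiation, as in Shamir's three-pass protocol. Each user X picks secret exponents a and b with a·b ≡ 1 (mod p − 1) and sets e_X(w) = w^a mod p and d_X(w) = w^b mod p. The sketch assumes that choice and a toy prime p = 2^31 − 1; the key-generation helper is hypothetical.

```python
import math
import secrets

p = 2**31 - 1    # public prime (toy size)

def make_key_pair():
    """Hypothetical helper: pick a with gcd(a, p - 1) = 1 and b = a^-1 mod (p - 1),
    so that (w^a)^b = w (mod p) by Fermat's little theorem."""
    while True:
        a = secrets.randbelow(p - 3) + 2
        if math.gcd(a, p - 1) == 1:
            return a, pow(a, -1, p - 1)

aA, bA = make_key_pair()     # Alice's secret e_A / d_A exponents
aB, bB = make_key_pair()     # Bob's secret e_B / d_B exponents

w = 123456789                # message encoded as a number, 0 < w < p
m1 = pow(w, aA, p)           # Alice -> Bob:  e_A(w)
m2 = pow(m1, aB, p)          # Bob -> Alice:  e_B(e_A(w))
m3 = pow(m2, bA, p)          # Alice -> Bob:  d_A(e_B(e_A(w))) = e_B(w)
print(pow(m3, bB, p) == w)   # Bob computes d_B(e_B(w)) = w, so this prints True
```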

8. AUTHENTICATION
· Fundamental security building block
– Basis of access control and user accountability
· The process of verifying an identity claimed by or for a system entity
· Has two steps:
– Identification: specify the identifier
– Verification: bind the entity (person) and the identifier
· Distinct from message authentication

Four means of authenticating a user's identity, based on something the individual
· knows, e.g. password, PIN
· possesses, e.g. key, token, smartcard
· is (static biometrics), e.g. fingerprint, retina
· does (dynamic biometrics), e.g. voice, signature
These can be used alone or combined. All can provide user authentication; all have issues.

Authentication Protocols
· Used to convince parties of each other's identity and to exchange session keys
· May be one-way or mutual
· Key issues are
– Confidentiality: to protect session keys
– Timeliness: to prevent replay attacks, where a valid signed message is copied and later resent
· Types of replay:
– simple replay
– repetition that can be logged
– repetition that cannot be detected
– backward replay without modification
· Countermeasures include
– use of sequence numbers (generally impractical)
– timestamps (need synchronized clocks)
– challenge/response (using a unique nonce)
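Challenge/response with a nonce can be sketched with Python's standard `hmac` and `secrets` modules. The sketch assumes the two parties already share a secret key. The `Verifier` class is hypothetical; it remembers outstanding nonces so that a replayed response is rejected.

```python
import hashlib
import hmac
import secrets

SHARED_KEY = secrets.token_bytes(32)   # assumption: key already shared by both parties

def respond(key, nonce):
    """Claimant's answer: a MAC over the challenge, proving knowledge of the key."""
    return hmac.new(key, nonce, hashlib.sha256).digest()

class Verifier:
    """Hypothetical verifier that hands out fresh nonces and accepts each one once."""

    def __init__(self, key):
        self.key = key
        self.outstanding = set()

    def challenge(self):
        nonce = secrets.token_bytes(16)        # unique, unpredictable challenge
        self.outstanding.add(nonce)
        return nonce

    def check(self, nonce, response):
        if nonce not in self.outstanding:      # unknown or already used: reject replay
            return False
        self.outstanding.discard(nonce)
        return hmac.compare_digest(respond(self.key, nonce), response)

server = Verifier(SHARED_KEY)
nonce = server.challenge()
answer = respond(SHARED_KEY, nonce)
print(server.check(nonce, answer))   # True
print(server.check(nonce, answer))   # False: the same response replayed later
```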

One-Way Authentication

┬Ę

required when sender & receiver are not in

communications at same time (eg. email)

┬Ę

have header in clear so can be delivered by email

system

┬Ę

may want contents of body protected & sender

authenticated

┬Ę

as discussed previously can use a two-level

hierarchy of keys

┬Ę

usually with a trusted Key Distribution Center

(KDC)

each

party shares own master key with KDC

KDC

generates session keys used for connections between parties

master

keys used to distribute these to them

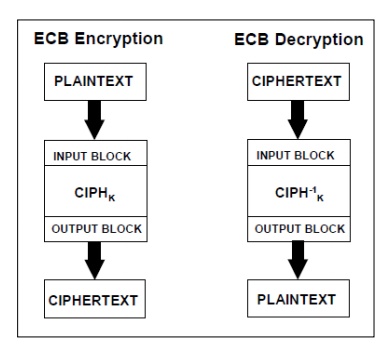

9. KEY DISTRIBUTION

In designing the key distribution protocol, the authors took into consideration the following requirements:
• Security domain change: The Certification Authority (CA) of the receiving security domain must be able to authenticate the agent that comes from another security domain.
• Trust establishment: The key distribution process should start with a very high trust relationship with the Certification Authority (CA).
• Secure key distribution: The key distribution process should be conducted in a secure manner (e.g., over a trusted path).
• Efficiency: The key distribution process should not consume a lot of resources, such as machines' CPUs and network bandwidth.
• Scalability: The key distribution process must be scalable enough that the mobile agent can roam widely.
• Transparency: The mobile agents should not include code specific to key distribution. This will ease programming, as agent programmers can concentrate on the programming logic rather than on obtaining keys.
• Portability: The protocol should not be platform specific and should be portable to any mobile agent platform.
• Ease of administration: The key distribution protocol should not be a burden on the administrator. The protocol is an automated infrastructure that should require minimal administrator intervention.

9.1 Key Distribution Mechanisms

System Components: A key distribution system for mobile agents includes the following components:
· Agent: An agent is a software component that executes on behalf of a particular user, who is the user of the agent. An agent can be mobile and move from one host server to another under its own control to achieve tasks on these host servers.
· Agent Server: Each host, as part of the mobile agent platform, runs an execution environment, the agent server.
· Messaging System: A messaging system is part of an agent execution environment. It provides facilities for agents to communicate both locally and remotely.
· The CA: A trusted third party that provides digital certificates for mobile agents, users, and agent servers. All digital certificates are signed by the CA for further verification of their authenticity and validity.
· Keystore: Each agent server has a local database that is used to store and retrieve its own private/public key pair and digital certificate. It also stores the digital certificates of the trusted CA and of other agent servers, mobile agents, and CAs with which the agent server has had prior communication. Similarly, each CA has a local keystore.
· Security Domain: A security domain consists of a group of agent servers under one common CA. In the security domain, the agent servers have the digital certificate of their local CA stored in their local keystores. When a mobile agent moves, it can move within the same security domain or change security domains.

10. KEY AGREEMENT

– Flexibility in credentials
– Modern, publicly analysed/available cryptographic primitives
– Freshness guarantees
– PFS (perfect forward secrecy)?
– Mutual authentication
– Identity hiding for supplicant/end-user
– No key re-use
– Fast re-key
– Fast handoff
– Efficiency not an overarching concern:
· Protocol runs only 1/2^N-1 packets, on average
– DOS resistance

Credentials flexibility
· Local security policy dictates the types of credentials used by end-users
· Legacy authentication compatibility is extremely important in the market
· Examples:
– username/password
– Tokens (SecurID, etc.)
– X.509 certificates

Algorithms
· Algorithms must provide confidentiality and integrity of the authentication and key agreement.
· Public-key encryption/signature
– RSA
– ECC
– DSA
· PFS support: D-H
· Most cryptographic primitives require strong random material that is "fresh".
– Not a protocol issue, per se, but a design requirement nonetheless

Mutual authentication
· Both sides of authentication/key agreement must be certain of the identity of the other party.
· Symmetric RSA/DSA schemes (public keys on both sides)
· Asymmetric schemes
– Legacy on end-user side
– RSA/DSA on authenticator side

11. PGP

PGP provides a confidentiality and authentication service that can be used for file storage and electronic mail applications. PGP was developed by Phil Zimmermann in 1991, and since then it has grown in popularity. There have been several updates to PGP.

A free version of PGP is available over the Internet, but only for non-commercial use. As of January 2000, the current version was 6.5. Commercial versions of PGP are available from the PGP Division of Network Associates.

For three years, Philip Zimmermann was threatened with federal prosecution in the United States for his actions. Charges were finally dropped in January 1996. At the close of 1999, Network Associates, Inc. announced that it had been granted a full license by the U.S. Government to export PGP worldwide, ending a decades-old ban.

PGP enables you to make your own public and secret key pairs. PGP public keys are distributed and certified via an informal network called "the web of trust".

Most experts consider PGP very secure if used correctly. PGP is based on RSA, DSS, and Diffie-Hellman on the public-key side, and on CAST-128, IDEA, and 3DES for conventional encryption. Hashing is done with SHA-1.

PGP has a wide range of applicability, from corporations that wish to enforce a standardized scheme for encrypting files and messages to individuals who wish to communicate securely with each other over the Internet.

The actual operation of PGP consists of five services: authentication, confidentiality, compression, e-mail compatibility, and segmentation (Table 12.1).

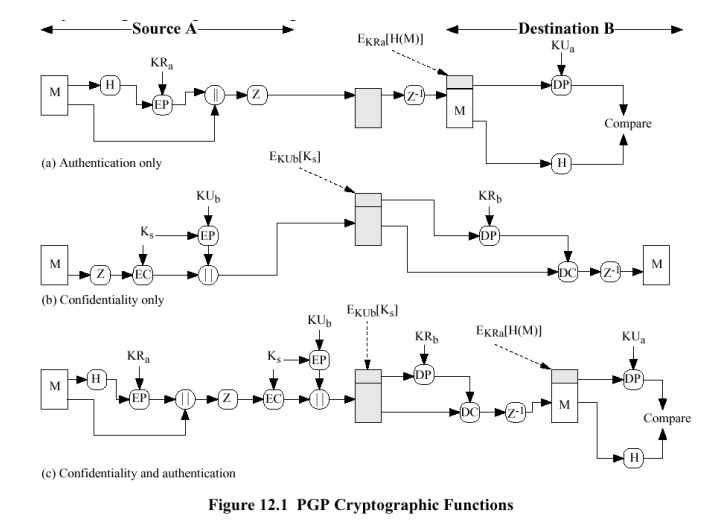

Authenticaiton

The digital signature service is illustrated in Fig

12.1a.

ŌĆō EC is used for conventional encryption, DC for

decryption, and EP and ED correspondingly for public key encryption and

decryption.

The algorithms used are SHA-1 and RSA.

Alternatively digital signatures can be generated using DSS/SHA-1.

Normally digital signatures are attached to the

files they sign, but there are exceptions

ŌĆō a detached signature can be used to detect a

virus infection of an executable program.

ŌĆō sometimes more than one party must sign the

document.

a

separate signature log of all messages is maintained

Confidentiality

ŌĆó Confidentiality service is

illustrated in Fig 12.1b.

┬Ę

Confidentiality can be use for storing files

locally or transmitting them over insecure channel.

┬Ę

The algorithms used are CAST-128 or alternatively

IDEA or 3DES. The ciphers run in CFB mode.

┬Ę

Each conventional key is used only once.

ŌĆō A new key is generated as a random 128-bit

number for each message.

ŌĆō The key is encrypted with the receivers public

key (RSA) and attached to the message.

ŌĆō An alternative to using RSA for key encryption,

ELGamal, a variant of Diffie-Hellman providing also encryption/decryption, can

be used.

┬Ę

The use of conventional encryption is fast compared

to encryption the whole message with RSA.

┬Ę

The use of public key algorithm solves the use

session key distribution problem. In email application any kind of handshaking would

not be practical.

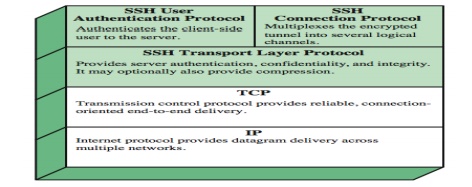

12. SSH
· A protocol for secure network communications
– designed to be simple and inexpensive
· SSH1 provided a secure remote logon facility
– to replace TELNET and other insecure schemes
– also has a more general client/server capability
– can be used for FTP, for example
· SSH2 is documented in RFCs 4250 through 4254
· SSH clients and servers are widely available (even in OSs)

· Identification string exchange
– To learn which SSH version and which SSH implementation the other side uses
· Algorithm negotiation
– For the crypto algorithms (key exchange, encryption, MAC) and the compression algorithm
– Each side sends a list in its order of preference
– For each category, the algorithm chosen is the first algorithm on the client's list that is also supported by the server.
· Key exchange
– Only two exchanges
– Diffie-Hellman based
– Also signed by the server (host private key)
– As a result, (i) the two sides now share a master key K, and (ii) the server has been authenticated to the client.
· Then, encryption keys, MAC keys, and IVs are derived from the master key
· End of key exchange
– To signal the end of the key exchange process
– Encrypted and MACed using the new keys
· Service request: to initiate either user authentication or the connection protocol

User Authentication Protocol
· Authentication of client to server
· First, client and server agree on an authentication method
– Then a sequence of exchanges performs that method
– Several authentication methods may be performed one after another
· Authentication methods
– public-key: the client signs a message and the server verifies it
– password: the client sends a password, which is encrypted and MACed using the agreed keys

Connection Protocol
· Runs on the SSH Transport Layer Protocol
– assumes a secure authentication connection
– which is called a tunnel
– used for multiple logical channels
· SSH communications use separate channels
– either side can open a channel with a unique id number
– flow controlled via a sliding window mechanism
– channels have three stages: opening a channel, data transfer, closing a channel
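The algorithm negotiation rule from the SSH transport steps can be sketched in Python. The rule is: for each category, pick the first algorithm on the client's list that the server also supports. The algorithm names are real SSH identifiers, but both preference lists are made up. Real SSH adds details that this sketch ignores, such as separate choices per direction and key exchange guessing.

```python
def negotiate(client_prefs, server_supported):
    """Per category, pick the first client-listed algorithm the server also supports."""
    chosen = {}
    for category, client_list in client_prefs.items():
        supported = set(server_supported.get(category, []))
        match = next((alg for alg in client_list if alg in supported), None)
        if match is None:
            raise ValueError(f"no common {category} algorithm")
        chosen[category] = match
    return chosen

client = {   # client's preference order (made up)
    "kex": ["curve25519-sha256", "diffie-hellman-group14-sha256"],
    "cipher": ["aes256-ctr", "aes128-ctr"],
    "mac": ["hmac-sha2-256", "hmac-sha1"],
    "compression": ["zlib", "none"],
}
server = {   # what the server supports (made up); its own order does not matter here
    "kex": ["diffie-hellman-group14-sha256"],
    "cipher": ["aes128-ctr", "aes256-ctr"],
    "mac": ["hmac-sha1"],
    "compression": ["none"],
}
print(negotiate(client, server))
```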

13. TRANSPORT SECURITY

transport

layer security service

originally

developed by Netscape

version 3

designed with public input

subsequently

became Internet standard known as TLS (Transport Layer Security)

uses TCP

to provide a reliable end-to-end service

SSL has

two layers of protocols

SSL

connection

┬Ę

a transient, peer-to-peer, communications link

┬Ę

associated with 1 SSL session

SSL

session

┬Ę

an association between client & server

┬Ę

created by the Handshake Protocol

┬Ę

define a set of cryptographic parameters

┬Ę

may be shared by multiple SSL connections

confidentiality

using

symmetric encryption with a shared secret key defined by Handshake Protocol

AES,

IDEA, RC2-40, DES-40, DES, 3DES, Fortezza, RC4-40, RC4-128

message

is compressed before encryption

┬Ę

message integrity

using a

MAC with shared secret key

similar

to HMAC but with different padding

┬Ę

one of 3 SSL specific protocols which use the SSL

Record protocol

┬Ę

a single message

┬Ę

causes pending state to become current

┬Ę

hence updating the cipher suite in use

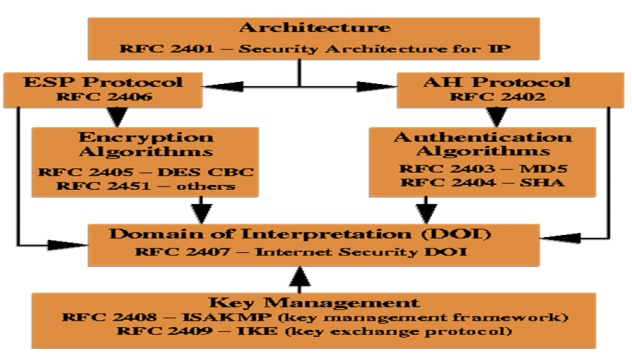

14. IP SECURITY

RFC

2401 - Overall security architecture and

services offered by IPSec.

Authentication Protocols

· RFC 2402 – IP Authentication Header processing (inbound/outbound packets)

· RFC 2403 – Use of HMAC-MD5-96 within Encapsulating Security Payload and Authentication Header

· RFC 2404 – Use of HMAC-SHA-1-96 within Encapsulating Security Payload and Authentication Header
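The "-96" in RFC 2403 and RFC 2404 means the HMAC output is truncated to its first 96 bits (12 bytes) before it goes into the AH or ESP header. Here is a minimal sketch using Python's standard `hmac` module. The function names are hypothetical.

```python
import hashlib
import hmac


def hmac_sha1_96(key: bytes, data: bytes) -> bytes:
    """HMAC-SHA-1 truncated to its first 96 bits (12 bytes)."""
    return hmac.new(key, data, hashlib.sha1).digest()[:12]


def hmac_md5_96(key: bytes, data: bytes) -> bytes:
    """HMAC-MD5 truncated to its first 96 bits (12 bytes)."""
    return hmac.new(key, data, hashlib.md5).digest()[:12]


def verify_sha1_96(key: bytes, data: bytes, received: bytes) -> bool:
    # Recompute the truncated value and compare in constant time.
    return hmac.compare_digest(hmac_sha1_96(key, data), received)


if __name__ == "__main__":
    print(hmac_sha1_96(b"Jefe", b"what do ya want for nothing?").hex())
    print(hmac_md5_96(b"Jefe", b"what do ya want for nothing?").hex())
```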

ESP Protocol

· RFC 2405 – Use of DES-CBC (DES in Cipher Block Chaining mode), a symmetric secret-key block algorithm (block size 64 bits).

· RFC 2406 – IP Encapsulating Security Payload processing (inbound/outbound packets)
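As a worked statement of what "CBC" adds to DES, each 64-bit plaintext block is XORed with the previous ciphertext block before encryption, and an IV seeds the chain. The notation is introduced here: $E_K$ and $D_K$ are DES encryption and decryption under key $K$, $P_i$ and $C_i$ are the $i$-th 64-bit plaintext and ciphertext blocks, and the ESP payload is assumed to be padded to a multiple of 8 bytes.

```latex
C_0 = \mathit{IV}, \qquad
C_i = E_K\!\left(P_i \oplus C_{i-1}\right), \qquad
P_i = D_K(C_i) \oplus C_{i-1}, \qquad i = 1, \dots, n
```

Chaining means that identical plaintext blocks produce different ciphertext blocks, provided the preceding ciphertext blocks (or the IV) differ.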

· RFC 2407 – Determines how to use ISAKMP for IPSec

· RFC 2408 – Internet Security Association and Key Management Protocol (ISAKMP)

– Common framework for exchanging keys securely.

– Defines the format of Security Association (SA) attributes, and the procedures for negotiating, modifying, and deleting SAs.

– A Security Association contains information such as keys, source and destination addresses, and the algorithms used.

– Independent of the key exchange mechanism.
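To make "a Security Association contains keys, addresses, algorithms" concrete, here is a hypothetical SA record and lookup table. In the IPSec architecture (RFC 2401) an SA is identified by the triple (SPI, destination address, security protocol). The field names, the class `SADatabase`, and the example values are illustrative assumptions, not a real API.

```python
from dataclasses import dataclass
from ipaddress import IPv4Address
from typing import Dict, Optional, Tuple

SAKey = Tuple[int, IPv4Address, str]


@dataclass(frozen=True)
class SecurityAssociation:
    spi: int                       # Security Parameters Index, carried in AH/ESP headers
    dst: IPv4Address               # destination address
    protocol: str                  # "AH" or "ESP"
    src: IPv4Address               # source address
    auth_alg: str                  # e.g. "HMAC-SHA-1-96"
    auth_key: bytes
    enc_alg: Optional[str] = None  # e.g. "DES-CBC" (ESP only)
    enc_key: Optional[bytes] = None


class SADatabase:
    """Hypothetical SA table supporting add/modify, lookup and delete."""

    def __init__(self) -> None:
        self._table: Dict[SAKey, SecurityAssociation] = {}

    def add(self, sa: SecurityAssociation) -> None:
        # Adding an SA under an existing key replaces (modifies) it.
        self._table[(sa.spi, sa.dst, sa.protocol)] = sa

    def lookup(self, spi: int, dst: IPv4Address, protocol: str) -> Optional[SecurityAssociation]:
        return self._table.get((spi, dst, protocol))

    def delete(self, spi: int, dst: IPv4Address, protocol: str) -> None:
        self._table.pop((spi, dst, protocol), None)
```

When a packet arrives, the receiver reads the SPI from the AH/ESP header and uses it with the destination address and protocol to find the keys and algorithms it needs.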

· RFC 2409 – Internet Key Exchange (IKE)

– Mechanisms for generating and exchanging keys securely.
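IKE generates shared keys with a Diffie-Hellman exchange: each side sends only a public value, yet both arrive at the same secret. The toy sketch below uses assumed parameters (the Mersenne prime 2**127 - 1 and base 3) that are far too weak for real use. Actual IKE uses standardized, much larger groups. The function names are hypothetical.

```python
import secrets

# TOY public parameters (assumption): not a standardized IKE group.
P = 2**127 - 1
G = 3


def make_keypair():
    """Return (private, public); only the public value is sent on the wire."""
    private = secrets.randbelow(P - 2) + 1   # 1 <= private <= P - 2
    public = pow(G, private, P)
    return private, public


def shared_secret(my_private: int, their_public: int) -> int:
    """Combine own private value with the peer's public value."""
    return pow(their_public, my_private, P)


if __name__ == "__main__":
    a_priv, a_pub = make_keypair()   # initiator
    b_priv, b_pub = make_keypair()   # responder
    print(shared_secret(a_priv, b_pub) == shared_secret(b_priv, a_pub))   # True
```

An eavesdropper sees only `a_pub` and `b_pub`. Recovering the secret from those requires solving a discrete logarithm, which is hard only when the group is large and well chosen.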

Encapsulating Security Payload (ESP)

· Designed to provide both confidentiality and integrity protection

· Everything after the IP header is encrypted

· The ESP header is inserted after the IP header

Authentication Header (AH)

· Designed for integrity only

· Certain fields of the IP header and everything after the IP header are protected

· Provides protection to the immutable parts of the IP header
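The integrity-only protocol (the Authentication Header) covers only the immutable parts of the IP header. Routers legitimately change fields such as TTL and the header checksum in transit, so the sender and receiver set them to zero before computing the integrity check value (ICV). This sketch assumes an IPv4 header with no options and uses HMAC-SHA-1-96. It also omits the AH header itself, which real AH includes with its ICV field zeroed. All function names are hypothetical.

```python
import hashlib
import hmac
import struct

# Byte offsets of mutable IPv4 fields: TOS, flags + fragment offset, TTL, checksum.
MUTABLE_OFFSETS = (1, 6, 7, 8, 10, 11)
PROTO_AH = 51


def ipv4_header(ttl: int, tos: int = 0, checksum: int = 0,
                src: bytes = b"\x0a\x00\x00\x01", dst: bytes = b"\x0a\x00\x00\x02") -> bytes:
    """Build a 20-byte IPv4 header (no options) for the example."""
    return struct.pack("!BBHHHBBH4s4s", 0x45, tos, 40, 0x1234, 0,
                       ttl, PROTO_AH, checksum, src, dst)


def zero_mutable_fields(header: bytes) -> bytes:
    h = bytearray(header)
    for off in MUTABLE_OFFSETS:
        h[off] = 0
    return bytes(h)


def ah_icv(key: bytes, ip_header: bytes, rest: bytes) -> bytes:
    """ICV over the immutable header fields plus everything after the IP header."""
    data = zero_mutable_fields(ip_header) + rest
    return hmac.new(key, data, hashlib.sha1).digest()[:12]


if __name__ == "__main__":
    key, payload = b"k" * 20, b"TCP segment bytes"
    sent = ah_icv(key, ipv4_header(ttl=64), payload)
    # A router decremented TTL and rewrote the checksum: the ICV still matches.
    print(sent == ah_icv(key, ipv4_header(ttl=63, checksum=0xBEEF), payload))   # True
```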


15. WIRELESS SECURITY

· Wireless connections need to be secured, since intruders should not be allowed to access, read or modify the network traffic.

· Mobile systems should still be able to stay connected at the same time.

· An algorithm is required that provides a level of security comparable to that of physically wired networks.

· Protect wireless communication from eavesdropping and prevent unauthorized access.

· Access control: ensure that your wireless infrastructure is not used by unauthorized parties.

· Data integrity: ensure that your data packets are not modified in transit.

· Confidentiality: ensure that the contents of your wireless traffic are not leaked.


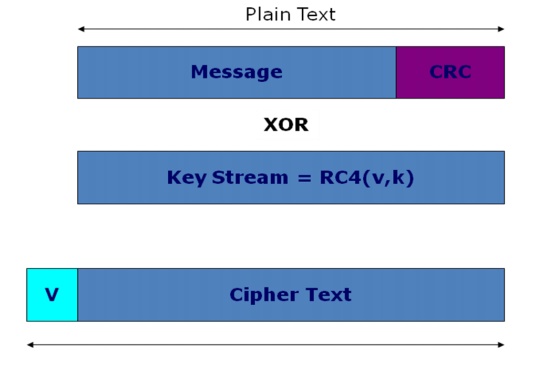

· WEP (Wired Equivalent Privacy) relies on a secret key that is shared between the sender (mobile station) and the receiver (access point).

· Secret key: packets are encrypted using the secret key before they are transmitted.

· Integrity check: used to ensure that packets are not modified in transit.

· To send a message M:

– Compute the checksum c(M) (a CRC-32). The checksum does not depend on the secret key 'k'.

– Pick an IV 'v' and generate a key stream RC4(v,k).

– XOR <M,c(M)> with the key stream to get the ciphertext.

– Transmit 'v' and the ciphertext over the radio link.

· WEP uses the RC4 encryption algorithm, a "stream cipher", to protect the confidentiality of its data.

– A stream cipher operates by expanding a short key into an infinite pseudo-random key stream.

– The sender XORs the key stream with the plaintext to produce the ciphertext.

– The receiver has a copy of the same key and uses it to generate an identical key stream.

– XORing the key stream with the ciphertext yields the original message.
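The send procedure and the RC4 stream cipher together fit in a short sketch. It assumes that RC4(v,k) means RC4 keyed with the IV prepended to the secret key, uses `zlib.crc32` for the checksum, and places the CRC little-endian. `wep_encrypt` and `wep_decrypt` are hypothetical names. RC4 is included only to illustrate the mechanism and is broken for real use.

```python
import struct
import zlib


def rc4(key: bytes, length: int) -> bytes:
    """Generate `length` bytes of RC4 key stream."""
    s = list(range(256))
    j = 0
    for i in range(256):                          # key-scheduling algorithm
        j = (j + s[i] + key[i % len(key)]) % 256
        s[i], s[j] = s[j], s[i]
    out = bytearray()
    i = j = 0
    for _ in range(length):                       # pseudo-random generation
        i = (i + 1) % 256
        j = (j + s[i]) % 256
        s[i], s[j] = s[j], s[i]
        out.append(s[(s[i] + s[j]) % 256])
    return bytes(out)


def crc32(data: bytes) -> bytes:
    """Checksum c(M): depends only on M, not on the secret key."""
    return struct.pack("<I", zlib.crc32(data) & 0xFFFFFFFF)


def wep_encrypt(message: bytes, iv: bytes, key: bytes):
    plaintext = message + crc32(message)          # <M, c(M)>
    stream = rc4(iv + key, len(plaintext))        # RC4(v, k): IV prepended to key
    return iv, bytes(p ^ s for p, s in zip(plaintext, stream))


def wep_decrypt(iv: bytes, ciphertext: bytes, key: bytes) -> bytes:
    stream = rc4(iv + key, len(ciphertext))       # same key -> same key stream
    plaintext = bytes(c ^ s for c, s in zip(ciphertext, stream))
    message, icv = plaintext[:-4], plaintext[-4:]
    if crc32(message) != icv:
        raise ValueError("integrity check failed")
    return message


if __name__ == "__main__":
    key = bytes.fromhex("0102030405")              # hypothetical 40-bit key
    v, c = wep_encrypt(b"hello WEP", b"\x00\x00\x01", key)
    print(wep_decrypt(v, c, key))                  # b'hello WEP'
```

The IV travels in the clear, so the only secret input is `k`. Whenever an IV value repeats, the same key stream is reused.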

· Passive attacks: decrypt traffic based on statistical analysis (statistical attack).

· Active attacks: inject new traffic from unauthorized mobile stations, based on known plaintext.

· Active attacks: decrypt traffic based on tricking the access point.

· Dictionary-building attacks: allow real-time automated decryption of all traffic.
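Both the passive and the known-plaintext injection attacks exploit the same weakness: a repeated IV means a repeated key stream, and the CRC uses no key. The sketch below substitutes random bytes for RC4(v,k) (an assumption). Any stream cipher behaves the same way once its key stream repeats. All names are hypothetical, and messages are assumed to be at most 60 bytes.

```python
import secrets
import struct
import zlib


def crc32(data: bytes) -> bytes:
    return struct.pack("<I", zlib.crc32(data) & 0xFFFFFFFF)


def xor(a: bytes, b: bytes) -> bytes:
    return bytes(x ^ y for x, y in zip(a, b))


# Stand-in for RC4(v, k) with one fixed IV: the same (v, k) always gives
# the same key stream, which is all these attacks rely on.
KEYSTREAM = secrets.token_bytes(64)


def encrypt(message: bytes) -> bytes:
    return xor(message + crc32(message), KEYSTREAM)


def access_point_accepts(ciphertext: bytes) -> bytes:
    """Decrypt and check the CRC, as the receiver would."""
    plaintext = xor(ciphertext, KEYSTREAM)
    message, icv = plaintext[:-4], plaintext[-4:]
    if crc32(message) != icv:
        raise ValueError("integrity check failed")
    return message


def forge(known_plain: bytes, known_cipher: bytes, new_message: bytes) -> bytes:
    """Known-plaintext injection: recover key stream, encrypt a new message."""
    stream = xor(known_cipher, known_plain + crc32(known_plain))
    assert len(new_message) <= len(known_plain), "key stream too short"
    return xor(new_message + crc32(new_message), stream)


if __name__ == "__main__":
    p1, p2 = b"GET /index.html", b"password=hunter2"
    c1, c2 = encrypt(p1), encrypt(p2)
    # Passive: the key stream cancels, leaving plaintext XOR plaintext.
    print(xor(c1, c2) == xor(p1 + crc32(p1), p2 + crc32(p2)))   # True
    # Active: a forged packet passes the integrity check without knowing k.
    print(access_point_accepts(forge(p1, c1, b"evil packet")))  # b'evil packet'
```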

16. FIREWALLS

· An effective means of protecting a local system or network of systems from network-based security threats, while affording access to the outside world via WANs or the Internet

· Information systems undergo a steady evolution (from small LANs to Internet connectivity)

· Strong security features are not established for all workstations and servers

· The firewall is inserted between the premises network and the Internet

Aims:

· Establish a controlled link

· Protect the premises network from Internet-based attacks

· Provide a single choke point

Design goals:

· All traffic from inside to outside, and vice versa, must pass through the firewall (physically blocking all access to the local network except via the firewall)

· Only authorized traffic (defined by the local security policy) will be allowed to pass

Four general techniques:

· Service control: determines the types of Internet services that can be accessed, inbound or outbound

· Direction control: determines the direction in which particular service requests are allowed to flow

· User control: controls access to a service according to which user is attempting to access it

· Behavior control: controls how particular services are used (e.g. filtering e-mail)

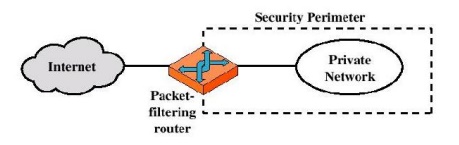

Types of Firewalls

Three common types of firewalls:

– Packet-filtering routers

– Application-level gateways

– Circuit-level gateways (typically hosted on a bastion host)

Packet-filtering Router

– Applies a set of rules to each incoming IP packet and then forwards or discards the packet

– Filters packets going in both directions

– The packet filter is typically set up as a list of rules based on matches to fields in the IP or TCP header

– Two default policies (discard or forward)
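A packet filter is a list of rules matched against IP and TCP header fields, with a default policy for packets no rule matches. The sketch below assumes first-match-wins semantics. The packet is a plain dict, and the `Rule` fields and example addresses (from the documentation ranges) are hypothetical.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network
from typing import Optional


@dataclass(frozen=True)
class Rule:
    action: str                      # "forward" or "discard"
    src: str = "0.0.0.0/0"           # source network (IP header)
    dst: str = "0.0.0.0/0"           # destination network (IP header)
    protocol: Optional[str] = None   # "tcp", "udp", ... (None = any)
    dst_port: Optional[int] = None   # TCP/UDP destination port (None = any)

    def matches(self, pkt: dict) -> bool:
        return (
            ip_address(pkt["src"]) in ip_network(self.src)
            and ip_address(pkt["dst"]) in ip_network(self.dst)
            and (self.protocol is None or pkt["protocol"] == self.protocol)
            and (self.dst_port is None or pkt.get("dst_port") == self.dst_port)
        )


def filter_packet(rules, pkt: dict, default: str = "discard") -> str:
    """First matching rule wins; otherwise the default policy applies."""
    for rule in rules:
        if rule.matches(pkt):
            return rule.action
    return default


if __name__ == "__main__":
    rules = [
        Rule("discard", src="198.51.100.0/24"),                          # known-bad network
        Rule("forward", dst="192.0.2.25/32", protocol="tcp", dst_port=25),  # inbound mail only
    ]
    pkt = {"src": "203.0.113.9", "dst": "192.0.2.25", "protocol": "tcp", "dst_port": 25}
    print(filter_packet(rules, pkt))                       # forward
    print(filter_packet(rules, dict(pkt, dst_port=23)))    # discard (default)
```

A default of "discard" is the more conservative policy: whatever is not explicitly permitted is blocked. That matches the stated disadvantage that getting the rule list right is difficult.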

Advantages:

– Simplicity

– Transparency to users

– High speed

Disadvantages:

– Difficulty of setting up packet filter rules

– Lack of authentication
