Chapter: Embedded Systems Design : Real-time operating systems

Scheduler algorithms

Scheduler algorithms

So far in this section, the main methods of swapping tasks has been

discussed. It is clear that pre-emption is the first choice for embedded

systems because of its better system response. The next issue to address is how

to assign the task priorities so that the system works and this is the topic

that is examined now.

Rate monotonic

Rate monotonic scheduling (RMS) is an approach that is used to assign

task priority for a pre-emptive system in such a way that the correct execution

can be guaranteed. It assumes that task priorities are fixed for a given set of

tasks and are not dynamically changed during execution. It assumes that there

are sufficient task priority levels for the task set and that the task set

models periodic events only. This means that an interrupt that is generated by

a serial port peripheral is modelled as an event that occurs on a periodic rate

determined by the data rate, for example. Asynchro-nous events such as a user

pressing a key are handled differently as will be explained later.

The key policy within RMS is that tasks with shorter execu-tion periods

are given the highest priority within the system. This means that the faster

executing tasks can pre-empt the slower periodic tasks so that they can meet

their deadlines. The advan-tage this gives the system designer is that it is

easier to theoretically specify the system so that the tasks will meet their

deadlines without overloading the processor. This requires detailed knowl-edge

about each task and the time it takes to execute. This and its periodicity can

be used to calculate the processor loading.

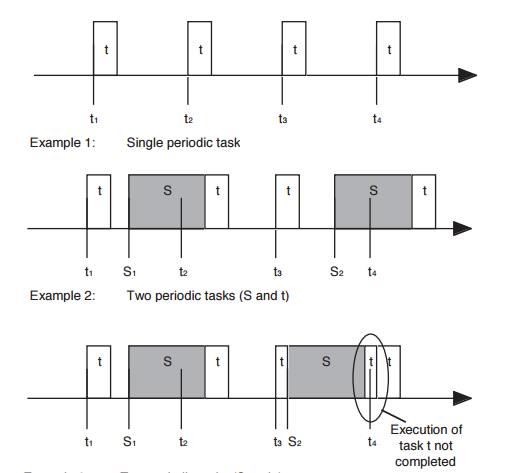

To see how this policy works, consider the examples shown in the diagram

on the next page. In the diagrams, events that start a task are shown as lines

that cross the horizontal time line and tasks are shown as rectangles whose

length determines their execution time. Example 1 shows a single periodic task

where the task t is executed with a periodicity of time t. The second example

adds a second task S where its periodicity is longer than that of task t. The task priority shown is with task

S having the highest priority. In

this case, the RMS policy has not been followed because the longest task has

been given a higher priority than the shortest task. However, please note that

in this case the system works fine because of the timing of the tasks’ periods.

Example 3 shows what can go wrong if the timing is changed and the

periodicity for task S approaches that of task t. When t3 occurs, task t is activated and

starts to run. It does not complete because S2 occurs and task S is swapped-in due to its higher priority. When tasks

S completes, task t resumes but during its execution, the event t4 occurs and thus task t has

failed to meets its task 3 deadline. This could result in missed or corrupted

data, for example. When task t completes, it is then reactivated to cope with

the t4 event.

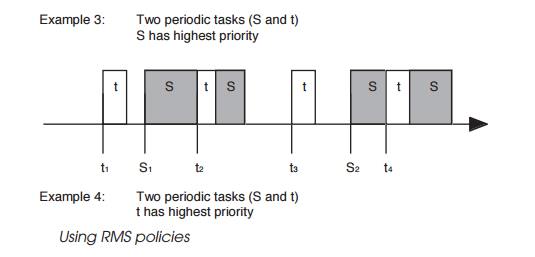

Example 4 shows the same scenario with the task priorities reversed so

that task t pre-empts task S. In this case, RMS policy has been followed and

the system works fine with both tasks meeting their deadlines. This system is

useful to understand and does allow theoretical analysis before implementation

to prevent a design from having to manually assign task priorities to get it to

work using a trial and error approach. It is important to remember within any

calculations to take into account the context swapping time needed to pre-empt

and resume tasks. The processor utilisa-tion can also be calculated and thus

give some idea of the perform-ance needed or how much performance is spare.

Typically, the higher the utilisation (>80%), the more chance that the

priority assignment is wrong and that the system will fail. If the utilisation

is below this, the chances are that the system will run correctly.

This is one of the problems with theoretical analysis of such systems in

that the actual design may have to break some of the assumptions on which the

analysis is based. Most embedded systems will have asynchronous events and

tasks running. While these can be modelled as a periodic task that polls for

the asynchro-nous event and are analysed as if they will run, other factors

such as cache memory hit ratios can invalidate the timing by lengthen-ing the

execution time of any analysis. Similarly, the act of syn-chronising tasks by

inter-task communication can also cause difficulties within any analysis.

Deadline monotonic scheduling

Deadline monotonic scheduling (DMS) is another task pri-ority policy

that uses the nearest deadline as the criterion for assigning task priority.

Given a set of tasks, the one with the nearest deadline is given the highest

priority. This means that the scheduling or designer must now know when these

deadlines are to take place. Tracking and, in fact, getting this information in

the first place can be difficult and this is often the reason behind why

deadline scheduling is often a second choice compared to RMS.

Priority guidelines

With a system that has a large number of tasks or one that has a small

number of priority levels, the general rule is to assign tasks with a similar

period to the same level. In most cases, this does not effect the ability to

schedule correctly. If a task has a large context, i.e. it has more registers

and data to be stored compared to other tasks, it is worth raising its priority

to reduce the context switch overhead. This may prevent the system from

scheduling properly but can be a worthwhile experiment.

Related Topics