Chapter: An Introduction to Parallel Programming : Parallel Program Development

Parallelizing the basic solver using OpenMP

Parallelizing the basic solver

using OpenMP

How can we use OpenMP to map tasks/particles to

cores in the basic version of our n-body solver? Let’s take a look at the pseudocode for the serial

program:

for each timestep {

if (timestep output) Print positions and

velocities of particles; for each

particle q

Compute total force on q; for each particle q

Compute position and velocity of q;

}

The two inner loops are both iterating over

particles. So, in principle, parallelizing the two inner for loops will map tasks/particles to cores, and

we might try something like this:

for each timestep {

if (timestep output) Print positions and

velocities of particles;

pragma omp parallel for for each particle q

Compute total force on q;

pragma omp parallel for for each particle q

Compute position and velocity of q;

}

We may not like the fact that this code could

do a lot of forking and joining of threads, but before dealing with that, let’s

take a look at the loops themselves: we need to see if there are any race

conditions caused by loop-carried dependences.

In the basic version the first loop has the

following form:

pragma omp parallel for for each particle q {

forces[q][X] = forces[q][Y] = 0;

for each particle k != q {

x_diff = pos[q][X]- pos[k][X];

y_diff =

pos[q][Y]- pos[k][Y];

dist = sqrt(x_diff* x_diff + y_diff *y_diff);

dist_cubed = dist*dist * dist;

forces[q][X] = G*masses[q]*masses[k]/dist_cubed

* x_diff;

forces[q][Y] = G*masses[q]*masses[k]/dist_cubed

* y_diff;

}

}

Since the iterations of the for each

particle q loop are partitioned among

the threads, only one thread will access forces[q] for any q. Different threads do access the same elements of the pos array and the masses array. However, these arrays are only read in the loop. The remaining variables are used

for temporary storage in a single iteration of the inner loop, and they can be

private. Thus, the parallelization of the first loop in the basic algorithm

won’t introduce any race conditions.

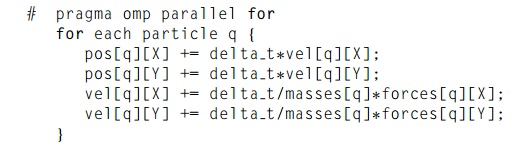

The second loop has the form:

Here, a single thread accesses pos[q], vel[q], masses[q], and forces[q] for any particle q, and the scalar variables are only read, so parallelizing this

loop also won’t introduce any race conditions.

Let’s return to the issue of repeated forking

and joining of threads. In our pseudocode, we have

for each timestep {

if (timestep output) Print positions and

velocities of particles;

# pragma omp parallel for

for each

particle q

Compute total force on q;

#pragma omp parallel for

for each particle q

Compute position and velocity of q;

}

We encountered a similar issue when we

parallelized odd-even transposition sort (see Section 5.6.2). In that case, we

put a parallel directive before the outermost loop and used OpenMP for directives for the inner loops. Will a similar

strategy work here? That is, can we do something like this?

# pragma omp parallel

for each

timestep {

if (timestep output) Print positions and

velocities of particles;

# pragma omp for

for each particle q

Compute total force on q;

#pragma omp for

for each particle q

Compute position and velocity of q;

}

This will have the desired effect on the two for each

particle loops: the same team of threads will be used

in both loops and for every iteration of the outer loop. However, we have a

clear problem with the output statement. As it stands now, every thread will

print all the positions and velocities, and we only want one thread to do the

I/O. However, OpenMP provides the single directive for exactly this situation: we have

a team of threads executing a block of code, but a part of the code should only

be executed by one of the threads. Adding the single directive gives us the following pseudocode:

#pragma omp parallel

for each

timestep {

if (timestep output) {

# pragma omp single

Print positions and velocities of particles;

}

#pragma omp for

for each particle q

Compute total force on q;

# pragma omp for

for each particle q

Compute position and velocity of q;

}

There are still a few issues that we need to

address. The most important has to do with possible race conditions introduced

in the transition from one statement to another. For example, suppose thread 0

completes the first for each particle loop before thread 1, and it then starts updating the positions

and velocities of its assigned particles in the second for each

particle loop. Clearly, this could cause thread 1 to

use an updated position in the first for each particle loop. However, recall that there is an implicit barrier at the end

of each structured block that has been parallelized with a for directive. So, if thread 0 finishes the first

inner loop before thread 1, it will block until thread 1 (and any other

threads) finish the first inner loop, and it won’t start the second inner loop

until all the threads have finished the first. This will also prevent the

possibility that a thread might rush ahead and print positions and velocities

before they’ve all been updated by the second loop.

There’s also an implicit barrier after the single directive, although in this pro-gram the barrier isn’t necessary.

Since the output statement won’t update any memory locations, it’s OK for some

threads to go ahead and start executing the next iteration before output has

been completed. Furthermore, the first inner for loop in the next iteration only updates the forces array, so it can’t cause a thread executing the output statement

to print incorrect values, and because of the barrier at the end of the first

inner loop, no thread can race ahead and start updating positions and

velocities in the second inner loop before the output has been completed. Thus,

we could modify the single directive with a nowait clause. If the OpenMP implementation supports it, this simply

eliminates the implied barrier associated with the single directive. It can also be used with for, parallel for, and parallel directives. Note that in this case, addition

of the nowait clause is unlikely to have much effect on performance, since the

two for each

particle loops have implied barriers that will prevent

any one thread from getting more than a few statements ahead of any other.

Finally, we may want to add a schedule clause

to each of the for directives in order to insure that the iterations have a block

partition:

# pragma omp for schedule(static, n/thread_count)

Related Topics