Chapter: Psychology: Perception

The Neuroscience of Vision


Where are we so far? We started

by acknowledging the complexities of form perception, including the perceiver’s

need to interpret and organize visual information. We then considered how this

interpretation might be achieved—through a network of detectors, shaped by an

interplay between bottom-up and top-down processes. But these points simply

invite the next question: How does the nervous system implement these

processes? More broadly, what events in the eye, the optic nerve, and the brain

make perception possible?

The Visual Pathway

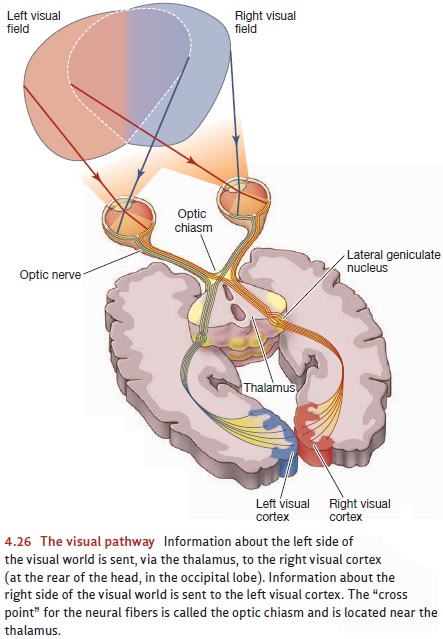

As we saw, the rods and cones

pass their signals to the bipolar cells, which relay them to the ganglion cells

(Figure 4.26). The axons of the ganglion cells form the optic nerve, which

leaves the eyeball and begins the journey toward the brain. But even at this

early stage, the neurons are specialized in important ways, and different cells

are responsible for detecting different aspects of the visual world.

The ganglion cells, for example,

can be broadly classified into two categories: the smaller ones are called parvo cells, and the larger are called magno cells (parvo and magno are the

Latin for “small” and “large”). Parvo cells, which blanket the entire retina,

far outnumber magno cells. Magno cells, in contrast, are found largely in the

retina’s periphery. Parvo cells appear to be sensitive to color differences (to

be more precise, to differences either in hue or in brightness), and they

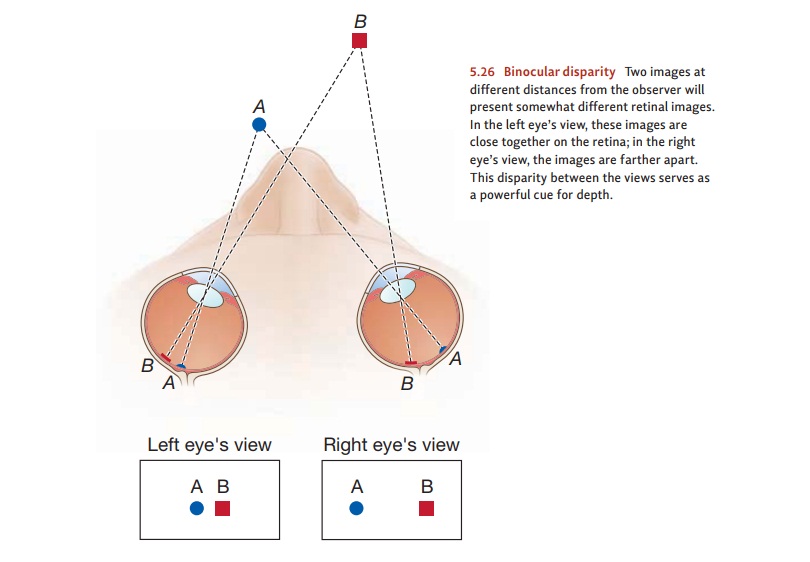

probably play a crucial role in our perception of pattern and form. Magno

cells, on the other hand, are insensitive to hue differences but respond

strongly to changes in brightness; they play a central role in the detection of

motion and the perception of depth.

This pattern of neural

specialization continues and sharpens as we look more deeply into the nervous

system. The relevant evidence comes largely from the single-cell recording

technique that lets investigators determine which specific stimuli elicit a

response from a cell and which do not. This technique has allowed

investigators to explore the visual system cell by cell and has given us a rich

understanding of the neural basis for vision.

PARALLEL PROCESSING IN THE VISUAL CORTEX

We noted that cells in the visual

cortex each seem to have a “preferred stimulus”—and each cell fires most

rapidly whenever this special stimulus is in view. For some cells, the

preferred stimulus is relatively simple—a curve, or a line tilted at a particular

orientation. For other cells, the preferred stimulus is more complex—a corner,

an angle, or a notch. Still other cells are sensitive to the color (hue) of the

input. Others fire rapidly in response to motion—some

cells are particularly sensitive to left-to-right motion, others to the

reverse.
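
The idea of a “preferred stimulus” can be made concrete with a small sketch. The Gaussian tuning curve and every number in it (peak rate, baseline rate, tuning width) are illustrative assumptions, not values taken from recordings; the point is only that a cell's firing rate falls off as the stimulus departs from the orientation the cell prefers.

```python
import math

def firing_rate(stimulus_deg, preferred_deg,
                max_rate=100.0, baseline=10.0, width_deg=20.0):
    """Hypothetical orientation-tuned cell: spikes per second for a line
    tilted at stimulus_deg, given the cell's preferred tilt."""
    # Orientation is circular: a line at 0 degrees looks the same at 180.
    diff = abs(stimulus_deg - preferred_deg) % 180.0
    diff = min(diff, 180.0 - diff)
    # Assumed Gaussian fall-off around the preferred orientation.
    tuning = math.exp(-(diff ** 2) / (2 * width_deg ** 2))
    return baseline + (max_rate - baseline) * tuning

# A cell that prefers lines tilted at 45 degrees.
for tilt in (45, 60, 90, 135):
    print(tilt, round(firing_rate(tilt, preferred_deg=45), 1))
```

In this sketch the cell fires fastest for a 45-degree line and progressively more slowly as the line tilts away from that orientation. A different cell would simply have a different `preferred_deg`.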

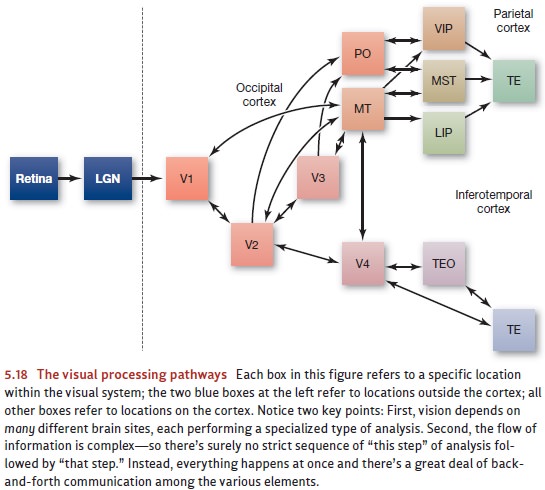

This abundance of cell types

suggests that the visual system relies on a “divide-and-conquer” strategy.

Different cells—and even different areas of the brain—each specialize in a

particular kind of analysis. Moreover, these different analyses go on in

parallel: The cells analyzing the forms do their work at the same time that

other cells are analyzing the motion and still others the colors. Using

single-cell recording, investigators have been able to map where these various

cells are located in the visual cortex as well as how they communicate with

each other; Figure 5.18 shows one of these maps.

Why this reliance on parallel

processing? For one thing, parallel processing allows greater speed, since (for

example) brain areas trying to recognize the shape of the stimulus aren’t kept

waiting while other brain areas complete the motion analysis or the color

analysis. Instead, all types of analysis can take place simultaneously. Another

advantage of parallel processing lies in its ability to allow each system to

draw information from the others. Thus, your understanding of an object’s

shape can be sharpened by a consideration of how the object is moving; your

understanding of its movement can be sharpened by noting what the shape is

(especially the shape in three dimensions). This sort of mutual influence is

easy to arrange if the various types of analysis are all going on at the same

time (Van Essen & DeYoe, 1995).

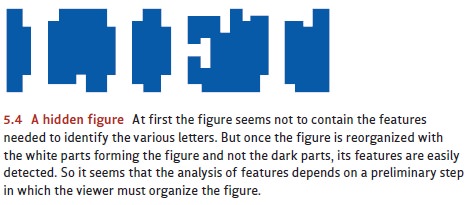

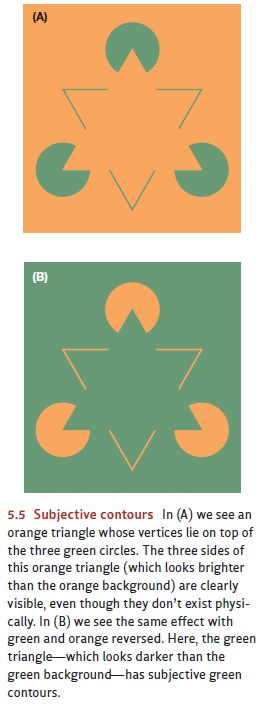

The parallel processing we see in

the visual cortex also clarifies a point we’ve already discussed. Earlier, we

argued that the inventory of a figure’s features depends on how the perceiver

organizes the figure. So, in Figure 5.4, the features of the letters were absent

with one interpretation of the shapes, but they’re easily visible with a

different interpretation. In Figure 5.5, some of the features (the triangle’s

sides) aren’t present in the figure but seem to be created by the way we

interpret the arrangement of features. Observations like these make it sound

like the interpretation has priority because it determines what features are

present.

But it would seem that the

reverse claim must also be correct because the way we interpret a form depends

on the features we can see. After all, no matter how you try to interpret

Figure 5.18, it’s not going to look like a race car, or a map of Great Britain,

or a drawing of a porcupine. The form doesn’t include the features needed for

those interpretations; and as a result, the form will not allow these

interpretations. This seems to suggest that it’s the features, not the

interpretation, that have priority: The features guide the interpretation, and

so they must be in place before the interpretation can be found.

How can both of these claims be

true, with the features depending on the interpretation and the interpretation

depending on the features? The answer involves parallel processing. Certain

brain areas are sensitive to the input’s features, and the cells in these areas

do their work at the same time that other brain areas are analyzing the

larger-scale configuration. These two types of analysis, operating in

parallel, can then interact with each other, ensuring that our perception makes

sense at both the large-scale and fine-grained levels.

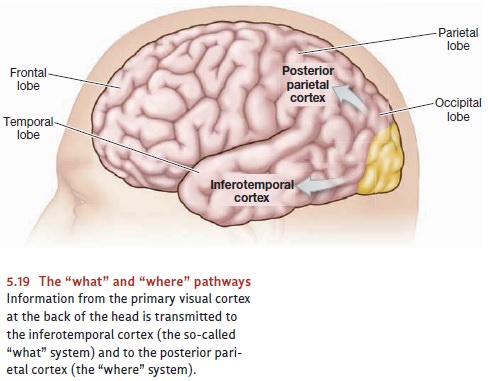

THE “WHAT” AND “WHERE” SYSTEMS

Evidence for specialized neural

processes, all operating in parallel, continues as we move beyond the visual

cortex. As Figure 5.19 indicates, information from the visual cortex is

transmitted to two other important brain areas: the inferotemporal cortex

(the lower part of the temporal cortex) and the parietal cortex. The pathway

carrying information to the temporal cortex is often called the “what” system; it plays a major role in

the identification of visual objects, telling us whether the object is a cat,

an apple, or whatever. The second pathway, which carries information to the

parietal cortex, is often called the “where”

system; it tells us where an object is located: above or below, to our

right or left (Ungerleider & Haxby, 1994; Ungerleider & Mishkin, 1982).

There’s been some controversy,

however, over how exactly we should think about these two systems. Some

theorists, for example, propose that the path to the parietal cortex isn’t

concerned with the conscious perception of position. Instead, it’s primarily

involved in the unnoticed, automatic registration of spatial location that

allows us to control our movements as we reach for or walk toward objects in

our visual world. Likewise, this view proposes that the pathway to the temporal

cortex isn’t really a “what” system; instead, it’s associated with our

conscious sense of the world around us, including our conscious recognition of

objects and our assessment of what these objects look like (e.g., Goodale &

Milner, 2004; also D. Carey, 2001; Sereno & Maunsell, 1998).

No matter how this debate is settled, there can be no question that these two pathways serve very different functions. Patients who have suffered lesions in the occipital-temporal pathway (most people agree this is the “what” pathway) show visual agnosia. They may be unable to recognize common objects, such as a cup or a pencil. They’re often unable to recognize the faces of relatives and friends, but if the relatives speak, the patients can recognize them by their voices. At the same time, these patients show little disorder in visual orientation and reaching. On the other hand, patients who have suffered lesions in the occipital-parietal pathway (usually understood as the “where” pathway) show the reverse pattern. They have difficulty in reaching for objects but no problem with identifying them (A. Damasio, Tranel, & H. Damasio, 1989; Farah, 1990; Goodale, 1995; Newcombe, Ratcliff, & Damasio, 1987).

The Binding Problem

It’s clear, then, that natural

selection has favored a division-of-labor strategy for vision: The processes of

perception are made possible by an intricate network of subsystems, each

specialized for a particular task and all working together to create the final

product—an organized and coherent perception of the world.

We’ve seen the benefits of this

design, but the division-of-labor setup also creates a problem for the visual

system. If the different aspects of vision (the perception of shape, color,

movement, and distance) are carried out by different processing modules, then

how do we manage to recombine these pieces of information into one whole? For

example, when we see a ballet dancer in a graceful leap, the leap itself is

registered by the magno cells; the recognition of the ballet dancer depends on

parvo cells. How are these pieces put back together? Likewise, when we reach

for a coffee cup but stop midway because we see that the cup is empty, the

reach itself is guided by the occipital-parietal system (the “where” system);

the fact that the cup is empty is perceived by the occipital-temporal system

(the “what” system). How are these two streams of processing coordinated?

We can examine the same issue in

light of our subjective impression of the world around us. Our impression, of

course, is that we perceive a cohesive and organized world. After all, we don’t

perceive big and blue and distant; we instead perceive sky. We don’t perceive

brown and large shape on top of four shapes and moving; instead, we perceive

our pet dog running along. Somehow, therefore, we do manage to re-integrate the

separate pieces of visual information. How do we achieve this reunification?

Neuroscientists call this the binding

problem—how the nervous system manages to bind together elements that were

initially detected by separate systems.

We’re just beginning to

understand how the nervous system solves the binding problem. But evidence is

accumulating that the brain uses a pattern of neural synchrony (different

groups of neurons firing in synchrony with each other) to identify which sensory

elements belong with which. Specifically, imagine two groups of neurons in the

visual cortex. One group of neurons fires maximally whenever a vertical line is

in view. Another group of neurons fires maximally whenever a stimulus is in

view moving from left to right. Also imagine that, right now, a vertical line

is presented, and it is moving to the right. As a result, both groups of

neurons are firing rapidly. But how does the brain encode the fact that these

attributes are bound together, different aspects of a single object? How does

the brain differentiate between this stimulus and one in which the features

being detected actually belong to different objects—perhaps a static vertical

and a moving diagonal?

The answer lies in the timing of

the firing by these two groups of neurons. We emphasized the firing rates of various neurons—whether a

neuron was firing at, say, 100 spikes per second or 10. But we also need to

consider exactly when a neuron is

firing, and whether, in particular, it is firing at the same moment as other

neurons. When the neurons are synchronized, this seems to be the nervous

system’s indication that the messages from the synchronized neurons are in fact

bound together. To return to our example, if the neurons detecting a vertical

line are firing in synchrony with the neurons signaling movement (like a group

of drummers all keeping the same beat), then these attributes, vertical and

moving, are registered as belonging to the same object. If the neurons are not

firing in synchrony, the features are registered as belonging to separate

objects (Buzsáki & Draguhn, 2004; Csibra, Davis, Spratling, & Johnson,

2000; M. Elliott & Müller, 2000; Fries, Reynolds, Rorie, & Desimone,

2001; Gregoriou, Gotts, Zhou, & Desimone, 2009).
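
The difference between firing rate and firing timing can be illustrated with a toy calculation. This is only a sketch under stated assumptions: the spike times, the 2-millisecond coincidence window, and the 0.8 threshold are all hypothetical choices made for illustration, and the brain's actual mechanism is not claimed to work this way. All three spike trains below fire at the same rate (100 spikes per second), so rate alone cannot separate them; only their timing differs.

```python
def synchrony(spikes_a, spikes_b, window_ms=2.0):
    """Fraction of spikes in train A that have a partner spike in train B
    within window_ms milliseconds (an assumed coincidence window)."""
    if not spikes_a:
        return 0.0
    matched = sum(
        1 for t in spikes_a
        if any(abs(t - u) <= window_ms for u in spikes_b)
    )
    return matched / len(spikes_a)

def bound_together(spikes_a, spikes_b, threshold=0.8):
    """Treat two features as belonging to one object when their detectors
    fire in synchrony (the threshold is a hypothetical value)."""
    return synchrony(spikes_a, spikes_b) >= threshold

# Hypothetical spike times in milliseconds over a 100 ms stretch.
vertical_detector = [float(t) for t in range(0, 100, 10)]   # 0, 10, ..., 90
motion_in_sync = [t + 1.0 for t in vertical_detector]       # 1 ms later each time
motion_out_of_sync = [t + 5.0 for t in vertical_detector]   # 5 ms later each time

print(bound_together(vertical_detector, motion_in_sync))      # one moving vertical line
print(bound_together(vertical_detector, motion_out_of_sync))  # two separate objects
```

With these made-up spike times, the first pair of detectors counts as synchronized and the second does not, even though every train contains the same number of spikes.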
