Chapter: Psychology: Perception

Perceptual Selection: Attention


Our discussion throughout has been shaped by the challenges we face in getting an accurate view of the world around us. The challenges come from many sides: the enormous number of objects we can recognize; the huge diversity in views we can get of each object as we move around in the world; the possibility of interpreting the sensory information we receive in more than one way. Besides all that, here’s another challenge we need to confront: When we look out at the world, we don’t just see one object in front of our eyes; instead, we look at complex scenes containing many objects and activities. These many visual inputs are joined by inputs in other modalities: At every moment, we’re likely to be hearing various noises and perhaps smelling certain smells. We’re also receiving information from our skin senses, perhaps signaling the heat in the room or the pressure of our clothing against our bodies. How do we cope with this sensory feast? Without question, we can choose to pay attention to any of these inputs, and so if the sounds are important to us, we’ll focus on those; if the smells are alluring, we may focus on those instead. The one thing we can’t do is pay attention to all of these inputs at once. Indeed, if we become absorbed in what’s in front of our eyes, we may lose track of the sounds in the room. If we listen intently to the sounds, we lose track of other aspects of the environment.

How should we think about all of this? How do we manage to pay attention to some inputs and not others?

Selection

When a stimulus interests us, we turn our head and eyes to inspect it or position our ears for better hearing. Other animals do the same, exploring the world with paws or lips or whiskers or even a prehensile tail. These various forms of orienting serve to adjust the sensory machinery and are one of the most direct means of selecting the input we wish to learn more about (Posner & Rothbart, 2007).

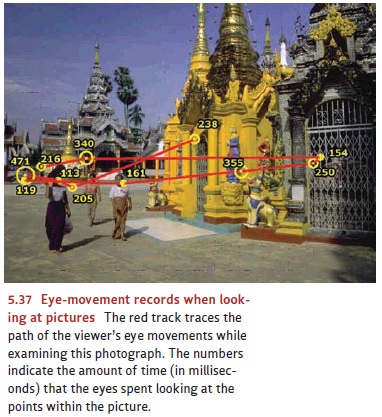

For humans, eye movement is the major means of orienting. Peripheral vision informs us that something’s going on, say, in the upper-left section of our field of vision. But our peripheral acuity isn’t good enough to tell us precisely what it is. To find out, we move our eyes so that the area where the activity is taking place falls into the visual field of the fovea (Rayner, Smith, Malcolm, & Henderson, 2009). In fact, motion in the visual periphery tends to trigger a reflex eye movement, making it difficult not to look toward a moving object (Figure 5.37).

However, eye movements aren’t our only means of selecting what we pay attention to and what we ignore. The selective control of perception also draws on processes involving mental adjustments rather than physical ones. To study these mental adjustments, many experiments rely on the visual search task we’ve already discussed. In this task, someone is shown a set of forms and must indicate as rapidly as possible whether a particular target is present. We noted earlier that this task is effortless if the target can be identified on the basis of just one salient feature (if, for example, you’re searching for a red circle among items that are blue, or for the vertical in a field of horizontals). We also mentioned earlier that in these situations, you can search through four items as fast as you can search through two, or eight as fast as you can search through four. This result indicates that you have no need to look at the figures on the screen one by one; if you did, then you’d need more time as the number of figures grew. Instead, you seem to be looking at all of the figures at the same time.

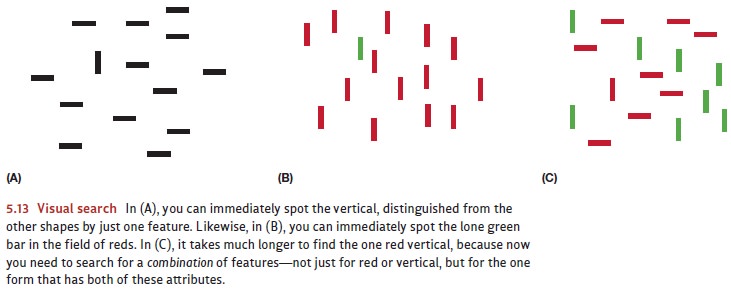

It’s an entirely different situation when someone is doing a conjunction search, a search in which the target is defined by a combination of features. Thus, for example, we might ask you to search for a red vertical among distracters that include green verticals and red horizontals (Figure 5.13C), or to search for a blue circle hidden among a bunch of blue squares and red circles. Now it’s not enough to search for (say) redness or for the vertical’s orientation; instead, you must search for a target with both of these features. This requirement has an enormous impact on performance. Under these conditions, the search times are longer and depend on the number of items in the display; the more items there are, the longer it takes you to search through them.

What’s going on here? Apparently, we don’t need to focus our attention when looking for the features themselves. For that task, it seems, we can look at all the items in front of us at once; and so it doesn’t matter if there are two items to examine or four or ten. But when we’re looking for a conjunction of features, we need to take the extra step of figuring out how the features are assembled (and thus whether, for example, the redness and the vertical are part of the same stimulus or not). And this step of assembling the features is where attention plays its role: Attention allows us, in essence, to focus a mental spotlight on just a single item. Thanks to this focus, we can analyze the input one stimulus at a time. This process is slower, but it gives us the information we need; if at any moment we’re analyzing only one item, then we can be sure the features we’re detecting all come from that stimulus. This tells us directly that the features are linked to each other—and, of course, for a conjunction search (and for many other purposes as well), this is the key.
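The contrast between the two kinds of search can be sketched as a toy simulation. This is only an illustration under stated assumptions, not a model taken from the studies discussed here: feature search is treated as fully parallel (time independent of display size), and conjunction search is treated as a serial scan that stops when the target is found. The function names and the millisecond values (`base_ms`, `per_item_ms`) are hypothetical and chosen only to make the pattern visible.

```python
import random


def feature_search_time(set_size, base_ms=400.0):
    """Assumed parallel search: every item is checked at once,
    so the time does not depend on set_size."""
    return base_ms


def conjunction_search_time(set_size, target_present=True,
                            base_ms=400.0, per_item_ms=50.0, rng=None):
    """Assumed serial search: items are inspected one at a time.

    If the target is present, the scan stops when it is reached;
    if it is absent, every item must be inspected.
    """
    rng = rng or random.Random(0)
    if target_present:
        # Position of the target in a random inspection order.
        items_checked = rng.randint(1, set_size)
    else:
        items_checked = set_size
    return base_ms + per_item_ms * items_checked


def mean_conjunction_time(set_size, trials=2000, seed=0):
    """Average simulated conjunction-search time over many trials."""
    rng = random.Random(seed)
    total = sum(conjunction_search_time(set_size, rng=rng)
                for _ in range(trials))
    return total / trials


if __name__ == "__main__":
    for n in (2, 4, 8):
        print(n, feature_search_time(n), round(mean_conjunction_time(n)))
```

In this sketch the feature-search time stays flat as the display grows, while the average conjunction-search time rises with each added item, which is the qualitative pattern the text describes.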

Notice, then, that attention is crucial for another issue: the binding problem. This is, you’ll recall, the problem of figuring out which elements in the stimulus information belong with which. Several lines of evidence confirm a role for attention in achieving this “binding.” In some studies, for example, people have been shown brief displays while they’re thinking about something other than the display. Thus, the research participants might be quickly shown a red F and a green X while they’re also trying to remember a short list of numbers they’d heard just a moment before. In this situation, the participants are likely to have no difficulty perceiving the features in the visual display, so they’ll know that they saw something red and something green, and they may also know they saw an F and an X. In a fair number of trials, though, the participants will be confused about how these various aspects of the display were bundled together, so they may end up reporting illusory conjunctions, such as having seen a green F and a red X. Notice, therefore, that simply detecting features doesn’t require the participants’ attention and so goes forward smoothly despite the distracter task. This finding is consistent with the visual search results as well as our earlier comments about the key role of feature detection in object recognition. But, in clear contrast, the combining of features into organized wholes does require attention, and this process suffers when the participant is somehow distracted.

Related evidence comes from individuals who suffer from severe attention deficits because of damage in the parietal cortex. These individuals can do visual search tasks if the target is defined by a single feature, but they’re deeply impaired if the task requires them to judge how features are conjoined to form complex objects (Cohen & Rafal, 1991; Eglin, L. Robertson, & Knight, 1989; L. Robertson, Treisman, Friedman-Hill, & Grabowecky, 1997).

But what exactly does it mean to “focus attention” on a stimulus or to “shine a mental spotlight” on a particular input? How do we achieve this selection? The answer involves priming, a warming up of certain detectors so they’re better prepared to respond than they otherwise would be. Priming can be produced in two ways: First, exposure to a stimulus can cause “data-driven” priming. If you’ve recently seen a red H, or a picture of Moses, this experience has primed the relevant detectors; the result is that, the next time you encounter these stimuli, you’ll be more efficient in perceiving them. But there’s another way priming can occur, and it’s based on expectations rather than recently viewed stimuli. For example, if the circumstances lead you to expect that the word CAT is about to be presented, you can prime the appropriate detectors. This top-down priming will then help if your expectations turn out to be correct. When the input arrives, you’ll process it more efficiently because the relevant detectors are already warmed up.

Evidence suggests that the top-down priming we just described (dependent on expectations rather than recent exposure) draws on some sort of mental resources, and these resources are in limited supply. So if you expect to see the word CAT, you’ll prime the relevant detectors, but this will force you to take resources away from other detectors. As a result, the priming is selective. If you expect to see CAT, you’ll be well prepared for this stimulus but less prepared for anything else. Imagine, therefore, that you expect to see CAT but are shown TREE instead. In this case, you’ll process the input less efficiently than you would if you had no expectations at all. Indeed, if the unexpected stimulus is weak (perhaps flashed briefly or only on a dimly lit screen), then it may not trigger any response. In this way, priming can help spare us from distraction by selectively helping us perceive expected stimuli but simultaneously hindering our perception of anything else.

In the example just considered, priming prepared the perceiver for a particular stimulus: the word CAT. But priming can also prepare you for a broad class of stimuli, for example, preparing you for any stimulus that appears in a particular location. Thus, you can pay attention to the top-left corner of a computer screen by priming just those detectors that respond to that spatial region. This step will make you more responsive to any stimulus arriving in that corner of the screen, but it will also take resources away from other detectors, making you less responsive to stimuli that appear elsewhere.

In fact, this process of pointing attention at a specific location can be demonstrated directly. In a typical experiment, participants are asked to point their eyes at a dot on a computer screen (e.g., Wright & Ward, 2008). A moment later, an arrow appears for an instant in place of the dot and points either left or right. Then, a fraction of a second later, the stimulus is presented. If it’s presented at the place where the arrow pointed, the participants respond more quickly than they do without the prime. If the stimulus appears in a different location, so that the arrow prime was actually misleading, participants respond more slowly than they do with no prime at all. Clearly, the prime influences how the participants allocate their processing resources.
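The benefit of a correct prime and the cost of a misleading one can be illustrated with a small resource-allocation sketch. It assumes, purely for illustration, that a fixed pool of processing resources is divided among locations, that a prime pulls a larger share toward the cued location, and that response time falls linearly as more resources sit at the location where the stimulus appears. The functions and the numbers (`cue_share`, `base_ms`, `scale_ms`) are hypothetical and are not taken from the experiments cited here.

```python
def allocate_resources(locations, cued=None, total=1.0, cue_share=0.7):
    """Split a fixed resource pool across locations.

    With no cue, every location gets an equal share. With a cue,
    the cued location gets cue_share of the pool and the rest is
    divided equally among the other locations.
    """
    if cued is None:
        return {loc: total / len(locations) for loc in locations}
    others = [loc for loc in locations if loc != cued]
    if not others:
        return {cued: total}
    alloc = {loc: total * (1.0 - cue_share) / len(others) for loc in others}
    alloc[cued] = total * cue_share
    return alloc


def response_time(resource, base_ms=300.0, scale_ms=100.0):
    """Hypothetical rule: more resource at the stimulus location
    gives a faster response."""
    return base_ms + scale_ms * (1.0 - resource)


if __name__ == "__main__":
    locations = ["left", "right"]
    no_prime = allocate_resources(locations)
    primed_left = allocate_resources(locations, cued="left")
    print("no prime:        ", response_time(no_prime["left"]))
    print("correct prime:   ", response_time(primed_left["left"]))
    print("misleading prime:", response_time(primed_left["right"]))
```

Because the pool is fixed, whatever is added at the primed location is taken from the others, so the sketch gives faster responses after a correct prime and slower responses after a misleading one, relative to no prime.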

It’s important to realize that this spatial priming is not simply a matter of cuing eye movements. In most studies the interval between the appearance of the prime and the arrival of the target is too short to permit a voluntary eye movement. But even so, when the arrow isn’t misleading, it makes the task easier. Evidently, priming affects an internal selection process: it’s as if your mind’s eye moves even though the eyes in your head are stationary.

Perception in the Absence of Attention

As we’ve just seen, attention seems to do several things for us. It orients us toward the stimulus so that we can gain more information. It helps bind the input’s features together so that we can perceive a coherent object. And it primes us so that we can perceive more efficiently and so that, to some extent, we’re sheltered from unwanted distraction.

If attention is this important and has so many effects, then we might expect that the ability to perceive would be seriously compromised in the absence of attention. Recent studies indicate that this expectation is correct, and they document some remarkable situations in which people fail to perceive prominent stimuli directly in front of their eyes.

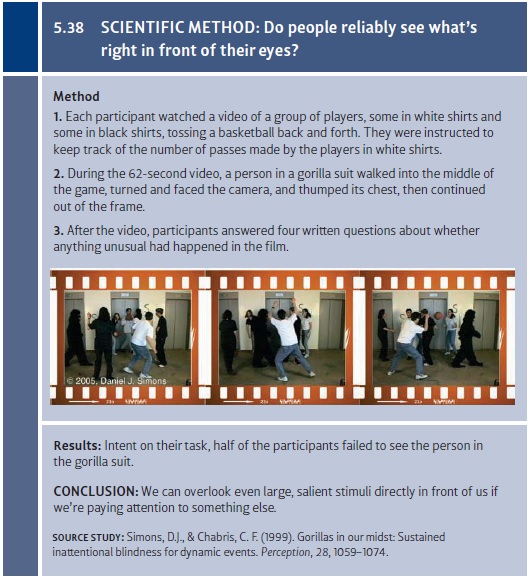

In one study, participants watched a video showing one group of players, dressed in white shirts, tossing a ball back and forth. Interspersed with these white-shirted players, and visible in the same video, a different group of players, in black shirts, was also tossing a ball. But when participants were focusing on the white-shirted players, that was all they noticed. They were oblivious to what the black-shirted players were doing, even though they were looking right at them. Indeed, in one experiment, the participants failed to notice when someone wearing a gorilla suit strolled right through the scene and even paused briefly in the middle of the scene to thump on his chest (Figure 5.38; Neisser & Becklen, 1975; Simons & Chabris, 1999).

In a related study, participants were asked to stare at a dot in the middle of a computer screen while trying to make judgments about stimuli presented just a bit off of their line of view. During the moments when the to-be-judged stimulus was on the screen, the dot at which the participants were staring changed momentarily to a triangle and then back to a dot. When asked about this event a few seconds later, though, the participants insisted that they’d seen no change in the dot. When given a choice about whether the dot had changed into a triangle, a plus sign, a circle, or a square, they chose randomly. Apparently, with their attention directed elsewhere, the participants were essentially “blind” to a stimulus that had appeared right in front of their eyes (Mack, 2003; Mack & Rock, 1998; also see Rensink, 2002; Simons, 2000; Vitevitch, 2003).
