Chapter: Psychology: Social Psychology

Social Influence: Obedience

Obedience

Conformity is one way that other people influence us. Another way is obedience, when people change their behavior because someone tells them to. A certain degree of obedience is a necessary ingredient of social life. After all, in any society some individuals need to have authority over others, at least within a limited sphere. Someone needs to direct traffic; someone needs to tell people when they should put their garbage out at the curb for collection; someone needs to instruct children and get them to do their homework (Figure 13.16). But obedience can also lead people to violate their own principles and do things they previously felt they should not do. The atrocities of the last 100 years (the Nazi death camps, the Soviet “purges,” the Cambodian massacres, the Rwandan and Sudanese genocides) give terrible proof that the disposition to obedience can become a corrosive poison.

Why were people so obedient in these situations? Psychologists trying to answer this question have adopted two different approaches. One is based on the intuitively appealing notion that some individuals are more obedient than others and that these individuals are the primary culprits. The other emphasizes the social situation in which the obedient person finds herself.

PERSONALITY AND OBEDIENCE

A half century ago, investigators proposed that people with authoritarian personalities were the most likely to be highly obedient. Such people show a cluster of traits related to their obedience: they are prejudiced against various minority groups and hold certain sentiments about authority, including a general belief that the world is best governed by a system of power and dominance in which each of us must submit to those above us and show harshness to those below. These authoritarian attitudes can be revealed (and measured) by a test in which people express how much they agree with statements such as “Obedience and respect for authority are the most important virtues children should learn” and “People can be divided into two distinct classes: the weak and the strong” (Adorno et al., 1950).

Contemporary researchers have broadened the conception of the authoritarian personality by analyzing the motivational basis for conservative ideology (Jost, Nosek, & Gosling, 2008), building on the supposition that this ideology, like any belief system, serves the psychological needs of the people who hold these beliefs. In particular, the motivated social cognition perspective maintains that people respond to threat and uncertainty by expressing beliefs that help them to manage their concerns. Evidence supporting this perspective has come from studies showing that political conservatism is positively related to a concern with societal instability and death, a need for order and structure, and an intolerance of ambiguity (e.g., Jost et al., 2003).

SITUATIONS AND OBEDIENCE

A very different approach to obedience is suggested by an often-quoted account of the trial of Adolf Eichmann, the man who oversaw the execution of 6 million Jews and other minorities in the Nazi gas chambers. In describing Eichmann, political theorist Hannah Arendt noted a certain “banality of evil”: “The trouble with Eichmann was precisely that so many were like him, and that the many were neither perverted nor sadistic, that they were, and still are, terribly and terrifyingly normal”.

What could have led a “normal” man like Eichmann to commit such atrocities? The answer probably lies in the situation in which Eichmann found himself, one that encouraged horrible deeds and powerfully discouraged more humane courses of action. But this answer simply raises a new set of questions: What makes a situation influential? And how coercive does a situation have to be in order to elicit monstrous acts? Stanley Milgram explored these questions in a series of experiments that are perhaps the best-known studies in all of social psychology (Milgram, 1963). In these studies, Milgram recruited his participants from the local population surrounding Yale University in Connecticut via a newspaper advertisement offering $4.50 per hour to people willing to participate in a study of how punishment affected human learning.

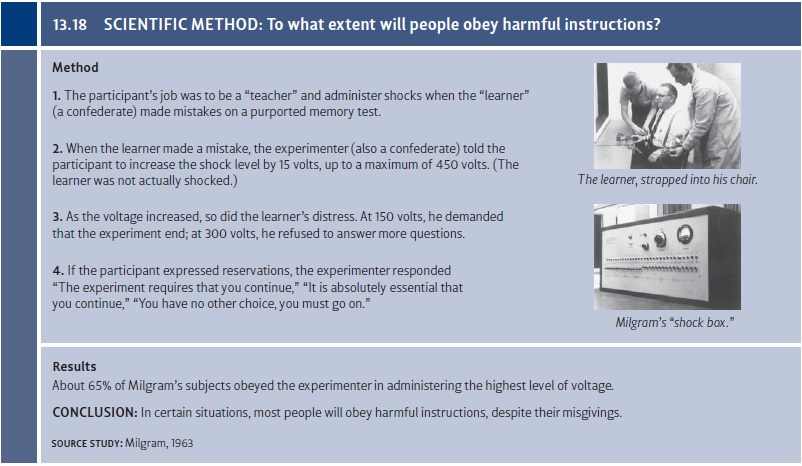

Participants in this experiment were asked to serve as “teachers,” and their job was to read out the cue word for each trial, to record the “learner’s” spoken answer, and, most important, to administer punishment in the form of an electric shock whenever a learner answered incorrectly (Figure 13.18). The first shock was slight. Each time the learner made a mistake, though, the teacher was required to increase the voltage by one step, proceeding through a series of switches on a “shock generator” with labels ranging from “Slight Shock” through “Danger: Severe Shock” to a final, undefined “XXX.”

In truth, there was no real “learner.” Instead, a middle-aged actor who was a confederate of the experimenter pretended, at the experiment’s start, to be the learner and then retreated into a separate room, with all subsequent communication between teacher and learner conducted over an intercom. And, in fact, the “shock generator” didn’t deliver punishment. The point of the experiment was not to study punishment and learning at all; that was just a cover story. It was actually to determine how far the participants would go in obeying the experimenter’s instructions.

Within the procedure, the learner made a fair number of (scripted) errors, and so the shocks that the participant delivered kept getting stronger and stronger. By the time 120 volts was reached, the learner shouted that the shocks were becoming too painful. At 150 volts, he demanded that he be let out of the experiment. At 180 volts, he cried out that he could no longer stand the pain, sometimes yelling, “My heart, my heart!” At 300 volts, he screamed, “Get me out of here!” and said he would not answer anymore. At the next few shocks, there were agonized screams. After 330 volts, there was silence.

The learner’s responses were all predetermined, but the participants (the teachers) did not know that, so they had to decide what to do. When the learner cried out in pain or refused to go on, the participants usually turned to the experimenter for instructions, a form of social referencing. In response, the experimenter told the participants that the experiment had to go on, indicated that he took full responsibility, and pointed out that “the shocks may be painful but there is no permanent tissue damage.”

How far did subjects go in obeying the experimenter? The results were astounding: about 65% of Milgram’s subjects, both males and females, obeyed the experimenter to the bitter end. Of course, many of the participants showed signs of being enormously upset by the procedure: they bit their lips, twisted their hands, sweated profusely, and in some cases laughed nervously. Nonetheless, they did obey and, in the process, apparently delivered lethal shocks to another human being. (See Burger, 2009, for a contemporary replication that showed obedience rates comparable to those in the initial Milgram reports. For similar data when the study was repeated in countries such as Australia, Germany, and Jordan, see Kilham & Mann, 1974; Mantell & Panzarella, 1976; Shanab & Yahya, 1977.)

These are striking data, suggesting that it takes remarkably little within a situation to produce truly monstrous acts. But we should also note the profound ethical questions raised by this study. Milgram’s participants were of course fully debriefed at the study’s end, and so they knew they had done no damage to the “learner” and had inflicted no pain. But the participants also knew that they had obeyed the researcher and had (apparently) been willing to hurt someone, perhaps quite seriously. We therefore need to ask whether the scientific gain from this study is worth the cost, including the horrible self-knowledge the study brought to the participants, or the stress they experienced. This question has been hotly debated, but the debate doesn’t take away from the main message of the data: it takes very little to get people to obey extreme and inhuman commands.

Why were Milgram’s participants so obedient? Part of the answer may lie in how each of us thinks about commands and authority. In essence, when we are following other people’s orders, we feel that it is they, and not we, who are in control; they, and not we, who are responsible. The soldier following a general’s order and the employee following the boss’s command may see themselves merely as the agents who execute another’s will: the hammer that strikes the nail, not the carpenter who wields it. As such, they feel absolved of responsibility and, if the consequences are bad, absolved of guilt.

This feeling of being another person’s instrument, with little or no sense of personal responsibility, can be promoted in various ways. One way is by increasing the psychological distance between a person’s actions and their end result. To explore this possibility, Milgram ran a variation of his procedure in which two “teachers” were involved: one, a confederate, administered the shocks, and the other (actually the real participant) had to perform subsidiary tasks such as reading the words over a microphone and recording the learner’s responses. In this new role, the participant was still an essential part of the experiment, because if he stopped, the learner would receive no further shocks. In this variation, though, the participant was more removed from the impact of his actions, like a minor cog in a bureaucratic machine. After all, he did not do the actual shocking! Under these conditions, over 90% of the participants went to the limit, continuing with the procedure even at the highest level of shock (Milgram, 1963, 1965; see also Kilham & Mann, 1974).

The obedient person also may reinterpret the situation to diminish any sense of culpability. One common approach is to try to ignore the fact that the recipient of one’s actions is a fellow human being. According to one of Milgram’s participants, “You really begin to forget that there’s a guy out there, even though you can hear him. For a long time I just concentrated on pressing the switches and reading the words”. This dehumanization of the victim allows the obedient person to think of the recipient of his actions as an object, not a person, reducing (and perhaps eliminating) any sense of guilt at harming another individual (Bernard, Ottenberg, & Redl, 1965; Goff, Eberhardt, Williams, & Jackson, 2008).

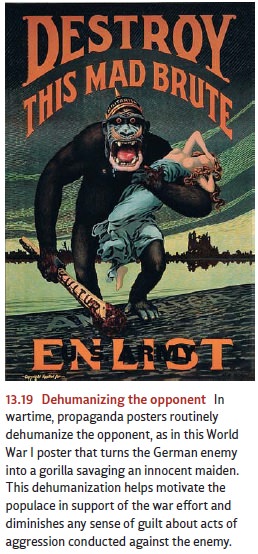

The dehumanization of the victim in Milgram’s study has a clear parallel outside the laboratory. Enemies in war and victims of atrocities are rarely described as people, but instead are referred to as bodies, objects, pests, and numbers (Figure 13.19). This dehumanization is propped up by euphemistic jargon. The Nazis used terms such as “final solution” (for the mass murder of 6 million people) and “special treatment” (for death by gassing); the nuclear age contributed “fallout problem” and “preemptive attack”; the Vietnam War gave us “free-fire zone” and “body count”; other wars gave “ethnic cleansing” and “collateral damage.” All are dry, official phrases that keep the thoughts of blood and human suffering at a psychologically safe distance.

Thus, people obeying morally questionable orders can rely on two different strategies to let themselves off the moral hook: a cognitive reorientation aimed at holding another person responsible for one’s own actions, or a shift toward perceiving the victim as an object, not a person. It is important to realize that neither of these intellectual adjustments happens in an instant. Instead, inculcation is gradual, making it important that the initial act of obedience be relatively mild and not seriously clash with the person’s own moral outlook. But after that first step, each successive step can be slightly more extreme. In Milgram’s study, this pattern created a slippery slope that participants slid down unawares. A similar program of progressive escalation was used in the indoctrination of death-camp guards in Nazi Germany and prison-camp guards in Somalia. The same is true for the training of soldiers everywhere. Draftees go through “basic training,” in part to learn various military skills but much more importantly to acquire the habit of instant obedience. Raw recruits are rarely asked to point their guns at another person and shoot. It’s not only that they don’t know how; it’s that most of them probably wouldn’t do it.
