Chapter: Fundamentals of Database Systems : Query Processing and Optimization, and Database Tuning : Algorithms for Query Processing and Optimization

Algorithms for External Sorting

Algorithms

for External Sorting

Sorting is one of the primary algorithms used

in query processing. For example, whenever an SQL query specifies an ORDER BY-clause, the query result must be sorted. Sorting is also a key

component in sort-merge algorithms used for JOIN and other operations (such as UNION and INTERSECTION), and in duplicate elimination algorithms for

the PROJECT operation (when an SQL query specifies the DISTINCT option in the SELECT clause). We will discuss one of these

algorithms in this section. Note that sorting of a particular file may be

avoided if an appropriate index— such as a primary or clustering index (see

Chapter 18)—exists on the desired file attribute to allow ordered access to the

records of the file.

External

sorting refers to sorting algorithms

that are suitable for large files of records

stored on disk that do not fit entirely in main memory, such as most data-base

files. The typical external sorting algorithm uses a sort-merge strategy, which starts by

sorting small subfiles—called runs—of

the main file and then merges the sorted runs, creating larger sorted subfiles

that are merged in turn. The sort-merge algorithm, like other database

algorithms, requires buffer space in

main memory, where the actual sorting and merging of the runs is performed. The

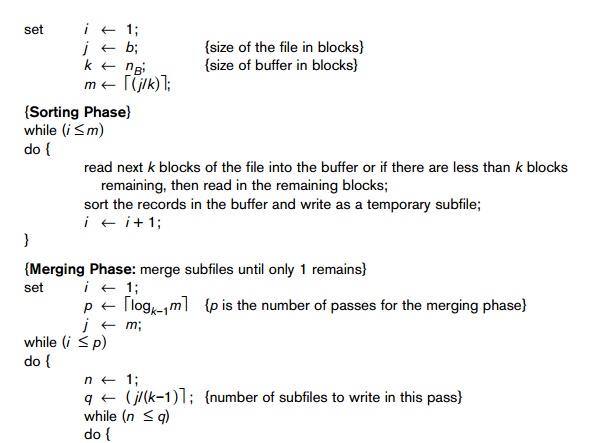

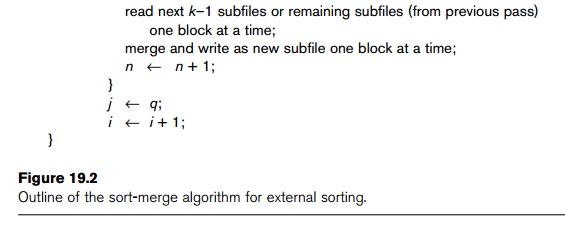

basic algorithm, outlined in Figure 19.2, consists of two phases: the sorting

phase and the merging phase. The buffer space in main memory is part of the DBMS cache—an area in the computer’s

main memory that is controlled by the DBMS. The buffer space is divided into

individual buffers, where each buffer

is the same size in bytes as the size of one disk block. Thus, one buffer can

hold the contents of exactly one disk

block.

In the sorting

phase, runs (portions or pieces) of the file that can fit in the available

buffer space are read into main memory, sorted using an internal sorting algorithm, and written back to disk as temporary

sorted subfiles (or runs). The size of each run and the number of initial runs (nR)

are dictated by the number of file

blocks (b) and the available buffer space (nB). For example, if the

number of available main memory buffers nB

= 5 disk blocks and the size of the file b

= 1024 disk blocks, then nR=

(b/nB) or 205 initial runs each of size 5 blocks (except

the last run which will have only 4

blocks). Hence, after the sorting phase, 205 sorted runs (or 205 sorted

subfiles of the original file) are stored as temporary subfiles on disk.

In the merging

phase, the sorted runs are merged during one or more merge passes. Each merge

pass can have one or more merge steps. The

degree of merging (dM) is

the number of sorted subfiles that can be merged in each merge step. During each merge step, one buffer block is

needed to hold one disk block from each of the sorted subfiles being merged,

and one additional buffer is needed for containing one disk block of the merge

result, which will produce a larger sorted file that is the result of merging

several smaller sorted subfiles. Hence, dM

is the smaller of (nB − 1) and nR,

and the number of merge passes is (logdM(nR)) . In our example where nB = 5, dM = 4 (four-way merging), so the 205 initial sorted

runs would be merged 4 at a time in each step into 52 larger sorted subfiles at

the end of the first merge pass. These 52 sorted files are then merged 4 at a

time into 13 sorted files, which are then merged into 4 sorted files, and then

finally into 1 fully sorted file, which means that four passes are needed.

The performance of the sort-merge algorithm can

be measured in the number of disk block reads and writes (between the disk and main

memory) before the sorting of the whole file is completed. The following

formula approximates this cost:

(2 * b) + (2 * b *

(logdM nR))

The first term (2 * b) represents the number of block

accesses for the sorting phase, since each file block is accessed twice: once

for reading into a main memory buffer and once for writing the sorted records

back to disk into one of the sorted subfiles. The second term represents the

number of block accesses for the merging phase. During each merge pass, a

number of disk blocks approximately equal to the original file blocks b is read and written. Since the number

of merge passes is (logdM nR), we get the total merge

cost of (2 * b *

(logdM nR)).

The minimum number of main memory buffers

needed is nB = 3, which

gives a dM of 2 and an nR of (b/3) . The minimum dM of 2 gives the worst-case

performance of the algorithm, which is:

(2 * b) + (2 * (b *

(log2 nR))).

The following sections discuss the various

algorithms for the operations of the relational algebra (see Chapter 6).

Related Topics