Chapter: Compilers : Principles, Techniques, & Tools : Optimizing for Parallelism and Locality

Other Uses of Affine Transforms

Other Uses of Affine

Transforms

1 Distributed memory machines

2 Multi-Instruction-Issue Processors

3 Vector and SIMD Instructions

4 Prefetching

So far we have focused on the

architecture of shared memory machines, but the theory of affine loop

transforms has many other applications. We can ap-ply affine transforms to

other forms of parallelism including distributed memory

machines, vector instructions,

SIMD (Single Instruction Multiple Data) instruc-tions, as well as

multiple-instruction-issue machines. The reuse analysis intro-duced in this

chapter also is useful for data prefetching, which is an effective

technique for improving memory performance.

1. Distributed Memory Machines

For distributed memory machines, where processors communicate by

sending messages to each other, it is even more important that processors be

assigned large, independent units of computation, such as those generated by

the affine-partitioning algorithm. Besides computation partitioning, a number

of addi-tional compilation issues remain:

1.

Data

allocation. If processors use

different portions of an array, they each only have to allocate enough

space to hold the portion used. We can use projection to determine the section

of arrays used by each processor. The input is the system of linear

inequalities representing the loop bounds, the

array access functions, and the affine partitions that map the

iterations to processor IDs. We project away the loop indices and find for each

processor ID the set of array locations used.

2. Communication code. We need to generate explicit code to

send and receive data to and from other processors. At each synchronization

point

(a)

Determine the

data residing on one processor that is needed by other processors.

(b)

Generate the

code that finds all the data to be sent and packs it into a buffer.

(c)

Similarly,

determine the data needed by the processor, unpack re-ceived messages, and move

the data to the right memory locations.

Again, if all accesses are affine, these tasks can be performed by

the compiler, using the affine framework.

3. Optimization. It is not necessary for all the

communications to take place at the synchronization points. It is preferable

that each processor sends data as soon as it is available, and that each

processor does not start waiting for data until it is needed. Such

optimizations must be balanced by the goal of not generating too many messages,

since there is a nontrivial overhead associated with processing each message.

Techniques described

here have other applications as well. For example, a special-purpose embedded

system may use coprocessors to offload some of its computations. Or, instead of

demand fetching data into the cache, an embedded system may use a separate

controller to load and unload data into and out of the cache, or other data

buffers, while the processor operates on other data. In these cases, similar

techniques can be used to generate the code to move data around.

2. Multi-Instruction-Issue Processors

We can also use affine loop transforms to optimize the performance

of multi-instruction-issue machines. As discussed in Chapter 10.5, the

performance of a software-pipelined loop is limited by two factors: cycles in

precedence con-straints and the usage of the critical resource. By changing the

makeup of the innermost loop, we can improve these limits.

First, we may be able to use loop transforms to create innermost

paralleliz-able loops, thus eliminating precedence cycles altogether. Suppose a

program has two loops, with the outer being parallelizable and the inner not.

We can permute the two loops to make the inner loop parallelizable and so

create more opportunities for instruction-level parallelism. Notice that it is

not necessary for iterations in the innermost loop to be completely

parallelizable. It is suffi-cient that the cycle of dependences in the loop be

short enough so that all the hardware resources are fully utilized.

We can also relax the limit due to resource usage by improving the

usage balance inside a loop. Suppose one loop only uses the adder, and another

uses only the multiplier. Or, suppose one loop is memory bound and another is

compute bound. It is desirable to fuse each pair of loops in these examples

together so as to utilize all the functional units at the same time.

3.

Vector and SIMD Instructions

Besides multiple-instruction issue, there are two other important

forms of in-struction-level parallelism: vector and SIMD operations. In both

cases, the issue of just one instruction causes the same operation to be

applied to a vector of data.

As mentioned previously, many early supercomputers used vector

instruc-tions. Vector operations are performed in a pipelined manner; the

elements in the vector are fetched serially and computations on different

elements are overlapped. In advanced vector machines, vector operations can be chained:

as the elements of the vector results are produced, they are immediately

con-sumed by operations of another vector instruction without having to wait

for all the results to be ready. Moreover, in advanced machines with scatter/gather

hardware, the elements of the vectors need not be contiguous; an index vector

is used to specify where the elements are located.

SIMD instructions specify that the same operation be performed on

contigu-ous memory locations. These instructions load data from memory in

parallel, store them in wide registers, and compute on them using parallel

hardware. Many media, graphics, and digital-signal-processing applications can

benefit from these operations. Low-end media processors can achieve

instruction-level parallelism simply by issuing one SIMD instruction at a time.

Higher-end pro-cessors can combine SIMD with multiple-instruction issue to

achieve higher performance.

SIMD and vector instruction generation share many similarities

with locality optimization. As we find independent partitions that operate on

contiguous memory locations, we stripmine those iterations and interleave these

operations in innermost loops.

SIMD instruction generation poses two additional difficulties.

First, some machines require that the SIMD data fetched from memory be aligned.

For example, they might require that 25,6-byte SIMD operands be placed in

ad-dresses that are multiples of 256. If the sdurce loop operates on just one

array of data, we can generate one main loop that operates on aligned data and

ex-tra code before and after the loop to handle those elements at the boundary.

For loops operating on more than one array, however, it may not be possible to

align all the data at the same time. Second, data used by consecutive

it-erations in a loop may not be contiguous. Examples include many important

digital-signal-processing algorithms, such as Viterbi decoders and fast Fourier

transforms. Additional operations to shuffle the data around may be necessary

to take advantage of the SIMD instructions.

4.Prefetching

No data-locality optimization can eliminate all memory accesses;

for one, data used for the first time must be fetched from memory. To hide the

latency of memory operations, prefetch instructions have been adopted in

many high-performance processors. Prefetch is a machine instruction that

indicates to the processor that certain data is likely to be used soon, and

that it is desirable to load the data into the cache if it is not present

already.

The reuse analysis

described in Section 11.5 can be used to estimate when caches misses are

likely. There are two important considerations when gener-ating prefetch

instructions. If contiguous memory locations are to be accessed, we need to

issue only one prefetch instruction for each cache line. Prefetch instructions

should be issued early enough so that the data is in the cache by the time it

are used. However, we should not issue prefetch instructions too far in

advance. The prefetch instructions can displace data that may still be needed;

also the prefetched data may be flushed before it is used.

Suppose the target machine has a prefetch instruction that can

fetch two words of data at a time, and that the latency of a prefetch

instruction takes about the time to execute six iterations of the loop above.

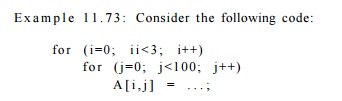

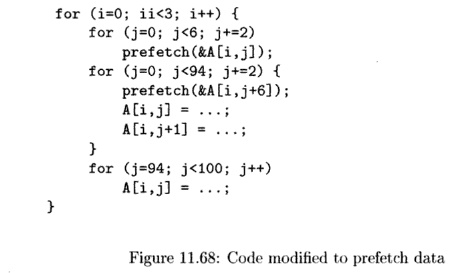

The prefetch code for the above example is shown in Fig. 11.68.

We unroll the innermost loop twice, so a prefetch can be issued for each cache line. We use the concept of software pipelining to prefetch data six iterations before it is used. The prolog fetches the data used in the first six iterations. The steady state loop prefetches six iterations ahead as it performs its computation. The epilog issues no prefetches, but simply executes the remaining iterations.

Summary of Chapter 11

• Parallelism and

Locality from Arrays. The most important opportunities

for both parallelism and locality-based optimizations come from loops that

access arrays. These loops tend to have limited dependences among accesses to

array elements and tend to access arrays in a regular pattern, allowing

efficient use of the cache for good locality.

• Affine Accesses. Almost all theory and techniques for parallelism and locality

optimization assume accesses to arrays are affine: the expressions for the

array indexes are linear functions of the loop indexes.

Reration Spaces. A loop nest with d

nested loops defines a d-dimensional iteration space. The points in the space

are the d-tuples of values that the loop indexes can assume during the

execution of the loop nest. In the affine case, the limits on each loop index

are linear functions of the outer loop indexes, so the iteration space is a

polyhedron.

• Fourier-Motzkin Elimination. A key manipulation of

iteration spaces is to reorder the loops that define the iteration space. Doing

so requires that a polyhedral iteration space be projected onto a subset of its

dimensions. The Fourier-Motzkin algorithm replaces the upper and lower limits

on a given variable by inequalities between the limits themselves.

• Data Dependences and Array Accesses. A central

problem we must solve in order to manipulate loops for parallelism and locality

optimizations is whether two array accesses have a data dependence (can touch

the same array element). When the accesses and loop bounds are affine, the

problem can be expressed as whether there are solutions to a matrix-vector

equation within the polyhedron that defines the iteration space.

• Matrix Rank and Data Reuse. The matrix that

describes an array access can tell us several important things about that

access. If the rank of the matrix is as large as possible (minimum of the

number of rows and number of columns), then the access never touches the same

element twice as the loops iterate. If the array is stored in row-

(column-)major form, then the rank of the matrix with the last (first) row deleted

tells us whether the access has good locality; i.e., elements in a single cache

line are accessed at about the same time.

• Data Dependence and Diophantine Equations. Just

because two accesses to the same array touch the same region of the array does not

mean that they actually access any element in common. The reason is that each

may skip some elements; e.g., one accesses even elements and the other accesses

odd elements. In order to be sure that there is a data dependence, we must

solve a Diophantine (integer solutions only) equation.

Solving

Diophantine Linear Equations. The key technique is to compute the greatest

common divisor (GCD) of the coefficients of the variables. Only if that GCD

divides the constant term will there be integer solutions.

• Space-Partition Constraints. To parallelize the

execution of a loop nest, we need to map the iterations of the loop to a space

of processors, which can have one or more dimensions. The space-partition

constraints say that if two accesses in two different iterations share a data

dependence (i.e., they access the same array element), then they must map to

the same processor. As long as the mapping of iterations to processors is

affine, we can formulate the problem in matrix-vector terms.

• Primitive Code Transformations.

The transformations used to parallelize programs with affine

array accesses are combinations of seven primitives: loop fusion, loop fission,

re-indexing (adding a constant to loop indexes), scaling (multiplying loop

indexes by a constant), reversal (of a loop index), permutation (of the order

of loops), and skewing (rewriting loops so the line of passage through the

iteration space is no longer along one of the axes).

• Synchronization of

Parallel Operations. Sometimes more parallelism can be

obtained if we insert synchronization operations between steps of a program.

For example, consecutive loop nests may have data depen-dences, but

synchronizations between the loops can allow the loops to be parallelized

separately.

• Pipelining. This parallelization technique allows processors to share data,

by synchronously passing certain data (typically array elements) from one

processor to an adjacent processor in the processor space. The method can

improve the locality of the data accessed by each processor.

Time-Partition

Constraints. To discover opportunities for pipelining,

we need to discover solutions to

the time-partition constraints. These

say that whenever two array accesses can

touch the same array element, then the

access in the iteration that occurs first must be assigned to a stage in the

pipeline that occurs no later than the stage to which the second access is

assigned.

Solving

Time-Partition Constraints. Farkas'

Lemma provides a powerful

technique for finding all the affine time-partition mappings that are allowed by a given loop nest with array

accesses. The technique is es- sentially

to replace the primal formulation of the linear inequalities that express the

time-partition constraints by their dual.

• Blocking. This technique breaks each of several loops in a loop nest into

two loops each. The advantage is that doing so may allow us to work on

small sections (blocks) of a multidimensional array, one block at a time. That,

in turn, improves the locality of the program, letting all the needed data

reside in the cache while working on a single block.

• Stripmining. Similar to blocking, this technique breaks only a subset of the

loops of a loop nest into two loops each. A possible advantage is that a

multidimensional array is accessed a "strip" at a time, which may

lead to the best possible cache utilization.

Related Topics