Chapter: Communication Theory : Information Theory

Discrete Memoryless Channel

DISCRETE MEMORYLESS CHANNEL:

· Transmission rate over a noisy channel
    - Repetition code
    - Transmission rate

· Capacity of DMC
    - Capacity of a noisy channel
    - Examples

- All the transition probabilities from $x_i$ to $y_j$ are gathered in a transition matrix.
- The $(i, j)$ entry of the matrix is $P(Y = y_j \mid X = x_i)$, which is called the forward transition probability.
- In a DMC, the output of the channel depends only on the input of the channel at the same instant, not on the inputs before or after.
- The input of a DMC is a random variable (RV) $X$ that takes its value from a finite discrete set $\mathcal{X}$.
- The cardinality of $\mathcal{X}$ is the number of points in the constellation used.
- In an ideal channel, the output is equal to the input.
- In a non-ideal channel, the output can differ from the input with a given probability.
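As a minimal sketch of the transition matrix described above, the following Python snippet stores a hypothetical 2-input, 3-output DMC as a list of rows and derives the output distribution from it. The probability values and the function names are illustrative assumptions, not taken from the text.

```python
# Hypothetical DMC with 2 inputs and 3 outputs (illustrative values only).
# Row i holds the forward transition probabilities P(Y = y_j | X = x_i).
P = [
    [0.8, 0.1, 0.1],  # input x_0
    [0.1, 0.1, 0.8],  # input x_1
]


def is_valid_transition_matrix(matrix, tol=1e-9):
    """Each row must be a probability distribution over the outputs."""
    return all(
        all(p >= 0 for p in row) and abs(sum(row) - 1.0) <= tol
        for row in matrix
    )


def output_distribution(input_probs, matrix):
    """P(Y = y_j) = sum_i P(X = x_i) * P(Y = y_j | X = x_i)."""
    n_out = len(matrix[0])
    return [
        sum(input_probs[i] * matrix[i][j] for i in range(len(matrix)))
        for j in range(n_out)
    ]


p_y = output_distribution([0.5, 0.5], P)
print(is_valid_transition_matrix(P), p_y)
```

Storing one row per input symbol keeps the check "every row sums to 1" a direct statement that some output always occurs for each input.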

· Transmission rate:

- $H(X)$ is the amount of information per symbol at the input of the channel.
- $H(Y)$ is the amount of information per symbol at the output of the channel.
- $H(X \mid Y)$ is the amount of uncertainty remaining about $X$ when $Y$ is known.
- The information transmitted over the channel is $I(X;Y) = H(X) - H(X \mid Y)$ bits per channel use.
- For an ideal channel, $X = Y$, so there is no uncertainty about $X$ once $Y$ is observed. All the information is transmitted on each channel use: $I(X;Y) = H(X)$.
- If the channel is so noisy that $X$ and $Y$ are independent, the uncertainty about $X$ is the same whether or not $Y$ is known, i.e. no information passes through the channel: $I(X;Y) = 0$.
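The two limiting cases above can be checked numerically. The sketch below computes $I(X;Y) = H(X) - H(X \mid Y)$ from an input distribution and a transition matrix; the function names and the two example matrices are assumptions made for illustration.

```python
import math


def entropy(probs):
    """H in bits; terms with zero probability contribute nothing."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


def mutual_information(input_probs, matrix):
    """I(X;Y) = H(X) - H(X|Y), with matrix[i][j] = P(Y = y_j | X = x_i)."""
    n_in, n_out = len(matrix), len(matrix[0])
    joint = [
        [input_probs[i] * matrix[i][j] for j in range(n_out)]
        for i in range(n_in)
    ]
    p_y = [sum(joint[i][j] for i in range(n_in)) for j in range(n_out)]
    # H(X|Y) = -sum_{i,j} P(x_i, y_j) * log2 P(x_i | y_j)
    h_x_given_y = 0.0
    for i in range(n_in):
        for j in range(n_out):
            if joint[i][j] > 0:
                h_x_given_y -= joint[i][j] * math.log2(joint[i][j] / p_y[j])
    return entropy(input_probs) - h_x_given_y


p_x = [0.5, 0.5]
ideal = [[1.0, 0.0], [0.0, 1.0]]    # output always equals input
useless = [[0.5, 0.5], [0.5, 0.5]]  # output independent of input

i_ideal = mutual_information(p_x, ideal)
i_useless = mutual_information(p_x, useless)
print(i_ideal, i_useless)
```

With equiprobable binary inputs, the ideal channel delivers the full $H(X) = 1$ bit per use and the independent channel delivers none.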

· Hard and soft decision:

- Normally the constellations at the input and at the output have the same size, i.e., $|\mathcal{X}| = |\mathcal{Y}|$.
- In this case the receiver employs hard-decision decoding: the detector makes a firm decision about which symbol was transmitted.
- It is also possible that $|\mathcal{X}| \neq |\mathcal{Y}|$.
- In this case the receiver employs soft-decision decoding.

Channel models and channel capacity:

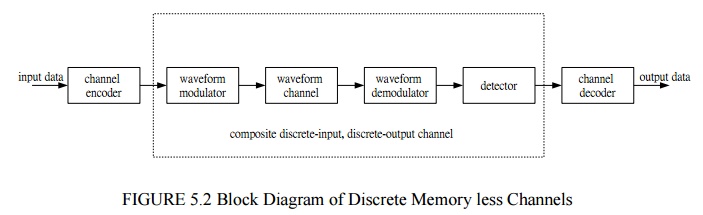

1. The encoding process takes k information bits at a time and maps each k-bit sequence into a unique n-bit sequence. Such an n-bit sequence is called a code word.

2. The code rate is defined as k/n.

3. Suppose the transmitted symbols are M-ary (for example, M levels), and the detector, which follows the demodulator at the receiver, outputs an estimate of the transmitted data symbol with

(a). M levels, the same as the transmitted symbols: then we say the detector has made a hard decision;

(b). Q levels, with Q greater than M: then we say the detector has made a soft decision.
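To make the three points above concrete, here is a small sketch using a repetition code (k = 1, n = 3) and a binary (M = 2) transmission. The mapping of bit 1 to +1 and bit 0 to -1, the uniform Q = 8 quantizer, and all function names are assumptions chosen for illustration.

```python
def repetition_encode(bits, n=3):
    """Map each information bit (k = 1) to an n-bit code word."""
    return [b for b in bits for _ in range(n)]


def code_rate(k, n):
    """Code rate k/n: information bits per transmitted bit."""
    return k / n


def hard_decision(sample):
    """Detector output with M = 2 levels (assumes bit 1 -> +1, bit 0 -> -1)."""
    return 1 if sample >= 0.0 else 0


def soft_decision(sample, levels=8, clip=1.0):
    """Detector output with Q = levels > M levels.

    Assumed uniform quantizer over [-clip, +clip]; returns an index
    in 0..levels-1, where larger values lean more strongly toward bit 1.
    """
    clipped = max(-clip, min(clip, sample))
    step = 2 * clip / levels
    return min(levels - 1, int((clipped + clip) / step))


codeword = repetition_encode([1, 0])
print(codeword, code_rate(1, 3))
print(hard_decision(0.1), soft_decision(0.1))
```

A received sample of 0.1 becomes a plain 1 under hard decision, while the soft decision keeps the fact that it was only barely on the positive side.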

Channel models:

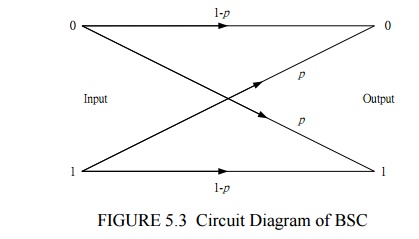

1. Binary symmetric channel (BSC):

Suppose that (a) the channel is an additive noise channel, and (b) the modulator and demodulator/detector are included as parts of the channel. If, furthermore, the modulator employs binary waveforms and the detector makes hard decisions, then the composite channel has a discrete-time binary input sequence and a discrete-time binary output sequence.

If the channel noise and other interference cause statistically independent errors in the transmitted binary sequence with average probability p, the channel is called a BSC. Since each output bit from the channel depends only on the corresponding input bit, the channel is also memoryless.
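A BSC as described above can be simulated in a few lines. This is a minimal sketch: the function name `bsc`, the seed, and the value p = 0.1 are illustrative assumptions.

```python
import random


def bsc(bits, p, rng):
    """Flip each bit independently with crossover probability p."""
    return [b ^ 1 if rng.random() < p else b for b in bits]


rng = random.Random(0)  # fixed seed so the run is repeatable
sent = [rng.randint(0, 1) for _ in range(100_000)]
received = bsc(sent, 0.1, rng)

# The empirical error rate should be close to p for a long sequence.
error_rate = sum(s != r for s, r in zip(sent, received)) / len(sent)
print(error_rate)
```

Each output bit is produced from its own input bit and its own random draw, which is exactly the memoryless property stated above.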

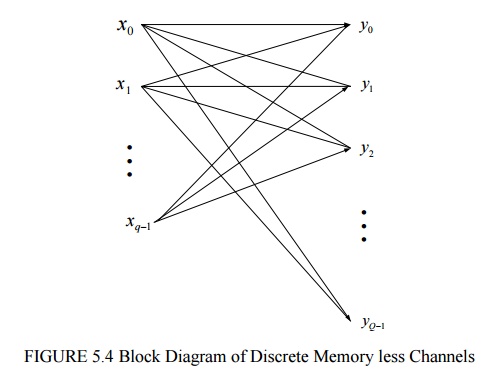

2. Discrete memoryless channels (DMC):

This channel is the same as above, but with q-ary symbols at the output of the channel encoder and Q-ary symbols at the output of the detector, where $Q \ge q$. If the channel and the modulator are memoryless, the channel can be described by a set of $qQ$ conditional probabilities

$$P(Y = y_i \mid X = x_j) \equiv P(y_i \mid x_j), \qquad i = 0, 1, \ldots, Q-1; \quad j = 0, 1, \ldots, q-1.$$

Such a channel is called a discrete memoryless channel (DMC).

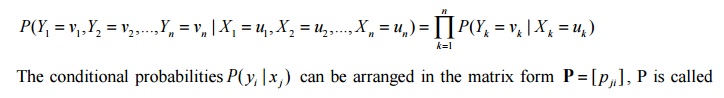

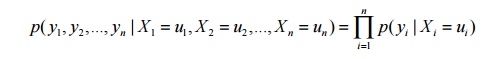

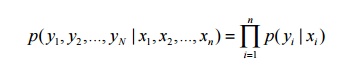

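If the input to a DMC is a sequence of n symbols $u_1, u_2, \ldots, u_n$ selected from the alphabet X, and the corresponding output is the sequence $v_1, v_2, \ldots, v_n$ of symbols from the alphabet Y, then because the channel is memoryless the joint conditional probability factors into per-symbol terms:

$$P(Y_1 = v_1, \ldots, Y_n = v_n \mid X_1 = u_1, \ldots, X_n = u_n) = \prod_{k=1}^{n} P(Y = v_k \mid X = u_k).$$

The conditional probabilities $P(y_i \mid x_j)$ can be arranged in a matrix, called the probability transition matrix for the channel.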

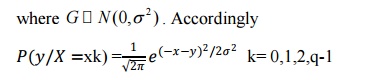
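Because a memoryless channel acts on each symbol separately, the probability of a whole output sequence given an input sequence is a product of single-symbol transition probabilities. The sketch below illustrates this for a hypothetical binary channel with crossover probability 0.1; the function name and the values are assumptions.

```python
import math


def sequence_probability(inputs, outputs, matrix):
    """P(v_1..v_n | u_1..u_n) for a memoryless channel.

    matrix[j][i] = P(y_i | x_j); inputs and outputs are symbol indices.
    """
    return math.prod(matrix[u][v] for u, v in zip(inputs, outputs))


# Hypothetical binary channel with crossover probability 0.1.
channel = [[0.9, 0.1], [0.1, 0.9]]
u = [0, 1, 1, 0]
v = [0, 1, 0, 0]  # one symbol received in error
prob = sequence_probability(u, v, channel)
print(prob)  # 0.9 * 0.9 * 0.1 * 0.9
```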

3. Discrete-input, continuous-output channels:

Suppose the output of the channel encoder has q-ary symbols as above, but the output of the detector is unquantized ($Q = \infty$). The channel is then characterized by the conditional probability density functions

$$p(y \mid X = x_k), \qquad k = 0, 1, 2, \ldots, q-1.$$

The AWGN channel is the most important channel of this type:

$$Y = X + G,$$

where $G$ is the additive Gaussian noise. For any given input sequence $X_i$, $i = 1, 2, \ldots, n$, the corresponding output is

$$Y_i = X_i + G_i, \qquad i = 1, 2, \ldots, n.$$

If, further, the channel is memoryless, then the joint conditional pdf of the detector's output is

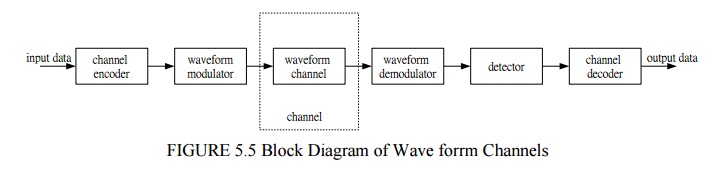

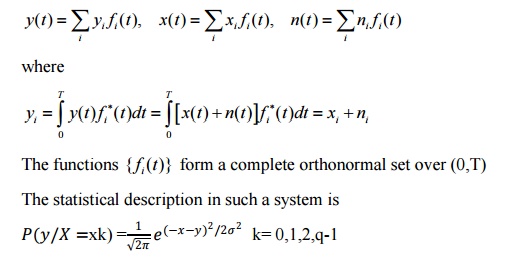
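For a memoryless AWGN channel, the joint conditional pdf can be evaluated as a product of one-dimensional Gaussian densities. The sketch below assumes the noise samples $G_i$ are independent, zero-mean, with a common standard deviation `sigma`; that parameter and the function names are assumptions introduced here.

```python
import math


def gaussian_pdf(y, mean, sigma):
    """Density of a Gaussian with the given mean and standard deviation."""
    z = (y - mean) / sigma
    return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2 * math.pi))


def joint_conditional_pdf(ys, xs, sigma):
    """p(y_1..y_n | x_1..x_n) = product over i of p(y_i | x_i).

    Assumes Y_i = X_i + G_i with independent zero-mean Gaussian G_i.
    """
    return math.prod(gaussian_pdf(y, x, sigma) for y, x in zip(ys, xs))


xs = [1.0, -1.0, 1.0]  # transmitted levels (illustrative)
ys = [0.8, -1.1, 1.3]  # received unquantized samples (illustrative)
density = joint_conditional_pdf(ys, xs, sigma=0.5)
print(density)
```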

4. Waveform channels:

Suppose such a channel has bandwidth $W$ with ideal frequency response $C(f) = 1$, the band-limited input signal to the channel is $x(t)$, and the output signal $y(t)$ is corrupted by AWGN. Then

$$y(t) = x(t) + n(t).$$

The channel can be described by expanding $x(t)$, $y(t)$, and $n(t)$ in a complete set of orthonormal functions, which gives expansion coefficients $y_i = x_i + n_i$. Since the $\{n_i\}$ are uncorrelated and Gaussian, they are statistically independent, so

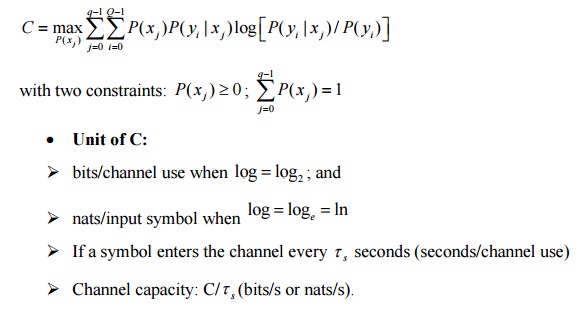

Channel Capacity:

Channel model: DMC

Input alphabet: $X = \{x_0, x_1, x_2, \ldots, x_{q-1}\}$

Output alphabet: $Y = \{y_0, y_1, y_2, \ldots, y_{Q-1}\}$

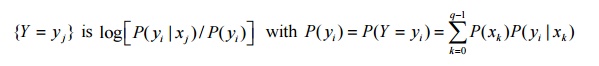

Suppose $x_j$ is transmitted and $y_i$ is received. The mutual information (MI) provided about the event $\{X = x_j\}$ by the occurrence of the event $\{Y = y_i\}$ is

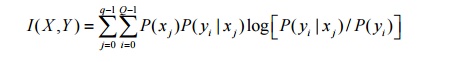

Hence, the average mutual information (AMI) provided by the output Y about the input X is

$$I(X;Y) = \sum_{j=0}^{q-1} \sum_{i=0}^{Q-1} P(x_j)\, P(y_i \mid x_j)\, \log \frac{P(y_i \mid x_j)}{P(y_i)},$$

where base-2 logarithms give the result in bits. To maximize the AMI, we examine each term of the above equation:

(1). $P(y_i)$ is the probability of the $i$th output of the detector;

(2). $P(y_i \mid x_j)$ represents the channel characteristic, which we cannot change;

(3). $P(x_j)$ represents the probabilities of the input symbols, which we can control.

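Therefore, the channel capacity is defined as the maximum of the AMI over the input probabilities:

$$C = \max_{P(x_j)} I(X;Y) = \max_{P(x_j)} \sum_{j} \sum_{i} P(x_j)\, P(y_i \mid x_j)\, \log \frac{P(y_i \mid x_j)}{P(y_i)},$$

subject to $P(x_j) \ge 0$ and $\sum_j P(x_j) = 1$.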
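As a worked illustration of this definition, the sketch below finds the capacity of a binary-input channel by a plain grid search over the input probability and compares it with the known closed form for a BSC, $C = 1 + p \log_2 p + (1-p) \log_2 (1-p)$. The grid search, the function names, and the value p = 0.1 are illustrative assumptions, not an efficient or general method.

```python
import math


def mutual_information(p_x, matrix):
    """AMI in bits, with matrix[j][i] = P(y_i | x_j)."""
    n_out = len(matrix[0])
    p_y = [
        sum(p_x[j] * matrix[j][i] for j in range(len(p_x)))
        for i in range(n_out)
    ]
    total = 0.0
    for j, pxj in enumerate(p_x):
        for i in range(n_out):
            pij = matrix[j][i]
            if pxj > 0 and pij > 0:
                total += pxj * pij * math.log2(pij / p_y[i])
    return total


def binary_input_capacity(matrix, steps=1000):
    """Maximize the AMI over P(x_0) = a, P(x_1) = 1 - a on a uniform grid."""
    return max(
        mutual_information([a / steps, 1 - a / steps], matrix)
        for a in range(steps + 1)
    )


p = 0.1
bsc_matrix = [[1 - p, p], [p, 1 - p]]
c_numeric = binary_input_capacity(bsc_matrix)
c_closed = 1 + p * math.log2(p) + (1 - p) * math.log2(1 - p)
print(c_numeric, c_closed)
```

For the symmetric channel the maximum falls at equiprobable inputs, which is why the grid search and the closed form agree.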
