Chapter: Advanced Computer Architecture : Memory And I/O

Virtual memory & techniques for fast address translation


Virtual memory divides physical memory into blocks (called pages or segments) and allocates them to different processes. With virtual memory, the CPU produces virtual addresses that are translated by a combination of hardware and software into physical addresses, which are then used to access main memory. This process is called memory mapping or address translation. Today, the two memory-hierarchy levels controlled by virtual memory are DRAM and magnetic disk.

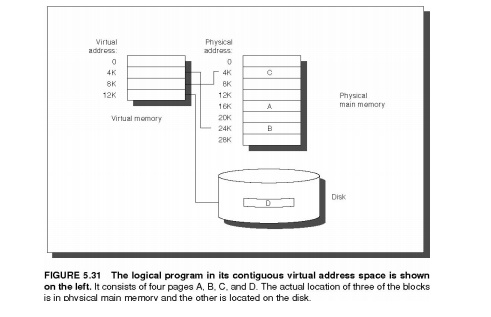

Virtual memory manages the two levels of the memory hierarchy represented by main memory and secondary storage. Figure 5.31 shows the mapping of virtual memory to physical memory for a program with four pages.

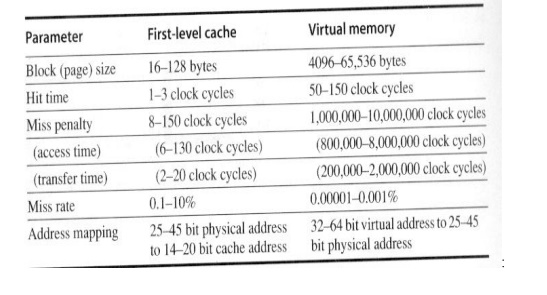

There are further differences between caches and virtual memory beyond the quantitative ones listed in Figure 5.32.

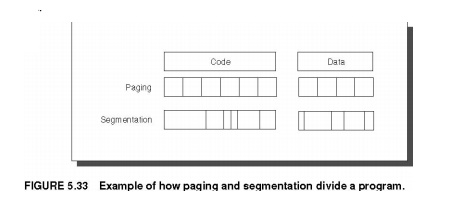

Virtual memory also encompasses several related techniques. Virtual memory systems can be categorized into two classes: those with fixed-size blocks, called pages, and those with variable-size blocks, called segments. Pages are typically fixed at 4096 to 65,536 bytes, while segment size varies. The largest segment supported on any processor ranges from $2^{16}$ bytes up to $2^{32}$ bytes; the smallest segment is 1 byte. Figure 5.33 shows how the two approaches might divide code and data.

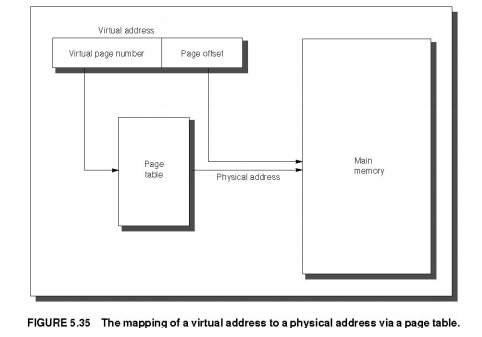

The block can be placed anywhere in main memory. Both paging and segmentation rely on a data structure that is indexed by the page or segment number. This data structure contains the physical address of the block. For segmentation, the offset is added to the segment’s physical address to obtain the final physical address. For paging, the offset is simply concatenated to this physical page address (see Figure 5.35).
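
The difference between concatenating and adding the offset can be sketched in a few lines of Python. This is only an illustration: the 4 KB page size, the dictionary-based tables, and the function names `translate_paged` and `translate_segmented` are assumptions made for the example, not part of any real system.

```python
PAGE_OFFSET_BITS = 12  # assumption: 4 KB pages

def translate_paged(page_table, vaddr):
    """Paging: look up the frame number, then concatenate the offset."""
    vpn = vaddr >> PAGE_OFFSET_BITS
    offset = vaddr & ((1 << PAGE_OFFSET_BITS) - 1)
    frame = page_table[vpn]
    return (frame << PAGE_OFFSET_BITS) | offset

def translate_segmented(segment_table, segment, offset):
    """Segmentation: add the offset to the segment's physical base address."""
    base = segment_table[segment]
    return base + offset

# Hypothetical tables: virtual page -> physical frame, segment -> physical base
page_table = {0: 5, 1: 2}
segment_table = {0: 0x4000, 1: 0x9100}

paged = translate_paged(page_table, 0x1ABC)              # virtual page 1, offset 0xABC
segmented = translate_segmented(segment_table, 1, 0x2F)  # segment 1, offset 0x2F
print(hex(paged), hex(segmented))
```

Because a page frame always starts on a page boundary, the low bits of its address are zero and concatenation is enough. A segment may start at any byte address, so a full addition is needed.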

This data structure, containing the physical page addresses, usually takes the form of a page table. Indexed by the virtual page number, the size of the table is the number of pages in the virtual address space. Given a 32-bit virtual address, 4-KB pages, and 4 bytes per page table entry, the size of the page table would be

$$\frac{2^{32}}{2^{12}} \times 2^{2} = 2^{22} \text{ bytes} = 4 \text{ MB}.$$

To reduce address translation time, computers use a cache dedicated to these address translations, called a translation look-aside buffer, or simply translation buffer. It is described in more detail shortly.

With the help of the operating system and an LRU (least recently used) replacement algorithm, a page can be replaced whenever a page fault occurs.
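
As a rough illustration of LRU replacement, the following Python sketch counts page faults for a reference string. The function name `count_page_faults` and the use of an `OrderedDict` to track recency are choices made for this example; real operating systems usually approximate LRU with use bits rather than tracking exact order.

```python
from collections import OrderedDict

def count_page_faults(references, num_frames):
    """Simulate exact LRU replacement and return the number of page faults."""
    frames = OrderedDict()  # resident pages, least recently used first
    faults = 0
    for page in references:
        if page in frames:
            frames.move_to_end(page)        # hit: mark as most recently used
        else:
            faults += 1                     # page fault
            if len(frames) == num_frames:
                frames.popitem(last=False)  # evict the least recently used page
            frames[page] = True
    return faults

faults = count_page_faults([1, 2, 3, 1, 4, 2], num_frames=3)
print(faults)
```

In this trace the reference to page 4 evicts page 2, the least recently used page at that point, so the later reference to page 2 faults again.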

1. Techniques for Fast Address Translation

Page tables are usually so large that they are stored in main memory and are sometimes paged themselves. Paging means that every memory access logically takes at least twice as long, with one memory access to obtain the physical address and a second access to get the data. This cost is far too high.

One remedy is to remember the last translation, so that the mapping process is skipped if the current address refers to the same page as the last one. A more general solution is to again rely on the principle of locality: if the accesses have locality, then the address translations for the accesses must also have locality. By keeping these address translations in a special cache, a memory access rarely requires a second access to translate the address. This special address translation cache is referred to as a translation look-aside buffer or TLB, also called a translation buffer or TB.
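
The effect of locality on translation can be seen in a small simulation. The sketch below is a simplification under stated assumptions: the `TLB` class, its four-entry capacity, the LRU eviction policy, and the 4 KB page size are all hypothetical choices for the example.

```python
from collections import OrderedDict

PAGE_OFFSET_BITS = 12  # assumption: 4 KB pages

class TLB:
    """A tiny translation cache in front of a page table (illustrative only)."""

    def __init__(self, page_table, capacity=4):
        self.page_table = page_table
        self.capacity = capacity
        self.entries = OrderedDict()  # virtual page number -> frame number
        self.hits = 0
        self.misses = 0

    def translate(self, vaddr):
        vpn = vaddr >> PAGE_OFFSET_BITS
        offset = vaddr & ((1 << PAGE_OFFSET_BITS) - 1)
        if vpn in self.entries:
            self.hits += 1
            self.entries.move_to_end(vpn)
        else:
            self.misses += 1
            if len(self.entries) == self.capacity:
                self.entries.popitem(last=False)
            # This lookup stands in for the extra memory access to the page table.
            self.entries[vpn] = self.page_table[vpn]
        return (self.entries[vpn] << PAGE_OFFSET_BITS) | offset

page_table = {vpn: vpn + 100 for vpn in range(16)}  # hypothetical mapping
tlb = TLB(page_table)
for vaddr in range(0, 2 * 4096, 64):  # sequential sweep over two pages
    tlb.translate(vaddr)
print(tlb.hits, tlb.misses)
```

The sweep touches 128 addresses but only two pages, so only the first access to each page needs the page table.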

A TLB entry is like a cache entry where the tag holds portions of the virtual address and the data portion holds a physical page frame number, protection field, valid bit, and usually a use bit and dirty bit. To change the physical page frame number or protection of an entry in the page table, the operating system must make sure the old entry is not in the TLB; otherwise, the system won’t behave properly. Note that this dirty bit means the corresponding page is dirty, not that the address translation in the TLB is dirty, nor that a particular block in the data cache is dirty. The operating system resets these bits by changing the value in the page table and then invalidating the corresponding TLB entry. When the entry is reloaded from the page table, the TLB gets an accurate copy of the bits.
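
The update-then-invalidate rule can be sketched as follows. The `Entry` dataclass, the dictionaries standing in for the page table and TLB, and the helper names `tlb_lookup` and `os_set_writable` are all hypothetical; the point is only that a stale TLB copy must be dropped when the page table changes.

```python
from dataclasses import dataclass

@dataclass
class Entry:
    frame: int
    writable: bool        # simplified stand-in for the protection field
    valid: bool = True
    dirty: bool = False

page_table = {7: Entry(frame=42, writable=True)}
tlb = {}  # virtual page number -> private copy of the page table entry

def tlb_lookup(vpn):
    """Return the TLB entry, reloading a copy from the page table on a miss."""
    if vpn not in tlb:
        pte = page_table[vpn]
        tlb[vpn] = Entry(pte.frame, pte.writable, pte.valid, pte.dirty)
    return tlb[vpn]

def os_set_writable(vpn, writable):
    """Change protection in the page table, then invalidate the TLB entry."""
    page_table[vpn].writable = writable
    tlb.pop(vpn, None)  # without this, the TLB would keep the old protection

before = tlb_lookup(7).writable
os_set_writable(7, False)
after = tlb_lookup(7).writable
print(before, after)
```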

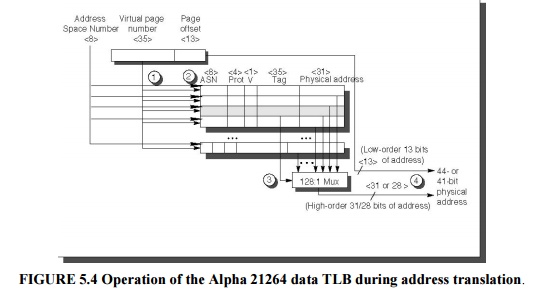

Figure 5.5 shows the Alpha 21264 data TLB organization, with each step of a translation labeled. The TLB uses fully associative placement; thus, the translation begins (steps 1 and 2) by sending the virtual address to all tags. Of course, the tag must be marked valid to allow a match. At the same time, the type of memory access is checked for a violation (also in step 2) against protection information in the TLB.

Selecting a Page Size

The most obvious architectural parameter is the page size. Choosing the page size is a question of balancing forces that favor a larger page size against those that favor a smaller one. The following favor a larger size:

- The size of the page table is inversely proportional to the page size; memory (or other resources used for the memory map) can therefore be saved by making the pages bigger.

- A larger page size can allow larger caches with fast cache hit times.

- Transferring larger pages to or from secondary storage, possibly over a network, is more efficient than transferring smaller pages.

- The number of TLB entries is restricted, so a larger page size means that more memory can be mapped efficiently, thereby reducing the number of TLB misses.
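
Two of these effects are simple arithmetic and can be tabulated. The sketch below assumes a 128-entry TLB, a 32-bit virtual address, a single-level page table, and 4-byte entries; these numbers and the function names are chosen for illustration only.

```python
def tlb_reach_bytes(tlb_entries, page_bytes):
    """Memory that can be mapped without a TLB miss."""
    return tlb_entries * page_bytes

def flat_page_table_bytes(virtual_address_bits, page_bytes, pte_bytes=4):
    """Size of a single-level page table covering the whole virtual space."""
    return ((1 << virtual_address_bits) // page_bytes) * pte_bytes

for page_kb in (4, 16, 64):
    page_bytes = page_kb * 1024
    reach = tlb_reach_bytes(128, page_bytes)      # assumed 128-entry TLB
    table = flat_page_table_bytes(32, page_bytes)
    print(f"{page_kb:>2} KB pages: TLB reach {reach // 1024} KB, "
          f"page table {table // 1024} KB")
```

Each fourfold increase in page size multiplies the TLB reach by four and divides the page table size by four.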

Virtual memory protection

Multiprogramming, in which several processes share a computer at the same time, forces designers to worry about how those processes use virtual memory, so virtual memory must provide protection. The responsibility for maintaining correct process behavior is shared by the designers of the computer and of the operating system. The computer designer must ensure that the CPU portion of the process state can be saved and restored. The operating system designer must guarantee that processes do not interfere with each other’s computations.

The safest way to protect the state of one process from another would be to copy the current information to disk. However, a process switch would then take seconds, far too long for a time-sharing environment. This problem is solved by operating systems partitioning main memory so that several different processes have their state in memory at the same time.

Protecting Processes

The simplest protection mechanism is a pair of registers that checks every address to be sure that it falls between the two limits, traditionally called base and bound. An address is valid if

Base ≤ Address ≤ Bound

In some systems, the address is considered an unsigned number that is always added to the base, so the limit test is just

(Base + Address) ≤ Bound
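
Both forms of the check are one-line comparisons. The Python sketch below shows them side by side; the function names and the example register values are made up for illustration.

```python
def valid_absolute(address, base, bound):
    """First form: the address itself must lie between base and bound."""
    return base <= address <= bound

def valid_relative(address, base, bound):
    """Second form: the unsigned address is added to the base before the test."""
    return (base + address) <= bound

base, bound = 0x4000, 0x7FFF  # hypothetical register contents

print(valid_absolute(0x5000, base, bound))  # inside the region
print(valid_absolute(0x8000, base, bound))  # above the bound
print(valid_relative(0x3FFF, base, bound))  # last valid relative address
print(valid_relative(0x4000, base, bound))  # one past the end
```

In the second form only the upper limit needs a comparison, since an unsigned address added to the base can never fall below it.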

If user processes are allowed to change the base and bound registers, then users can’t be protected from each other. The operating system, however, must be able to change the registers so that it can switch processes. Hence, the computer designer has three more responsibilities in helping the operating system designer protect processes from each other:

- Provide at least two modes, indicating whether the running process is a user process or an operating system process. This latter process is sometimes called a kernel process, a supervisor process, or an executive process.

- Provide a portion of the CPU state that a user process can use but not write. This state includes the base/bound registers, a user/supervisor mode bit(s), and the exception enable/disable bit. Users are prevented from writing this state because the operating system cannot control user processes if users can change the address range checks, give themselves supervisor privileges, or disable exceptions.

- Provide mechanisms whereby the CPU can go from user mode to supervisor mode and vice versa. The first direction is typically accomplished by a system call, implemented as a special instruction that transfers control to a dedicated location in supervisor code space. The PC is saved from the point of the system call, and the CPU is placed in supervisor mode. The return to user mode is like a subroutine return that restores the previous user/supervisor mode.
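
The three requirements above can be modeled as a toy state machine. This is a sketch only: the `CPU` class, its method names, and the use of a Python exception to stand in for a hardware protection trap are all assumptions of the example.

```python
class CPU:
    """Toy model of a mode bit guarding the base/bound registers."""

    def __init__(self):
        self.supervisor = False  # mode bit: False = user, True = supervisor
        self.base = 0
        self.bound = 0
        self.pc = 0
        self.saved_pc = None
        self.saved_mode = None

    def write_base_bound(self, base, bound):
        # User code may rely on these registers but must not write them.
        if not self.supervisor:
            raise PermissionError("base/bound are writable only in supervisor mode")
        self.base, self.bound = base, bound

    def system_call(self, handler_address):
        # Save the PC and mode, enter supervisor mode, jump to the fixed entry point.
        self.saved_pc, self.saved_mode = self.pc, self.supervisor
        self.supervisor = True
        self.pc = handler_address

    def return_from_call(self):
        # Like a subroutine return that also restores the previous mode.
        self.pc, self.supervisor = self.saved_pc, self.saved_mode

cpu = CPU()
cpu.pc = 0x400
cpu.system_call(0x8000)               # hypothetical supervisor entry point
cpu.write_base_bound(0x1000, 0x1FFF)  # allowed: CPU is in supervisor mode
cpu.return_from_call()
print(cpu.supervisor, hex(cpu.pc))
```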

2. A Paged Virtual Memory Example: The Alpha Memory Management and the 21264 TLB

The Alpha architecture uses a combination of segmentation and paging, providing protection while minimizing page table size. With 48-bit virtual addresses, the 64-bit address space is first divided into three segments: seg0 (bits 63 to 47 = 0...00), kseg (bits 63 to 46 = 0...10), and seg1 (bits 63 to 46 = 1...11). kseg is reserved for the operating system kernel, has uniform protection for the whole space, and does not use memory management.
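
Since the three bit patterns differ in bits 47 and 46, a segment can be identified from those two bits alone. The sketch below does only that; the function name `alpha_segment` is made up, and the check that the remaining upper bits match the required pattern is deliberately left out.

```python
def alpha_segment(vaddr):
    """Classify a 64-bit virtual address by bits 47 and 46 only (simplified)."""
    bit47 = (vaddr >> 47) & 1
    bit46 = (vaddr >> 46) & 1
    if bit47 == 0:
        return "seg0"  # upper bits end in 0
    return "seg1" if bit46 == 1 else "kseg"  # ...11 versus ...10

print(alpha_segment(0x0000_0000_0001_0000))  # low user address
print(alpha_segment(0b10 << 46))             # bits 47..46 = 10
print(alpha_segment(0xFFFF_FFFF_FFFF_F000))  # all upper bits set
```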

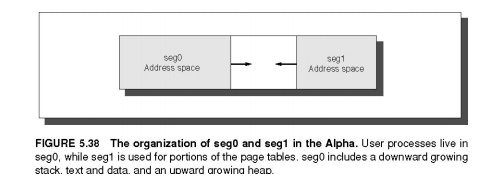

User processes use seg0, which is mapped into pages with individual protection. Figure 5.38 shows the layout of seg0 and seg1. seg0 grows from address 0 upward, while seg1 grows downward to 0. This approach provides many advantages: segmentation divides the address space and conserves page table space, while paging provides virtual memory, relocation, and protection.

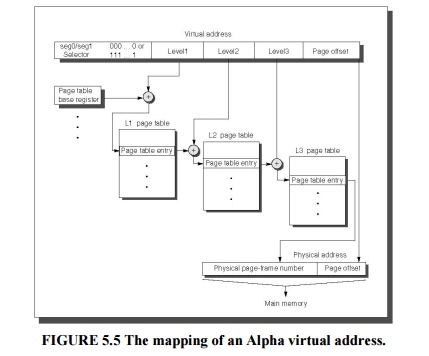

The Alpha uses a three-level hierarchical page table to map the address space, which keeps the table size reasonable. Figure 5.5 shows address translation in the Alpha. The addresses for each of these page tables come from three “level” fields, labeled level1, level2, and level3. Address translation starts with adding the level1 address field to the page table base register and then reading memory from this location to get the base of the second-level page table.

The level2 address field is in turn added to this newly fetched address, and memory is accessed again to determine the base of the third page table. The level3 address field is added to this base address, and memory is read using this sum to (finally) get the physical address of the page being referenced. This address is concatenated with the page offset to get the full physical address. Each page table in the Alpha architecture is constrained to fit within a single page.
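
The three-step walk can be written out as a short Python sketch. Several simplifications are assumed here and are not taken from the text: 8 KB pages (13 offset bits), 10-bit level fields so that 1024 eight-byte entries fill one page, a dictionary standing in for physical memory, and table entries that hold the next table's base address directly rather than a full PTE. Each level field is scaled by the entry size before being added to the base.

```python
OFFSET_BITS = 13  # assumption: 8 KB pages
LEVEL_BITS = 10   # assumption: 1024 entries of 8 bytes fill one 8 KB page
PTE_BYTES = 8

def split(vaddr):
    """Break a virtual address into level1, level2, level3, and page offset."""
    mask = (1 << LEVEL_BITS) - 1
    offset = vaddr & ((1 << OFFSET_BITS) - 1)
    level3 = (vaddr >> OFFSET_BITS) & mask
    level2 = (vaddr >> (OFFSET_BITS + LEVEL_BITS)) & mask
    level1 = (vaddr >> (OFFSET_BITS + 2 * LEVEL_BITS)) & mask
    return level1, level2, level3, offset

def walk(memory, page_table_base, vaddr):
    """Three memory reads, one per level, then concatenate the page offset."""
    level1, level2, level3, offset = split(vaddr)
    l2_base = memory[page_table_base + level1 * PTE_BYTES]
    l3_base = memory[l2_base + level2 * PTE_BYTES]
    frame = memory[l3_base + level3 * PTE_BYTES]
    return (frame << OFFSET_BITS) | offset

# Hypothetical memory contents covering a single translation path
memory = {
    0x10000 + 1 * PTE_BYTES: 0x20000,  # level-1 entry 1 -> level-2 table
    0x20000 + 2 * PTE_BYTES: 0x30000,  # level-2 entry 2 -> level-3 table
    0x30000 + 3 * PTE_BYTES: 0x55,     # level-3 entry 3 -> page frame 0x55
}
vaddr = (1 << 33) | (2 << 23) | (3 << 13) | 0x123
paddr = walk(memory, 0x10000, vaddr)
print(hex(paddr))
```

Only the tables along paths that are actually used need to exist, which is how the hierarchy keeps the total page table size small.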

The first three levels (0, 1, and 2) use physical addresses that need no further translation, but Level 3 is mapped virtually. These normally hit the TLB, but if not, the table is accessed a second time with physical addresses.

The Alpha uses a 64-bit page table entry (PTE) in each of these page tables. The first 32 bits contain the physical page frame number, and the other half includes the following five protection fields:

- Valid: says that the page frame number is valid for hardware translation

- User read enable: allows user programs to read data within this page

- Kernel read enable: allows the kernel to read data within this page

- User write enable: allows user programs to write data within this page

- Kernel write enable: allows the kernel to write data within this page

In addition, the PTE has fields reserved for systems software to use as it pleases. Since the Alpha goes through three levels of tables on a TLB miss, there are three potential places to check protection restrictions. The Alpha obeys only the bottom-level PTE, checking the others only to be sure the valid bit is set.
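
A protection check against such an entry might look like the sketch below. The bit positions are illustrative assumptions, not the Alpha's actual layout: the frame number is placed in the upper 32 bits and the five flags in the lowest five bits. The helper names are likewise hypothetical.

```python
# Assumed flag positions, for illustration only
VALID        = 1 << 0
USER_READ    = 1 << 1
KERNEL_READ  = 1 << 2
USER_WRITE   = 1 << 3
KERNEL_WRITE = 1 << 4

def make_pte(frame, flags):
    """Pack a frame number (assumed upper 32 bits) and flags into 64 bits."""
    return (frame << 32) | flags

def frame_number(pte):
    return pte >> 32

def access_allowed(pte, kernel_mode, write):
    """Check the valid bit, then the enable bit matching the mode and access."""
    if not pte & VALID:
        return False
    if write:
        needed = KERNEL_WRITE if kernel_mode else USER_WRITE
    else:
        needed = KERNEL_READ if kernel_mode else USER_READ
    return bool(pte & needed)

# A page that users may read but only the kernel may write
pte = make_pte(0x1234, VALID | USER_READ | KERNEL_READ | KERNEL_WRITE)
print(hex(frame_number(pte)))
print(access_allowed(pte, kernel_mode=False, write=False))
print(access_allowed(pte, kernel_mode=False, write=True))
```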

3. A Segmented Virtual Memory Example: Protection in the Intel Pentium

The original 8086 used segments for addressing, yet it provided nothing for virtual memory or for protection. Segments had base registers but no bound registers and no access checks, and before a segment register could be loaded, the corresponding segment had to be in physical memory.

Intel’s dedication to virtual memory and protection is evident in the successors to the 8086 (today called IA-32), with a few fields extended to support larger addresses. This protection scheme is elaborate, with many details carefully designed to try to avoid security loopholes.

The first enhancement is to double the traditional two-level protection model: the Pentium has four levels of protection. The innermost level (0) corresponds to Alpha kernel mode and the outermost level (3) corresponds to Alpha user mode. The IA-32 has separate stacks for each level to avoid security breaches between the levels.

The IA-32 divides the address space, allowing both the operating system and the user access to the full space. The IA-32 user can call an operating system routine in this space and even pass parameters to it while retaining full protection. This safe call is not a trivial action, since the stack for the operating system is different from the user’s stack. Moreover, the IA-32 allows the operating system to maintain the protection level of the called routine for the parameters that are passed to it. This potential loophole in protection is prevented by not allowing the user process to ask the operating system to access something indirectly that it would not have been able to access itself. (Such security loopholes are called Trojan horses.)

Adding Bounds Checking and Memory Mapping

The first step in enhancing the Intel processor was getting the segmented addressing to check bounds as well as supply a base. Rather than a base address, as in the 8086, segment registers in the IA-32 contain an index to a virtual memory data structure called a descriptor table. Descriptor tables play the role of page tables in the Alpha. On the IA-32 the equivalent of a page table entry is a segment descriptor.

It contains fields found in PTEs:

- A present bit: equivalent to the PTE valid bit, used to indicate this is a valid translation

- A base field: equivalent to a page frame address, containing the physical address of the first byte of the segment

- An access bit: like the reference bit or use bit in some architectures, helpful for replacement algorithms

- An attributes field: specifies the valid operations and protection levels for operations that use this segment

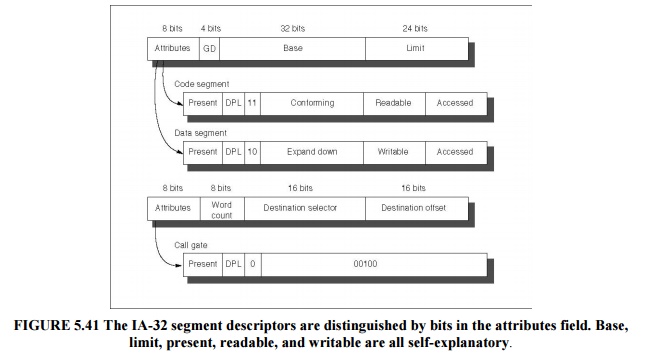

There is also a limit field, not found in paged systems, which establishes the upper bound of valid offsets for this segment. Figure 5.41 shows examples of IA-32 segment descriptors.
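
How the present, base, limit, and access fields work together during translation can be sketched as below. The `SegmentDescriptor` dataclass is a simplified stand-in that omits the attributes field, and the exceptions are placeholders for the faults real hardware would raise.

```python
from dataclasses import dataclass

@dataclass
class SegmentDescriptor:
    present: bool           # like the PTE valid bit
    base: int               # physical address of the segment's first byte
    limit: int              # largest valid offset within the segment
    accessed: bool = False  # set on use, helpful for replacement

def translate(descriptor_table, index, offset):
    """Index the descriptor table, check present and limit, then add the base."""
    descriptor = descriptor_table[index]
    if not descriptor.present:
        raise LookupError("segment not present")
    if offset > descriptor.limit:
        raise ValueError("offset exceeds segment limit")
    descriptor.accessed = True
    return descriptor.base + offset

# Hypothetical table with one 4 KB segment
table = [SegmentDescriptor(present=True, base=0x10000, limit=0xFFF)]
paddr = translate(table, 0, 0x20)
print(hex(paddr), table[0].accessed)
```

The limit check is what paged systems do not need: every page has the same size, while each segment carries its own length.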

IA-32 provides an optional paging system in addition to this segmented addressing. The upper portion of the 32-bit address selects the segment descriptor and the middle portion is an index into the page table selected by the descriptor.

Adding Sharing and Protection

To provide for protected sharing, half of the address space is shared by all processes and half is unique to each process, called global address space and local address space, respectively. Each half is given a descriptor table with the appropriate name. A descriptor pointing to a shared segment is placed in the global descriptor table, while a descriptor for a private segment is placed in the local descriptor table.
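
Choosing between the two tables can be illustrated with the IA-32 segment selector layout, a detail not covered in the text above: the low two bits hold a requested privilege level, the next bit is a table indicator (0 for global, 1 for local), and the remaining upper bits are the index. The function name and the placeholder table contents are made up for this sketch.

```python
def pick_descriptor(selector, gdt, ldt):
    """Use the table-indicator bit to choose a table, then index into it."""
    index = selector >> 3                # upper bits: descriptor index
    use_local = bool(selector & 0b100)   # bit 2: 0 = global, 1 = local
    table = ldt if use_local else gdt
    return table[index]

# Placeholder descriptors; real entries would hold base, limit, and attributes
gdt = ["shared code", "shared data"]
ldt = ["private stack"]

print(pick_descriptor((1 << 3) | 0b000, gdt, ldt))  # global table, index 1
print(pick_descriptor((0 << 3) | 0b111, gdt, ldt))  # local table, index 0
```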
