Chapter: Multicore Application Programming For Windows, Linux, and Oracle Solaris : Hardware, Processes, and Threads

The Performance of 32-Bit versus 64-Bit Code

A 64-bit processor can, theoretically, address up to 16 exabytes (EB) of physical memory, which is 4GB squared. In contrast, a 32-bit processor can address a maximum of 4GB of memory. Some applications find the 4GB limit restrictive; databases are a particular example, since they can easily exceed 4GB in size. Hence, a change to 64-bit addresses enables the manipulation of much larger data sets.

The 64-bit instruction set extensions for the x86 processor are referred to as AMD64, EM64T, x86-64, or just x64. Not only did these extensions increase the memory that the processor could address, but they also improved performance by eliminating or reducing two problems.

The first issue addressed is the stack-based calling convention. This convention leads to the code using many store and load instructions to pass parameters into functions. In 32-bit code, when a function is called, all the parameters to that function need to be stored onto the stack. The first action that the function takes is to load those parameters back off the stack and into registers. In 64-bit code, the parameters are passed in registers where possible, avoiding these load and store operations.

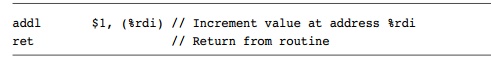

We can see this when the earlier code is compiled to use the 64-bit x86 instruction set, as shown in Listing 1.4.

Listing 1.4 64-Bit x86 Assembly Code to Increment a Variable at an Address

In this example, we are down to two instructions, as opposed to the three instructions used in Listing 1.3. The two instructions are the increment instruction, which adds 1 to the value pointed to by the register %rdi, and the return instruction.

The second issue addressed by the 64-bit transition was increasing the number of general-purpose registers from about 6 in 32-bit code to about 14 in 64-bit code. Increasing the number of registers reduces the number of register spills and fills, which are the stores and loads the compiler inserts when it runs out of registers to hold live values.

Because of these two changes, it is very tempting to view the change to 64-bit code as a performance gain. However, this is not strictly true. The changes to the number of registers and the calling convention occurred at the same time as the transition to 64-bit but could have occurred without this particular transition; they could have been introduced on the 32-bit x86 processor. The change to 64-bit was an opportunity to reevaluate the architecture and to make these fundamental improvements.

The

actual change to a 64-bit address space is a performance loss. Pointers change

from being a 4-byte structure into an 8-byte structure. In Unix-like operating

systems, long-type variables also go from 4 to 8 bytes. When the size of a

variable increases, the memory footprint of the application increases, and

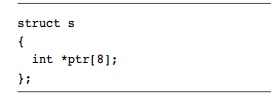

consequently performance decreases. For example, consider the C data structure

shown in Listing 1.5.

Listing 1.5 Data Structure Containing an Array of Pointers to Integers

When compiled for 32 bits, the structure occupies 8 × 4 bytes = 32 bytes, so every 64-byte cache line can contain two structures. When compiled for 64-bit addresses, the pointers double in size, so the structure takes 64 bytes and a single structure completely fills a single cache line.

Imagine an array of these structures in a 32-bit version of an application; when one of these structures is fetched from memory, the next would also be fetched. In a 64-bit version of the same code, only a single structure would be fetched. Another way of looking at this is that, for the same computation, the 64-bit version may need to fetch up to twice as much data from memory. For some applications, this increase in memory footprint can lead to a measurable drop in application performance. However, on x86, most applications will see a net performance gain from the other improvements. Some compilers can produce binaries that use the EM64T instruction set extensions and ABI but that restrict the application to a 32-bit address space. This provides the performance gains from the instruction set improvements without incurring the performance loss from the increased memory footprint.

It is worth quickly contrasting this situation with that of the SPARC processor. The SPARC processor will also see the performance loss from the increase in size of pointers and longs. However, the SPARC calling convention for 32-bit code already passed values in registers, and there were already a large number of registers available, so the move to 64-bit brings no offsetting gain. Hence, code compiled as 64-bit for SPARC processors usually sees a small decrease in performance because of the larger memory footprint.
