Chapter: Multicore Application Programming For Windows, Linux, and Oracle Solaris : Hardware, Processes, and Threads

The Differences Between Processes and Threads

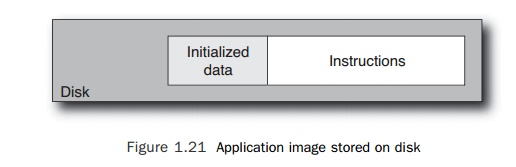

It is useful to discuss how software is made of both processes and threads and how these are mapped into memory. This section will introduce some of the concepts, which will become familiar over the next few chapters. An application comprises instructions and data. Before it starts running, these are just some instructions and data laid out on disk, as shown in Figure 1.21.

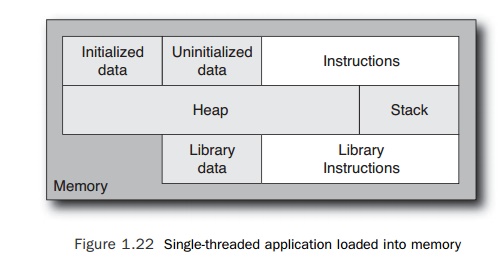

An executing application is called a process. A process is a bit more than instructions and data, since it also has state. State is the set of values held in the processor registers, the address of the currently executing instruction, the values held in memory, and any other values that uniquely define what the process is doing at any moment in time. The important difference is that as a process runs, its state changes. Figure 1.22 shows the layout of an application running in memory.

Processes are the fundamental building blocks of applications. Multiple applications running simultaneously are really just multiple processes. Support for multiple users is typically implemented using multiple processes with different permissions. Unless the process has been set up to explicitly share state with another process, all of its state is private to the process; no other process can see into it. To take a more tangible example, if you run two copies of a text editor, they both might have a variable current_line, but neither could read the other one’s value for this variable.
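The isolation described above can be observed directly. The following is a minimal Python sketch, assuming a POSIX system where `os.fork` is available (it is not on Windows). The global `current_line` mirrors the text editor example, and the function name `edit_in_child` is hypothetical. After `fork`, the child holds its own copy of the parent's state, so the child's assignment never reaches the parent.

```python
import os

# Hypothetical editor state; after fork, each process holds its own copy.
current_line = 1


def edit_in_child(new_line: int) -> int:
    """Fork a child that changes current_line, then return the parent's value."""
    global current_line
    pid = os.fork()
    if pid == 0:
        # Child process: this assignment changes only the child's private copy.
        current_line = new_line
        os._exit(0)  # leave at once, skipping the parent's cleanup code
    # Parent process: wait for the child, then report its own value.
    os.waitpid(pid, 0)
    return current_line


if __name__ == "__main__":
    print(edit_in_child(42))  # the child's write is not visible here
```

The parent still sees `1`, even though both processes used the same variable name and, in fact, the same virtual address for it.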

A particularly critical part of the state for an application is the memory that has been allocated to it. Recall that memory is allocated using virtual addresses, so both copies of the hypothetical text editor might have stored the document at virtual addresses 0x111000 to 0x11a000. Each application has its own virtual-to-physical address mappings, which occupy their own TLB entries, so identical virtual addresses will map onto different physical addresses. If one core is running these two applications, then each application should expect on average to use half the TLB entries for its mappings. Multiple active processes therefore increase the pressure on internal chip structures such as the TLBs and caches, so the number of TLB and cache misses will increase.
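User code cannot read physical addresses directly, but the effect of separate mappings is still visible: after a `fork`, a buffer sits at the same virtual address in both processes, yet the two processes can hold different contents there. The sketch below assumes a POSIX system and uses `ctypes.addressof` to read the buffer's virtual address; the buffer `document` and the function `compare_after_fork` are hypothetical names. The child sends its view back through a pipe so that the parent can compare.

```python
import ctypes
import os

# Stand-in for the editor's document buffer (a hypothetical example).
document = ctypes.create_string_buffer(b"original text", 64)


def compare_after_fork() -> tuple[int, int, bytes, bytes]:
    """Return (parent address, child address, parent contents, child contents)."""
    read_fd, write_fd = os.pipe()
    pid = os.fork()
    if pid == 0:
        # Child: edit the buffer, which sits at the same virtual address.
        os.close(read_fd)
        document.value = b"child's edit"
        report = str(ctypes.addressof(document)).encode() + b"\n" + document.value
        os.write(write_fd, report)
        os.close(write_fd)
        os._exit(0)
    # Parent: collect the child's report, then inspect its own copy.
    os.close(write_fd)
    data = b""
    while chunk := os.read(read_fd, 1024):
        data += chunk
    os.close(read_fd)
    os.waitpid(pid, 0)
    child_addr, child_value = data.split(b"\n", 1)
    return ctypes.addressof(document), int(child_addr), document.value, child_value


if __name__ == "__main__":
    print(compare_after_fork())
```

The two addresses match while the contents differ, which is only possible because the operating system maps that virtual address onto different physical pages in each process.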

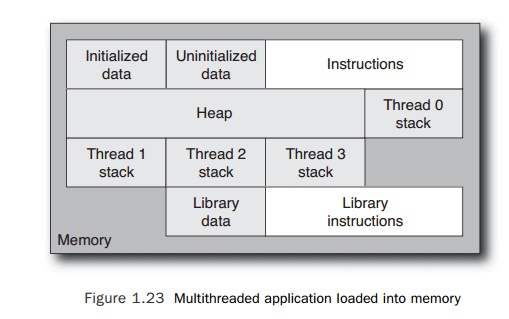

Each process can run multiple threads. A thread has some state, like a process does, but its state is basically just the values held in its registers plus the data on its stack. Figure 1.23 shows the memory layout of a multithreaded application.
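The split between per-thread state and shared state can be sketched in a few lines of Python. In this hypothetical example, `total` is local to each call of `worker`, so every thread has its own private copy (CPython keeps it in that thread's own call frame, the analogue of a C thread's stack), while `shared_log` exists once and is seen by all threads. The lock is an added assumption to keep the appends orderly.

```python
import threading

shared_log: list[str] = []   # one copy, visible to every thread
log_lock = threading.Lock()  # serializes appends to the shared list


def worker(name: str, count: int) -> None:
    # 'total' is local to this call, so each thread has its own copy.
    total = 0
    for i in range(count):
        total += i
    with log_lock:
        shared_log.append(f"{name}:{total}")


def run_workers() -> list[str]:
    """Run three threads that each compute privately and report to shared_log."""
    shared_log.clear()
    threads = [threading.Thread(target=worker, args=(f"t{n}", 10 * (n + 1)))
               for n in range(3)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return sorted(shared_log)
```

Calling `run_workers()` returns one entry per thread, each computed from that thread's private `total`.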

A thread shares a lot of state with the other threads in the application. To go back to the text editor example, an alternative implementation would be a single text editor application with two windows. Each window would show a different document, but because both windows live in the same address space, the two documents could no longer both be held at the same virtual address; they would need different virtual addresses. If the editor application were poorly coded, activities in one window could cause changes to the data held in the other.
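The risk in the two-window design can be illustrated with a deliberately buggy sketch. Both document buffers live in one address space at different virtual addresses, and nothing stops a thread working on one window from writing to the other window's buffer. The buffers `window_a` and `window_b` and the function `buggy_edit` are hypothetical names chosen for this illustration.

```python
import ctypes
import threading

# Two documents in one address space (one editor process, two windows).
window_a = ctypes.create_string_buffer(b"document A", 32)
window_b = ctypes.create_string_buffer(b"document B", 32)


def buggy_edit(target: ctypes.Array, text: bytes) -> None:
    # Hypothetical bug: the caller hands over the wrong window's buffer.
    target.value = text


def demo() -> tuple[bool, bytes]:
    """Show distinct addresses, then let a thread corrupt the other window."""
    distinct = ctypes.addressof(window_a) != ctypes.addressof(window_b)
    # A thread meant to edit window A mistakenly writes to window B.
    t = threading.Thread(target=buggy_edit, args=(window_b, b"typed in A"))
    t.start()
    t.join()
    return distinct, window_b.value
```

In a multiprocess design the same mistake could not reach the other document, because it would not be mapped into the faulty process at all.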

There are plenty of reasons why someone might choose to write an application that uses multiple threads. The primary one is that using multiple threads on a system with multiple hardware threads should produce results faster than a single thread doing the work. Another reason might be that the problem naturally decomposes into multiple threads. For example, a web server will have many simultaneous connections to remote machines, so it is a natural fit to code it using multiple threads. A further advantage of threads over multiple processes is that threads share most of the machine state, in particular the TLB and cache entries. So if all the threads need to share some data, they can all read it from the same memory address.
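The thread-per-connection structure of the web server example can be sketched with Python's standard `socketserver.ThreadingTCPServer`, which handles each incoming connection in a new thread. This is a toy line-based server on the loopback interface, not an HTTP server; the `GREETING` constant, `GreetingHandler` class, and `serve_and_query` function are hypothetical. Every handler thread reads the same `GREETING` object in shared memory.

```python
import socket
import socketserver
import threading

# Read-only data shared by every connection thread (hypothetical content).
GREETING = b"hello"


class GreetingHandler(socketserver.StreamRequestHandler):
    def handle(self) -> None:
        # ThreadingTCPServer runs this method in a new thread per connection;
        # every thread reads the same GREETING object in shared memory.
        name = self.rfile.readline().strip()
        self.wfile.write(GREETING + b", " + name + b"\n")


def serve_and_query(names: list[bytes]) -> list[bytes]:
    """Start a thread-per-connection server on a free local port and query it."""
    # Port 0 asks the operating system for any free port.
    with socketserver.ThreadingTCPServer(("127.0.0.1", 0), GreetingHandler) as server:
        server.daemon_threads = True
        host, port = server.server_address
        threading.Thread(target=server.serve_forever, daemon=True).start()
        replies = []
        for name in names:
            with socket.create_connection((host, port)) as conn:
                conn.sendall(name + b"\n")
                reply = b""
                while chunk := conn.recv(1024):  # read until the server closes
                    reply += chunk
                replies.append(reply)
        server.shutdown()  # stop serve_forever before the socket is closed
    return replies
```

Because the handlers are threads rather than processes, no copy of the shared data is made per connection.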

What you should take away from this discussion is that threads and processes are both ways of getting multiple streams of instructions to coordinate in delivering a solution to a problem. The advantage of processes is that each process is isolated: if one process dies, it has no impact on the other running processes. The disadvantage of multiple processes is that each process requires its own TLB entries, which increases the TLB and cache miss rates. The other disadvantage of using multiple processes is that sharing data between processes requires explicit control, for example through pipes or shared memory segments, which can be costly.
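The cost of explicit sharing shows up even in a tiny example. In the sketch below, which assumes a POSIX system with `os.fork`, a child process computes a result but cannot simply leave it where the parent can see it; it has to serialize the data with `pickle` and copy it through a pipe managed by the kernel. The function name `square_in_child` is hypothetical.

```python
import os
import pickle


def square_in_child(values: list[int]) -> list[int]:
    """Compute squares in a child process and ship the result back explicitly."""
    read_fd, write_fd = os.pipe()
    pid = os.fork()
    if pid == 0:
        os.close(read_fd)
        result = [v * v for v in values]
        # The parent cannot see the child's memory, so the result must be
        # serialized and copied through the pipe.
        with os.fdopen(write_fd, "wb") as out:
            out.write(pickle.dumps(result))
        os._exit(0)
    os.close(write_fd)
    with os.fdopen(read_fd, "rb") as inp:
        payload = inp.read()  # blocks until the child closes its end
    os.waitpid(pid, 0)
    return pickle.loads(payload)
```

Serializing, copying into the kernel, and copying back out are exactly the overheads that threads sharing one address space avoid.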

Multiple threads have the advantage of low costs for sharing data: one thread can store an item of data to memory, and that data becomes visible to all the other threads in that process without being copied. The other advantage of sharing is that all threads share the same TLB and cache entries, so multithreaded applications can end up with lower cache miss rates. The disadvantage is that one thread failing will probably cause the entire application to fail.
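A minimal Python sketch of this low-cost sharing: one thread stores a reference to an object, and another thread receives that very object rather than a copy. The `Mailbox` class and `handoff` function are hypothetical. The `threading.Event` is an added assumption that ensures the reader does not look before the writer has stored the item; ordering such accesses correctly is a topic in its own right.

```python
import threading


class Mailbox:
    """Hypothetical one-slot mailbox: a writer thread stores, a reader sees it."""

    def __init__(self) -> None:
        self.item = None
        self.ready = threading.Event()

    def put(self, item) -> None:
        self.item = item  # a plain store into shared memory, no copying
        self.ready.set()  # signal that the store has happened

    def get(self, timeout: float = 5.0):
        if not self.ready.wait(timeout):
            raise TimeoutError("no item was stored")
        return self.item


def handoff(data: dict) -> bool:
    """Pass data from a writer thread to a reader thread via shared memory."""
    box = Mailbox()
    received = []
    reader = threading.Thread(target=lambda: received.append(box.get()))
    reader.start()
    writer = threading.Thread(target=box.put, args=(data,))
    writer.start()
    writer.join()
    reader.join()
    # The reader holds the very same object, not a copy.
    return received[0] is data
```

Compare this with a multiprocess design, where the same handoff would require serializing `data` and sending it between address spaces.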

The same application can be written either as a multithreaded application or as a multiprocess application. Recent changes in web browser design are a good example. Google’s Chrome browser is multiprocess. The browser can use multiple tabs to display different web pages, and each tab is a separate process, so one tab failing will not bring down the entire browser. Historically, browsers have been multithreaded, so if one thread executes bad code, the whole browser crashes. Given the unconstrained nature of the Web, it seems a sensible design decision to aim for robustness rather than low sharing costs.
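The one-process-per-tab idea can be reduced to a small supervisor sketch. This is not how Chrome is implemented, whose process model is far more elaborate; it only shows the isolation property under the assumption of a POSIX system with `os.fork`. Each hypothetical "tab" runs in its own child process, and a tab whose URL contains `bad` simulates a hard crash by sending itself `SIGKILL`. The names `render_tab` and `open_tabs` are illustrative.

```python
import os
import signal


def render_tab(url: str) -> None:
    """Hypothetical tab renderer; a 'bad' page crashes only its own process."""
    if "bad" in url:
        os.kill(os.getpid(), signal.SIGKILL)  # simulate a hard crash
    # A real renderer would lay out and draw the page here.


def open_tabs(urls: list[str]) -> dict[str, str]:
    """Run each tab in its own process and report how each one ended."""
    children = {}
    for url in urls:
        pid = os.fork()
        if pid == 0:
            try:
                render_tab(url)
            except BaseException:
                os._exit(1)  # an ordinary error: exit with a failure code
            os._exit(0)
        children[pid] = url
    status = {}
    for pid, url in children.items():
        _, wait_status = os.waitpid(pid, 0)
        if os.WIFSIGNALED(wait_status):
            status[url] = "crashed"
        elif os.WEXITSTATUS(wait_status) != 0:
            status[url] = "failed"
        else:
            status[url] = "ok"
    return status
```

The supervising process and the other tabs keep running after one tab is killed; had the tabs been threads in one process, the same crash would have terminated all of them.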
