Chapter: An Introduction to Parallel Programming : Shared-Memory Programming with Pthreads

Pthreads - Hello, World Program

HELLO, WORLD

Let’s get started. Let’s take a look at a Pthreads program. Program 4.1 shows a program in which the main function starts up several threads. Each thread prints a message and then quits.

1. Execution

The program is compiled like an ordinary C program, with the possible exception that we may need to link in the Pthreads library:

$ gcc -g -Wall -o pth_hello pth_hello.c -lpthread

The -lpthread tells the compiler that we want to link in the Pthreads library. Note that it’s -lpthread, not -lpthreads. On some systems the compiler will automatically link in the library, and -lpthread won’t be needed.

To run the program, we just type

$ ./pth_hello <number of threads>

Program 4.1: A Pthreads “hello, world” program

#include <stdio.h>
#include <stdlib.h>
#include <pthread.h>

/* Global variable: accessible to all threads */
int thread_count;

void* Hello(void* rank); /* Thread function */

int main(int argc, char* argv[]) {
   long thread; /* Use long in case of a 64-bit system */
   pthread_t* thread_handles;

   /* Get number of threads from command line */
   thread_count = strtol(argv[1], NULL, 10);

   thread_handles = malloc(thread_count*sizeof(pthread_t));

   for (thread = 0; thread < thread_count; thread++)
      pthread_create(&thread_handles[thread], NULL,
            Hello, (void*) thread);

   printf("Hello from the main thread\n");

   for (thread = 0; thread < thread_count; thread++)
      pthread_join(thread_handles[thread], NULL);

   free(thread_handles);
   return 0;
} /* main */

void* Hello(void* rank) {
   long my_rank = (long) rank; /* Use long in case of 64-bit system */

   printf("Hello from thread %ld of %d\n", my_rank, thread_count);

   return NULL;
} /* Hello */

For example, to run the program with one thread, we type

$ ./pth_hello 1

and the output will look something like this:

Hello from the main thread

Hello from thread 0 of 1

To run the program with four threads, we type

$ ./pth_hello 4

and the output will look something like this:

Hello from the main thread

Hello from thread 0 of 4

Hello from thread 1 of 4

Hello from thread 2 of 4

Hello from thread 3 of 4

2. Preliminaries

Let’s take a closer look at the source code in Program 4.1. First notice that this is just a C program with a main function and one other function. The program includes the familiar stdio.h and stdlib.h header files. However, there’s a lot that’s new and different. In Line 3 we include pthread.h, the Pthreads header file, which declares the various Pthreads functions, constants, types, and so on.

In Line 6 we define a global variable thread_count. In Pthreads programs, global variables are shared by all the threads. Local variables and function arguments, that is, variables declared in functions, are (ordinarily) private to the thread executing the function. If several threads are executing the same function, each thread will have its own private copies of the local variables and function arguments. This makes sense if you recall that each thread has its own stack.

We should keep in mind that global variables can introduce subtle and confusing bugs. For example, suppose we write a program in which we declare a global variable int x. Then we write a function f in which we intend to use a local variable called x, but we forget to declare it. The program will compile with no warnings, since f has access to the global x. But when we run the program, it produces very strange output, which we eventually trace to the fact that the global variable x has a strange value. Days later, we finally discover that the strange value came from f. As a rule of thumb, we should try to limit our use of global variables to situations in which they’re really needed, for example, for a shared variable.
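To make the scenario concrete, here is a minimal sketch of the bug just described. The names x and f come from the text; the body of f (summing the integers below n) is a hypothetical stand-in for whatever f was meant to compute.

```c
int x = 0;   /* Global: visible to every function in this file */

/* Hypothetical f: meant to sum 0 + 1 + ... + (n-1) in a LOCAL x,
   but the declaration "int x;" was forgotten.  The code still
   compiles cleanly because the name x resolves to the global. */
int f(int n) {
   x = 0;                      /* Intended: int x = 0; */
   for (int i = 0; i < n; i++)
      x += i;
   return x;                   /* The global x has been overwritten */
}
```

Calling f(4) returns 6, but it also leaves the global x equal to 6, whatever value x held before the call.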

In Line 15 the program gets the number of threads it should start from the command line. Unlike MPI programs, Pthreads programs are typically compiled and run just like serial programs, and one relatively simple way to specify the number of threads that should be started is to use a command-line argument. This isn’t a requirement; it’s simply a convenient convention we’ll be using.

The strtol function converts a string into a long int. It’s declared in stdlib.h, and its syntax is

long strtol(
      const char* number_p /* in */,
      char** end_p /* out */,
      int base /* in */);

It returns a long int corresponding to the string referred to by number_p. The base of the representation of the number is given by the base argument. If end_p isn’t NULL, *end_p will be set to point to the first invalid (that is, nonnumeric) character in number_p.
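A common way to use end_p is to check that the entire argument was a number. The following sketch assumes a hypothetical helper, Parse_long, that accepts a string only if strtol consumed at least one digit and stopped at the terminating null character. It does not detect overflow; strtol reports that by setting errno to ERANGE, which this sketch ignores.

```c
#include <stdlib.h>

/* Hypothetical helper: parses a base-10 integer from str.
   Returns 1 and stores the value in *value_p if the whole string
   is a number; returns 0 otherwise.  Overflow is not checked. */
int Parse_long(const char* str, long* value_p) {
   char* end_p;
   long value = strtol(str, &end_p, 10);

   /* end_p == str: no digits were consumed at all.
      *end_p != '\0': something nonnumeric followed the digits. */
   if (end_p == str || *end_p != '\0')
      return 0;

   *value_p = value;
   return 1;
}
```

With this helper, "4" is accepted, while "4x", "abc", and the empty string are rejected.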

3. Starting the threads

As we already noted, unlike MPI programs, in which the processes are usually started by a script, in Pthreads the threads are started by the program executable. This introduces a bit of additional complexity, as we need to include code in our program to explicitly start the threads, and we need data structures to store information on the threads.

In Line 17 we allocate storage for one pthread_t object for each thread. The pthread_t data structure is used for storing thread-specific information. It’s declared in pthread.h.

The pthread_t objects are examples of opaque objects. The actual data that they store is system-specific, and their data members aren’t directly accessible to user code. However, the Pthreads standard guarantees that a pthread_t object does store enough information to uniquely identify the thread with which it’s associated. So, for example, there is a Pthreads function that a thread can use to retrieve its associated pthread_t object, and there is a Pthreads function that can determine whether two threads are in fact the same by examining their associated pthread_t objects.
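The two functions alluded to here are pthread_self, which returns the calling thread’s pthread_t, and pthread_equal, which returns a nonzero value if two pthread_t objects refer to the same thread. The sketch below is illustrative only; the names main_id, worker_is_main, Compare_with_main, Is_main_thread, and Run_identity_demo are hypothetical. The main thread records its own identity before starting a worker, and the worker compares itself against it. Because pthread_t is opaque, the comparison uses pthread_equal rather than ==.

```c
#include <pthread.h>

pthread_t main_id;         /* Set by the main thread before any worker starts */
int worker_is_main = -1;   /* Written by the worker, read after the join */

/* Worker: compare its own handle with the one main recorded */
static void* Compare_with_main(void* unused) {
   (void) unused;
   worker_is_main = pthread_equal(pthread_self(), main_id) != 0;
   return NULL;
}

/* Returns 1 if the calling thread is the one recorded in main_id */
int Is_main_thread(void) {
   return pthread_equal(pthread_self(), main_id) != 0;
}

/* Call from the main thread: record its identity, then start and
   join one worker (the join makes worker_is_main safe to read) */
void Run_identity_demo(void) {
   pthread_t worker;

   main_id = pthread_self();
   pthread_create(&worker, NULL, Compare_with_main, NULL);
   pthread_join(worker, NULL);
}
```

When Run_identity_demo is called from the main thread, Is_main_thread returns 1 in that thread, while worker_is_main ends up 0, since the worker is a different thread.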

In Lines 19–21, we use the pthread_create function to start the threads. Like most Pthreads functions, its name starts with the string pthread_. The syntax of pthread_create is

int pthread_create(
      pthread_t* thread_p /* out */,
      const pthread_attr_t* attr_p /* in */,
      void* (*start_routine)(void*) /* in */,
      void* arg_p /* in */);

The first argument is a pointer to the appropriate pthread_t object. Note that the object is not allocated by the call to pthread_create; it must be allocated before the call. We won’t be using the second argument, so we just pass the argument NULL in our function call. The third argument is the function that the thread is to run, and the last argument is a pointer to the argument that should be passed to the function start_routine. The return value for most Pthreads functions indicates if there’s been an error in the function call. In order to reduce the clutter in our examples, in this chapter (as in most of the rest of the book) we’ll generally ignore the return values of Pthreads functions.

Let’s take a closer look at the last two arguments.

The function that’s started by pthread_create should have a prototype that looks something like this:

void* thread_function(void* args_p);

Recall that the type void* can be cast to any pointer type in C, so args_p can point to a list containing one or more values needed by thread_function. Similarly, the return value of thread_function can point to a list of one or more values. In our call to pthread_create, the final argument is a fairly common kluge: we’re effectively assigning each thread a unique integer rank. Let’s first look at why we are doing this; then we’ll worry about the details of how to do it.

Consider the following problem: We start a Pthreads program that uses two threads, but one of the threads encounters an error. How do we, the users, know which thread encountered the error? We can’t just print out the pthread_t object, since it’s opaque. However, if we assign the first thread rank 0 and the second thread rank 1 when we start the threads, we can easily determine which thread ran into trouble by just including the thread’s rank in the error message.

Since the thread function takes a void* argument, we could allocate one int in main for each thread and assign each allocated int a unique value. When we start a thread, we could then pass a pointer to the appropriate int in the call to pthread_create. However, most programmers resort to some trickery with casts. Instead of creating an int in main for the “rank,” we cast the loop variable thread to have type void*. Then in the thread function, Hello, we cast the argument back to a long (Line 33).
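The first approach, one separate variable per thread, can be sketched as follows. This is a hypothetical variant of Program 4.1, not the version the program uses: each thread receives the address of its own element in a ranks array instead of a cast integer. The names Hello_from_rank, Start_with_rank_array, greeted, and MAX_THREADS are illustrative assumptions.

```c
#include <pthread.h>
#include <stdio.h>
#include <stdlib.h>

#define MAX_THREADS 64

int greeted[MAX_THREADS];   /* greeted[r] is set to 1 by the thread of rank r */

/* The argument is a pointer to this thread's own long, not a cast integer */
void* Hello_from_rank(void* rank_p) {
   long my_rank = *(long*) rank_p;

   greeted[my_rank] = 1;
   printf("Hello from thread %ld\n", my_rank);
   return NULL;
}

/* Starts thread_count threads (at most MAX_THREADS), each with a
   pointer to its own element of ranks.  Passing &t, the address of
   the shared loop variable, would be a bug: t keeps changing while
   the threads start, so several threads could read the same value. */
void Start_with_rank_array(long thread_count, long ranks[]) {
   pthread_t* handles = malloc(thread_count * sizeof(pthread_t));

   for (long t = 0; t < thread_count; t++) {
      ranks[t] = t;
      pthread_create(&handles[t], NULL, Hello_from_rank, &ranks[t]);
   }
   for (long t = 0; t < thread_count; t++)
      pthread_join(handles[t], NULL);   /* ranks must outlive every thread */
   free(handles);
}
```

The array has to remain valid until every thread has read its element, which is why the joins happen before Start_with_rank_array returns.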

The result of carrying out these casts is implementation-defined (that is, it depends on the system), but most C compilers do allow this. However, if the size of pointer types is different from the size of the integer type you use for the rank, you may get a warning. On the machines we used, pointers are 64 bits and ints are only 32 bits, so we use long instead of int.

Note that our method of assigning thread ranks and, indeed, the thread ranks themselves are just a convenient convention that we’ll use. There is no requirement that a thread rank be passed in the call to pthread_create. Indeed, there’s no requirement that a thread be assigned a rank.

Also note that there is no technical reason for each thread to run the same function; we could have one thread run hello, another run goodbye, and so on. However, as with the MPI programs, we’ll typically use “single program, multiple data” style parallelism with our Pthreads programs. That is, each thread will run the same thread function, but we’ll obtain the effect of different thread functions by branching within a thread.
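Here is a minimal sketch of that SPMD style, assuming a hypothetical split in which even-ranked threads play the role of “hello” and odd-ranked threads the role of “goodbye.” Only one thread function, Hello_or_goodbye, is ever passed to pthread_create; the role array and the names Run_spmd and MAX_THREADS are illustrative additions so that the branch each thread took can be checked afterward.

```c
#include <pthread.h>
#include <stdio.h>

#define MAX_THREADS 64

char role[MAX_THREADS];   /* Which branch each rank took: 'H' or 'G' */

/* Every thread runs this one function; branching on the rank gives
   the effect of separate "hello" and "goodbye" thread functions. */
void* Hello_or_goodbye(void* rank) {
   long my_rank = (long) rank;

   if (my_rank % 2 == 0) {
      role[my_rank] = 'H';
      printf("Hello from thread %ld\n", my_rank);
   } else {
      role[my_rank] = 'G';
      printf("Goodbye from thread %ld\n", my_rank);
   }
   return NULL;
}

/* Starts thread_count (at most MAX_THREADS) threads, all running the
   same function, and waits for them, as in Program 4.1 */
void Run_spmd(long thread_count) {
   pthread_t handles[MAX_THREADS];

   for (long t = 0; t < thread_count; t++)
      pthread_create(&handles[t], NULL, Hello_or_goodbye, (void*) t);
   for (long t = 0; t < thread_count; t++)
      pthread_join(handles[t], NULL);
}
```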

4. Running the threads

The thread that’s running the main function is sometimes called the main thread. Hence, after starting the threads, it prints the message

Hello from the main thread

In the meantime, the threads started by the calls to pthread_create are also running. They get their ranks by casting in Line 33, and then print their messages. Note that when a thread is done, since its function has return type void*, the thread should return something. In this example, the threads don’t actually need to return anything, so they return NULL.

In Pthreads, the programmer doesn’t directly control where the threads are run. There’s no argument in pthread_create saying which core should run which thread. Thread placement is controlled by the operating system. Indeed, on a heavily loaded system, the threads may all be run on the same core. In fact, if a program starts more threads than cores, we should expect multiple threads to be run on a single core. However, if there is a core that isn’t being used, operating systems will typically place a new thread on such a core.

5. Stopping the threads

In Lines 25 and 26, we call the function pthread_join once for each thread. A single call to pthread_join will wait for the thread associated with the pthread_t object to complete. The syntax of pthread_join is

int pthread_join(
      pthread_t thread /* in */,
      void** ret_val_p /* out */);

The second argument can be used to receive any return value computed by the thread. So in our example, each thread executes a return and, eventually, the main thread will call pthread_join for that thread to complete the termination.
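Program 4.1 passes NULL as the second argument, but a thread can hand a result back to the main thread through it. The sketch below is a hypothetical illustration, not part of Program 4.1: the thread function Square_rank computes the square of its rank, and Join_and_collect receives the result through pthread_join. The result lives in heap memory, since a local variable of the thread function would no longer exist once the thread terminates.

```c
#include <pthread.h>
#include <stdlib.h>

/* Thread function: returns a pointer to a heap-allocated result
   (or NULL if the allocation fails) */
void* Square_rank(void* rank) {
   long my_rank = (long) rank;
   long* result_p = malloc(sizeof(long));

   if (result_p != NULL)
      *result_p = my_rank * my_rank;
   return result_p;
}

/* Starts one thread, waits for it, and returns the value it computed
   (-1 if the thread could not allocate its result).  pthread_join
   stores the thread's void* return value in ret_val. */
long Join_and_collect(long rank) {
   pthread_t handle;
   void* ret_val;
   long result;

   pthread_create(&handle, NULL, Square_rank, (void*) rank);
   pthread_join(handle, &ret_val);
   if (ret_val == NULL)
      return -1;
   result = *(long*) ret_val;
   free(ret_val);   /* The joining thread now owns and frees the result */
   return result;
}
```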

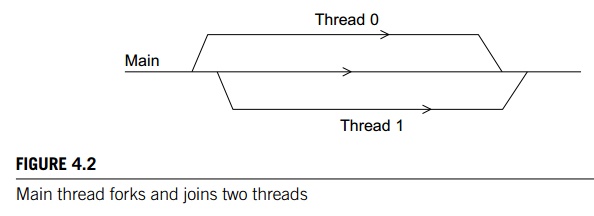

This function is called pthread_join because of a diagramming style that is often used to describe the threads in a multithreaded process. If we think of the main thread as a single line in our diagram, then, when we call pthread_create, we can create a branch or fork off the main thread. Multiple calls to pthread_create will result in multiple branches or forks. Then, when the threads started by pthread_create terminate, the diagram shows the branches joining the main thread. See Figure 4.2.

6. Error checking

In the interest of keeping the program compact and easy to read, we have resisted the temptation to include many details that would be important in a “real” program. The most likely source of problems in this example (and in many programs) is the user input or lack of it. It would therefore be a very good idea to check that the program was started with a command-line argument and, if it was, to check the actual value of the number of threads to see if it’s reasonable. If you visit the book’s website, you can download a version of the program that includes this basic error checking.
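The downloadable version of the program isn’t reproduced here. As a hedged illustration of the kind of checks meant, the hypothetical function Get_thread_count below verifies that exactly one argument was given, that it is entirely numeric, and that it lies between 1 and a caller-supplied maximum.

```c
#include <stdio.h>
#include <stdlib.h>

/* Hypothetical helper: returns the thread count given on the command
   line, or -1 (after printing a message to stderr) if the argument is
   missing, not a number, or outside 1..max_threads. */
long Get_thread_count(int argc, char* argv[], long max_threads) {
   const char* prog = argc > 0 ? argv[0] : "pth_hello";
   char* end_p;
   long count;

   if (argc != 2) {
      fprintf(stderr, "usage: %s <number of threads>\n", prog);
      return -1;
   }

   count = strtol(argv[1], &end_p, 10);
   if (end_p == argv[1] || *end_p != '\0'
         || count < 1 || count > max_threads) {
      fprintf(stderr, "%s: number of threads must be an integer "
            "between 1 and %ld\n", prog, max_threads);
      return -1;
   }
   return count;
}
```

In main, the call to strtol in Program 4.1 could be replaced by a call such as Get_thread_count(argc, argv, 1024), with the program exiting if the result is -1. The limit of 1024 is an arbitrary example value.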

It may also be a good idea to check the error codes returned by the Pthreads functions. This can be especially useful when you’re just starting to use Pthreads and some of the details of function use aren’t completely clear.
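Most Pthreads functions return 0 on success and a nonzero error number on failure, so the return value itself can be passed to strerror to get a readable message. A minimal sketch, assuming a hypothetical helper Check_pthread and a trivial thread function Do_nothing:

```c
#include <pthread.h>
#include <stdio.h>
#include <string.h>

/* Hypothetical helper: prints a message if err is nonzero.
   The error number comes straight from the Pthreads call's
   return value.  Returns err unchanged. */
int Check_pthread(int err, const char* what) {
   if (err != 0)
      fprintf(stderr, "%s failed: %s\n", what, strerror(err));
   return err;
}

static void* Do_nothing(void* arg) {
   return arg;
}

/* Creates and joins one thread, reporting any failure.
   Returns 0 if both calls succeed. */
int Start_and_join_checked(void) {
   pthread_t handle;
   int err = Check_pthread(
         pthread_create(&handle, NULL, Do_nothing, NULL), "pthread_create");

   if (err != 0)
      return err;   /* No thread was created, so there is nothing to join */
   return Check_pthread(pthread_join(handle, NULL), "pthread_join");
}
```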

7. Other approaches to thread startup

In our example, the user specifies the number of threads to start by typing in a command-line argument. The main thread then creates all of the “subsidiary” threads. While the threads are running, the main thread prints a message, and then waits for the other threads to terminate. This approach to threaded programming is very similar to our approach to MPI programming, in which the MPI system starts a collection of processes and waits for them to complete.

There is, however, a very different approach to the design of multithreaded programs. In this approach, subsidiary threads are only started as the need arises. As an example, imagine a Web server that handles requests for information about highway traffic in the San Francisco Bay Area. Suppose that the main thread receives the requests and subsidiary threads actually fulfill the requests. At 1 o’clock on a typical Tuesday morning, there will probably be very few requests, while at 5 o’clock on a typical Tuesday evening, there will probably be thousands of requests. Thus, a natural approach to the design of this Web server is to have the main thread start subsidiary threads when it receives requests.
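As a rough sketch of the “start threads as needed” design, the main thread below starts one subsidiary thread for each request it is handed. Everything here is a hypothetical stand-in: struct request replaces a real network request, doubling the query replaces real work, and an array of requests replaces the stream of incoming connections.

```c
#include <pthread.h>

#define MAX_REQUESTS 16

/* Hypothetical request: a real server would hold a connection and a
   query about traffic; here it just carries a number to "answer". */
struct request {
   int query;
   int answer;
};

/* Subsidiary thread: fulfills exactly one request, then terminates */
void* Handle_request(void* req_p) {
   struct request* req = req_p;

   req->answer = 2 * req->query;   /* Stand-in for real work */
   return NULL;
}

/* Main thread: starts one subsidiary thread per request as requests
   "arrive" (here, the first n entries of reqs, capped at
   MAX_REQUESTS), so the number of threads tracks the load. */
void Serve(struct request reqs[], int n) {
   pthread_t handles[MAX_REQUESTS];

   if (n > MAX_REQUESTS)
      n = MAX_REQUESTS;
   for (int i = 0; i < n; i++)
      pthread_create(&handles[i], NULL, Handle_request, &reqs[i]);
   for (int i = 0; i < n; i++)
      pthread_join(handles[i], NULL);
}
```

The final loop of joins is there only so that the sketch terminates and can be checked; a long-running server would need its own way of reclaiming threads that have finished.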

Now, we do need to note that thread startup necessarily involves some overhead. The time required to start a thread will be much greater than, say, a floating-point arithmetic operation, so in applications that need maximum performance the “start threads as needed” approach may not be ideal. In such a case, it may make sense to use a somewhat more complicated scheme, one that has characteristics of both approaches. Our main thread can start all the threads it anticipates needing at the beginning of the program (as in our example program). However, when a thread has no work, instead of terminating, it can sit idle until more work is available. In Programming Assignment 4.5 we’ll look at how we might implement such a scheme.
