Chapter: An Introduction to Parallel Programming : Shared-Memory Programming with Pthreads

Producer-Consumer Synchronization and Semaphores

PRODUCER-CONSUMER SYNCHRONIZATION AND SEMAPHORES

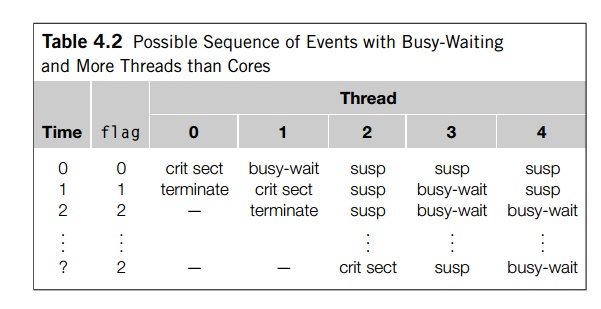

Although busy-waiting is

generally wasteful of CPU resources, it has the property by which we know, in

advance, the order in which the threads will execute the code in the critical

section: thread 0 is first, then thread 1, then thread 2, and so on. With

mutexes, the order in which

the threads execute the critical section is left to chance and the system.

Since addition is commutative, this doesn’t matter in our program for

estimating . However, it’s not difficult to think of situations in which we

also want to control the order in which the threads execute the code in the

critical section. For example, suppose each thread generates an n

n matrix,

and we want to multiply the matrices together in thread-rank order. Since

matrix multiplication isn’t commutative, our mutex solution would have

problems:

/* n and product matrix are

shared and initialized by the main thread */

/* product matrix is

initialized to be the identity matrix */

void Thread work(void* rank)

{

long my_rank = (long) rank;

matrix_t my_mat = Allocate

matrix(n);

Generate_matrix(my_mat);

pthread_mutex_lock(&mutex);

Multiply_matrix(product_mat,

my_mat); pthread_mutex_unlock(&mutex);

Free_matrix(&my_mat);

return NULL;

/* Thread work */

A somewhat more complicated example involves

having each thread “send a mes-sage” to another thread. For example, suppose we

have thread count or t threads and we want thread 0 to send a message to thread 1, thread

1 to send a message to thread 2, . . . , thread t- 2 to send a message to thread t- 1 and thread t -1 to send a message to thread 0. After a thread “receives” a

message, it can print the message and terminate. In order to implement the

message transfer, we can allocate a shared array of char . Then each thread can allocate storage for the message it’s

sending, and, after it has initialized the message, set a pointer in the shared

array to refer to it. In order to avoid dereferencing undefined pointers, the

main thread can set the individual entries in the shared array to NULL. See Program 4.7. When we run the program with more than a couple

of threads on a dual-core system, we see that some of the messages are never

received. For example, thread 0, which is started first, will typically finish

before thread t-1 has copied the message into the messages array. This isn’t surprising, and we could fix

the problem by replacing the if statement in Line 12 with a busy-wait while statement:

Program 4.7: A first attempt at sending messages using

Pthreads

/* messages has type char . It’s allocated in main. */

/* Each entry is set to NULL in main. */

void

Send_msg(void rank) {

long

my_rank = (long) rank;

long

dest = (my_rank + 1) % thread_count;

long

source = (my_rank + thread_count - 1) % thread_count;

char* my_msg = malloc(MSG_MAX* sizeof(char));

sprintf(my_msg,

"Hello to %ld from %ld", dest, my_rank);

messages[dest]

= my_msg;

if

(messages[my_rank] != NULL)

printf("Thread

%ld > %s\n", my_rank, messages[my_rank]);

else

printf("Thread

%ld > No message from %ld\n", my_rank, source);

return

NULL;

} /*

Send msg */

while (messages[my_rank] == NULL);

printf("Thread %ld > %s\n",

my_rank, messages[my_rank]);

Of course, this solution would have the same

problems that any busy-waiting solution has, so we’d prefer a different

approach.

After executing the assignment in Line 10, we’d

like to “notify” the thread with rank dest that it can proceed to print the message. We’d

like to do something like this:

. . .

messages[dest] = my_msg;

Notify thread dest that it can proceed;

Await notification from thread source

printf("Thread %ld > %snn", my_rank,

messages[my_rank]);

. . .

It’s not at all clear how mutexes can be of

help here. We might try calling pthread

mutex unlock to “notify” the thread with

rank dest. However, mutexes are initialized to be unlocked, so we’d need to add a call before initializing messages[dest]

to lock the mutex. This will be a problem since

we don’t know when the threads will reach the calls to pthread mutex lock.

To make this a little clearer, suppose that the

main thread creates and initializes an array of mutexes, one for each thread.

Then, we’re trying to do something like this:

.

. .

pthread_mutex_lock(mutex[dest]);

.

. .

messages[dest]

= my msg;

pthread_mutex_unlock(mutex[dest]);

.

. .

pthread_mutex_lock(mutex[my_rank]);

printf("Thread

%ld > %snn", my_rank, messages[my_rank]);

.

. .

Now suppose we have two threads, and thread 0

gets so far ahead of thread 1 that it reaches the second call to pthread mutex lock in Line 7 before thread 1 reaches the first in

Line 2. Then, of course, it will acquire the lock and continue to the printf statement. This will result in thread 0’s dereferencing a null

pointer, and it will crash.

There are other approaches to solving this problem with mutexes. See, for

exam-ple, Exercise 4.7. However, POSIX also provides a somewhat different means

of controlling access to critical sections: semaphores. Let’s take a look at them.

Semaphores can be thought of as a special type

of unsigned

int, so they can take on the values 0, 1, 2, . . .

. In most cases, we’ll only be interested in using them when they take on the

values 0 and 1. A semaphore that only takes on these values is called a binary semaphore. Very roughly speaking, 0

corresponds to a locked mutex, and 1 corresponds to an unlocked mutex. To use a

binary semaphore as a mutex, you initialize it to 1—that is, it’s “unlocked.” Before the critical section you

want to protect, you place a call to the function sem wait. A thread that executes sem wait will block if the semaphore is 0. If the

semaphore is nonzero, it will decrement the semaphore and proceed. After executing the code in the

critical section, a thread calls sem post, which increments the semaphore, and a thread waiting in sem wait can proceed.

Semaphores were first defined by the computer

scientist Edsger Dijkstra in [13]. The name is taken from the mechanical device

that railroads use to control which train can use a track. The device consists

of an arm attached by a pivot to a post. When the arm points down, approaching

trains can proceed, and when the arm is per-pendicular to the post, approaching

trains must stop and wait. The track corresponds to the critical section: when

the arm is down corresponds to a semaphore of 1, and when the arm is up

corresponds to a semaphore of 0. The sem wait and sem post calls correspond to signals sent by the train

to the semaphore controller.

For our current purposes, the crucial

difference between semaphores and mutexes is that there is no ownership

associated with a semaphore. The main thread can ini-tialize all of the

semaphores to 0—that is, “locked,” and then any thread can execute a sem post on any of the semaphores, and, similarly, any thread can execute sem wait on any of the semaphores. Thus, if we use semaphores, our Send msg function can be written as shown in Program 4.8.

Program 4.8: Using semaphores so that threads can send

messages

/* messages is allocated and initialized to

NULL in main */

/* semaphores is allocated and initialized to 0

(locked) in

main */

void* Send_msg(void* rank)

f

long

my_rank = (long) rank;

long

dest = (my_rank + 1) % thread_count;

char* my_msg = malloc(MSG_MAX* sizeof(char));

sprintf(my_msg,

"Hello to %ld from %ld", dest, my_rank);

messages[dest]

= my_msg;

sem_post(&semaphores[dest])

/* ‘‘Unlock’’

the semaphore of dest */

/* Wait for our semaphore to be unlocked */

sem_wait(&semaphores[my_rank]);

printf("Thread

%ld > %s\n", my_rank, messages[my_rank]);

return

NULL;

} /*

Send msg */

The syntax of the various semaphore functions

is

int sem init(

sem_t* semaphore/,p /* out */,

int shared /* in */,

unsigned initial_val /* in */);

int sem destroy(sem_t∗ semaphore_p /∗ in/out ∗/);

int sem post(sem_t∗ semaphore_p /∗ in/out ∗/);

int sem wait(sem_t∗ semaphore_p /∗ in/out ∗/);

We won’t make use of the second argument to sem

init: the constant 0 can be passed in. Note that semaphores are not part of

Pthreads. Hence, it’s necessary to add the following preprocessor directive to

any program that uses them:5

#include <semaphore.h>

Finally, note that the message-sending problem

didn’t involve a critical section. The problem wasn’t that there was a block of

code that could only be executed by one thread at a time. Rather, thread my

rank couldn’t proceed until thread source had finished creating the message.

This type of synchronization, when a thread can’t proceed until another thread

has taken some action, is sometimes called producer-consumer synchronization.

Related Topics