Chapter: Multicore Application Programming For Windows, Linux, and Oracle Solaris : Identifying Opportunities for Parallelism

Multiple Loosely Coupled Tasks


A slight variation on the theme of multiple independent tasks is one in which the tasks are different but work together to form a single application. Some applications need multiple tasks running simultaneously, with each task generally independent of, and often different from, the other running tasks. What makes this an application rather than just a collection of tasks is that there is some element of communication within the system. The communication might be from the tasks to a central task controller, or the tasks might report some status back to a status monitor.

In this instance, the tasks themselves are largely independent. They may occasionally communicate, but that communication is likely to be asynchronous or perhaps limited to exceptional situations.

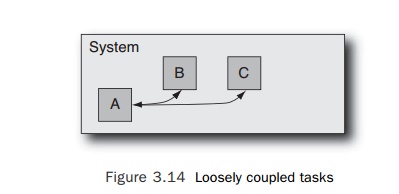

Figure 3.14 shows a single system running three tasks. Task A is a control or supervisor task, and tasks B and C report their status to task A.
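As a rough illustration of this arrangement, the Python sketch below models task A as a supervisor and tasks B and C as workers that post status messages to it through a thread-safe queue. The names `report_status_worker`, `supervise`, and `run_supervised`, the message format, and the use of threads rather than separate processes are all assumptions made for the example; the point is only that each worker keeps running after posting a report, so the communication stays asynchronous.

```python
import queue
import threading


def report_status_worker(name, n_steps, status_q):
    """Hypothetical worker task (B or C): does its own work and posts
    status messages to the supervisor without waiting for any reply."""
    for step in range(n_steps):
        # ... this task's independent work would go here ...
        status_q.put((name, f"step {step} done"))  # asynchronous report
    status_q.put((name, "finished"))


def supervise(status_q, n_workers):
    """Hypothetical supervisor (task A): collects status reports until
    every worker has said it is finished."""
    reports = []
    finished = 0
    while finished < n_workers:
        name, status = status_q.get()  # blocks until some worker reports
        reports.append((name, status))
        if status == "finished":
            finished += 1
    return reports


def run_supervised(n_steps=3):
    status_q = queue.Queue()
    workers = [
        threading.Thread(target=report_status_worker, args=(name, n_steps, status_q))
        for name in ("B", "C")
    ]
    for w in workers:
        w.start()
    # The calling thread plays the role of task A.
    reports = supervise(status_q, len(workers))
    for w in workers:
        w.join()
    return reports
```

Reports from B and C may interleave in any order, but each worker's own messages arrive in the order it sent them, because the queue is first-in, first-out.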

The performance of the application depends on the activity of these individual tasks. If the CPU-consuming part of the “application” has been split off into a separate task, then the rest of the components may become more responsive. As an example of this improved responsiveness, assume that a single-threaded application is responsible for receiving and forwarding packets across the network and for maintaining a log of packet activity on disk. This could be split into two loosely coupled tasks: one receives and forwards the packets while the other is responsible for maintaining the log. With the original code, there might be a delay in processing an incoming packet if the application is busy writing status to the log. If the application is split into separate tasks, the packet can be received and forwarded immediately, and the log writer will record this event at a convenient point in the future.
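A minimal sketch of the packet example, assuming Python threads stand in for the two tasks and that hypothetical `send` and `write_log` callables stand in for the real network and disk operations. The forwarding task never touches the log itself; it hands each packet to the logging task through a queue and moves on to the next packet.

```python
import queue
import threading


def forward_packets(packets, send, log_q):
    """Hypothetical forwarding task: handles each packet immediately and
    defers the disk work to the logging task."""
    for pkt in packets:
        send(pkt)       # forward right away; no disk I/O on this path
        log_q.put(pkt)  # hand the log entry to the logging task
    log_q.put(None)     # sentinel: no more packets


def write_packet_log(log_q, write_log):
    """Hypothetical log-writer task: records packet activity when it gets to it."""
    while True:
        pkt = log_q.get()
        if pkt is None:
            break
        write_log(f"forwarded packet {pkt}")


def run_split(packets):
    sent, log_lines = [], []
    log_q = queue.Queue()
    t_fwd = threading.Thread(target=forward_packets, args=(packets, sent.append, log_q))
    t_log = threading.Thread(target=write_packet_log, args=(log_q, log_lines.append))
    t_fwd.start()
    t_log.start()
    t_fwd.join()
    t_log.join()
    return sent, log_lines
```

Because `queue.Queue` is unbounded by default, a burst of packets only lengthens the logger's backlog rather than stalling the forwarder; a production design would probably need to bound the queue or otherwise handle a logger that falls permanently behind.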

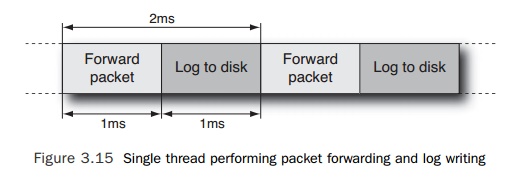

The performance gain arises in this case because we have shared the work between two threads. The packet-forwarding task only has to process packets and does not get delayed by disk activity. The disk-writing task does not get stalled reading or writing packets. If we assume that it takes 1ms to read and forward the packet and another 1ms to write status to disk, then with the original code, we can process a new packet every 2ms (a rate of 500 packets per second). Figure 3.15 shows this situation.
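The serial rate follows directly from the per-packet cost. Writing it out, with $t_{\text{fwd}}$ and $t_{\text{log}}$ as labels introduced here for the 1ms forwarding time and the 1ms logging time:

$$
\text{rate}_{\text{serial}} = \frac{1}{t_{\text{fwd}} + t_{\text{log}}} = \frac{1}{1\,\text{ms} + 1\,\text{ms}} = \frac{1}{0.002\,\text{s}} = 500\ \text{packets/s}
$$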

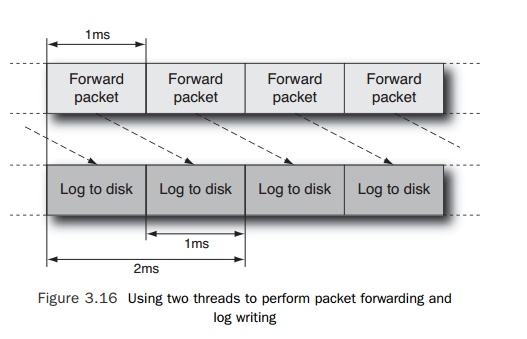

If we split these into separate tasks, then we can handle a packet every 1ms, so throughput will double to 1,000 packets per second. Responsiveness also improves, because we will handle each packet within 1ms of arrival rather than within 2ms. However, it still takes 2ms for the complete handling of each packet, forwarding plus logging, so although the throughput of the system has doubled, the time to finish processing each packet has remained the same. Figure 3.16 shows this situation.
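The throughput and completion-time claims can be checked with a small timing model. This is a sketch under simplifying assumptions not stated in the text: packets are always waiting to be processed, each step takes exactly its nominal time, and the hypothetical `schedule` and `summarize` helpers ignore queuing overhead and any contention between the two tasks.

```python
def schedule(n_packets, fwd_ms=1.0, log_ms=1.0, split=False):
    """Return (start, forwarded, logged) times in ms for each packet."""
    timeline = []
    fwd_free = 0.0  # when the forwarding task (or the single task) is next free
    log_free = 0.0  # when the logging task is next free (split case only)
    for _ in range(n_packets):
        start = fwd_free
        forwarded = start + fwd_ms
        if split:
            # Logging starts once the packet is forwarded and the logger is idle.
            logged = max(log_free, forwarded) + log_ms
            log_free = logged
            fwd_free = forwarded  # forwarder picks up the next packet at once
        else:
            logged = forwarded + log_ms
            fwd_free = logged  # single task must finish logging first
        timeline.append((start, forwarded, logged))
    return timeline


def summarize(timeline):
    """Return (ms between packets, packets per second, ms to fully handle a packet)."""
    starts = [start for start, _, _ in timeline]
    interval = (starts[-1] - starts[0]) / (len(starts) - 1)
    throughput = 1000.0 / interval
    handling = max(logged - start for start, _, logged in timeline)
    return interval, throughput, handling


print(summarize(schedule(10, split=False)))  # (2.0, 500.0, 2.0)
print(summarize(schedule(10, split=True)))   # (1.0, 1000.0, 2.0)
```

Under these assumptions the single task accepts a packet every 2ms, while the split version accepts one every 1ms, yet in both cases each packet takes 2ms from pickup until its log entry is written: the throughput doubles while the per-packet completion time does not change.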
