Chapter: Multicore Application Programming For Windows, Linux, and Oracle Solaris : Identifying Opportunities for Parallelism

Determining the Maximum Practical Threads

Determining

the Maximum Practical Threads

If we take Amdahl’s law as a reasonable approximation to application scaling, it becomes an interesting question to ask how many threads we should expect an application to scale to. If we have an application that spends only 10% of its time in code that can be parallelized, it is unlikely that we’ll see much noticeable gain when using eight threads over using four threads. If we assume it took 100 seconds to start with, then four threads would complete the task in 92.5 seconds, whereas eight threads would take 91.25 sec-onds. This is just over a second out of a total duration of a minute and a half. In case the use of seconds might be seen as a way of trivializing the difference, imagine that the original code took 100 days; then the difference is equivalent to a single day out of a total duration of three months.

There

will be some applications where every last second is critical and it makes

sense to use as many resources as possible to increase the performance to as

high as possible. However, there are probably a large number of applications

where a small gain in per-formance is not worth the effort.

We can

analyze this issue assuming that a person has a tolerance, T, within which they

cease to care about a difference in performance. For many people this is

probably 10%; if the performance that they get is within 10% of the best

possible, then it is acceptable.

Other

groups might have stronger or weaker constraints.

Returning

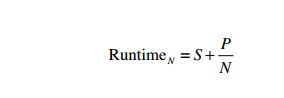

to Amdahl’s law, recall that the runtime of an application that has a

pro-portion P of parallelizable code and S of serial code and that is run with

N threads is as follows:

The

optimal runtime, when there are an infinite number of threads, is S. So, a

run-time within T percent of the optimal would be as follows:

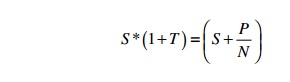

Acceptable

runtime = S * (1 + T )

We can

compare the acceptable runtime with the runtime with N threads:

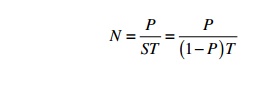

We can

then rearrange and solve for N to get the following relationship for N:

Using

this equation, Figure 3.11 shows the number of threads necessary to get a

run-time that is within 10% of the best possible.

Reading

this chart, it is clear that an application will have only limited scalability

until it spends at least half of its runtime in code that can be parallelized.

For an application to scale to large numbers of cores, it requires that 80%+ of

the serial runtime is spent in parallelizable code.

If

Amdahl’s law were the only constraint to scaling, then it is apparent that

there is lit-tle benefit to using huge thread counts on any but the most

embarrassingly parallel applications. If performance is measured as throughput

(or the amount of work done), it is probable that for a system capable of

running many threads, those threads may be bet-ter allocated to a number of

processes rather than all being utilized by a single process.

However,

Amdahl’s law is a simplification of the scaling situation. The next section

will discuss a more realistic model.

Related Topics