Chapter: Advanced Computer Architecture : Instruction Level Parallelism

Important Short Questions and Answers: Instruction Level Parallelism

1. Give few essential features of RISC

architecture.

The

RISC-based machines focused the attention of designers on two critical

performance techniques, the exploitation of instruction level parallelism

(initially through pipelining and later through multiple instruction issue) and

the use of caches (initially in simple forms and later using more sophisticated

organizations and optimizations).

The

RISC-based computers raised the performance bar, forcing prior architectures to

keep up or disappear.or/ both)

RISC

architectures are characterized by a few key properties, which dramatically

simplify their implementation:

• All

operations on data apply to data in registers and typically change the entire

register (32 or 64 bits per register).

• The only

operations that affect memory are load and store operations that move data from

memory to a register or to memory from a register, respectively.

Load and

store operations that load or store less than a full register (e.g., a byte, 16

bits, or 32 bits) are often available.

• The

instruction formats are few in number with all instructions typically being one

size. These simple properties lead to dramatic simplifications in the

implementation of pipelining, which is why these instruction sets were designed

this way.

2. Power sensitive designs will avoid fixed field

decoding. Why?

In RISC

architecture, register specifiers are at a fixed location and decoding is done

in parallel with reading registers. This technique is known as 'fixed field

decoding'. In this method, we may read a register which we may not use. This

doesn't help, but also doesn't hurt the performance. In case of power sensitive

designs, it does waste energy for reading an unnecessary register.

3. Give the causes of structural hazards.

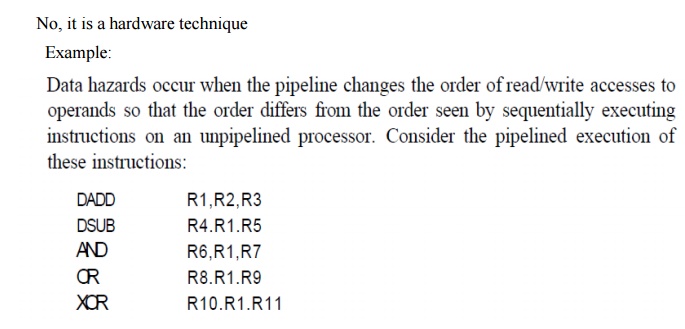

4. Give an example of result forwarding technique

to minimize data hazard stalls. Is forwarding a software technique?

5. Give a sequence of code that has true

dependence, anti-dependence and control dependence in it.

true

dependence: Instrns 1,2 (R0)

antidependence:

Instructions 3,4 (R1)

output

dependence: Instructions 2,3 (F4); 4,5 (R1)

6. What is the flaw in 1-bit branch prediction

scheme?

7. What is the key idea behind the

implementation of hardware speculation?

8. What is

trace scheduling? Which type of processors use this technique?

Trace

scheduling is useful for processors with a large number of issues per clock, w

here conditional or predicated execution is inappropriate or unsupported, and

where simple loop unrolling may not be sufficient by itself to uncover enough

ILP to keep the processor busy. Trace scheduling is a way to organize the

global code motion process, so as to simplify the code scheduling by incurring

the costs of possible code motion on the less frequent paths.

There are

two steps to trace scheduling. The first step, called trace selection, tries to

find a likely sequence of basic blocks whose operations will be put together

into a smaller number of instructions; this sequence is called a trace. Loop

unrolling is used to generate long traces, since loop branches are taken with

high probability.

Once a

trace is selected, the second process, called trace compaction, tries to

squeeze the trace into a small number of wide instructions. Trace compaction is

code scheduling; hence, it attempts to move operations as early as it can in a

sequence (trace), packing the operations into as few wide instructions (or

issue packets) as possible.

10 . Mention few limits on Instruction Level Parallelism.

1. Limitations

on the Window Size and Maximum Issue Count

2. Realistic

Branch and Jump Prediction

3. The

Effects of Finite Registers

4. The

Effects of Imperfect Alias Analysis

11.List the

various data dependence.

Ø Data

dependence

Ø Name

dependence

Ø Control

Dependence

12.

What is

Instruction Level Parallelism?

Pipelining

is used to overlap the execution of instructions and improve performance. This

potential overlap among instructions is called instruction level parallelism

(ILP) since the instruction can be evaluated in parallel.

13. Give an example of control dependence?

if p1

{s1;}

if p2

{s2;}

S1 is

control dependent on p1, and s2 is control dependent on p2

14. What is the limitation of the simple pipelining

technique?

These

technique uses in-order instruction issue and execution. Instructions are

issued in program order, and if an instruction is stalled in the pipeline, no

later instructions can proceed.

15. Briefly explain the idea behind using reservation

station?

Reservation

station fetches and buffers an operand as soon as available, eliminating the

need to get the operand from a register.

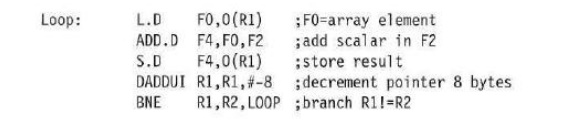

16. Give an example for data dependence.

Loop: L.D

F0,0(R1) ADD.D F4,F0,F2 S.D F4,0(R1) DADDUI R1,R1,#-8 BNE R1,R2,

loop

17. Explain the idea behind

dynamic scheduling?

In

dynamic scheduling the hardware rearranges the instruction execution to reduce

the stalls while maintaining data flow and exception behavior.

18. Mention the advantages of

using dynamic scheduling?

It

enables handling some cases when dependences are unknown at compile time and it

simplifies the compiler. It allows code that was compiled with one pipeline in

mind run efficiently on a different pipeline.

19. What are the possibilities for imprecise

exception?

The

pipeline may have already completed instructions that are later in program

order than instruction causing exception. The pipeline may have not yet

completed some instructions that are earlier in program order than the instructions

causing exception.

20. What are multilevel branch

predictors?

These

predictors use several levels of branch-prediction tables together with an

algorithm for choosing among the multiple predictors.

21. What are branch-target

buffers?

To reduce

the branch penalty we need to know from what address to fetch by end of IF

(instruction fetch). A branch prediction cache that stores the predicted

address for the next instruction after a branch is called a branch-target

buffer or branch target cache.

22. Briefly explain the goal of

multiple-issue processor?

The goal

of multiple issue processors is to allow multiple instructions to issue in a

clock cycle. They come in two flavors: superscalar processors and VLIW

processors.

23. What is speculation?

Speculation

allows execution of instruction before control dependences are resolved.

24. Mention the purpose of using

Branch history table?

It is a

small memory indexed by the lower portion of the address of the branch

instruction. The memory contains a bit that says whether the branch was

recently taken or not.

25. What are super scalar processors?

Superscalar

processors issue varying number of instructions per clock and are either

statically scheduled or dynamically scheduled.

26. Mention the idea behind hardware-based

speculation?

It

combines three key ideas: dynamic branch prediction to choose which instruction

to execute, speculation to allow the execution of instructions before control

dependences are resolved and dynamic scheduling to deal with the scheduling of

different combinations of basic blocks.

27. What are the fields in the

ROB?

Instruction

type Destination field Value field Ready field

28. How many branch selected entries are in

a (2,2) predictors that has a total of 8K bits in a prediction buffer?

number of

prediction entries selected by the branch = 8K number of prediction entries

selected by the branch = 1K

29. What is the advantage of

using instruction type field in ROB?

The

instruction field specifies whether instruction is a branch or a store or a

register operation

30. Mention the advantage of using tournament based

predictors?

The advantage of tournament predictor is its ability to select the right predictor for right branch.

Related Topics