Chapter: Security in Computing : Is There a Security Problem in Computing?

The Meaning of Computer Security

We have seen that any

computer-related system has both theoretical and real weaknesses. The purpose

of computer security is to devise ways to prevent the weaknesses from being

exploited. To understand what preventive measures make the most sense, we

consider what we mean when we say that a system is "secure."

Security Goals

We use the term

"security" in many ways in our daily lives. A "security

system" protects our house, warning the neighbors or the police if an

unauthorized intruder tries to get in. "Financial security" involves

a set of investments that are adequately funded; we hope the investments will

grow in value over time so that we have enough money to survive later in life.

And we speak of children's "physical security," hoping they are safe

from potential harm. Just as each of these terms has a very specific meaning in

the context of its use, so too does the phrase "computer security."

When we talk about computer

security, we mean that we are addressing three important aspects of any

computer-related system: confidentiality, integrity, and availability.

Confidentiality ensures that computer-related

assets are accessed only by authorized parties. That is, only those who should

have access to something will actually get that access. By "access,"

we mean not only reading but also viewing, printing, or simply knowing that a

particular asset exists. Confidentiality is sometimes called secrecy or privacy.

Integrity means that assets can be modified

only by authorized parties or only in authorized ways. In this context,

modification includes writing, changing, changing status, deleting, and

creating.

Availability means that assets are accessible

to authorized parties at appropriate times. In other words, if some person or

system has legitimate access to a particular set of objects, that access should

not be prevented. For this reason, availability is sometimes known by its

opposite, denial of service.
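The three definitions above can be sketched as checks on a single protected object. This is only an illustration under stated assumptions: the `Asset` class, the `read` and `write` functions, and the party names are hypothetical and do not describe any real access control system.

```python
from dataclasses import dataclass, field


@dataclass
class Asset:
    """A hypothetical protected object with separate reader and writer lists."""
    content: str
    readers: set = field(default_factory=set)  # parties authorized to access
    writers: set = field(default_factory=set)  # parties authorized to modify


def read(asset: Asset, party: str) -> str:
    # Confidentiality: only authorized parties may access the content.
    if party not in asset.readers:
        raise PermissionError(f"{party} may not read this asset")
    # Availability: a request from an authorized party is served, not refused.
    return asset.content


def write(asset: Asset, party: str, new_content: str) -> None:
    # Integrity: only authorized parties may modify the content.
    if party not in asset.writers:
        raise PermissionError(f"{party} may not modify this asset")
    asset.content = new_content


# Hypothetical example: two readers, one of whom may also modify.
payroll = Asset("salary table", readers={"alice", "bob"}, writers={"alice"})
```

In this sketch, `read(payroll, "bob")` returns the content, while `write(payroll, "bob", ...)` raises `PermissionError` because bob may read but not modify.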

Security in computing

addresses these three goals. One of the challenges in building a secure system

is finding the right balance among the goals, which often conflict. For

example, it is easy to preserve a particular object's confidentiality in a

secure system simply by preventing everyone from reading that object. However,

this system is not secure, because it does not meet the requirement of

availability for proper access. That is, there must be a balance between

confidentiality and availability.

But balance is not all. In

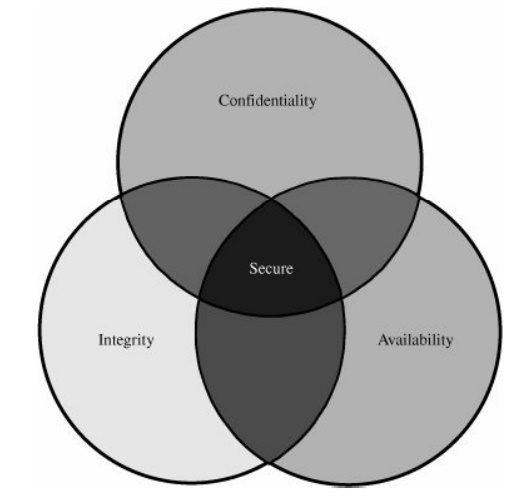

fact, these three characteristics can be independent, can overlap (as shown in Figure 1-3), and can even be mutually exclusive.

For example, we have seen that strong protection of confidentiality can

severely restrict availability. Let us examine each of the three qualities in

depth.

Figure 1-3. Relationship Between Confidentiality, Integrity, and

Availability.

Confidentiality

You may find the notion of

confidentiality to be straightforward: Only authorized people or systems can

access protected data. However, as we see in later chapters, ensuring

confidentiality can be difficult. For example, who determines which people or

systems are authorized to access the current system? By "accessing"

data, do we mean that an authorized party can access a single bit? the whole

collection? pieces of data out of context? Can someone who is authorized

disclose those data to other parties?

Confidentiality is the

security property we understand best because its meaning is narrower than the

other two. We also understand confidentiality well because we can relate

computing examples to those of preserving confidentiality in the real world.

Integrity

Integrity is much harder to

pin down. As Welke and Mayfield [WEL90, MAY91, NCS91b]

point out, integrity means different things in different contexts. When we

survey the way some people use the term, we find several different meanings.

For example, if we say that we have preserved the integrity of an item, we may

mean that the item is

• precise

• accurate

• unmodified

• modified only in acceptable ways

• modified only by authorized people

• modified only by authorized processes

• consistent

• internally consistent

• meaningful and usable

Availability

Availability applies both to

data and to services (that is, to information and to information processing),

and it is similarly complex. As with the notion of confidentiality, different

people expect availability to mean different things. For example, an object or

service is thought to be available if

• It is present in a usable form.

• It has capacity enough to meet the service's needs.

• It is making clear progress, and, if in wait mode, it has a bounded waiting time.

• The service is completed in an acceptable period of time.

We can construct an overall

description of availability by combining these goals. We say a data item,

service, or system is available if

• There is a timely response to our request.

• Resources are allocated fairly so that some requesters are not favored over others.

• The service or system involved follows a philosophy of fault tolerance, whereby hardware or software faults lead to graceful cessation of service or to work-arounds rather than to crashes and abrupt loss of information.

• The service or system can be used easily and in the way it was intended to be used.

• Concurrency is controlled; that is, simultaneous access, deadlock management, and exclusive access are supported as required.

As you can see, expectations

of availability are far-reaching. Indeed, the security community is just

beginning to understand what availability implies and how to ensure it. A

small, centralized control of access is fundamental to preserving

confidentiality and integrity, but it is not clear that a single access control

point can enforce availability. Much of computer security's past success has

focused on confidentiality and integrity; full implementation of availability

is security's next great challenge.

Vulnerabilities

When we prepare to test a

system, we usually try to imagine how the system can fail; we then look for

ways in which the requirements, design, or code can enable such failures. In

the same way, when we prepare to specify, design, code, or test a secure

system, we try to imagine the vulnerabilities that would prevent us from

reaching one or more of our three security goals.

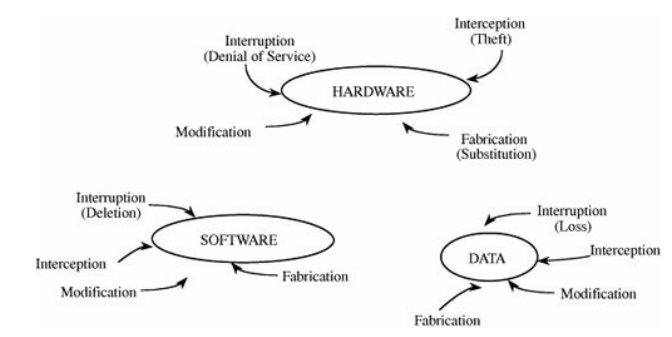

It is sometimes easier to

consider vulnerabilities as they apply to all three broad categories of system

resources (hardware, software, and data), rather than to start with the

security goals themselves. Figure 1-4

shows the types of vulnerabilities we might find as they apply to the assets of

hardware, software, and data. These three assets and the connections among them

are all potential security weak points. Let us look in turn at the

vulnerabilities of each asset.

Figure 1-4. Vulnerabilities of Computing Systems.

Hardware Vulnerabilities

Hardware is more visible than

software, largely because it is composed of physical objects. Because we can

see what devices are hooked to the system, it is rather simple to attack by adding

devices, changing them, removing them, intercepting the traffic to them, or

flooding them with traffic until they can no longer function. However,

designers can usually put safeguards in place.

But there are other ways that

computer hardware can be attacked physically. Computers have been drenched with

water, burned, frozen, gassed, and electrocuted with power surges. People have

spilled soft drinks, corn chips, ketchup, beer, and many other kinds of food on

computing devices. Mice have chewed through cables. Particles of dust, and

especially ash in cigarette smoke, have threatened precisely engineered moving

parts. Computers have been kicked, slapped, bumped, jarred, and punched.

Although such attacks might be intentional, most are not; this abuse might be

considered "involuntary machine slaughter": accidental acts not

intended to do serious damage to the hardware involved.

A more serious attack,

"voluntary machine slaughter" or "machinicide," usually

involves someone who actually wishes to harm the computer hardware or software.

Machines have been shot with guns, stabbed with knives, and smashed with all

kinds of things. Bombs, fires, and collisions have destroyed computer rooms.

Ordinary keys, pens, and screwdrivers have been used to short out circuit

boards and other components. Devices and whole systems have been carried off by

thieves. The list of the kinds of human attacks perpetrated on computers is

almost endless.

In particular, deliberate

attacks on equipment, intending to limit availability, usually involve theft or

destruction. Managers of major computing centers long ago recognized these

vulnerabilities and installed physical security systems to protect their

machines. However, the proliferation of PCs, especially laptops, as office equipment

has resulted in several thousand dollars' worth of equipment sitting

unattended on desks outside the carefully protected computer room. (Curiously,

the supply cabinet, containing only a few hundred dollars' worth of pens,

stationery, and paper clips, is often locked.) Sometimes the security of

hardware components can be enhanced greatly by simple physical measures such as

locks and guards.

Laptop computers are

especially vulnerable because they are designed to be easy to carry. (See Sidebar 1-3 for the story of a stolen laptop.)

Safeware Insurance reported 600,000 laptops stolen in 2003. Credent

Technologies reported that 29 percent were stolen from the office, 25 percent

from a car, and 14 percent in an airport. Stolen laptops are almost never recovered:

The FBI reports 97 percent were not returned [SAI05].

Software Vulnerabilities

Computing equipment is of

little use without the software (operating system, controllers, utility

programs, and application programs) that users expect. Software can be

replaced, changed, or destroyed maliciously, or it can be modified, deleted, or

misplaced accidentally. Whether intentional or not, these attacks exploit the

software's vulnerabilities.

Sometimes, the attacks are

obvious, as when the software no longer runs. More subtle are attacks in which

the software has been altered but seems to run normally. Whereas physical

equipment usually shows some mark of inflicted injury when its boundary has

been breached, the loss of a line of source or object code may not leave an

obvious mark in a program. Furthermore, it is possible to change a program so

that it does all it did before, and then some. That is, a malicious intruder

can "enhance" the software to enable it to perform functions you may

not find desirable. In this case, it may be very hard to detect that the software

has been changed, let alone to determine the extent of the change.

A classic example of

exploiting software vulnerability is the case in which a bank worker realized

that software truncates the fractional interest on each account. In other

words, if the monthly interest on an account is calculated to be $14.5467, the

software credits only $14.54 and ignores the $.0067. The worker amended the

software so that the throw-away interest (the $.0067) was placed into his own

account. Since the accounting practices ensured only that all accounts

balanced, he built up a large amount of money from the thousands of account

throw-aways without detection. It was only when he bragged to a colleague of

his cleverness that the scheme was discovered.
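The arithmetic in this example can be checked with a short sketch. The $14.5467 figure comes from the text; the function name `split_interest` and the count of 100,000 accounts are assumptions made only to show how small fractions add up.

```python
from decimal import Decimal, ROUND_DOWN

CENT = Decimal("0.01")


def split_interest(interest: Decimal) -> tuple:
    """Return (credited, remainder): the interest truncated to whole cents
    and the fraction of a cent that truncation discards."""
    credited = interest.quantize(CENT, rounding=ROUND_DOWN)
    return credited, interest - credited


credited, remainder = split_interest(Decimal("14.5467"))
# credited is Decimal("14.54"); remainder is Decimal("0.0067")

# Hypothetical scale: 100,000 accounts, each discarding the same fraction
# in a single month.
monthly_total = remainder * 100_000  # equal to Decimal("670")
```

Because every account still receives its truncated interest, a check that only confirms that the accounts balance would not notice where the remainders went.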

Software Deletion

Software is surprisingly easy

to delete. Each of us has, at some point in our careers, accidentally erased a

file or saved a bad copy of a program, destroying a good previous copy. Because

of software's high value to a commercial computing center, access to software

is usually carefully controlled through a process called configuration management so that software cannot be deleted,

destroyed, or replaced accidentally. Configuration management uses several

techniques to ensure that each version or release retains its integrity. When

configuration management is used, an old version or release can be replaced

with a newer version only when it has been thoroughly tested to verify that the

improvements work correctly without degrading the functionality and performance

of other functions and services.
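The text does not say which techniques configuration management uses. One common way to confirm that a release has kept its integrity is to record a cryptographic digest of each file and compare it later. The sketch below assumes that approach; the function names and file contents are hypothetical.

```python
import hashlib


def digest(data: bytes) -> str:
    """SHA-256 digest of a file's contents, as a hex string."""
    return hashlib.sha256(data).hexdigest()


def record_release(files: dict) -> dict:
    """Build a manifest mapping each file name to the digest of its contents."""
    return {name: digest(content) for name, content in files.items()}


def changed_files(files: dict, manifest: dict) -> list:
    """Return the names in the manifest that are now missing or altered."""
    return sorted(
        name
        for name, expected in manifest.items()
        if name not in files or digest(files[name]) != expected
    )


# Hypothetical release consisting of two small program files.
release = {"payroll.py": b"print('pay')\n", "report.py": b"print('report')\n"}
manifest = record_release(release)

# A later copy in which one file has been modified.
tampered = {**release, "payroll.py": b"print('pay more')\n"}
```

Here `changed_files(release, manifest)` returns an empty list, and `changed_files(tampered, manifest)` returns `["payroll.py"]`. The manifest itself must be stored where an intruder cannot rewrite it, or the comparison proves nothing.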

Software Modification

Software is vulnerable to

modifications that either cause it to fail or cause it to perform an unintended

task. Indeed, because software is so susceptible to "off by one"

errors, it is quite easy to modify. Changing a bit or two can convert a working

program into a failing one. Depending on which bit was changed, the program may

crash when it begins or it may execute for some time before it falters.

With a little more work, the

change can be much more subtle: The program works well most of the time but

fails in specialized circumstances. For instance, the program may be

maliciously modified to fail when certain conditions are met or when a certain

date or time is reached. Because of this delayed effect, such a program is

known as a logic bomb. For example,

a disgruntled employee may modify a crucial program so that it accesses the

system date and halts abruptly after July 1. The employee might quit on May 1

and plan to be at a new job miles away by July.

Another type of change can

extend the functioning of a program so that an innocuous program has a hidden

side effect. For example, a program that ostensibly structures a listing of

files belonging to a user may also modify the protection of all those files to

permit access by another user.

Other categories of software

modification include

• Trojan horse: a program that overtly does one thing while covertly doing another

• virus: a specific type of Trojan horse that can be used to spread its "infection" from one computer to another

• trapdoor: a program that has a secret entry point

• information leaks in a program: code that makes information accessible to unauthorized people or programs

More details on these and

other software modifications are provided in Chapter

3.

Of course, it is possible to

invent a completely new program and install it on a computing system.

Inadequate control over the programs that are installed and run on a computing

system permits this kind of software security breach.

Software Theft

This attack includes

unauthorized copying of software. Software authors and distributors are

entitled to fair compensation for use of their product, as are musicians and

book authors. Unauthorized copying of software has not been stopped

satisfactorily. As we see in Chapter 11,

the legal system is still grappling with the difficulties of interpreting

paper-based copyright laws for electronic media.

Data Vulnerabilities

Hardware security is usually

the concern of a relatively small staff of computing center professionals.

Software security is a larger problem, extending to all programmers and

analysts who create or modify programs. Computer programs are written in a

dialect intelligible primarily to computer professionals, so a "leaked"

source listing of a program might very well be meaningless to the general

public.

Printed data, however, can be

readily interpreted by the general public. Because of its visible nature, a

data attack is a more widespread and serious problem than either a hardware or

software attack. Thus, data items have greater public value than hardware and

software because more people know how to use or interpret data.

By themselves, out of

context, pieces of data have essentially no intrinsic value. For example, if

you are shown the value "42," it has no meaning for you unless you

know what the number represents. Likewise, "326 Old Norwalk Road" is

of little use unless you know the city, state, and country for the address. For

this reason, it is hard to measure the value of a given data item.

On the other hand, data items

in context do relate to cost, perhaps measurable by the cost to reconstruct or

redevelop damaged or lost data. For example, confidential data leaked to a

competitor may narrow a competitive edge. Data incorrectly modified can cost

human lives. To see how, consider the flight coordinate data used by an

airplane that is guided partly or fully by software, as many now are. Finally,

inadequate security may lead to financial liability if certain personal data

are made public. Thus, data have a definite value, even though that value is

often difficult to measure.

Typically, both hardware and

software have a relatively long life. No matter how they are valued initially,

their value usually declines gradually over time. By contrast, the value of

data over time is far less predictable or consistent. Initially, data may be

valued highly. However, some data items are of interest for only a short period

of time, after which their value declines precipitously.

To see why, consider the

following example. In many countries, government analysts periodically generate

data to describe the state of the national economy. The results are scheduled

to be released to the public at a predetermined time and date. Before that

time, access to the data could allow someone to profit from advance knowledge

of the probable effect of the data on the stock market. For instance, suppose

an analyst develops the data 24 hours before their release and then wishes to

communicate the results to other analysts for independent verification before

release. The data vulnerability here is clear, and, to the right people, the

data are worth more before the scheduled release than afterward. However, we

can protect the data and control the threat in simple ways. For example, we

could devise a scheme that would take an outsider more than 24 hours to break;

even though the scheme may be eminently breakable (that is, an intruder could

eventually reveal the data), it is adequate for those data because

confidentiality is not needed beyond the 24-hour period.

Data security suggests the

second principle of computer security.

Principle of Adequate Protection: Computer items must be

protected only until they lose their value. They must be protected to a degree consistent with their value.

This principle says that

things with a short life can be protected by security measures that are

effective only for that short time. The notion of a small protection window

applies primarily to data, but it can in some cases be relevant for software

and hardware, too.
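As a rough worked example of a protection window, suppose the scheme is a secret key that an outsider can attack only by trying keys one after another. The sketch below finds the smallest key length whose key space cannot be fully searched within the window. The guessing rate of one billion keys per second is an assumption chosen for illustration, not a figure from the text, and the function name is hypothetical.

```python
import math


def min_key_bits(guesses_per_second: float, protect_seconds: float) -> int:
    """Smallest key length in bits whose key space is larger than the number
    of guesses an attacker can make during the protection window."""
    guesses_in_window = guesses_per_second * protect_seconds
    return math.floor(math.log2(guesses_in_window)) + 1


DAY = 24 * 60 * 60  # the 24-hour window from the example, in seconds

# Assumed attacker speed: 1e9 guesses per second.
bits = min_key_bits(1e9, DAY)  # 47
```

Under these assumptions a 47-bit key outlasts the window, since 2^47 exceeds the 8.64 x 10^13 guesses possible in a day. An attacker may still find the key by luck before searching the whole space, so a real design would add a margin, and the same key would be far too weak for data that must stay secret for years.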

Sidebar 1-4 confirms that

intruders take advantage of vulnerabilities to break in by whatever means they

can.

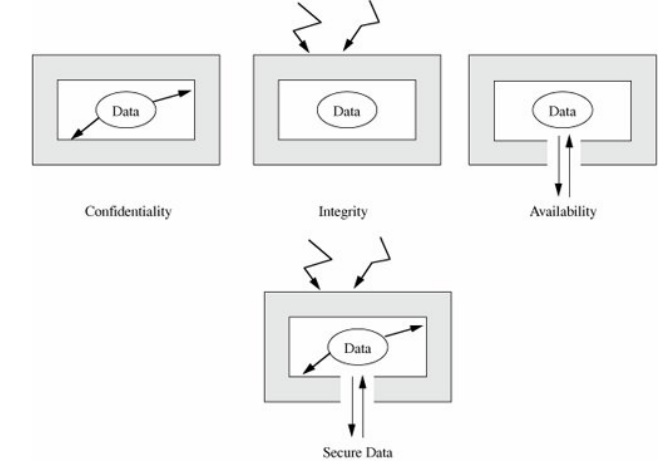

Figure 1-5 illustrates how

the three goals of security apply to data. In particular, confidentiality

prevents unauthorized disclosure of a data item, integrity prevents

unauthorized modification, and availability prevents denial of authorized

access.

Figure 1-5. Security of Data.

Data Confidentiality

Data can be gathered by many

means, such as tapping wires, planting bugs in output devices, sifting through

trash receptacles, monitoring electromagnetic radiation, bribing key employees,

inferring one data point from other values, or simply requesting the data.

Because data are often available in a form people can read, the confidentiality

of data is a major concern in computer security.

Data are not just numbers on

paper; computer data include digital recordings such as CDs and DVDs, digital

signals such as network and telephone traffic, and broadband communications

such as cable and satellite TV. Other forms of data are biometric identifiers

embedded in passports, online activity preferences, and personal information

such as financial records and votes. Protecting this range of data types

requires many different approaches.

Data Integrity

Stealing, buying, finding, or

hearing data requires no computer sophistication, whereas modifying or

fabricating new data requires some understanding of the technology by which the

data are transmitted or stored, as well as the format in which the data are

maintained. Thus, a higher level of sophistication is needed to modify existing

data or to fabricate new data than to intercept existing data. The most common

sources of this kind of problem are malicious programs, errant file system

utilities, and flawed communication facilities.

Data are especially

vulnerable to modification. Small and skillfully done modifications may not be

detected in ordinary ways. For instance, we saw in our truncated interest

example that a criminal can perform what is known as a salami attack: The crook shaves a little from many accounts and

puts these shavings together to form a valuable result, like the meat scraps

joined in a salami.

A more complicated process is

trying to reprocess used data items. With the proliferation of

telecommunications among banks, a fabricator might intercept a message ordering

one bank to credit a given amount to a certain person's account. The fabricator

might try to replay that message,

causing the receiving bank to credit the same account again. The fabricator

might also try to modify the message slightly, changing the account to be

credited or the amount, and then transmit this revised message.
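The text describes the attack but not a defense. One widely used countermeasure, assumed here and not taken from the source, is to attach a one-time value (a nonce) and a keyed message authentication code to each order, so the receiver can reject both repeated and altered messages. The key, the message format, and the `Receiver` class are hypothetical.

```python
import hashlib
import hmac
import secrets

KEY = secrets.token_bytes(32)  # hypothetical secret shared by the two banks


def sign(order: str, nonce: str, key: bytes = KEY) -> str:
    """Compute an HMAC-SHA256 tag over the nonce and the order text."""
    return hmac.new(key, f"{nonce}|{order}".encode(), hashlib.sha256).hexdigest()


class Receiver:
    """Receiving bank: accepts an order once, and only if its tag verifies."""

    def __init__(self, key: bytes = KEY):
        self.key = key
        self.seen = set()  # nonces of orders already processed

    def accept(self, order: str, nonce: str, tag: str) -> bool:
        expected = sign(order, nonce, self.key)
        if not hmac.compare_digest(expected, tag):
            return False  # order or nonce was modified in transit
        if nonce in self.seen:
            return False  # same message presented again: a replay
        self.seen.add(nonce)
        return True


bank = Receiver()
nonce = secrets.token_hex(16)
order = "credit 100.00 to account 12345"
tag = sign(order, nonce)
```

The first call to `bank.accept(order, nonce, tag)` returns `True`. Sending the identical message again returns `False`, as does sending a message whose amount or account has been changed without recomputing the tag, which the fabricator cannot do without the key.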

Other Exposed Assets

We have noted that the major

points of weakness in a computing system are hardware, software, and data.

However, other components of the system may also be possible targets. In this

section, we identify some of these other points of attack.

Networks

Networks are specialized

collections of hardware, software, and data. Each network node is itself a

computing system; as such, it experiences all the normal security problems. In

addition, a network must confront communication problems that involve the

interaction of system components and outside resources. The problems may be

introduced by a very exposed storage medium or access from distant and

potentially untrustworthy computing systems.

Thus, networks can easily

multiply the problems of computer security. The challenges are rooted in a

network's lack of physical proximity, use of insecure shared media, and the

inability of a network to identify remote users positively.

Access

Access to computing equipment

leads to three types of vulnerabilities. In the first, an intruder may steal

computer time to do general-purpose computing that does not attack the

integrity of the system itself. This theft of computer services is analogous to

the stealing of electricity, gas, or water. However, the value of the stolen

computing services may be substantially higher than the value of the stolen

utility products or services. Moreover, the unpaid computing access spreads the

true costs of maintaining the computing system to other legitimate users. In

fact, the unauthorized access risks affecting legitimate computing, perhaps by

changing data or programs. A second vulnerability involves malicious access to

a computing system, whereby an intruding person or system actually destroys

software or data. Finally, unauthorized access may deny service to a legitimate

user. For example, a user who has a time-critical task to perform may depend on

the availability of the computing system. For all three of these reasons,

unauthorized access to a computing system must be prevented.

Key People

People can be crucial weak

points in security. If only one person knows how to use or maintain a

particular program, trouble can arise if that person is ill, suffers an

accident, or leaves the organization (taking her knowledge with her). In

particular, a disgruntled employee can cause serious damage by using inside

knowledge of the system and the data that are manipulated. For this reason,

trusted individuals, such as operators and systems programmers, are usually

selected carefully because of their potential ability to affect all computer

users.

We have described common

assets at risk. In fact, there are valuable assets in almost any computer

system. (See Sidebar 1-5 for an example

of exposed assets in ordinary business dealings.)

Next, we turn to the people

who design, build, and interact with computer systems, to see who can breach

the systems' confidentiality, integrity, and availability.
