Chapter: Security in Computing : Is There a Security Problem in Computing?

Attacks

When you test any computer

system, one of your jobs is to imagine how the system could malfunction. Then,

you improve the system's design so that the system can withstand any of the

problems you have identified. In the same way, we analyze a system from a

security perspective, thinking about ways in which the system's security can

malfunction and diminish the value of its assets.

Vulnerabilities, Threats, Attacks, and Controls

A computer-based system has

three separate but valuable components: hardware,

software, and data. Each of these assets offers value to different members of the

community affected by the system. To analyze security, we can brainstorm about

the ways in which the system or its information can experience some kind of

loss or harm. For example, we can identify data whose format or contents should

be protected in some way. We want our security system to make sure that no data

are disclosed to unauthorized parties. Neither do we want the data to be

modified in illegitimate ways. At the same time, we must ensure that legitimate

users have access to the data. In this way, we can identify weaknesses in the

system.

A vulnerability is a weakness in the security system, for example, in

procedures, design, or implementation, that might be exploited to cause loss or

harm. For instance, a particular system may be vulnerable to unauthorized data

manipulation because the system does not verify a user's identity before

allowing data access.
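
The weakness just described can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the names `RECORDS`, `SESSIONS`, `update_record_unchecked`, and `update_record_checked` are hypothetical and do not come from any real system. The first function changes data for any caller; the second refuses unless the caller presents a session token that maps to the user who owns the record.

```python
# Hypothetical in-memory data store and session table (illustrative only).
RECORDS = {"alice": {"balance": 100}, "bob": {"balance": 50}}
SESSIONS = {"token-123": "alice"}  # session token -> authenticated user


def update_record_unchecked(owner, field, value):
    """Vulnerable: modifies data without verifying who is asking."""
    RECORDS[owner][field] = value


def update_record_checked(token, owner, field, value):
    """Verifies the caller's identity before allowing data access."""
    user = SESSIONS.get(token)
    if user is None:
        raise PermissionError("unknown or missing session token")
    if user != owner:
        raise PermissionError("user may not modify another user's record")
    RECORDS[owner][field] = value
```

With the unchecked version, anyone who can call the function can rewrite any record. The checked version is one possible control for that weakness, not the only one.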

A threat to a computing system is a set of circumstances that has the

potential to cause loss or harm. To see the difference between a threat and a

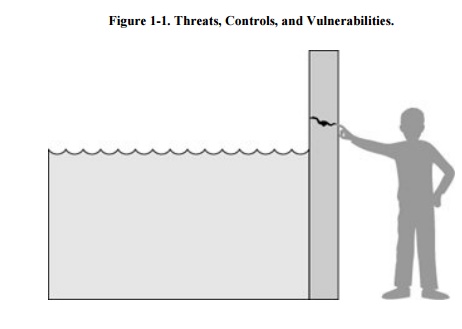

vulnerability, consider the illustration in Figure

1-1. Here, a wall is holding water back. The water to the left of

the wall is a threat to the man on the right of the wall: The water could rise,

overflowing onto the man, or it could stay beneath the height of the wall

yet still cause the wall to collapse. So the threat of harm is the potential for the

man to get wet, get hurt, or be drowned. For now, the wall is intact, so the

threat to the man is unrealized.

However, we can see a small

crack in the wall, a vulnerability that threatens the man's security. If the

water rises to or beyond the level of the crack, it will exploit the

vulnerability and harm the man.

There are many threats to a

computer system, including human-initiated and computer-initiated ones. We

have all experienced the results of inadvertent human errors, hardware design

flaws, and software failures. But natural disasters are threats, too; they can

bring a system down when the computer room is flooded or the data center

collapses from an earthquake, for example.

A human who exploits a

vulnerability perpetrates an attack

on the system. An attack can also be launched by another system, as when one

system sends an overwhelming set of messages to another, virtually shutting

down the second system's ability to function. Unfortunately, we have seen this

type of attack frequently, as denial-of-service attacks flood servers with more

messages than they can handle. (We take a closer look at denial of service in Chapter 7.)

How do we address these

problems? We use a control as a

protective measure. That is, a control is an action, device, procedure, or

technique that removes or reduces a vulnerability. In Figure 1-1, the man is placing his finger in the

hole, controlling the threat of water leaks until he finds a more permanent

solution to the problem. In general, we can describe the relationship among

threats, controls, and vulnerabilities in this way:

A threat is blocked by

control of a vulnerability.

Much of the rest of this book

is devoted to describing a variety of controls and understanding the degree to

which they enhance a system's security.

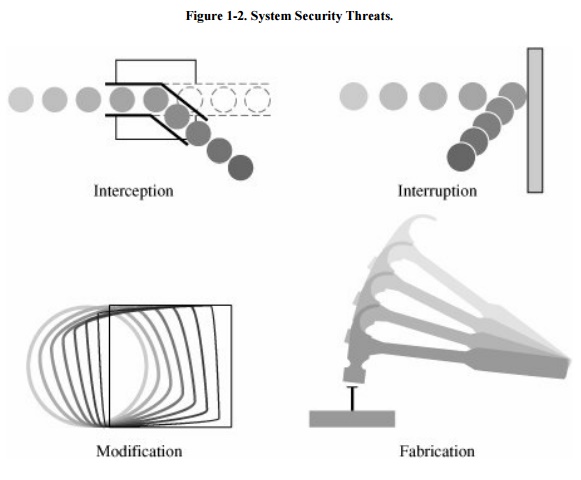

To devise controls, we must

know as much about threats as possible. We can view any threat as being one of

four kinds: interception, interruption, modification, and fabrication. Each

threat exploits vulnerabilities of the assets in computing systems; the threats

are illustrated in Figure 1-2.

An interception

means that some unauthorized party has gained access to an asset. The outside

party can be a person, a program, or a computing system. Examples of this type

of failure are illicit copying of program or data files, or wiretapping to

obtain data in a network. Although a loss may be discovered fairly quickly, a

silent interceptor may leave no traces by which the interception can be readily

detected.

In an interruption,

an asset of the system becomes lost, unavailable, or unusable. Examples are

malicious destruction of a hardware device, erasure of a program or data file,

or malfunction of an operating system file manager so that it cannot find a

particular disk file.

If an unauthorized party not only accesses but

tampers with an asset, the threat is a modification.

For example, someone might change the values in a database, alter a program so

that it performs an additional computation, or modify data being transmitted

electronically. It is even possible to modify hardware. Some cases of

modification can be detected with simple measures, but other, more subtle,

changes may be almost impossible to detect.
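
One example of such a simple measure is comparing a cryptographic digest of the data against a digest recorded earlier. The sketch below uses Python's standard `hashlib` module. The function names `digest` and `is_unmodified` and the sample data are hypothetical, chosen only for illustration. The check helps only if the recorded digest is itself stored where an attacker cannot change it.

```python
import hashlib


def digest(data: bytes) -> str:
    """Return the SHA-256 digest of data as a hexadecimal string."""
    return hashlib.sha256(data).hexdigest()


def is_unmodified(data: bytes, expected_digest: str) -> bool:
    """Report whether data still matches the digest recorded earlier."""
    return digest(data) == expected_digest


# Hypothetical sample data: record the digest while the data is known good.
original = b"account=42;balance=100"
recorded = digest(original)

# Later, a tampered copy no longer matches the recorded digest.
tampered = b"account=42;balance=900"
```

Here `is_unmodified(original, recorded)` holds while `is_unmodified(tampered, recorded)` does not. An attacker able to replace both the data and the recorded digest would defeat the check, which is one reason subtler modifications can go unnoticed.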

Finally, an unauthorized

party might create a fabrication of

counterfeit objects on a computing system. The intruder may insert spurious

transactions into a network communication system or add records to an existing

database. Sometimes these additions can be detected as forgeries, but if skillfully done,

they are virtually indistinguishable from the real thing.
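
One common way to make forged records detectable is to attach a keyed message authentication code to each genuine record, so that a party without the key cannot produce a valid tag. The sketch below uses Python's standard `hmac` and `hashlib` modules. The key, the record format, and the function names `sign` and `is_authentic` are assumptions made for illustration; the approach presumes the key is kept secret from the intruder.

```python
import hashlib
import hmac

# Hypothetical shared secret; a real system would generate and protect its key.
SECRET_KEY = b"example-key-not-for-real-use"


def sign(record: bytes, key: bytes = SECRET_KEY) -> str:
    """Compute an HMAC-SHA256 tag for a record."""
    return hmac.new(key, record, hashlib.sha256).hexdigest()


def is_authentic(record: bytes, tag: str, key: bytes = SECRET_KEY) -> bool:
    """Check a record's tag; compare_digest avoids timing side channels."""
    return hmac.compare_digest(sign(record, key), tag)
```

A record fabricated by someone who does not hold `SECRET_KEY` fails `is_authentic`. If the key leaks, fabricated records again become indistinguishable from real ones.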

These four classes of

threats (interception, interruption, modification, and fabrication) describe the

kinds of problems we might encounter. In the next section, we look more closely

at a system's vulnerabilities and how we can use them to set security goals.

Method, Opportunity, and Motive

A malicious attacker must

have three things:

method: the skills, knowledge, tools, and other things needed to carry out the attack

opportunity: the time and access to accomplish the attack

motive: a reason to want to perform this attack against this system

(Think of the acronym

"MOM.") Deny any of those three things and the attack will not occur.

However, it is not easy to cut these off.

Knowledge of systems is

widely available. Mass-market systems (such as the Microsoft or Apple or Unix

operating systems) are readily available, as are common products, such as word

processors or database management systems. Sometimes the manufacturers release

detailed specifications on how the system was designed or operates, as guides

for users and integrators who want to implement other complementary products.

But even without documentation, attackers can purchase and experiment with many

systems. Often, only time and inclination limit an attacker.

Many systems are readily

available. Systems available to the public are, by definition, accessible;

often their owners take special care to make them fully available so that if

one hardware component fails, the owner has spares instantly ready to be

pressed into service.

Finally, it is difficult to

determine motive for an attack. Some places are what are called

"attractive targets," meaning they are very appealing to attackers.

Popular targets include law enforcement and defense department computers,

perhaps because they are presumed to be well protected against attack (so that

a successful attack shows the attacker's prowess). Other systems are attacked

because they are easy. (See Sidebar 1-2

on universities as targets.) And other systems are attacked simply because they

are there: random, unassuming victims.

Protecting against attacks

can be difficult. Anyone can be a victim of an attack perpetrated by an

unhurried, knowledgeable attacker. In the remainder of this book we discuss the

nature of attacks and how to protect against them.
