Chapter: An Introduction to Parallel Programming : Parallel Hardware and Parallel Software

Some Background: von Neumann architecture, Processes, multitasking, and threads

SOME BACKGROUND

Parallel hardware and

software have grown out of conventional serial hardware and software:

hardware and software that runs (more or less) a single job at a time. So in

order to better understand the current state of parallel systems, let’s begin

with a brief look at a few aspects of serial systems.

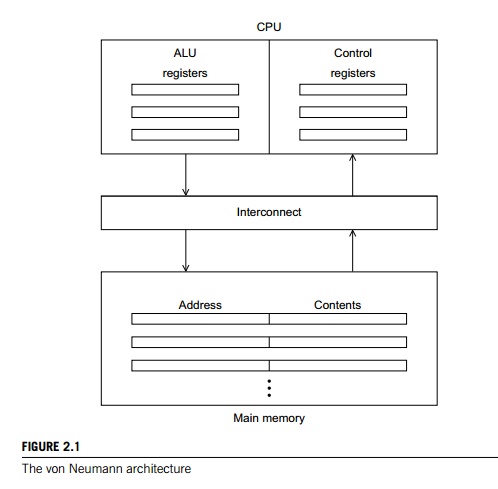

1. The von Neumann architecture

The “classical” von Neumann architecture consists of main memory, a central-processing unit (CPU) or processor or core, and an interconnection between the memory and the CPU. Main memory consists of a collection of

locations, each of which is capable of storing both instructions and data.

Every location consists of an address, which is used to access the location and

the contents of the location—the instructions or data stored in the location.

The central processing unit is divided into a

control unit and an arithmetic and logic unit (ALU). The control unit is

responsible for deciding which instructions in a program should be executed,

and the ALU is responsible for executing the actual instructions. Data in the

CPU and information about the state of an executing program are stored in

special, very fast storage called registers. The control unit has a special register called the program counter. It stores the address of the next instruction

to be executed.

Instructions and data are transferred between

the CPU and memory via the inter-connect. This has traditionally been a bus, which consists of a collection of parallel

wires and some hardware controlling access to the wires. A von Neumann machine

executes a single instruction at a time, and each instruction operates on only

a few pieces of data. See Figure 2.1.

When data or instructions are transferred from

memory to the CPU, we some-times say the data or instructions are fetched or read from memory. When data are transferred from the CPU to memory, we

sometimes say the data are written to mem-ory or stored. The separation of memory and CPU is often called the von Neumann

bottleneck, since the interconnect determines the rate at

which instructions and data can be accessed. The potentially vast quantity of data and instructions needed to run

a program is effectively isolated from the CPU. In 2010 CPUs are capable of

executing instructions more than one hundred times faster than they can fetch

items from main memory.

In order to better understand

this problem, imagine that a large company has a single factory (the CPU) in

one town and a single warehouse (main memory) in another. Further imagine that

there is a single two-lane road joining the warehouse and the factory. All the

raw materials used in manufacturing the products are stored in the warehouse.

Also, all the finished products are stored in the warehouse before being

shipped to customers. If the rate at which products can be manufactured is much

larger than the rate at which raw materials and finished products can be

transported, then it’s likely that there will be a huge traffic jam on the

road, and the employees and machinery in the factory will either be idle for

extended periods or they will have to reduce the rate at which they produce

finished products.

In order to address the von

Neumann bottleneck, and, more generally, improve CPU performance, computer

engineers and computer scientists have experimented with many modifications to

the basic von Neumann architecture. Before discussing some of these

modifications, let’s first take a moment to discuss some aspects of the

software that are used in both von Neumann systems and more modern systems.

2. Processes, multitasking, and threads

Recall that the operating system, or OS, is a major piece of software whose

purpose is to manage hardware and software resources on a computer. It

determines which programs can run and when they can run. It also controls the

allocation of memory to running programs and access to peripheral devices such

as hard disks and network interface cards.

When a user runs a program, the operating

system creates a process—an instance of a computer program that is being executed. A process

consists of several entities:

.. The executable machine language

program.

A block of memory, which will include the

executable code, a call stack that keeps

track of active functions, a heap, and some other memory locations.

Descriptors of resources that the operating

system has allocated to the process—

for example, file descriptors.

Security information—for example, information

specifying which hardware and software resources the process can access.

Information about the state of the process,

such as whether the process is ready to run or is waiting on some resource, the

content of the registers, and information about the process’ memory.

Most modern operating systems are multitasking. This means that the operating system provides

support for the apparent simultaneous execution of multiple pro-grams. This is

possible even on a system with a single core, since each process runs

for a small interval of time (typically a few

milliseconds), often called a time slice. After one running program has executed for a time slice, the

operating system can run a different program. A multitasking OS may change the

running process many times a minute, even though changing the running process

can take a long time.

In a multitasking OS if a process needs to wait

for a resource—for example, it needs to read data from external storage—it will

block. This means that it will stop executing and

the operating system can run another process. However, many pro-grams can

continue to do useful work even though the part of the program that is

currently executing must wait on a resource. For example, an airline

reservation system that is blocked waiting for a seat map for one user could

provide a list of available flights to another user. Threading provides a mechanism for

programmers to divide their programs into more or less independent tasks with

the property that when one thread is blocked another thread can be run.

Furthermore, in most sys-tems it’s possible to switch between threads much faster

than it’s possible to switch between processes. This is because threads are

“lighter weight” than processes. Threads are contained within processes, so

they can use the same executable, and they usually share the same memory and

the same I/O devices. In fact, two threads belonging to one process can share

most of the process’ resources. The two most important exceptions are that

they’ll need a record of their own program counters and they’ll need their own

call stacks so that they can execute independently of each other.

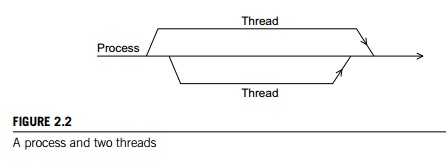

If a process is the “master”

thread of execution and threads are started and stopped by the process, then we

can envision the process and its subsidiary threads as lines: when a thread is

started, it forks off the process; when a thread terminates, it joins the process. See Figure 2.2.

Related Topics