Chapter: Medicine Study Notes : Evidence Based Medicine

Evaluation of Diagnostic Tests

Evaluation of Diagnostic Tests

Sensitivity and Specificity

· Sensitivity: proportion of people with disease who have a positive test (i.e. true positive). How good is the test at picking up people who have the condition? SnNout = when a test has a high sensitivity, a negative result rules out the diagnosis

· Specificity: the proportion of people free of a disease who have a negative test (i.e. false positive). How good is this test at correctly excluding people without the condition? SpPin = When a test is highly specific, a positive test rules in the diagnosis

· Necessary Sensitivity and Specificity depend on setting. E.g. if screening for a disease occurring 1 in 10,000 in a population of 100,000 then a test with sensitivity of 99% and specificity of 99% will find

·

9.9 true positives and 999.9

false positives. But if the disease occurs 1 in 100 then you‟ll find 9990 true

positives and 998 false positives – far better strike rate

Pre-test Probability

·

= P (D+) = probability of target

disorder before a diagnostic test result is known. Depends on patient (history

and risk factors), setting (e.g. GP, A&E, etc) and signs/symptoms

·

Is useful for:

o Deciding whether to test at all (testing threshold)

o Selecting diagnostic tests

o Interpreting tests

o Choosing whether to start treatment without further tests (treatment threshold) of while awaiting further tests

·

Based on epidemiology (e.g.

prevalence) or clinical experience

Likelihood Ratio

· Positive Likelihood Ratio = the likelihood that a positive test result would be expected in a patient with the target disorder compared to the likelihood that the same result would be expected in a patient without the target disorder

·

Negative Likelihood Ratio = same

but for negative result

·

Less likely than sensitivity and

specificity to change with the prevalence of a disorder

·

Can be calculated for several

levels of the symptom or test

·

Can be used to calculate

post-test odds if pre-test odds and LR known

·

Impact of LR:

o < 0.1 or > 10: large changes in disease likelihood (i.e. large

change to pre-test probability)

o 0.2 – 0.5 or 2 – 5: small changes in disease likelihood

o 1: no change at all

Post-test Probability

·

= Proportion of patients with a

positive test result who have the target disorder

·

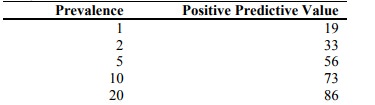

Positive Predictive Value (+PV):

proportion of people with a positive test who have disease. If the person tests

positive, what is the probability that s/he has the disease? Determined by

sensitivity and specificity, AND by the prevalence of the condition

·

Predictive value of test depends

on sensitivity and specificity AND on prevalence. E.g., for a test with

·

99% sensitivity:

·

So significance of test may vary

between, say, hospital and GP

Formulas

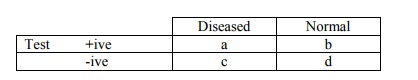

o Sensitivity = a/(a+c)

o Specificity = d/(b+d)

o LR + = sensitivity /(1-specificity)

o LR - = (1-sensitivity)/specificity

o Positive Predictive Value = a/(a+b)

o Negative Predictive Value = d/(c+d)

o Prevalence = (a+c)/(a+b+c+d)

o Pre-test odds = prevalence /(1-prevalance)

o Post-test odds = pre-test odds * LR

o Post-test probability = post-test odds/(post-test odds+1)

o Accuracy = (a+d)/(a+b+c+d) = what proportion of results have given the

correct result

Study design for researching a test

· Spectrum composition: what population was it tested on. Sensitivity and specificity may vary between populations with significant disease and the general population

· Are pertinent subgroups assessed separately? Condition for test use must be narrowly defined to avoid heterogeneity

·

Avoidance of work-up bias: if

there is bias in who is referred for the gold standard. All subjects given a

test should receive either the gold standard test or be verified by follow-up

·

Avoidance of Review Bias: is

there objectivity in interpretation of results (e.g. blinding)

· Precision: are confidence intervals quoted?

·

Should report all positive,

negative and indeterminate results and say whether indeterminate ones where

included in accuracy calculations

·

Test reproducibility: is this

tested in tests requiring interpretation

Using an article about a diagnostic test

·

Is the evidence about the

accuracy of a diagnostic test valid?

o Was there an independent, blind comparison with a reference standard?

o Did the patient sample include an appropriate spectrum of patients to whom the diagnostic test will be applied in clinical practice

o Did the results of the test being evaluated influence the decision to

perform the reference standard?

o Were the methods for performing the test described in sufficient detail to permit replication?

· Does the evidence show the test can accurately distinguish between those who do and don‟t have the disorder? Are the likelihood ratios for the test results presented or data necessary for their calculation provided?

· Can I apply this test to a specific patient?

o Will the reproducibility of the test and its interpretation be

available, affordable, accurate and precise in my setting? Is interpretation of

the test tested on people with my skill level?

o Can I generate a sensible estimate of the patient‟s pre-test

probability?

o Are the results applicable to my patient? (e.g. do they have the same

disease severity)

o Will the results change my management?

o Will the patients be better off as a result of the test? An accurate

test is very valuable if the target disorder is dangerous if undiagnosed, has

acceptable risks and effective treatment exists

Bayesian Theory

·

Combining information from

history, exam and investigations to determine overall likelihood

· Puts test results in context

· Use as part of decision analysis to determine the level at which the probability of disease is sufficiently low to withhold treatment or further tests, or sufficiently high to start treatment. In between, do further tests to raise or lower probability

·

Balance between: severity of

illness, efficiency, complications of test and treatment, and properties of the

test

Related Topics