Chapter: Satellite Communication: Satellite Access

Compression - Encryption


At the broadcast center, the high-quality digital stream of video goes through an MPEG encoder. The encoder converts the programming to MPEG-4 video of the correct size and format for the satellite receiver in your house.

Encoding works together with compression. The encoder analyzes each video frame, eliminates redundant or irrelevant data, and extrapolates information from other frames. This reduces the overall size of the file. Each frame can be encoded in one of three ways:

As an intraframe (I-frame), which contains the complete image data for that frame. This method provides the least compression.

As a predicted frame (P-frame), which contains just enough information to tell the satellite receiver how to display the frame based on the most recently displayed intraframe or predicted frame.

As a bidirectional frame (B-frame), which is reconstructed from information in the surrounding intraframes or predicted frames, both before and after it. Using data from the closest surrounding frames, the receiver interpolates the position and color of each pixel.
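The toy sketch below makes the three frame types concrete. It assumes a frame is just a flat list of pixel intensities. The helper names (`encode_p_frame`, `decode_p_frame`, `decode_b_frame`) are hypothetical. Real MPEG encoders work on motion-compensated macroblocks rather than single pixels, and real B-frames use motion vectors rather than the plain averaging shown here.

```python
from typing import Dict, List

# Toy model (assumption): a frame is a flat list of pixel intensities 0-255.
Frame = List[int]


def encode_p_frame(reference: Frame, current: Frame) -> Dict[int, int]:
    """Predicted frame: store only the pixels that differ from the reference."""
    return {i: px for i, (ref, px) in enumerate(zip(reference, current)) if ref != px}


def decode_p_frame(reference: Frame, delta: Dict[int, int]) -> Frame:
    """Rebuild the frame by applying the stored changes to the reference frame."""
    frame = list(reference)
    for i, px in delta.items():
        frame[i] = px
    return frame


def decode_b_frame(before: Frame, after: Frame) -> Frame:
    """Bidirectional frame (simplified): average the surrounding reference frames."""
    return [(a + b) // 2 for a, b in zip(before, after)]


i_frame = [10, 10, 10, 10, 200, 200, 10, 10]     # intraframe: complete image data
next_frame = [10, 10, 10, 10, 10, 200, 200, 10]  # bright object moved one pixel right

delta = encode_p_frame(i_frame, next_frame)
p_frame = decode_p_frame(i_frame, delta)
b_frame = decode_b_frame(i_frame, p_frame)

print(f"I-frame stores {len(i_frame)} values, P-frame stores {len(delta)}")
print(b_frame)
```

The P-frame stores only the two pixels that changed, and the B-frame is interpolated from its neighbours. Naive averaging blurs the moving object (the two `105` values), which hints at why interpolation can produce visible artifacts.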

This interpolation process occasionally produces artifacts, which are glitches in the video image. One artifact is macroblocking, in which the fluid picture temporarily dissolves into blocks. Macroblocking is often mistakenly called pixelating. That term is technically incorrect, but it has been accepted as slang for this annoying artifact. There really are pixels on your TV screen, but they are too small for the human eye to perceive individually. They are tiny squares of video data that make up the image you see.

The compression rate depends on the nature of the programming. If the encoder is converting a newscast, it can use many more predicted frames because most of the scene stays the same from one frame to the next.

In fast-paced programming, things change very quickly from one frame to the next, so the encoder has to create more intraframes. As a result, a newscast generally compresses to a smaller size than something like a car race.
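The effect of content on compression can be sketched with a simple decision rule: send a predicted frame when only a few pixels change, and an intraframe otherwise. This is a hypothetical illustration, not how a real MPEG encoder chooses frame types. The `CHANGE_THRESHOLD` value is an assumption, as is the cost model of one index plus one value per changed pixel.

```python
from typing import List

Frame = List[int]

# Hypothetical threshold: if more than half the pixels change, a P-frame delta
# would be nearly as large as a full frame, so an intraframe is sent instead.
CHANGE_THRESHOLD = 0.5


def encoded_size(frames: List[Frame]) -> int:
    """Count stored values under a simple I/P decision rule (toy cost model)."""
    total = len(frames[0])  # the first frame is always an intraframe
    for prev, cur in zip(frames, frames[1:]):
        changed = sum(1 for a, b in zip(prev, cur) if a != b)
        if changed / len(cur) > CHANGE_THRESHOLD:
            total += len(cur)      # intraframe: the full image
        else:
            total += 2 * changed   # predicted frame: (index, value) per change
    return total


# Assumed "newscast": a static studio where only a small region changes.
newscast = [[50] * 14 + [f % 2, f % 3] for f in range(10)]
# Assumed "car race": every pixel changes from frame to frame.
car_race = [[(f * 7 + i * 13) % 256 for i in range(16)] for f in range(10)]

print("newscast:", encoded_size(newscast))
print("car race:", encoded_size(car_race))
```

With these made-up sequences, the static newscast costs far fewer stored values than the constantly changing car race, which mirrors the behaviour described above.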

1. Encryption and Transmission:

After the video is compressed, the provider encrypts it to keep people from accessing it for free. Encryption scrambles the digital data so that it can only be decrypted (converted back into usable data) if the receiver has the correct decryption algorithm and security keys.
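A toy stream cipher built from Python's standard `hashlib` shows the idea that scrambled data is only usable with the right key. It is an illustration only. It is not secure cryptography and not the scheme any satellite provider uses; real pay-TV conditional-access systems rely on standardized scramblers, with keys typically managed through a smart card in the receiver. The key, nonce and function names below are hypothetical.

```python
import hashlib
from typing import Iterator


def keystream(key: bytes, nonce: bytes) -> Iterator[int]:
    """Yield key-dependent pseudo-random bytes (SHA-256 in counter mode; toy only)."""
    counter = 0
    while True:
        block = hashlib.sha256(key + nonce + counter.to_bytes(8, "big")).digest()
        yield from block
        counter += 1


def scramble(data: bytes, key: bytes, nonce: bytes) -> bytes:
    """XOR data with the keystream; applying it again with the same key restores it."""
    return bytes(b ^ k for b, k in zip(data, keystream(key, nonce)))


video_packet = b"compressed MPEG payload"
key = b"subscriber-key-0001"  # hypothetical key held by a paying receiver
nonce = b"packet-42"          # hypothetical per-packet value

scrambled = scramble(video_packet, key, nonce)
print(scrambled)                                         # unreadable bytes
print(scramble(scrambled, key, nonce) == video_packet)   # correct key: restored
print(scramble(scrambled, b"wrong-key", nonce) == video_packet)  # wrong key: garbage
```

The scrambling is an XOR with a key-dependent byte stream, so the same function both scrambles and descrambles. With a different key, the output stays meaningless.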

Once the signal is compressed and encrypted, the broadcast center beams it directly to one of its satellites. The satellite picks up the signal with an onboard dish, amplifies it, and uses another dish to beam the signal back to Earth, where viewers can pick it up.


2. Video and Audio Compression:

Video and audio files are very large beasts. Unless we develop and maintain very high-bandwidth networks (gigabytes per second or more), we have to compress the data.
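A quick calculation shows why uncompressed video is so large. The figures below (1920 × 1080 pixels, 24 bits per pixel, 30 frames per second) are an assumed example, not values from the text.

```python
def raw_bitrate_bps(width: int, height: int, bits_per_pixel: int, fps: float) -> float:
    """Uncompressed video bit rate in bits per second."""
    return width * height * bits_per_pixel * fps


# Assumed example: 1080p HD video, 24-bit colour, 30 frames per second.
bps = raw_bitrate_bps(1920, 1080, 24, 30)
print(f"{bps / 1e9:.2f} Gbit/s")    # 1.49 Gbit/s
print(f"{bps / 8 / 1e6:.1f} MB/s")  # 186.6 MB/s
```

That is roughly 1.5 gigabits every second for a single uncompressed HD video channel, before any audio is added.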

Relying on ever-higher bandwidth is not a good option because of the "M25 syndrome", named after London's orbital motorway. Traffic demand keeps increasing and adapts to swamp the current capacity limit, whatever that limit is.

As we will see, compression becomes part of the representation or coding scheme of popular audio, image and video formats.

We will first study basic compression algorithms and then go on to study some actual coding formats.

What is Compression?

Compression basically exploits redundancy in the data:

Temporal: correlation between successive samples in 1D data, 1D signals, audio, etc.

Spatial: correlation between neighbouring pixels or data items.

Spectral: correlation between colour or luminance components. This works in the frequency domain, exploiting relationships in how frequently the data changes.

Psycho-visual: exploit perceptual properties of the human visual system.
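Differential coding makes spatial redundancy easy to see. The sketch below assumes that an image row is a list of 8-bit intensities. In a smooth region, neighbouring pixels differ by small amounts, so the differences need far fewer bits than the raw values. The helper names are hypothetical.

```python
from typing import List


def delta_encode(row: List[int]) -> List[int]:
    """Keep the first pixel, then store each pixel as the change from its left neighbour."""
    return row[:1] + [cur - prev for prev, cur in zip(row, row[1:])]


def delta_decode(deltas: List[int]) -> List[int]:
    """Undo delta_encode by accumulating the differences."""
    row: List[int] = []
    for d in deltas:
        row.append(d if not row else row[-1] + d)
    return row


row = [100, 101, 103, 103, 104, 106, 107, 107]  # assumed smooth gradient
deltas = delta_encode(row)
print(deltas)  # [100, 1, 2, 0, 1, 2, 1, 0]
assert delta_decode(deltas) == row  # the transform itself loses nothing
```

On its own this step is reversible. The saving comes when a later entropy coder assigns short codes to the frequent small differences.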

Compression can be categorised in two broad ways:

Lossless Compression: where data is compressed and can be reconstituted (uncompressed) without loss of detail or information. These are also referred to as bit-preserving or reversible compression systems.

Lossy Compression: where the aim is to obtain the best possible fidelity for a given bit-rate, or to minimize the bit-rate needed to achieve a given fidelity measure. Video and audio compression techniques are most suited to this form of compression.
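Two tiny round trips on an assumed list of samples show the difference between the two categories. Run-length encoding, a simple lossless scheme, reconstructs the input exactly. Uniform quantization, a simple lossy step, only approximates it. Both are illustrative sketches rather than the coders used in any particular format.

```python
from itertools import groupby
from typing import List, Tuple


def rle_encode(data: List[int]) -> List[Tuple[int, int]]:
    """Lossless: store each run of equal values as (value, run_length)."""
    return [(value, len(list(run))) for value, run in groupby(data)]


def rle_decode(runs: List[Tuple[int, int]]) -> List[int]:
    """Expand each (value, run_length) pair back into the original values."""
    return [value for value, count in runs for _ in range(count)]


def quantize(data: List[int], step: int) -> List[int]:
    """Lossy: keep only the nearest quantizer index (fewer distinct values to code)."""
    return [round(x / step) for x in data]


def dequantize(indices: List[int], step: int) -> List[int]:
    """Map quantizer indices back to approximate sample values."""
    return [i * step for i in indices]


samples = [12, 12, 12, 13, 40, 41, 41, 90]  # assumed example data

runs = rle_encode(samples)
assert rle_decode(runs) == samples  # bit-exact reconstruction

approx = dequantize(quantize(samples, step=10), step=10)
print(approx)  # [10, 10, 10, 10, 40, 40, 40, 90]
print(max(abs(a - b) for a, b in zip(samples, approx)))  # 3 (never more than step / 2)
```

A larger `step` leaves fewer distinct values to code, and so lowers the bit-rate. The cost is a larger reconstruction error, which is the fidelity/bit-rate trade-off described above.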

If

an image is compressed it clearly needs to uncompressed (decoded) before it can

viewed/listened to. Some processing of data may be possible in encoded form

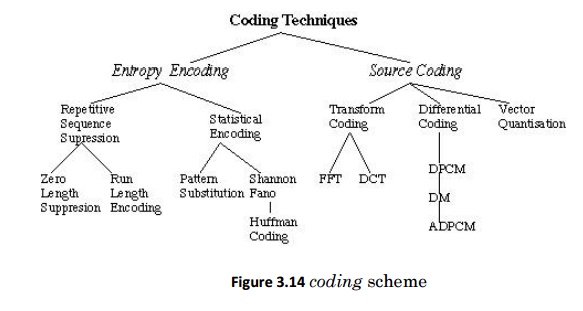

however. Lossless compression frequently involves some form of entropy encoding

and are based in information theoretic techniques.
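Huffman coding is a classic example of entropy encoding: symbols that occur often receive short codes, and rare symbols receive long ones. The sketch below builds a Huffman code with Python's standard `heapq` module. The message is an assumed example.

```python
import heapq
from collections import Counter
from typing import Dict


def huffman_code(text: str) -> Dict[str, str]:
    """Build a Huffman code: frequent symbols get shorter bit strings."""
    freq = Counter(text)
    if len(freq) == 1:  # degenerate case: only one distinct symbol
        return {next(iter(freq)): "0"}
    # Heap entries: (weight, tie_breaker, {symbol: code_so_far}).
    # The unique tie_breaker keeps heapq from ever comparing the dicts.
    heap = [(w, i, {sym: ""}) for i, (sym, w) in enumerate(freq.items())]
    heapq.heapify(heap)
    next_id = len(heap)
    while len(heap) > 1:
        w1, _, left = heapq.heappop(heap)   # two least frequent subtrees
        w2, _, right = heapq.heappop(heap)
        merged = {s: "0" + c for s, c in left.items()}
        merged.update({s: "1" + c for s, c in right.items()})
        heapq.heappush(heap, (w1 + w2, next_id, merged))
        next_id += 1
    return heap[0][2]


message = "aaaaaaaabbbccd"  # assumed example message
codes = huffman_code(message)
encoded = "".join(codes[ch] for ch in message)
print(codes)
print(len(encoded), "bits vs", 8 * len(message), "bits as 8-bit characters")
```

For this message the code uses 23 bits instead of the 112 bits needed for 8-bit characters. No code word is a prefix of another, so the bit string can be decoded unambiguously.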

Lossy compression uses source encoding techniques that may involve transform encoding, differential encoding or vector quantization.

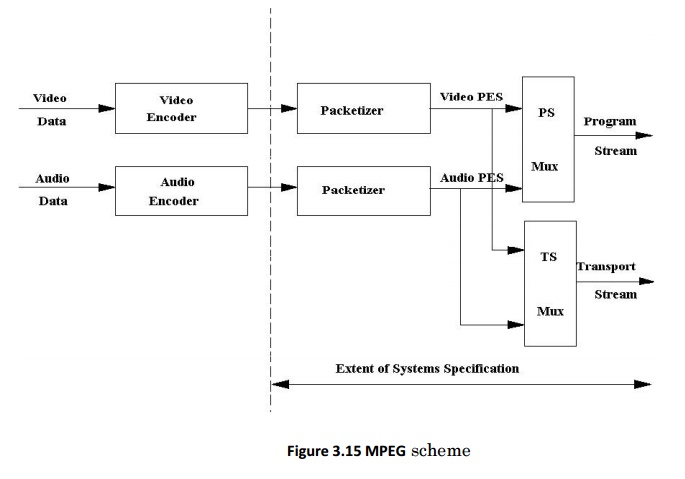
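The discrete cosine transform (DCT) is a standard illustration of transform encoding; it is the transform family used for block coding in JPEG and in MPEG-1/MPEG-2. The sketch below applies an orthonormal 1-D DCT-II to an assumed row of smooth pixel values, discards the high-frequency coefficients, and inverts the transform. Keeping exactly three coefficients is an arbitrary choice for illustration. Real codecs work on 2-D blocks and quantize coefficients rather than simply zeroing them.

```python
import math
from typing import List


def _scale(k: int, n: int) -> float:
    """Normalisation factor that makes the DCT orthonormal."""
    return math.sqrt(1 / n) if k == 0 else math.sqrt(2 / n)


def dct_ii(x: List[float]) -> List[float]:
    """Orthonormal 1-D DCT-II: express the samples as weights of cosine waves."""
    n = len(x)
    return [
        _scale(k, n) * sum(x[i] * math.cos(math.pi * (2 * i + 1) * k / (2 * n)) for i in range(n))
        for k in range(n)
    ]


def idct_ii(c: List[float]) -> List[float]:
    """Inverse of dct_ii (the orthonormal DCT-III)."""
    n = len(c)
    return [
        sum(_scale(k, n) * c[k] * math.cos(math.pi * (2 * i + 1) * k / (2 * n)) for k in range(n))
        for i in range(n)
    ]


row = [100, 101, 103, 103, 104, 106, 107, 107]  # assumed smooth row of pixels
coeffs = dct_ii(row)

# Lossy step (illustrative): keep the 3 lowest-frequency coefficients, zero the rest.
KEEP = 3
kept = coeffs[:KEEP] + [0.0] * (len(coeffs) - KEEP)
approx = idct_ii(kept)

print([round(c, 1) for c in coeffs])
print([round(v, 1) for v in approx])
```

For smooth data, almost all of the energy lands in the first few coefficients. Dropping the rest therefore changes the reconstructed pixels only slightly, by well under two intensity levels here.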

3. MPEG Standards:

All MPEG standards exist to promote system interoperability among your computer, television and handheld video and audio devices. They are:

MPEG-1: the original standard for encoding and decoding streaming video and audio files.

MPEG-2: the standard for digital television; it compresses files for transmission of high-quality video.

MPEG-4: the standard for compressing high-definition video into smaller-scale files that stream to computers, cell phones and PDAs (personal digital assistants).

MPEG-21: also referred to as the Multimedia Framework. It is the standard for determining what digital content to provide to which individual user, so that media plays flawlessly under any language, machine or user conditions.
