Chapter: Distributed Systems : Peer To Peer Services and File System

Case Study: The Andrew File System (AFS)

AFS differs markedly from NFS in its design and implementation. The differences are primarily attributable to the identification of scalability as the most important design goal. AFS is designed to perform well with larger numbers of active users than other distributed file systems. The key strategy for achieving scalability is the caching of whole files in client nodes. AFS has two unusual design characteristics:

Whole-file serving: The entire contents of directories and files are transmitted to client computers by AFS servers (in AFS-3, files larger than 64 kbytes are transferred in 64-kbyte chunks).

Whole-file caching: Once a copy of a file or a chunk has been transferred to a client computer, it is stored in a cache on the local disk. The cache contains several hundred of the files most recently used on that computer. The cache is permanent, surviving reboots of the client computer. Local copies of files are used to satisfy clients’ open requests in preference to remote copies whenever possible.
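The two characteristics above can be illustrated with a short Python sketch. It is a minimal illustration under stated assumptions and is not AFS code.

- `chunk_ranges` shows how a file larger than 64 kbytes divides into 64-kbyte transfer units.
- `DiskCache` is a hypothetical client cache that keeps copies as ordinary files in a local directory, so a fresh instance (standing in for a rebooted client) still finds them.

The `file_id` key, the flat directory layout and the absence of any eviction policy are assumptions made for brevity.

```python
import os
import tempfile

CHUNK_SIZE = 64 * 1024  # AFS-3 transfers large files in 64-kbyte chunks


def chunk_ranges(file_size, chunk_size=CHUNK_SIZE):
    """Return the (offset, length) pairs that cover a file of file_size bytes."""
    return [(off, min(chunk_size, file_size - off))
            for off in range(0, file_size, chunk_size)]


class DiskCache:
    """Hypothetical client cache that keeps copies as files on local disk.

    Because entries live in a directory rather than in process memory,
    they are still present after the client restarts. Eviction of old
    entries is omitted for brevity (assumption, not AFS behaviour).
    """

    def __init__(self, cache_dir):
        self.cache_dir = cache_dir
        os.makedirs(cache_dir, exist_ok=True)

    def _path(self, file_id):
        # Hypothetical naming scheme: one cache file per file_id.
        return os.path.join(self.cache_dir, file_id)

    def lookup(self, file_id):
        """Return the cached bytes, or None if there is no local copy."""
        path = self._path(file_id)
        if not os.path.exists(path):
            return None
        with open(path, "rb") as f:
            return f.read()

    def store(self, file_id, data):
        """Save a transferred file or chunk in the local cache."""
        with open(self._path(file_id), "wb") as f:
            f.write(data)


if __name__ == "__main__":
    print(chunk_ranges(200_000))
    # [(0, 65536), (65536, 65536), (131072, 65536), (196608, 3392)]
    cache_dir = tempfile.mkdtemp()
    DiskCache(cache_dir).store("file42", b"contents")
    # A fresh instance, standing in for a rebooted client, still finds the copy.
    print(DiskCache(cache_dir).lookup("file42"))
```

Keeping the cache on disk rather than in memory is what lets copies survive a reboot. The same idea underlies the statement that the AFS cache is permanent.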

Like NFS, AFS provides transparent access to remote shared files for UNIX programs running on workstations.

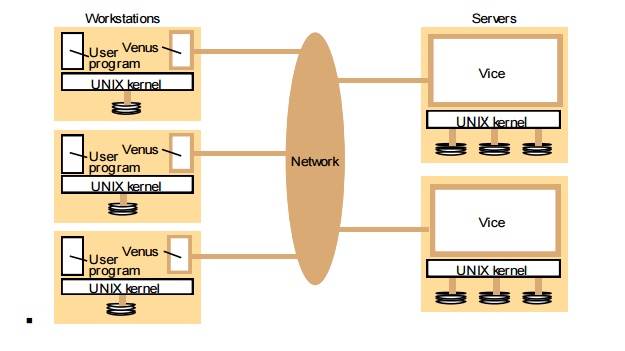

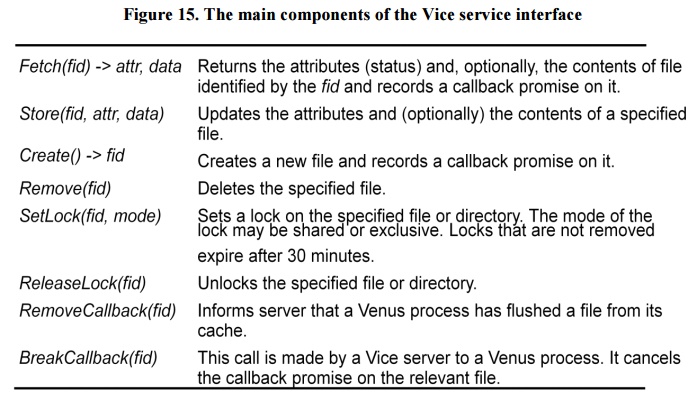

AFS is implemented as two software components that exist as UNIX processes, called Vice and Venus.

Scenario: Here is a simple scenario illustrating the operation of AFS:

When a user process in a client computer issues an open system call for a file in the shared-file space and there is not a current copy of the file in the local cache, the server holding the file is located and is sent a request for a copy of the file.

The copy is stored in the local UNIX file system in the client computer. The copy is then opened and the resulting UNIX file descriptor is returned to the client.

Subsequent read, write and other operations on the file by processes in the client computer are applied to the local copy.

When the process in the client issues a close system call, the contents of the local copy are sent back to the server if the copy has been updated. The server updates the file contents and the timestamps on the file. The copy on the client’s local disk is retained in case it is needed again by a user-level process on the same workstation.
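The scenario can be modelled as a toy simulation. `Vice` and `Venus` here are hypothetical in-memory classes named after the AFS components; they are not the real interfaces.

- `Venus.open` fetches the whole file only when no local copy exists. It writes the copy into a local directory and returns an ordinary descriptor from `os.open`.
- Reads and writes then go straight to that descriptor.
- `Venus.close` ships the whole file back only if its contents changed.

Two simplifications are assumed. Changes are detected by comparing SHA-256 digests. Any cached copy is treated as current, whereas a real client must also check that its copy is still valid.

```python
import hashlib
import os
import tempfile
import time


class Vice:
    """Hypothetical in-memory stand-in for an AFS file server (Vice)."""

    def __init__(self):
        self.files = {}       # shared path -> (contents, timestamp)
        self.fetch_count = 0  # number of whole-file transfers to clients

    def fetch(self, path):
        self.fetch_count += 1
        return self.files[path][0]

    def store(self, path, data):
        # Replace the whole file and update its timestamp.
        self.files[path] = (data, time.time())


class Venus:
    """Hypothetical client cache manager (Venus) keeping whole-file copies."""

    def __init__(self, server, cache_dir):
        self.server = server
        self.cache_dir = cache_dir
        self.cached = {}    # shared path -> path of the local copy
        self.digests = {}   # shared path -> digest of the last synced contents
        self.open_fds = {}  # open descriptor -> shared path

    def _local_path(self, path):
        name = hashlib.sha256(path.encode()).hexdigest()
        return os.path.join(self.cache_dir, name)

    def open(self, path):
        if path not in self.cached:
            # No local copy: fetch the whole file and store it on local disk.
            data = self.server.fetch(path)
            local = self._local_path(path)
            with open(local, "wb") as f:
                f.write(data)
            self.cached[path] = local
            self.digests[path] = hashlib.sha256(data).hexdigest()
        # Open the local copy and return an ordinary UNIX file descriptor.
        fd = os.open(self.cached[path], os.O_RDWR)
        self.open_fds[fd] = path
        return fd

    def close(self, fd):
        path = self.open_fds.pop(fd)
        os.close(fd)
        with open(self.cached[path], "rb") as f:
            data = f.read()
        digest = hashlib.sha256(data).hexdigest()
        if digest != self.digests[path]:
            # The local copy was updated: send its whole contents to the server.
            self.server.store(path, data)
            self.digests[path] = digest
        # The local copy stays in the cache for later opens.


if __name__ == "__main__":
    server = Vice()
    server.store("/shared/notes.txt", b"v1")  # hypothetical shared file
    venus = Venus(server, tempfile.mkdtemp())
    fd = venus.open("/shared/notes.txt")
    os.write(fd, b"v2")  # writes go to the local copy only
    venus.close(fd)
    print(server.fetch("/shared/notes.txt"))  # b'v2'
    fd = venus.open("/shared/notes.txt")  # served from the local cache
    venus.close(fd)
```

Between `open` and `close` the server is never contacted. Only the two whole-file transfers touch the network.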

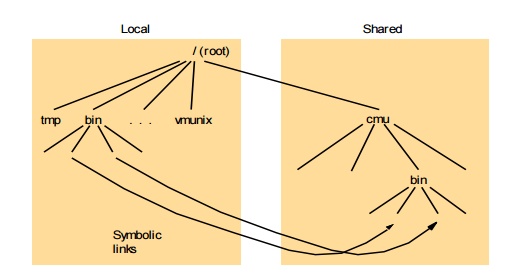

The files available to user processes running on workstations are either local or shared.

Local files are handled as normal UNIX files. They are stored on the workstation’s disk and are available only to local user processes.

Shared files are stored on servers, and copies of them are cached on the local disks of workstations.

The name space seen by user processes is illustrated in Figure 12.

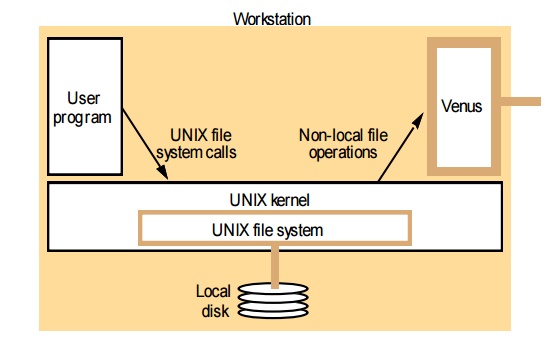

The UNIX kernel in each workstation and server is a modified version of BSD UNIX. The modifications are designed to intercept open, close and some other file system calls when they refer to files in the shared name space, and to pass them to the Venus process in the client computer (Figure 13).
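The interception rule can be illustrated with a small dispatcher. This is a hedged sketch, not BSD kernel code, and rests on these assumptions:

- `SHARED_ROOT = "/shared"` is a hypothetical mount point for the shared name space.
- `venus_open` and `venus_close` are caller-supplied callbacks standing in for the hand-off to the Venus process.

Only `open` and `close` are routed. Reads and writes on the returned descriptor are not intercepted, which matches the scenario in which they act directly on the local copy.

```python
import os
import posixpath
import tempfile

SHARED_ROOT = "/shared"  # hypothetical mount point of the shared name space


def is_shared(path):
    """True if an absolute path lies inside the shared name space."""
    p = posixpath.normpath(path)
    return p == SHARED_ROOT or p.startswith(SHARED_ROOT + "/")


class Kernel:
    """Toy model of the modified kernel's open/close interception."""

    def __init__(self, venus_open, venus_close):
        self.venus_open = venus_open    # hand-off of shared opens to Venus
        self.venus_close = venus_close  # hand-off of shared closes to Venus
        self.venus_fds = set()          # descriptors obtained through Venus

    def open(self, path, flags=os.O_RDONLY):
        if is_shared(path):
            fd = self.venus_open(path)
            self.venus_fds.add(fd)
            return fd
        return os.open(path, flags)  # local file: normal UNIX handling

    def close(self, fd):
        if fd in self.venus_fds:
            self.venus_fds.discard(fd)
            self.venus_close(fd)
        else:
            os.close(fd)


if __name__ == "__main__":
    log = []

    def fake_venus_open(path):
        log.append(("venus open", path))
        return os.open(os.devnull, os.O_RDONLY)  # stand-in for the cached copy

    def fake_venus_close(fd):
        log.append(("venus close", fd))
        os.close(fd)

    kernel = Kernel(fake_venus_open, fake_venus_close)
    kernel.close(kernel.open("/shared/report.txt"))  # routed to Venus
    handle, local_path = tempfile.mkstemp()
    os.close(handle)
    kernel.close(kernel.open(local_path))  # handled locally, not logged
    print(log)
```

Normalising the path before the prefix test keeps names such as `/shared/../etc/passwd` and `/sharedstuff` out of the shared name space.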

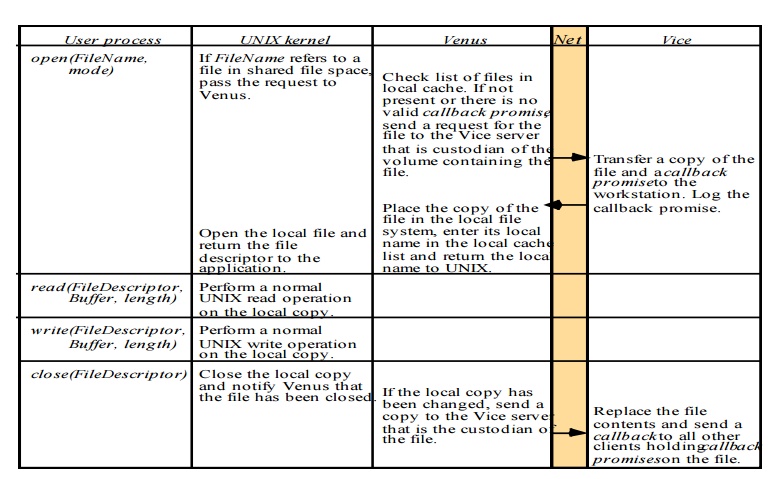

Figure 14 describes the actions taken by Vice, Venus and the UNIX kernel when a user process issues system calls.

Other aspects

AFS introduces several other interesting design developments and refinements that we outline here, together with a summary of performance evaluation results:

UNIX kernel modifications

Location database

Threads

Read-only replicas

Bulk transfers

Partial file caching

Performance

Wide-area support
