Chapter: Software Testing: Test Management

Test plan components

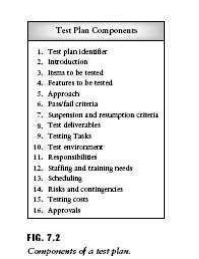

This section of the text discusses the basic test plan components as described in IEEE Std 829-1983 [5]. They are shown in Figure 7.2. These components should appear in the master test plan and in each of the level-based test plans (unit, integration, etc.) with the appropriate amount of detail. The reader should note that some items in a test plan may appear in other related documents, for example, the project plan. References to such documents should be included in the test plan, or a copy of the appropriate section of the document should be attached to the test plan.

1. Test Plan Identifier

Each test plan should have a unique identifier so that it can be associated with a specific project and become part of the project history. The project history and all project-related items should be stored in a project database or placed under the control of a configuration management system. Organizational standards should describe the format for the test plan identifier and how to specify versions, since the test plan, like all other software items, is not written in stone and is subject to change. A configuration management system is a tool that supports change management; it is essential for any software project because it allows for orderly change control. If a configuration management system is used, the test plan identifier can serve to identify the test plan as a configuration item.
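As a sketch of how an identifier might encode the project, the test level, and a version, the Python fragment below assumes a hypothetical format `<PROJECT>-<LEVEL>-TP-v<major>.<minor>`. The class name, field names, and format are illustrative only; the actual format is whatever the organization's standards define.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class PlanIdentifier:
    """Hypothetical test plan identifier: project, test level, and version."""
    project: str   # project code, e.g. as recorded in the project database
    level: str     # "MASTER", "UNIT", "INTEGRATION", "SYSTEM", or "ACCEPTANCE"
    major: int = 1
    minor: int = 0

    def __str__(self) -> str:
        # Illustrative format only; an organization would define its own.
        return f"{self.project}-{self.level}-TP-v{self.major}.{self.minor}"

    def revise(self) -> "PlanIdentifier":
        # A minor change produces a new version of the same configuration item.
        return replace(self, minor=self.minor + 1)

    def reissue(self) -> "PlanIdentifier":
        # A major rewrite starts a new major version.
        return replace(self, major=self.major + 1, minor=0)


plan_id = PlanIdentifier("PAYROLL", "MASTER")
print(plan_id)                     # PAYROLL-MASTER-TP-v1.0
print(plan_id.revise())            # PAYROLL-MASTER-TP-v1.1
print(plan_id.revise().reissue())  # PAYROLL-MASTER-TP-v2.0
```

Because the identifier is immutable, each change yields a new value rather than editing the old one, which mirrors how a configuration management system keeps every version of a configuration item distinct.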

2. Introduction

In this section the test planner gives an overall description of the project, the software system being developed or maintained, and the software items and/or features to be tested. It is useful to include a high-level description of the testing goals and the testing approaches to be used. References to related or supporting documents should also be included in this section, for example, organizational policies and standards documents, the project plan, the quality assurance plan, and the software configuration plan. If test plans are developed as multilevel documents, that is, separate documents for unit, integration, system, and acceptance test, then each plan must reference the next higher level plan for reasons of consistency and compatibility.

3. Items to Be Tested

This is a listing of the entities to be tested and should include names, identifiers, and version/revision numbers for each entity. The items listed could include procedures, classes, modules, libraries, subsystems, and systems. This component of the test plan should include references to the documents where these items and their behaviors are described, such as the requirements and design documents and the user manual. These references support the tester with traceability tasks, whose focus is to ensure that each requirement has been covered with an appropriate number of test cases. Where appropriate, this component should also refer to the transmittal media where the items are stored, for example, disk, CD, or tape. The test planner should also list any items that will be excluded from the test effort.
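The traceability task described above can be sketched in a few lines of Python. The requirement and test case IDs, the `coverage_report` function, and its `minimum` parameter are hypothetical; the text only asks that each requirement be covered by an "appropriate number" of test cases, so the threshold is left to the planner.

```python
from collections import defaultdict


def coverage_report(requirements, test_cases, minimum=1):
    """Return requirements covered by fewer than `minimum` test cases.

    requirements: iterable of requirement IDs (e.g. from the requirements document)
    test_cases:   mapping of test case ID -> set of requirement IDs it exercises
    """
    covered_by = defaultdict(set)
    for case_id, req_ids in test_cases.items():
        for req in req_ids:
            covered_by[req].add(case_id)
    # Keep only under-covered requirements, with the test cases that do cover them.
    return {req: sorted(covered_by[req]) for req in requirements
            if len(covered_by[req]) < minimum}


requirements = ["R1", "R2", "R3"]
test_cases = {"TC-01": {"R1"}, "TC-02": {"R1", "R2"}}
print(coverage_report(requirements, test_cases))             # {'R3': []}
print(coverage_report(requirements, test_cases, minimum=2))  # {'R2': ['TC-02'], 'R3': []}
```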

4. Features to Be Tested

In this component of the test plan the tester gives another view of the entities to be tested by describing them in terms of the features they encompass. Chapter 3 gives this definition for a feature:

Features may be described as distinguishing characteristics of a software component or system.

Features are closely related to the way we describe software in terms of its functional and quality requirements. Example features relate to performance, reliability, portability, and functionality requirements for the software being tested. Features that will not be tested should be identified, and the reasons for their exclusion from test should be given.

5. Approach

This section of the test plan provides broad coverage of the issues to be addressed when testing the target software. Testing activities are described at a level of detail sufficient to identify the major testing tasks and their durations. More details will appear in the accompanying test design specifications. For each feature or combination of features, the planner should also describe the approach that will be taken to ensure that it is adequately tested. Tools and techniques necessary for the tests should be included.

6. Item Pass/Fail Criteria

Given a test item and a test case, the tester must have a set of criteria to decide whether the test has passed or failed upon execution. The master test plan should provide a general description of these criteria. The test design specification gives more specific details for each item or group of items under test with that specification. A definition for the term failure was given in Chapter 2. Another way of describing the term is to state that a failure occurs when the actual output produced by the software does not agree with what was expected, under the conditions specified by the test. The differences in output behavior (the failure) are caused by one or more defects. The impact of the defect can be expressed using an approach based on establishing severity levels. Using this approach, scales are used to rate failures/defects with respect to their impact on the customer/user (note their previous use for stop-test decision making in the preceding section). For example, on a scale with values from 1 to 4, a level 4 defect/failure may have a minimal impact on the customer/user, but one at level 1 will make the system unusable.
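The pass/fail rule and the 1-to-4 severity scale can be modeled as below. This is a minimal sketch: the `Severity` member names and the `CaseResult` record are hypothetical, and equality of actual and expected output stands in for whatever item-specific criteria the test design specification actually defines.

```python
from dataclasses import dataclass
from enum import IntEnum
from typing import Any, Optional


class Severity(IntEnum):
    """Hypothetical 1-4 scale: 1 makes the system unusable, 4 has minimal impact."""
    UNUSABLE = 1
    MAJOR = 2
    MODERATE = 3
    MINIMAL = 4


@dataclass
class CaseResult:
    case_id: str
    expected: Any
    actual: Any
    severity: Optional[Severity] = None  # rated by the tester only when the test fails

    @property
    def passed(self) -> bool:
        # The test fails when the actual output does not agree with the
        # expected output under the conditions specified by the test.
        return self.actual == self.expected


results = [
    CaseResult("TC-01", expected=100, actual=100),
    CaseResult("TC-02", expected="OK", actual="TIMEOUT", severity=Severity.MAJOR),
]
for r in results:
    print(r.case_id, "pass" if r.passed else f"fail (severity {int(r.severity)})")
```

Using an `IntEnum` keeps the levels comparable, so "more severe" is simply "numerically lower", matching the scale in the text.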

7. Suspension and Resumption Criteria

In this section of the test plan, criteria to suspend and resume testing are described. In the simplest of cases testing is suspended at the end of a working day and resumed the following morning. For some test items this condition may not apply, and additional details need to be provided by the test planner. The test plan should also specify conditions to suspend testing based on the effects or criticality level of the failures/defects observed. Conditions for resuming the test after a suspension should also be specified. For some test items, resumption may require certain tests to be repeated.
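Severity-based suspension and resumption conditions can be written down precisely enough to check mechanically. The sketch below assumes the same hypothetical 1-to-4 scale and a made-up rule: suspend on any open level-1 defect, or when open level-2 defects reach a planner-chosen limit (`MAJOR_LIMIT` is an illustrative value, not from the standard).

```python
from enum import IntEnum


class Severity(IntEnum):
    # Hypothetical 1-4 scale: 1 = system unusable, 4 = minimal impact.
    UNUSABLE = 1
    MAJOR = 2
    MODERATE = 3
    MINIMAL = 4


MAJOR_LIMIT = 3  # hypothetical threshold chosen by the test planner


def should_suspend(open_defects):
    """Suspend on any open level-1 defect, or on too many open level-2 defects."""
    if Severity.UNUSABLE in open_defects:
        return True
    return sum(1 for s in open_defects if s == Severity.MAJOR) >= MAJOR_LIMIT


def should_resume(open_defects, repeated_tests_passed):
    """Resume once the suspension condition is cleared and any tests the plan
    requires to be repeated have passed."""
    return not should_suspend(open_defects) and repeated_tests_passed


print(should_suspend([Severity.MAJOR, Severity.MINIMAL]))  # False
print(should_suspend([Severity.UNUSABLE]))                 # True
```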

8. Test Deliverables

Execution-based testing has a set of deliverables that includes the test plan along with its associated test design specifications, test procedures, and test cases. The latter describe the actual test inputs and expected outputs. Deliverables may also include other documents that result from testing, such as test logs, test transmittal reports, test incident reports, and a test summary report. These documents are described in subsequent sections of this chapter. Preparing and storing these documents requires considerable resources, so each organization should decide which of these documents are required for a given project.

Another test deliverable is the test harness. This is supplementary code written specifically to support the test efforts, for example, module drivers and stubs. Drivers and stubs are necessary for unit and integration test, and they often amount to a substantial body of code. They should be well designed and stored for reuse in testing subsequent releases of the software. Other support code, for example, testing tools that will be developed especially for this project, should also be described in this section of the test plan.
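To make the driver/stub idea concrete, the Python sketch below tests a hypothetical unit, `invoice_total`, whose tax-rate dependency is not yet available. The stub returns canned tax rates in place of that module, and the driver feeds test inputs to the unit and compares actual with expected output. All names and values are illustrative assumptions.

```python
def invoice_total(amounts, tax_lookup, region):
    """Unit under test (hypothetical): depends on a tax-rate lookup module."""
    subtotal = sum(amounts)
    return round(subtotal * (1 + tax_lookup(region)), 2)


def tax_lookup_stub(region):
    """Stub: stands in for the real, not-yet-integrated tax module with canned answers."""
    return {"A": 0.10, "B": 0.0}[region]


def run_driver():
    """Driver: calls the unit with test inputs and records pass/fail per test case."""
    cases = [
        ("TC-01", [10.0, 20.0], "A", 33.0),
        ("TC-02", [5.0], "B", 5.0),
    ]
    results = {}
    for case_id, amounts, region, expected in cases:
        actual = invoice_total(amounts, tax_lookup_stub, region)
        results[case_id] = "pass" if actual == expected else "fail"
    return results


print(run_driver())  # {'TC-01': 'pass', 'TC-02': 'pass'}
```

Passing the dependency in as a parameter is one design choice that makes stubbing easy; once the real module is integrated, the same driver can be rerun against it, which is part of why harness code is worth keeping for later releases.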

9. Testing Tasks

In this section the test planner should identify all testing-related tasks and their dependencies. Using a Work Breakdown Structure (WBS) is useful here.

A Work Breakdown Structure is a hierarchical or treelike representation of all the tasks that are required to complete a project.

High-level tasks sit at the top of the hierarchical task tree. Leaves are detailed tasks, sometimes called work packages, that can be done by 1-2 people in a short time period, typically 3-5 days. The WBS is used by project managers for defining the tasks and work packages needed for project planning. The test planner can use the same hierarchical task model but focus only on defining testing tasks. Rakos gives a good description of the WBS and other models and tools useful for both project and test management.
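A testing-only WBS is just a tree whose leaves are work packages. The sketch below represents it in Python, collects the work packages, and rolls effort estimates up the tree; the task names and day counts are hypothetical, chosen to fall in the 3-5 day range mentioned above.

```python
from dataclasses import dataclass, field


@dataclass
class Task:
    name: str
    days: int = 0  # effort estimate; meaningful only for leaves (work packages)
    children: list = field(default_factory=list)

    def work_packages(self):
        """Yield the leaves of the task tree, in left-to-right order."""
        if not self.children:
            yield self
        for child in self.children:
            yield from child.work_packages()

    def total_days(self):
        # Effort rolls up from the work packages to the higher-level tasks.
        return sum(wp.days for wp in self.work_packages())


wbs = Task("System test", children=[
    Task("Plan", children=[Task("Write system test plan", 4)]),
    Task("Design", children=[Task("Design performance tests", 5),
                             Task("Design stress tests", 3)]),
    Task("Execute", children=[Task("Run tests and log results", 5)]),
])
print([wp.name for wp in wbs.work_packages()])
print(wbs.total_days())  # 17
```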

10. The Testing Environment

Here the test planner describes the software and hardware needs for the testing effort. For example, any special equipment or hardware needed, such as emulators, telecommunication equipment, or other devices, should be noted. The planner must also indicate any laboratory space containing the equipment that needs to be reserved. The planner also needs to specify any special software needs, such as coverage tools, databases, and test data generators. Security requirements for the testing environment may also need to be described.

11. Responsibilities

The staff who will be responsible for test-related tasks should be identified. This includes personnel who will be:

• transmitting the software-under-test;
• developing test design specifications and test cases;
• executing the tests and recording results;
• tracking and monitoring the test efforts;
• checking results;
• interacting with developers;
• managing and providing equipment;
• developing the test harnesses;
• interacting with the users/customers.

This group may include developers, testers, software quality assurance staff, systems analysts, and customers/users.

12. Staffing and Training Needs

The test planner should describe the staff and the skill levels needed to carry out test-related responsibilities such as those listed in the section above. Any special training required to perform a task should be noted.

13. Scheduling

Task durations should be established and recorded with the aid of a task networking tool. Test milestones should be established, recorded, and scheduled. These milestones usually appear in the project plan as well as the test plan. They are necessary for tracking testing efforts to ensure that actual testing is proceeding as planned. Schedules for use of staff, tools, equipment, and laboratory space should also be specified. A tester will find that PERT and Gantt charts are very useful tools for these assignments.
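Behind a PERT-style task network sits a simple computation: each task can start only when its latest-finishing predecessor is done, and the chain of tasks that determines the end date is the critical path. The Python sketch below performs that forward pass using the standard-library `graphlib` module (Python 3.9+); the task names, durations, and dependencies are hypothetical, and a real networking tool would also handle calendars, resources, and three-point estimates.

```python
from graphlib import TopologicalSorter


def critical_path(durations, depends_on):
    """Forward pass over a task network.

    durations:  task -> duration in days
    depends_on: task -> set of tasks that must finish before it starts
    Returns (earliest project finish in days, tasks on the critical path).
    """
    graph = {task: depends_on.get(task, set()) for task in durations}
    finish, via = {}, {}
    for task in TopologicalSorter(graph).static_order():
        preds = graph[task]
        # A task starts when its latest-finishing predecessor is done.
        via[task] = max(preds, key=finish.get) if preds else None
        start = finish[via[task]] if preds else 0
        finish[task] = start + durations[task]
    # Walk back from the task that finishes last.
    task = max(finish, key=finish.get)
    path = []
    while task is not None:
        path.append(task)
        task = via[task]
    return finish[path[0]], path[::-1]


durations = {"write plan": 4, "design tests": 5, "build harness": 6,
             "execute tests": 5, "summary report": 2}
depends_on = {"design tests": {"write plan"}, "build harness": {"write plan"},
              "execute tests": {"design tests", "build harness"},
              "summary report": {"execute tests"}}
print(critical_path(durations, depends_on))
# (17, ['write plan', 'build harness', 'execute tests', 'summary report'])
```

Here the harness work, not test design, drives the schedule, so a slip in harness development would delay the summary report milestone day for day.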

14. Risks and Contingencies

Every testing effort has risks associated with it. A high degree of criticality or complexity in the software under test, or a tight delivery deadline, imposes risks that may have negative impacts on project goals. These risks should be (i) identified, (ii) evaluated in terms of their probability of occurrence, (iii) prioritized, and (iv) covered by contingency plans that can be activated if the risk occurs.

An example of a risk-related test scenario is as follows. A test planner, let's say Mary Jones, has made assumptions about the availability of the software under test. A particular date was selected to transmit the test item to the testers based on completion date information for that item in the project plan. Ms. Jones has identified a risk: she realizes that the item may not be delivered to the testers on time. This delay may occur for several reasons, alone or in combination: the item is complex, the developers are inexperienced, the item implements a new algorithm, or it needs redesign. Due to these conditions there is a high probability that this risk will occur, so a contingency plan should be in place. For example, Ms. Jones could build some flexibility in resource allocations into the test plan so that testers and equipment can operate beyond normal working hours. Or an additional group of testers could be made available to work with the original group when the software is ready to test. In this way the schedule for testing can continue as planned, and deadlines can be met.

It is important for the test planner to identify test-related risks, analyze them in terms of their probability of occurrence, and be ready with a contingency plan when any high-priority risk-related event occurs. Experienced planners realize the importance of risk management.
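The text asks for risks to be evaluated by probability and then prioritized. One common way to rank them, not prescribed by the text or by IEEE 829, is risk exposure: the product of probability and impact. The sketch below assumes a hypothetical 1-5 impact scale and made-up probabilities, and reuses the late-delivery risk from the Mary Jones scenario.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    description: str
    probability: float  # estimated likelihood of occurrence, 0.0 to 1.0
    impact: int         # hypothetical scale: 1 (low) to 5 (severe)
    contingency: str

    @property
    def exposure(self) -> float:
        # Risk exposure = probability x impact (a common ranking heuristic).
        return self.probability * self.impact


risks = [
    Risk("Test item delivered late", 0.7, 4,
         "Extend tester/equipment hours; add a second test group"),
    Risk("Lab equipment unavailable", 0.2, 3, "Book backup lab space"),
    Risk("Coverage tool license expires", 0.1, 2, "Renew license before system test"),
]
ranked = sorted(risks, key=lambda r: r.exposure, reverse=True)
for r in ranked:
    print(f"{r.exposure:.1f}  {r.description} -> {r.contingency}")
```

Keeping the contingency next to each risk means the high-priority entries at the top of the list already say what to activate if the risk occurs.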

15. Testing Costs

The IEEE standard for test plan documentation does not include a separate cost component in its specification of a test plan. This is the usual case for many test plans, since test costs are very often allocated in the overall project management plan. The project manager, in consultation with developers and testers, estimates the costs of testing. If the test plan is an independent document prepared by the testing group and has a cost component, the test planner will need tools and techniques to help estimate test costs. Test costs that should be included in the plan are:

• costs of planning and designing the tests;
• costs of acquiring the hardware and software necessary for the tests (including development of the test harnesses);
• costs to support the test environment;
• costs of executing the tests;
• costs of recording and analyzing test results;
• tear-down costs to restore the environment.

Other costs related to testing that may be spread among several projects are the costs of training the testers and the costs of maintaining the test database. Costs for reviews should appear in a separate review plan.
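A cost component built from these categories is a simple roll-up. In the sketch below only the category names come from the text; every figure, and the choice to split shared training and test database costs equally across projects, is a hypothetical assumption. Review costs are left out because they belong in the review plan.

```python
# Hypothetical figures in arbitrary currency units; categories follow the text.
direct_costs = {
    "planning and designing the tests": 12_000,
    "hardware/software and test harness": 8_000,
    "test environment support": 3_000,
    "test execution": 15_000,
    "recording and analyzing results": 4_000,
    "tear-down": 1_000,
}
# Costs spread among several projects, apportioned equally here (an assumption).
shared_costs = {"tester training": 9_000, "test database maintenance": 6_000}
projects_sharing = 3


def estimate_test_cost(direct, shared, n_projects):
    """Direct test costs plus this project's share of cross-project costs."""
    return sum(direct.values()) + sum(shared.values()) / n_projects


print(estimate_test_cost(direct_costs, shared_costs, projects_sharing))  # 48000.0
```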

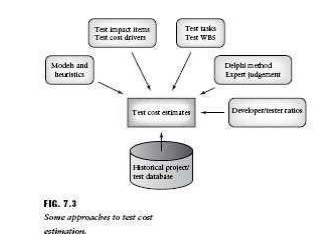

When estimating testing costs, the test planner should consider organizational, project, and staff characteristics that affect the cost of testing. Several key characteristics, which we will call “test cost impact items”, are briefly described below.

The nature of the organization: its testing maturity level and general maturity. This will determine the degree of test planning, the types of testing methods applied, the types of tests that are designed and implemented, the quality of the staff, the nature of the testing tasks, the availability of testing tools, and the ability to manage the testing effort. It will also determine the degree of support given to the testers by the project manager and upper management.

The nature of the software product being developed. The tester must understand the nature of the system to be tested. For example, is it a real-time, embedded, mission-critical system, or a business application? In general, the testing scope for a business application will be smaller than one for a mission- or safety-critical system, since in the latter case there is a strong possibility that software defects and/or poor software quality could result in loss of life or property. Mission- and safety-critical software systems usually require extensive unit and integration tests as well as many types of system tests (refer to Chapter 6). The level of reliability required for these systems is usually much higher than for ordinary applications.

For these reasons, the number of test cases, test procedures, and test scripts will most likely be higher for this type of software than for an average application. Tool and resource needs will be greater as well.

The scope of the test requirements. This includes the types of tests required, such as integration, performance, reliability, and usability tests. This characteristic relates directly to the nature of the software product. As described above, mission/safety-critical systems and real-time embedded systems usually require more extensive system tests for functionality, reliability, performance, configuration, and stress than a simple application does. These test requirements will affect the number of tests and test procedures required, the quantity and complexity of the testing tasks, and the hardware and software needs for testing.

The level of tester ability. The education, training, and experience levels of the testers will affect their ability to design, develop, and execute tests and to analyze test results in a timely and effective manner. It will also affect the types of testing tasks they are able to carry out.

Knowledge of the project problem domain. It is not always possible for testers to have detailed knowledge of the problem domain of the software they are testing. If the level of knowledge is poor, outside experts or consultants may need to be hired to assist with the testing efforts, thus adding to costs.

The level of tool support. Testing tools can assist with designing and executing tests, as well as collecting and analyzing test data. Automated support for these tasks could have a positive impact on the productivity of the testers; thus it has the potential to reduce test costs. Tools and hardware environments are necessary to drive certain types of system tests, and if the product requires these types of tests, their cost should be folded in.

Training requirements. State-of-the-art tools and techniques do help improve tester productivity, but training is often required so that testers can use these tools and techniques properly and effectively. Depending on the organization, these training efforts may be included in the costs of testing. These costs, as well as tool costs, could be spread over several projects.

Project planners have cost estimation models, for example, the COCOMO model, which they use to estimate overall project costs. At this time, models of this type have not been designed specifically for test cost estimation.
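For readers unfamiliar with COCOMO, the sketch below shows what its simplest form computes, using the basic-model coefficients Boehm published in 1981 for the three project modes. Note that it estimates whole-project effort and schedule from size in thousands of lines of code, not test cost, which is the gap the paragraph above points out; the dictionary and function names are our own, and the intermediate model and COCOMO II add cost drivers not shown here.

```python
# Basic COCOMO coefficients (Boehm, 1981) for the three project modes:
# effort = a * KLOC**b (person-months), duration = c * effort**d (months).
BASIC_COCOMO = {
    "organic": (2.4, 1.05, 2.5, 0.38),
    "semi-detached": (3.0, 1.12, 2.5, 0.35),
    "embedded": (3.6, 1.20, 2.5, 0.32),
}


def basic_cocomo(kloc, mode="organic"):
    """Return (effort in person-months, development time in months)
    for a project of `kloc` thousand delivered source lines."""
    a, b, c, d = BASIC_COCOMO[mode]
    effort = a * kloc ** b
    return effort, c * effort ** d


for mode in BASIC_COCOMO:
    effort, months = basic_cocomo(32, mode)
    print(f"{mode:>13}: {effort:6.1f} person-months over {months:4.1f} months")
```

The embedded mode, which covers the real-time and mission-critical systems discussed above, yields the largest effort for the same size, consistent with the text's point that such systems cost more to build and test.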
