
Technical Motivations for Web Services


The technical motivations for Web Services are far more complex than the

business motivations. Fundamentally, technologists are looking for the

simplicity and flexibility promised, but never delivered, by RPC architectures

and object-oriented technologies.

Limitations of CORBA and DCOM

Programming has been performed on a computer-by-computer basis for much

of the history of computing. Programs were discrete chunks of computer code

that ran on individual computers. Even object-oriented programming originated

in a single-computer environment. This isolated computer mindset has been

around so long that it pervades all thinking about software.

Then along came networks, and technologists looked for ways to break up

program functionality onto multiple computers. Early communication protocols,

such as the Network File System for Unix and the Open Software Foundation’s Distributed Computing

Environment, focused on the network layer. These protocols, in turn, led to the

development of wire protocols for distributed computing—in particular, the

Object Remote Procedure Call (ORPC) protocol for Microsoft’s DCOM and the

Object Management Group’s Internet Inter-ORB Protocol (IIOP) that underlies

CORBA.

RPC architectures such as DCOM and CORBA enabled programs to be broken

into different pieces running on different computers. Object-oriented

techniques were particularly suited to this distributed environment for a few

reasons. First, objects maintained their own discrete identities. Second, the

code that handles the communication between objects could be encapsulated into

its own set of classes so that programmers working in a distributed environment

needn’t worry about how this communication worked.

However, programmers still had that isolated computer mindset, which

colored both DCOM’s and CORBA’s approach: Write your programs so that the

remote computer appears to be a part of your own computer. RPC architectures

all involved marshalling a piece of a program on one computer and shipping it

to another system.
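
The "remote computer appears to be a part of your own computer" idea can be illustrated with a minimal sketch. Everything here is hypothetical: the `InventoryService` class, the `RemoteProxy` stub, and the in-process `dispatch` function that stands in for the network. JSON is used only as a convenient stand-in for a binary wire format; neither DCOM nor CORBA works this way in detail.

```python
import json


class InventoryService:
    """Hypothetical object that lives on the 'remote' machine."""

    def __init__(self):
        self._stock = {"widget": 12}

    def quantity(self, item):
        return self._stock.get(item, 0)


def dispatch(service, request_bytes):
    """Server side: unmarshal the request, invoke the method, marshal the result."""
    request = json.loads(request_bytes.decode("utf-8"))
    method = getattr(service, request["method"])
    result = method(*request["args"])
    return json.dumps({"result": result}).encode("utf-8")


class RemoteProxy:
    """Client-side stub: looks like a local object but marshals every call."""

    def __init__(self, transport):
        # transport is any callable that takes request bytes and returns reply bytes
        self._transport = transport

    def __getattr__(self, name):
        # Called only for attributes that are not found normally,
        # so every unknown method name becomes a remote call.
        def call(*args):
            request = json.dumps({"method": name, "args": list(args)}).encode("utf-8")
            response = self._transport(request)
            return json.loads(response.decode("utf-8"))["result"]

        return call


# The lambda stands in for a network round trip (an assumption for this sketch).
service = InventoryService()
proxy = RemoteProxy(lambda data: dispatch(service, data))
count = proxy.quantity("widget")  # reads like a local call
```

The calling code never sees the marshalling step, which is exactly the convenience, and the hidden coupling, that the isolated-computer mindset produces.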

Unfortunately, both DCOM and CORBA share many of

the same problems. DCOM is expressly a Microsoft-only architecture, and

although CORBA is intended to provide cross-platform interoperability, in

reality it is too complex and semantically ambiguous to provide any level of

interoperability without a large amount of manual integration work. In

addition, the specter of marshalling executable code and shipping it over the

Internet opens up a Pandora’s box of security concerns, such as viruses and

worms.

Furthermore, each of these technologies handles key functionality in its

own, proprietary way. CORBA’s payload parameter value format is the Common Data

Representation (CDR) format, whereas DCOM uses the incompatible Network Data

Representation (NDR) format (Web Services use XML). Likewise, CORBA uses

Interoperable Object References (IORs) for endpoint naming, whereas DCOM uses

OBJREFs (Web Services use URIs, which are generalized URLs).
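
As a rough illustration of the Web Services side of this comparison, the sketch below encodes a call's parameters as plain XML text and names the endpoint with an ordinary URI. The operation name, the parameter names, and the `example.com` address are all hypothetical, and the message is a simplified XML document rather than a real SOAP envelope.

```python
import xml.etree.ElementTree as ET
from urllib.parse import urlparse


def encode_request(operation, params):
    """Encode an operation name and its parameters as an XML string."""
    root = ET.Element(operation)
    for name, value in params.items():
        ET.SubElement(root, name).text = str(value)
    return ET.tostring(root, encoding="unicode")


def decode_request(xml_text):
    """Recover the operation name and parameters from the XML string."""
    root = ET.fromstring(xml_text)
    return root.tag, {child.tag: child.text for child in root}


# Hypothetical endpoint: a URI any platform can parse, unlike an IOR or OBJREF.
endpoint = "http://example.com/services/inventory"
parts = urlparse(endpoint)

payload = encode_request("GetQuantity", {"item": "widget", "warehouse": "east"})
operation, params = decode_request(payload)
```

Because both the payload and the endpoint name are plain text in widely implemented formats, either side can be written in any language that has an XML parser and a URI library.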

By representing business concepts with systems of business components,

business modelers seek to achieve the following objectives:

- Limit complexity and costs by developing coarse-grained software units.
- Support high levels of reuse of business components.
- Speed up the development cycle by combining preexisting business components and continuous integration.
- Deliver systems that can easily evolve.
- Allow different vendors to provide competing business components that serve the same purpose, leading to a market in business components.

Unfortunately, large-scale business modeling has not widely achieved any

of these objectives, for several reasons, including the following:

- Business components in reality typically have complex, nonstandard interfaces, which makes reuse and substitutability difficult to achieve.
- As systems of business components evolve into increasingly complex, comprehensive systems, it becomes very difficult to maintain the encapsulation of the components. Ideally, each component is a black box that can be plugged into the underlying framework; in reality, developers must spend time tweaking the internal operations of the components.
- The business drivers behind the development of the business components lead to custom development, which makes each component unique and custom in its own right. Every company handles its business models differently, so every business component is different.

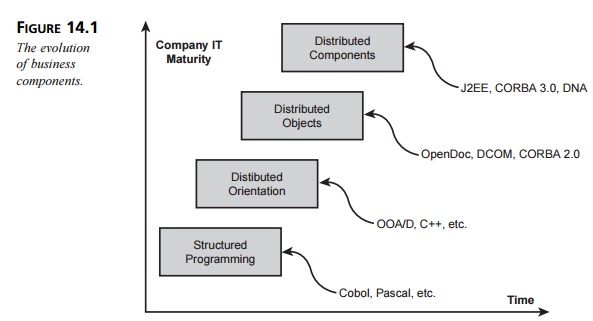

The Web Services model can be thought of as the next step in the

evolution of business components. Whereas business components are large,

recursively defined collections of objects, Web Services should be relatively

small, self-organizing components with well-defined, dynamic interfaces.

Problems with Vendor Dependence

Early leaders in every nascent industry find that they must integrate

their companies vertically. For example, Standard Oil drilled the wells,

transported the petroleum, refined it, distributed it, and then ran the gas

stations that sold it. It had to follow this business model, because there were

no other companies that could provide each of these services at a low-enough

cost or with adequate quality.

The same is true of the software industry. ERP systems were essential to

companies’ operations, because the only cost-effective way to get all the

operational components that make up ERP systems to work together was to get

them from the same company. If you tried to cobble together accounting and

manufacturing software back in 1995, you would have found large variations in

quality and extremely high integration costs.

Simply put, the main advantage of single-vendor distributed

software solutions is that they work. When the cost of integration is high,

going with a single vendor will save money. However, there are also several

disadvantages to obtaining software from a single vendor. The disadvantages are

as follows:

- As the market matures, other vendors will offer individual packages that are of a higher quality than the single vendor’s, making a “best-of-breed” approach more attractive.
- The purchasing company’s business grows to depend on the business strategy of the vendor. Shifts in strategic direction or business problems at the vendor can filter down to the vendor’s customers (the “all-the-eggs-in-one-basket” problem).
- It is very difficult to integrate a “one-stop shop” vendor’s product with other vendors’ products at other companies. As a result, a single-vendor approach limits the potential of e-business.

Taking a vendor-independent software strategy solves the problems of

vendor dependence but is only cost-effective when certain conditions are met:

- A “best-of-breed” approach makes sense because the market is mature enough to offer competing packages of sufficient quality.
- There is a broadly accepted integration framework that allows for inexpensive integration of different packages, both within companies and between companies.

The Web Services model has the potential to meet both of these

conditions. In particular, Web Services’ loose coupling is the key to flexible,

inexpensive integration capabilities.

Reuse and Integration Goals

Software reuse has been a primary goal of object-oriented architectures

but, like the Holy Grail, has always been just out of reach. Creating objects

and components to be reusable takes more development time and design skill, and

therefore more money up front.

However, conventional wisdom says that coding for reusability saves

money in the long run, so why isn’t coding for reusability more prevalent?

The problem is that the goal of software reuse presupposes a world with

stable business requirements, and such a world just doesn’t exist. Building a component

so that it can handle future situations different from the current ones tends

to be wasted work, because the future always brings surprises. Instead, it

usually makes more sense to take an agile approach to components and include

only the functionality you need right now. Such an approach keeps costs down

and is more likely to meet the business requirements, but the resulting

component is rarely reusable.

Simple integration of software applications is likewise just out of

reach. This problem is especially onerous in the area of legacy integration.

Today’s approach to integrating legacy systems into component architectures is

to create a “wrapper” for the legacy system so that it will expose a standard

interface that all the other components know how to work with. What ends up

happening is that getting that wrapper to work becomes the major expense and

takes the most time. Maybe 90 percent of your software is easy to integrate,

but the remaining 10 percent takes up most of your budget.
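
The wrapper approach described above might look something like the following sketch. The legacy system, its fixed-width record format, and the `OrderService` interface are all invented for illustration; a real legacy interface would be far messier, which is where the cost comes from.

```python
from typing import Protocol


class LegacyOrderSystem:
    """Hypothetical legacy system that speaks fixed-width text records."""

    def lookup(self, record: str) -> str:
        order_id = record[:8].strip()
        # Reply format (assumed): 8-character order id followed by a 3-letter code.
        status = "SHP" if order_id == "1001" else "UNK"
        return f"{order_id:<8}{status}"


class OrderService(Protocol):
    """The standard interface the other components expect (assumed)."""

    def order_status(self, order_id: int) -> str: ...


class LegacyOrderWrapper:
    """Wrapper that translates the standard interface to and from legacy records."""

    _STATUS_NAMES = {"SHP": "shipped", "UNK": "unknown"}

    def __init__(self, legacy: LegacyOrderSystem):
        self._legacy = legacy

    def order_status(self, order_id: int) -> str:
        record = f"{order_id:<8}"           # build the fixed-width request
        reply = self._legacy.lookup(record)
        code = reply[8:11]                  # slice the status code out of the reply
        return self._STATUS_NAMES.get(code, "unknown")


def report(service: OrderService, order_id: int) -> str:
    """A client component that knows only the standard interface."""
    return f"order {order_id}: {service.order_status(order_id)}"


wrapper = LegacyOrderWrapper(LegacyOrderSystem())
line = report(wrapper, 1001)
```

Even in this toy version, all of the knowledge about record widths and status codes ends up in the wrapper, which hints at why the wrapper tends to dominate the integration effort.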
