Chapter: Advanced Computer Architecture : Multiprocessors and Thread Level Parallelism

Multithreading exploiting TLP

Multithreading

exploiting TLP.

1. Multithreading: Exploiting

Thread-Level Parallelism within a Processor

Multithreading allows multiple threads to share the

functional units of a single processor in an overlapping fashion. To permit

this sharing, the processor must duplicate the independent state of each

thread. For example, a separate copy of the register file, a separate PC, and a

separate page table are required for each thread.

There are

two main approaches to multithreading.

Ø Fine-grained

multithreading switches between threads on each instruction, causing the execution of multiples threads to be interleaved. This

interleaving is often done in a round-robin fashion, skipping any threads that

are stalled at that time.

Ø Coarse-grained multithreading was invented as an alternative to fine

grained multithreading. Coarse-grained multithreading switches threads only on

costly stalls, such as level

two cache misses. This change

relieves the need

to have thread- switching be

essentially free and is much less

likely to slow the processor down, since instructions from other threads will

only be issued, when a thread encounters a costly stall.

2. Simultaneous Multithreading:

Converting Thread-Level Parallelism into Instruction-Level Parallelism:

Simultaneous

multithreading (SMT) is a variation on multithreading that uses the resources

of a multiple issue, dynamically-scheduled processor to exploit TLP at the same

time it exploits ILP. The key insight that motivates SMT is that modern

multipleissue processors often have more functional unit parallelism available

than a single thread can effectively use. Furthermore, with register renaming

and dynamic scheduling, multiple instructions from independent threads can be

issued without regard to the dependences among them; the resolution of the

dependences can be handled by the dynamic scheduling capability.

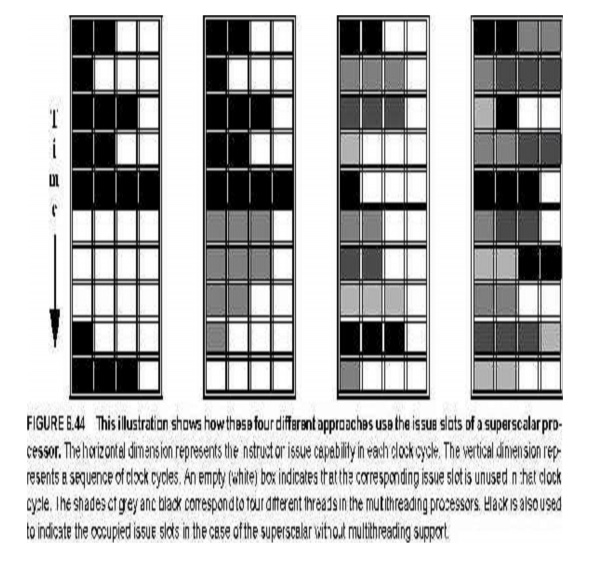

Figure

6.44 conceptually illustrates the differences in a processor’s ability to

exploit the resources of a superscalar for the following processor configurations:

n a superscalar with no

multithreading support,

n a superscalar with

coarse-grained multithreading,

n a superscalar with

fine-grained multithreading, and

n a superscalar with

simultaneous multithreading.

In the

superscalar without multithreading support, the use of issue slots is limited

by a lack of ILP.

In the

coarse-grained multithreaded superscalar, the long stalls are partially hidden

by switching to another thread that uses the resources of the processor.In the

fine-grained case, the interleaving of threads eliminates fully empty slots.

Because only one thread issues instructions in a given clock cycle.

In the

SMT case, thread-level parallelism (TLP) and instruction-level parallelism

(ILP) are exploited simultaneously; with multiple threads using the issue slots

in a single clock cycle.

Figure

6.44 greatly simplifies the real operation of these processors it does

illustrate the potential performance advantages of multithreading in general

and SMT in particular.

3. Design Challenges in SMT

processors

There are

a variety of design challenges for an SMT processor, including:

Ø Dealing

with a larger register file needed to hold multiple contexts,

Ø Maintaining

low overhead on the clock cycle, particularly in critical steps such as

instruction issue, where more candidate instructions need to be considered, and

in instruction completion, where choosing what instructions to commit may be

challenging, and

Ø Ensuring

that the cache conflicts generated by the simultaneous execution of multiple

threads do not cause significant performance degradation.

In

viewing these problems, two observations are important. In many cases, the

potential performance overhead due to multithreading is small, and simple

choices work well enough. Second, the efficiency of current super-scalars is

low enough that there is room for significant improvement, even at the cost of

some overhead.

Related Topics