Chapter: Advanced Computer Architecture: Multiprocessors and Thread Level Parallelism

Distributed Shared-Memory Architectures


There are several disadvantages to a shared-memory multiprocessor that does not keep caches coherent in hardware (for example, one that caches only private data):

- First, compiler mechanisms for transparent software cache coherence are very limited.
- Second, without cache coherence, the multiprocessor loses the advantage of being able to fetch and use multiple words in a single cache block for close to the cost of fetching one word.
- Third, mechanisms for tolerating latency, such as prefetch, are more useful when they can fetch multiple words, such as a cache block, and when the fetched data remain coherent; we will examine this advantage in more detail later.

These disadvantages are magnified by the large latency of access to remote memory versus a local cache. For these reasons, cache coherence is an accepted requirement in small-scale multiprocessors.

For larger-scale architectures, there are new challenges to extending the cache-coherent shared-memory model. Although the bus can certainly be replaced with a more scalable interconnection network, and the memory can be distributed so that memory bandwidth also scales, the lack of scalability of the snooping coherence scheme still needs to be addressed. A multiprocessor that physically distributes its memory among the nodes while still providing a single shared address space is known as a Distributed Shared-Memory (DSM) architecture.

The alternative to a snooping coherence protocol is a directory protocol. A directory keeps the state of every block that may be cached. Information in the directory includes which caches have copies of the block, whether it is dirty, and so on.

To prevent the directory from becoming the bottleneck, directory entries can be distributed along with the memory, so that different directory accesses can go to different locations, just as different memory requests go to different memories. A distributed directory retains the characteristic that the sharing status of a block is always in a single known location. This property is what allows the coherence protocol to avoid broadcast.
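As an illustration of why a distributed directory avoids broadcast, here is a minimal Python sketch. It assumes a hypothetical machine of `NUM_NODES` nodes, each owning a contiguous range of `BLOCKS_PER_NODE` memory blocks; `DirectorySlice`, `home_node`, `lookup_sharers`, and `add_sharer` are illustrative names, not part of any real system. Each node stores directory entries only for its own blocks, so a lookup consults exactly one node.

```python
NUM_NODES = 4            # hypothetical number of nodes
BLOCKS_PER_NODE = 1024   # hypothetical number of memory blocks per node


class DirectorySlice:
    """Directory entries for the blocks held in one node's memory."""

    def __init__(self, node_id: int) -> None:
        self.node_id = node_id
        self.sharers: dict[int, set[int]] = {}  # block number -> IDs of nodes caching it
        self.lookups = 0                        # how many lookups this slice has served


# One directory slice per node, distributed along with the memory.
slices = [DirectorySlice(n) for n in range(NUM_NODES)]


def home_node(block: int) -> int:
    """Static mapping: each block's entry lives in exactly one known node."""
    return block // BLOCKS_PER_NODE


def lookup_sharers(block: int) -> set[int]:
    """Consult only the home node's slice; no other node is contacted."""
    directory = slices[home_node(block)]
    directory.lookups += 1
    return directory.sharers.get(block, set())


def add_sharer(block: int, node: int) -> None:
    """Record that `node` now caches `block` (stored at the block's home)."""
    slices[home_node(block)].sharers.setdefault(block, set()).add(node)
```

For example, after `add_sharer(2050, 1)` and `add_sharer(2050, 3)`, calling `lookup_sharers(2050)` returns `{1, 3}`, and only node 2's slice records a lookup. The contiguous-range mapping is just one possible static placement; any fixed address-to-node mapping keeps the single-known-location property.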

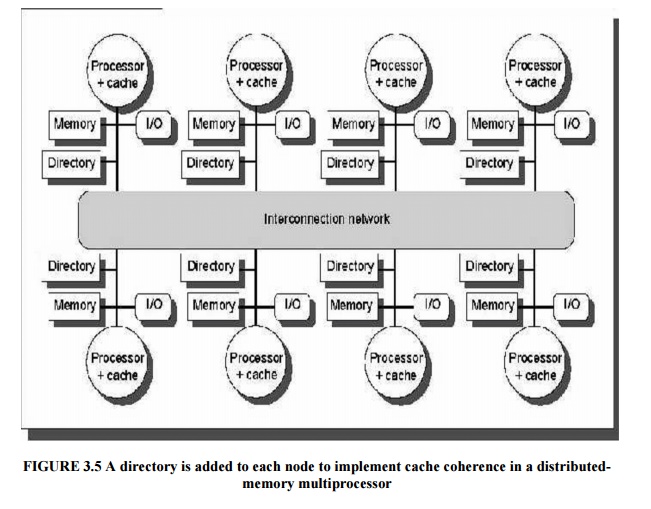

Figure 3.5 shows how our distributed-memory multiprocessor looks with the directories added to each node.

Directory-Based Cache-Coherence Protocols: The Basics

There are two primary operations that a directory protocol must implement:

- handling a read miss, and
- handling a write to a shared, clean cache block.

(Handling a write miss to a shared block is a simple combination of these two.) To implement these operations, a directory must track the state of each cache block.

In a simple protocol, these states could be the following:

- Shared: One or more processors have the block cached, and the value in memory is up to date (as well as in all the caches).
- Uncached: No processor has a copy of the cache block.
- Exclusive: Exactly one processor has a copy of the cache block and it has written the block, so the memory copy is out of date. The processor is called the owner of the block.
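The three directory states, and the number of copies each one allows, can be written down as a small Python check. This is an illustrative sketch: `DirState` and `entry_is_consistent` are hypothetical names, and `holders` stands for the set of processors the directory believes have a copy of the block.

```python
from enum import Enum


class DirState(Enum):
    UNCACHED = "uncached"    # no processor has a copy
    SHARED = "shared"        # one or more clean copies; memory is up to date
    EXCLUSIVE = "exclusive"  # exactly one written copy; memory is out of date


def entry_is_consistent(state: DirState, holders: set[int]) -> bool:
    """Check that the set of processors holding the block matches the state."""
    if state is DirState.UNCACHED:
        return len(holders) == 0
    if state is DirState.SHARED:
        return len(holders) >= 1
    # EXCLUSIVE: the single holder is the owner of the block.
    return len(holders) == 1
```

For instance, an entry marked Exclusive with two holders, or Shared with none, fails the check.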

In addition to tracking the state of each cache block, we must track the processors that have copies of the block when it is shared, since they will need to be invalidated on a write.
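The two primary operations can be sketched at the directory level in Python. This is a simplified, hypothetical model: `DirEntry`, `read_miss`, and `write_to_shared` are illustrative names, messages are not modeled, and every transaction is assumed to complete atomically, which a real protocol cannot assume. It shows why the sharer set is needed: a write to a shared block must invalidate every other copy.

```python
from dataclasses import dataclass, field
from enum import Enum


class DirState(Enum):
    UNCACHED = "uncached"
    SHARED = "shared"
    EXCLUSIVE = "exclusive"


@dataclass
class DirEntry:
    state: DirState = DirState.UNCACHED
    sharers: set[int] = field(default_factory=set)  # in EXCLUSIVE, holds only the owner


def read_miss(entry: DirEntry, requester: int) -> str:
    """Handle a read miss at the home directory; return who supplies the data."""
    if entry.state is DirState.EXCLUSIVE:
        (owner,) = entry.sharers
        # The owner writes the dirty block back and keeps a shared copy.
        source = f"owner P{owner}"
    else:
        source = "memory"  # memory is up to date in UNCACHED and SHARED
    entry.sharers.add(requester)
    entry.state = DirState.SHARED
    return source


def write_to_shared(entry: DirEntry, writer: int) -> set[int]:
    """Handle a write by `writer` to a block in the SHARED state.

    Returns the processors whose copies must be invalidated.
    """
    assert entry.state is DirState.SHARED
    to_invalidate = entry.sharers - {writer}
    entry.sharers = {writer}  # the writer becomes the sole owner
    entry.state = DirState.EXCLUSIVE
    return to_invalidate
```

Starting from an uncached block, read misses from P0 and P2 leave it shared by `{0, 2}`; a write by P2 then returns `{0}` as the copy to invalidate and makes P2 the owner. A later read miss from P1 is served by the owner and leaves the block shared by `{1, 2}`.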

The simplest way to do this is to keep a bit vector for each memory block. When the block is shared, each bit of the vector indicates whether the corresponding processor has a copy of that block. We can also use the bit vector to keep track of the owner of the block when the block is in the exclusive state. For efficiency reasons, we also track the state of each cache block at the individual caches.
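A presence bit vector maps naturally onto an integer bit mask. The Python sketch below is illustrative (the class `SharerVector` and its methods are hypothetical names) and assumes processors are numbered from 0 to `num_procs - 1`. The same field records either the set of sharers or, in the exclusive state, the single owner.

```python
class SharerVector:
    """Presence bits for one memory block: bit i set means processor i has a copy."""

    def __init__(self, num_procs: int) -> None:
        self.num_procs = num_procs
        self.bits = 0

    def add(self, proc: int) -> None:
        self.bits |= 1 << proc

    def remove(self, proc: int) -> None:
        self.bits &= ~(1 << proc)

    def has(self, proc: int) -> bool:
        return ((self.bits >> proc) & 1) == 1

    def holders(self) -> list[int]:
        """Processors to invalidate on a write (when the block is shared)."""
        return [p for p in range(self.num_procs) if self.has(p)]

    def set_owner(self, proc: int) -> None:
        # In the exclusive state, a single set bit names the owner.
        self.bits = 1 << proc

    def owner(self) -> int:
        assert self.bits != 0 and (self.bits & (self.bits - 1)) == 0, "not exactly one bit set"
        return self.bits.bit_length() - 1
```

With P processors, a full bit vector costs P bits per memory block, so its storage overhead grows with the size of the machine.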

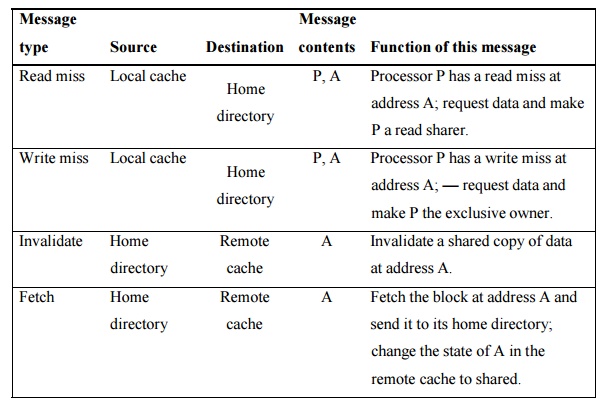

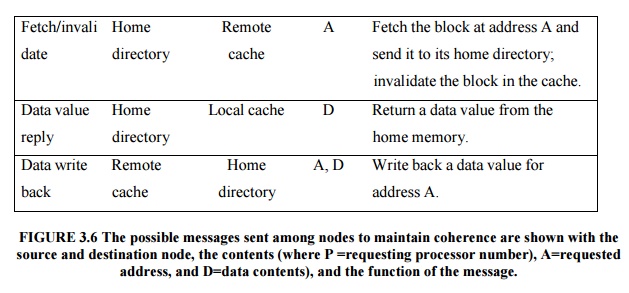

The message types that may be sent between the processors and the directories, that is, among the nodes, are catalogued in Figure 6.28. The local node is the node where a request originates.

The home node is the node where the memory location and the directory entry of an address reside. The physical address space is statically distributed, so the node that contains the memory and directory for a given physical address is known.

For example, the high-order bits may provide the node number, while the low-order bits provide the offset within the memory on that node. The local node may also be the home node. The directory must be accessed even when the home node is the local node, since copies may exist in yet a third node, called a remote node.
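The static address-to-home mapping can be sketched in Python by splitting a physical address into a node field and an offset field. The widths below (`NODE_BITS = 4` and `OFFSET_BITS = 28`, that is, 16 nodes with 256 MiB each) are hypothetical values chosen for the example, as are the function names `split_address` and `home_is_local`.

```python
NODE_BITS = 4     # hypothetical: 16 nodes
OFFSET_BITS = 28  # hypothetical: 256 MiB of memory per node


def split_address(paddr: int) -> tuple[int, int]:
    """Return (home node, offset within that node's memory) for a physical address."""
    node = paddr >> OFFSET_BITS                  # high-order bits: node number
    offset = paddr & ((1 << OFFSET_BITS) - 1)    # low-order bits: offset on that node
    assert node < (1 << NODE_BITS), "address outside the physical address space"
    return node, offset


def home_is_local(paddr: int, local_node: int) -> bool:
    """True when the requesting node is also the block's home node."""
    return split_address(paddr)[0] == local_node
```

For example, `split_address(0x30000040)` yields node 3 and offset `0x40`. Even when `home_is_local` returns `True`, the requester still has to consult the directory, because a remote node may hold a copy.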

A remote node is the node that has a copy of a cache block, whether exclusive (in which case it is the only copy) or shared. A remote node may be the same as either the local node or the home node. In such cases, the basic protocol does not change, but interprocessor messages may be replaced with intraprocessor messages.
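The effect of node roles coinciding can be shown by listing the messages for a read miss to a block that some node holds exclusive, and counting which of them actually cross the network. This Python sketch assumes a simple flow in which the home node fetches the block from the owner and forwards it to the requester; `Message`, `read_miss_to_exclusive`, `classify`, and the message names are illustrative labels, not a reproduction of the figure's catalog.

```python
from typing import NamedTuple


class Message(NamedTuple):
    kind: str  # illustrative message name
    src: int   # sending node
    dst: int   # receiving node


def read_miss_to_exclusive(local: int, home: int, remote: int) -> list[Message]:
    """Messages for a read miss by `local` to a block held exclusive by `remote`."""
    return [
        Message("read miss", local, home),          # requester asks the directory
        Message("fetch", home, remote),             # directory asks the owner for the block
        Message("data write-back", remote, home),   # owner returns the dirty block
        Message("data value reply", home, local),   # directory forwards it to the requester
    ]


def classify(messages: list[Message]) -> tuple[int, int]:
    """Count (interprocessor, intraprocessor) messages; src == dst stays inside a node."""
    inter = sum(1 for m in messages if m.src != m.dst)
    return inter, len(messages) - inter
```

With three distinct nodes, all four messages are interprocessor; when the local node is also the home node, or the home node is also the owner, two of them become intraprocessor messages while the sequence itself is unchanged.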
