Chapter: Embedded and Real Time Systems : Process and Operating Systems

Multiprocessor

A multiprocessor is, in general, any computer system with two or more processors coupled together. Multiprocessors used for scientific or business applications tend to have regular architectures: several identical processors that can access a uniform memory space. We use the term processing element (PE) to mean any unit responsible for computation, whether it is programmable or not.

Embedded system designers must take a more general view of the nature of multiprocessors. As we will see, embedded computing systems are built on top of an astonishing array of different multiprocessor architectures.

The first reason for using embedded multiprocessors is that they offer significantly better cost/performance (that is, performance and functionality per dollar spent on the system) than a uniprocessor system bought for the same amount of money. The basic reason is that the purchase price of a processing element is a nonlinear function of its performance [Wol08].

The cost of a microprocessor increases greatly as its clock speed increases. We would expect this trend as a normal consequence of VLSI fabrication and market economics. Because of ordinary variations in VLSI processes, the clock speeds of manufactured chips are normally distributed; because the fastest chips are rare, they naturally command a high price in the marketplace.
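To see why the fastest parts are scarce, the sketch below models chip clock speeds as a normal distribution and reports what fraction of a production run would qualify for each speed bin. The mean (2.0 GHz) and standard deviation (0.1 GHz) are hypothetical values chosen for illustration, not data from any real process; only `statistics.NormalDist` from the Python standard library is used.

```python
from statistics import NormalDist

# Hypothetical speed-binning model: clock speeds of manufactured chips are
# assumed to be normally distributed. These parameters are illustrative only.
MEAN_GHZ = 2.0
SIGMA_GHZ = 0.1

speeds = NormalDist(mu=MEAN_GHZ, sigma=SIGMA_GHZ)

def fraction_at_least(threshold_ghz: float) -> float:
    """Fraction of chips expected to run at or above threshold_ghz."""
    return 1.0 - speeds.cdf(threshold_ghz)

if __name__ == "__main__":
    # Each bin is one standard deviation faster than the previous one.
    for bin_ghz in (2.0, 2.1, 2.2, 2.3):
        print(f">= {bin_ghz:.1f} GHz: {fraction_at_least(bin_ghz):6.2%} of chips")
```

Under this model, half the chips reach the mean speed, but only about 16%, 2.3%, and 0.13% reach one, two, and three standard deviations above it, so each faster bin draws on a sharply shrinking supply.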

Because the fastest processors are very costly, splitting the application so that it can be performed on several smaller processors is usually much cheaper.

Even with the added costs of assembling those components, the total system comes out less expensive. Of course, splitting the application across multiple processors does entail higher engineering costs and lead times, which must be factored into the project.
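The trade-off can be sketched with a toy cost model. The code below assumes, purely for illustration, that a PE's price grows with the square of its performance and that every PE adds a fixed assembly cost; the constants, the exponent, and the perfect even split of the application are all hypothetical, and engineering effort is not modeled.

```python
# Hypothetical price model: the purchase price of a processing element grows
# superlinearly with its performance. All constants are illustrative.
PRICE_SCALE = 10.0        # dollars per (performance unit ** PRICE_EXPONENT)
PRICE_EXPONENT = 2.0      # > 1 makes price a nonlinear function of performance
INTEGRATION_PER_PE = 5.0  # assumed board/assembly cost added per PE

def pe_price(perf: float) -> float:
    """Price of one processing element delivering `perf` performance units."""
    return PRICE_SCALE * perf ** PRICE_EXPONENT

def system_cost(total_perf: float, n_pes: int) -> float:
    """Cost of reaching `total_perf` with `n_pes` identical PEs, assuming the
    application splits evenly so each PE supplies total_perf / n_pes.
    Engineering effort for the split is NOT modeled."""
    per_pe_perf = total_perf / n_pes
    return n_pes * (pe_price(per_pe_perf) + INTEGRATION_PER_PE)

if __name__ == "__main__":
    for n in (1, 2, 4, 8, 16):
        print(f"{n:2d} PEs: ${system_cost(4.0, n):7.2f}")
```

With these made-up constants, delivering 4 performance units costs $165, $90, $60, $60, and $90 with 1, 2, 4, 8, and 16 PEs. Splitting pays off at first, but the per-PE assembly term eventually dominates, which is why the split must be weighed against its added costs.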

In addition to reducing costs, using multiple processors can also help with real-time performance. We can often meet deadlines and be responsive to interaction much more easily when we put time-critical processes on separate processors. Given that scheduling multiple processes on a single CPU incurs overhead, putting several time-critical processes on one CPU can be costly.

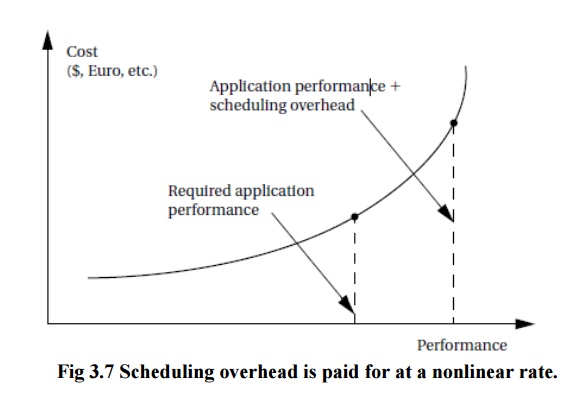

Because we pay for that overhead at the nonlinear rate for the processor, as illustrated in Figure 3.7, the savings from segregating time-critical processes can be large: it may take an extremely large and powerful CPU to provide the same responsiveness that can be had from a distributed system.
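The argument can be made concrete with a small, hypothetical calculation. The sketch assumes an earliest-deadline-first (EDF) scheduler, whose standard uniprocessor schedulability test for periodic tasks with deadlines equal to periods is that total utilization must not exceed 1. It charges every job a fixed number of context-switch cycles when tasks share a CPU, assumes a task alone on its own CPU incurs no switching overhead, and reuses a quadratic price curve. The task set, overhead, and price model are invented for illustration.

```python
from dataclasses import dataclass

@dataclass
class Task:
    name: str
    cycles: float    # worst-case execution cycles per job
    period_s: float  # period in seconds; deadline assumed equal to period

def min_freq_hz(tasks, switch_cycles=0.0):
    """Lowest clock rate (Hz) at which `tasks` pass the EDF utilization test
    (sum of C_i / T_i <= 1) on one CPU, charging every job `switch_cycles`
    of scheduling overhead."""
    return sum((t.cycles + switch_cycles) / t.period_s for t in tasks)

def relative_price(freq_hz, ref_hz=100e6, exponent=2.0):
    """Hypothetical nonlinear price model: price grows as (f / ref)^exponent."""
    return (freq_hz / ref_hz) ** exponent

TASKS = [
    Task("control_loop", cycles=200e3, period_s=1e-3),   # needs 200 MHz alone
    Task("user_interface", cycles=5e6, period_s=50e-3),  # needs 100 MHz alone
    Task("logging", cycles=1e6, period_s=10e-3),         # needs 100 MHz alone
]
SWITCH_CYCLES = 20e3  # assumed per-job overhead when tasks share a CPU

def compare():
    shared_f = min_freq_hz(TASKS, SWITCH_CYCLES)
    # Each task on a dedicated CPU: assumed to pay no switching overhead.
    dedicated_fs = [min_freq_hz([t]) for t in TASKS]
    return {
        "shared_mhz": shared_f / 1e6,
        "shared_price": relative_price(shared_f),
        "dedicated_mhz": [f / 1e6 for f in dedicated_fs],
        "dedicated_price": sum(relative_price(f) for f in dedicated_fs),
    }

if __name__ == "__main__":
    print(compare())
```

Here the shared CPU must run at about 422 MHz (400 MHz of work plus about 22 MHz of switching overhead), and under the assumed price curve it costs nearly three times as much as three dedicated CPUs at 200, 100, and 100 MHz. The overhead is paid at the steep end of the curve.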

Many of the technology trends that encourage us to use multiprocessors for performance also lead us to multiprocessing for low-power embedded computing.

Several processors running at slower clock rates consume less power than a single large processor: performance scales roughly linearly with power supply voltage, but dynamic power scales with $V^2$.
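The $V^2$ dependence comes from the standard CMOS dynamic-power expression $P = C V^2 f$. The sketch below uses it to compare one core against the same work spread over several slower cores. It assumes, in an idealized way, that each of $n$ cores runs at $f/n$ (so total throughput is unchanged if the work parallelizes perfectly) and that supply voltage can be lowered in proportion to clock rate. The capacitance, voltage, and frequency constants are illustrative.

```python
def dynamic_power(c_eff: float, volts: float, freq_hz: float) -> float:
    """CMOS dynamic (switching) power: P = C * V^2 * f, in watts."""
    return c_eff * volts ** 2 * freq_hz

def split_power(n_cores: int, c_eff: float = 1e-9,
                v_nominal: float = 1.2, f_nominal: float = 1e9) -> float:
    """Total dynamic power when the work of one core running at
    (v_nominal, f_nominal) is spread evenly over n_cores.

    Idealized assumptions: each core runs at f_nominal / n_cores, so total
    throughput is unchanged, and its supply voltage can be lowered in the same
    proportion. Leakage and minimum-voltage limits are ignored."""
    volts = v_nominal / n_cores
    freq = f_nominal / n_cores
    return n_cores * dynamic_power(c_eff, volts, freq)

if __name__ == "__main__":
    for n in (1, 2, 4):
        print(f"{n} core(s): {split_power(n):.3f} W")
```

Under these assumptions total power falls as $1/n^2$: 1.44 W, 0.36 W, and 0.09 W for 1, 2, and 4 cores. Real designs gain less, because supply voltage cannot drop below a threshold-dependent floor and leakage power does not scale the same way.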

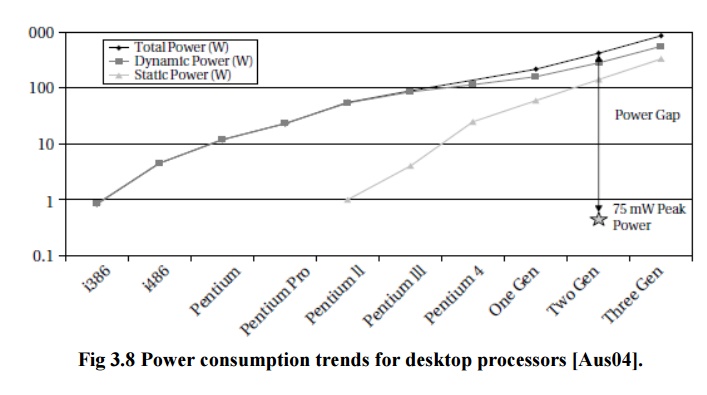

Austin et al. [Aus04] showed that general-purpose computing platforms are not keeping up with the strict energy budgets of battery-powered embedded computing. Figure 3.8 compares the power requirements of desktop processors with the power available from batteries. Batteries can provide only about 75 mW of power.

Desktop processors require close to 1000 times that amount of power to run. That huge gap cannot be closed by tweaking processor architectures or software. Multiprocessors provide a way to break through this power barrier and build substantially more efficient embedded computing platforms.
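For scale, the two round numbers quoted above (about 75 mW from a battery and a factor of close to 1000) imply a desktop processor power draw of roughly:

$$
P_{\text{desktop}} \approx 1000 \times 75\ \text{mW} = 75\ \text{W}
$$

This is an estimate derived from the text's figures, not a measured value.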
