Chapter: User Interface Design : Interface Testing

Kinds of Tests

A test is a tool used to measure something. The “something” may be:

Conformance with a requirement.

Conformance with guidelines for good design.

Identification of design problems.

Ease of system learning.

Retention of learning over time.

Speed of task completion.

Speed of need fulfillment.

Error rates.

Subjective user satisfaction.

Guidelines Review

Description:

A review of the interface in terms of an organization’s standards and design guidelines.

Advantages:

Can be performed by developers.

Low cost.

Can identify general and recurring problems.

Particularly useful for identifying screen design and layout problems.

Disadvantages:

May miss severe conceptual, navigation, and operational problems.

Heuristic Evaluation

Description:

A detailed evaluation of a system by interface design specialists to identify problems.

Advantages:

Easy to do.

Relatively low cost.

Does not waste users’ time.

Can identify many problems.

Disadvantages:

Evaluators must possess interface design expertise.

Evaluators may not possess an adequate understanding of the tasks and user communities.

Difficult to identify system-wide structural problems.

Difficult to uncover missing exits and interface elements.

Difficult to identify the most important problems among all problems uncovered.

Does not provide any systematic way to generate solutions to the problems uncovered.

Guidelines:

Use 3 to 5 expert evaluators.

Choose knowledgeable people:

Familiar with the project situation.

Possessing a long-term relationship with the organization.

Heuristic Evaluation Process

Preparing the session:

Select evaluators.

Prepare or assemble:

A project overview.

A checklist of heuristics.

Provide briefing to evaluators to:

Review the purpose of the evaluation session.

Preview the evaluation process.

Present the project overview and heuristics.

Answer any evaluator questions.

Provide any special evaluator training that may be necessary.

Conducting the session:

Have each evaluator review the system alone.

The evaluator should:

Establish his or her own process or method of reviewing the system.

Provide usage scenarios, if necessary.

Compare his or her findings with the list of usability principles.

Identify any other relevant problems or issues.

Make at least two passes through the system.

Detected problems should be related to the specific heuristics they violate.

Comments are recorded either:

By the evaluator.

By an observer.

The observer may answer questions and provide hints.

Restrict the length of the session to no more than 2 hours.

After the session:

Hold a debriefing session including observers and design team members where:

Each evaluator presents the problems detected and the heuristic each one violated.

A composite problem listing is assembled.

Design suggestions for improving the problematic aspects of the system are discussed.

After the debriefing session:

Generate a composite list of violations as a ratings form.

Request evaluators to assign severity ratings to each violation.

Analyze results and establish a program to correct violations and deficiencies.
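The rating and analysis steps above can be sketched in a few lines. This is only an illustration: the violation names, the 0 (not a problem) to 4 (must fix) severity scale, and the function name `rank_violations` are all assumptions, not part of the process as described.

```python
from statistics import mean

# Hypothetical ratings form: violation -> one severity rating per evaluator.
# The 0 (not a problem) to 4 (must fix) scale is an assumption.
ratings = {
    "No exit from search dialog": [4, 3, 4],
    "Inconsistent button labels": [2, 2, 1],
    "Error message uses jargon": [3, 2, 3],
}


def rank_violations(ratings_by_violation):
    """Return (violation, mean severity) pairs, most severe first."""
    averaged = [
        (violation, mean(scores))
        for violation, scores in ratings_by_violation.items()
        if scores  # skip violations nobody rated
    ]
    return sorted(averaged, key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    for violation, severity in rank_violations(ratings):
        print(f"{severity:.2f}  {violation}")
```

Averaging is one simple way to combine ratings; a team might equally use the median or discuss outliers before setting correction priorities.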

Heuristic Evaluation Effectiveness

One of the earliest papers addressing the effectiveness of heuristic evaluations was by Nielsen (1992). He reported that the probability of finding a major usability problem averaged 42 percent for single evaluators in six case studies. The corresponding probability for uncovering a minor problem was only 32 percent.
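As a rough illustration of why several evaluators are normally used, the single-evaluator figures above can be combined under a simplifying assumption that is not made in the source: evaluators find problems independently and with the same probability. The function name `detection_probability` is hypothetical.

```python
def detection_probability(p_single: float, evaluators: int) -> float:
    """Chance that at least one of `evaluators` finds a given problem.

    Assumes every evaluator has the same detection probability and that
    evaluators work independently (a simplification for illustration).
    """
    if not 0.0 <= p_single <= 1.0:
        raise ValueError("p_single must be between 0 and 1")
    if evaluators < 0:
        raise ValueError("evaluators must be non-negative")
    return 1.0 - (1.0 - p_single) ** evaluators


if __name__ == "__main__":
    for n in (1, 3, 5):
        major = detection_probability(0.42, n)  # major problems
        minor = detection_probability(0.32, n)  # minor problems
        print(f"{n} evaluator(s): major {major:.0%}, minor {minor:.0%}")
```

Under these assumptions, three evaluators would catch a given major problem about 80 percent of the time and five about 93 percent. Real evaluators tend to overlap in what they notice, so actual gains are likely smaller.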

Heuristic evaluations are useful in identifying many usability problems and should be part of the testing arsenal. Performing this kind of evaluation before beginning actual testing with users will eliminate a number of design problems, and is but one step along the path toward a very usable system.

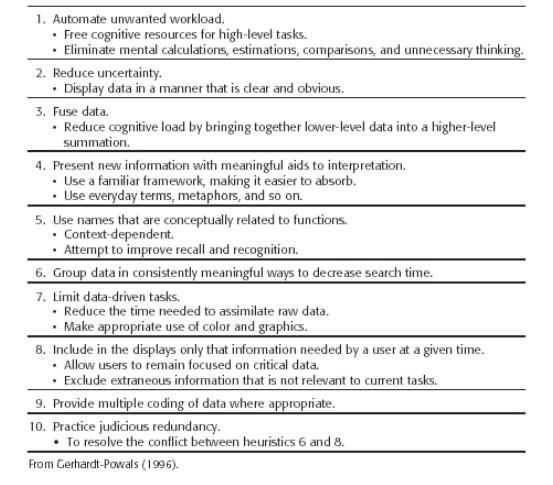

Research-based set of heuristics

Cognitive Walkthroughs

Description:

Reviews of the interface in the context of tasks users perform.

Advantages:

Allow a clear evaluation of the task flow early in the design process.

Do not require a functioning prototype.

Low cost.

Can be used to evaluate alternate solutions.

Can be performed by developers.

More structured than a heuristic evaluation.

Useful for assessing “exploratory learning.”

Disadvantages:

Tedious to perform.

May miss inconsistencies and general and recurring problems.

Guidelines:

Needed to conduct the walkthrough are:

A general description of proposed system users and what relevant knowledge they possess.

A specific description of one or more core or representative tasks to be performed.

A list of the correct actions required to complete each of the tasks.

Review:

Several core or representative tasks across a range of functions.

Proposed tasks of particular concern.

Developers must be assigned roles of:

Scribe to record results of the action.

Facilitator to keep the evaluation moving.

Start with simple tasks.

Don’t get bogged down demanding solutions.

Limit session to 60 to 90 minutes.

Think-Aloud Evaluations

Description:

Users perform specific tasks while thinking out loud.

Comments are recorded and analyzed.

Advantages:

Utilizes actual representative tasks.

Provides insights into the user’s reasoning.

Disadvantages:

May be difficult to get users to think out loud.

Guidelines:

Develop:

Several core or representative tasks.

Tasks of particular concern.

Limit session to 60 to 90 minutes.

Usability Test

Description:

An interface evaluation under real-world or controlled conditions.

Measures of performance are derived for specific tasks.

Problems are identified.

Advantages:

Utilizes an actual work environment.

Identifies serious or recurring problems.

Disadvantages:

High cost for establishing facility.

Requires a test conductor with user interface expertise.

Emphasizes first-time system usage.

Poorly suited for detecting inconsistency problems.
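Deriving measures of performance for specific tasks might look like the following sketch. The record layout (`TaskAttempt`), the three measures chosen, and the sample data are assumptions for illustration; a real test would use whatever measures its test plan defines.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class TaskAttempt:
    """One participant's attempt at one task (hypothetical record layout)."""
    task: str
    completed: bool
    seconds: float
    errors: int


def summarize(attempts):
    """Group attempts by task and derive simple performance measures."""
    by_task = {}
    for attempt in attempts:
        by_task.setdefault(attempt.task, []).append(attempt)

    summary = {}
    for task, rows in by_task.items():
        finished = [r for r in rows if r.completed]
        summary[task] = {
            "completion_rate": len(finished) / len(rows),
            # Time is averaged over successful attempts only.
            "mean_seconds": mean(r.seconds for r in finished) if finished else None,
            "errors_per_attempt": sum(r.errors for r in rows) / len(rows),
        }
    return summary


if __name__ == "__main__":
    # Made-up observations, for illustration only.
    sample = [
        TaskAttempt("Create invoice", True, 95.0, 1),
        TaskAttempt("Create invoice", True, 120.0, 0),
        TaskAttempt("Create invoice", False, 300.0, 4),
        TaskAttempt("Find customer", True, 30.0, 0),
    ]
    for task, measures in summarize(sample).items():
        print(task, measures)
```

These three measures correspond to the speed of task completion and error rates listed under Kinds of Tests; subjective satisfaction would need a separate questionnaire.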

Classic Experiments

Description:

An objective comparison of two or more prototypes identical in all aspects except for one design issue.

Advantages:

Objective measures of performance are obtained.

Subjective measures of user satisfaction may be obtained.

Disadvantages:

Requires a rigorously controlled experiment to conduct the evaluation.

The experiment conductor must have expertise in setting up, running, and analyzing the data collected.

Requires creation of multiple prototypes.

Guidelines:

State a clear and testable hypothesis.

Specify a small number of independent variables to be manipulated.

Carefully choose the measurements.

Judiciously select study participants and carefully or randomly assign them to groups.

Control for biasing factors.

Collect the data in a controlled environment.

Apply statistical methods to data analysis.

Resolve the problem that led to conducting the experiment.
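The guidelines above do not name a particular statistical method. As one possible illustration, the sketch below applies an exact two-sided permutation test to task times from two prototypes; the choice of test, the function name `permutation_test`, and the sample data are assumptions.

```python
from itertools import combinations
from statistics import mean


def permutation_test(group_a, group_b):
    """Exact two-sided permutation test on the difference in means.

    Returns the share of all possible regroupings of the pooled data whose
    absolute mean difference is at least as large as the observed one.
    Only practical for small samples, because every split is enumerated.
    """
    pooled = list(group_a) + list(group_b)
    observed = abs(mean(group_a) - mean(group_b))
    size_a = len(group_a)
    indices = range(len(pooled))

    extreme = 0
    total = 0
    for chosen in combinations(indices, size_a):
        chosen_set = set(chosen)
        a = [pooled[i] for i in chosen]
        b = [pooled[i] for i in indices if i not in chosen_set]
        total += 1
        # Small tolerance guards against floating-point rounding.
        if abs(mean(a) - mean(b)) >= observed - 1e-12:
            extreme += 1
    return extreme / total


if __name__ == "__main__":
    # Made-up task completion times in seconds for two prototypes.
    prototype_a = [41, 38, 45, 40, 43]
    prototype_b = [52, 49, 55, 50, 58]
    p_value = permutation_test(prototype_a, prototype_b)
    print(f"p = {p_value:.4f}")
```

A small p-value suggests the difference between prototypes is unlikely to be due to how participants happened to be assigned to groups, which is why random assignment matters for this kind of analysis.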

Focus Groups

Description:

A discussion with users about interface design prototypes or tasks.

Advantages:

Useful for:

Obtaining initial user thoughts.

Trying out ideas.

Easy to set up and run.

Low cost.

Disadvantages:

Requires an experienced moderator.

Not useful for establishing:

How people really work.

What kinds of usability problems people have.

Guidelines:

Restrict group size to 8 to 12.

Limit to 90 to 120 minutes in length.

Record session for later detailed analysis.

Choosing a Testing Method

Beer, Anodenko, and Sears (1997) suggest a good pairing is cognitive walkthroughs followed by think-aloud evaluations.

Using cognitive walkthroughs early in the development process permits the identification and correction of the most serious problems. Later, when a functioning prototype is available, the remaining problems can be identified using a think-aloud evaluation.

A substantial leap forward in the testing process would be the creation of a software tool simulating the behavior of people. This would allow usability tests to be performed without requiring real users to perform the necessary tasks.

In conclusion, each testing method has strengths and weaknesses. A well-rounded testing program will use a combination of some, or all, of these methods to help ensure the usability of the product.

It is very important that testing start as early as possible in the design process and continue through all developmental stages.

Developing and Conducting the Test

A usability test requires developing a test plan, selecting test participants, conducting the test, and analyzing the test results.

The Test Plan

Define the scope of the test.

A test’s scope will be influenced by a variety of factors.

Determinants include the following issues:

The design stage (early, middle, or late): the stage of design influences the kinds of prototypes that may exist for the test.

The time available for the test: this may range from just a few days to a year or more.

Finances allocated for testing: money allocated may range from one percent of a project’s cost to more than 10 percent.

The project’s novelty (well defined or exploratory): this will influence the kinds of tests feasible to conduct.

Expected user numbers (few or many) and interface criticality (life-critical medical system or informational exhibit): much more testing depth and length will be needed for systems with greater human impact.

The development team’s experience and testing knowledge: these will also affect the kinds of tests that can be conducted.

Define the purpose of the test.

Performance goals.

What the test is intended to accomplish.

Define the test methodology.

Type of test to be performed.

Test limitations.

Developer participants.

Identify and schedule the test facility or location.

The location should be away from distractions and disturbances. If the test is being held in a usability laboratory, the test facility should resemble the location where the system will be used.

It may be an actual office designated for the purpose of testing, or it may be a laboratory specially designed and fitted for conducting tests.

Develop scenarios to satisfy the test’s purpose.
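The plan elements above can be kept as a simple checklist so that gaps are visible before the test is scheduled. The sketch below is one hypothetical way to do that; the class name `UsabilityTestPlan`, its field names, and the example values are assumptions, not a prescribed format.

```python
from dataclasses import dataclass, field, fields


@dataclass
class UsabilityTestPlan:
    """Hypothetical container for the plan elements listed above."""
    scope: str = ""
    purpose: str = ""
    methodology: str = ""
    location: str = ""
    scenarios: list = field(default_factory=list)

    def missing_elements(self):
        """Names of plan elements that have not been filled in yet."""
        return [f.name for f in fields(self) if not getattr(self, f.name)]


if __name__ == "__main__":
    plan = UsabilityTestPlan(
        scope="Checkout flow, mid-stage prototype",
        purpose="Measure speed of task completion for first-time users",
    )
    print("Still to define:", plan.missing_elements())
```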

Test Participants

Assemble the proper people to participate in the test.

Test Conduct and Data Collection

To collect usable data, the test should begin only after the proper preparation. Then, the data must be properly and accurately recorded.

Finally, the test must be concluded and followed up properly.
