Chapter: Cryptography and Network Security Principles and Practice : System Security : Intruders

Intrusion Detection

Inevitably, the best intrusion prevention system will fail. A system’s second line of defense is intrusion detection, and this has been the focus of much research in recent years. This interest is motivated by a number of considerations, including the following:

1. If an intrusion is detected quickly enough, the intruder can be identified and ejected from the system before any damage is done or any data are compromised. Even if the detection is not sufficiently timely to preempt the intruder, the sooner the intrusion is detected, the less damage is done and the more quickly recovery can be achieved.

2. An effective intrusion detection system can serve as a deterrent, thereby helping to prevent intrusions.

3. Intrusion detection enables the collection of information about intrusion techniques that can be used to strengthen the intrusion prevention facility.

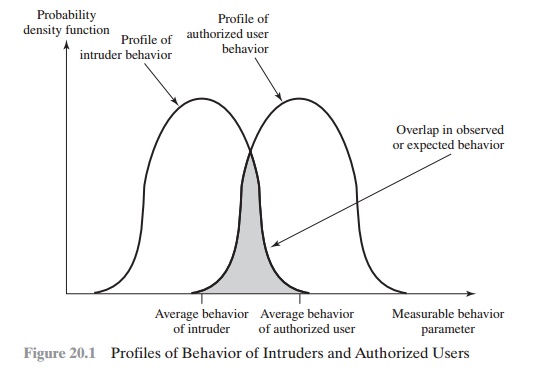

Intrusion detection is based on the assumption that the behavior of the intruder differs from that of a legitimate user in ways that can be quantified. Of course, we cannot expect that there will be a crisp, exact distinction between an attack by an intruder and the normal use of resources by an authorized user. Rather, we must expect that there will be some overlap.

Figure 20.1 suggests, in very abstract terms, the nature of the task confronting the designer of an intrusion detection system. Although the typical behavior of an intruder differs from the typical behavior of an authorized user, there is an overlap in these behaviors. Thus, a loose interpretation of intruder behavior, which will catch more intruders, will also lead to a number of “false positives,” or authorized users identified as intruders. On the other hand, an attempt to limit false positives by a tight interpretation of intruder behavior will lead to an increase in false negatives, or intruders not identified as intruders. Thus, there is an element of compromise and art in the practice of intrusion detection.
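The trade-off can be made concrete with a small numerical sketch. It assumes, purely for illustration, that each session is reduced to a single hypothetical "suspicion score" that is normally distributed for authorized users (mean 0) and for intruders (mean 3), so that the two curves overlap as in Figure 20.1. Sweeping the alarm threshold shows false positives falling as false negatives rise.

```python
import math
from typing import Tuple


def normal_cdf(x: float, mean: float, std: float) -> float:
    """P(X <= x) for a normal distribution, via the error function."""
    return 0.5 * (1.0 + math.erf((x - mean) / (std * math.sqrt(2.0))))


# Hypothetical model of the overlap: one suspicion score per session.
USER_MEAN, USER_STD = 0.0, 1.0          # authorized users
INTRUDER_MEAN, INTRUDER_STD = 3.0, 1.0  # intruders


def error_rates(threshold: float) -> Tuple[float, float]:
    """Flag a session as an intrusion when its score exceeds `threshold`.

    Returns (false positive rate, false negative rate).
    """
    false_pos = 1.0 - normal_cdf(threshold, USER_MEAN, USER_STD)    # users flagged
    false_neg = normal_cdf(threshold, INTRUDER_MEAN, INTRUDER_STD)  # intruders missed
    return false_pos, false_neg


for t in (0.5, 1.5, 2.5):  # loose -> tight interpretation of intruder behavior
    fp, fn = error_rates(t)
    print(f"threshold {t}: false positives {fp:.3f}, false negatives {fn:.3f}")
```

Lowering the threshold catches more intruders but flags more authorized users; raising it does the reverse. As long as the distributions overlap, no threshold drives both error rates to zero.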

In Anderson’s study [ANDE80], it was postulated that one could, with reasonable confidence, distinguish between a masquerader and a legitimate user. Patterns of legitimate user behavior can be established by observing past history, and significant deviation from such patterns can be detected. Anderson suggests that the task of detecting a misfeasor (a legitimate user acting in an unauthorized fashion) is more difficult, in that the distinction between abnormal and normal behavior may be small. Anderson concluded that such violations would be undetectable solely through the search for anomalous behavior. However, misfeasor behavior might nevertheless be detectable by intelligent definition of the class of conditions that suggest unauthorized use. Finally, the detection of the clandestine user was felt to be beyond the scope of purely automated techniques. These observations, which were made in 1980, remain true today.

[PORR92] identifies the following approaches to intrusion detection:

1. Statistical anomaly detection: Involves the collection of data relating to the behavior of legitimate users over a period of time. Then statistical tests are applied to observed behavior to determine with a high level of confidence whether that behavior is not legitimate user behavior.

   a. Threshold detection: This approach involves defining thresholds, independent of user, for the frequency of occurrence of various events.

   b. Profile based: A profile of the activity of each user is developed and used to detect changes in the behavior of individual accounts.

2. Rule-based detection: Involves an attempt to define a set of rules that can be used to decide that a given behavior is that of an intruder.

   a. Anomaly detection: Rules are developed to detect deviation from previous usage patterns.

   b. Penetration identification: An expert system approach that searches for suspicious behavior.

In a nutshell, statistical approaches attempt to define normal, or expected, behavior, whereas rule-based approaches attempt to define proper behavior.

In terms of the types of attackers listed earlier, statistical anomaly detection is effective against masqueraders, who are unlikely to mimic the behavior patterns of the accounts they appropriate. On the other hand, such techniques may be unable to deal with misfeasors. For such attacks, rule-based approaches may be able to recognize events and sequences that, in context, reveal penetration. In practice, a system may combine both approaches to be effective against a broad range of attacks.

Audit Records

A fundamental tool for intrusion detection is the audit record. Some record of ongoing activity by users must be maintained as input to an intrusion detection system. Basically, two plans are used:

• Native audit records: Virtually all multiuser operating systems include accounting software that collects information on user activity. The advantage of using this information is that no additional collection software is needed. The disadvantage is that the native audit records may not contain the needed information or may not contain it in a convenient form.

• Detection-specific audit records: A collection facility can be implemented that generates audit records containing only that information required by the intrusion detection system. One advantage of such an approach is that it could be made vendor independent and ported to a variety of systems. The disadvantage is the extra overhead involved in having, in effect, two accounting packages running on a machine.

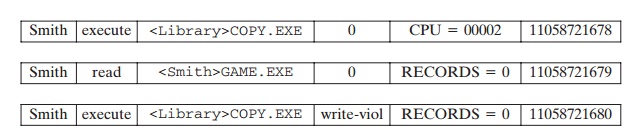

A good example of detection-specific audit records is one developed by Dorothy Denning [DENN87]. Each audit record contains the following fields:

• Subject: Initiators of actions. A subject is typically a terminal user but might also be a process acting on behalf of users or groups of users. All activity arises through commands issued by subjects. Subjects may be grouped into different access classes, and these classes may overlap.

• Action: Operation performed by the subject on or with an object; for example, login, read, perform I/O, execute.

• Object: Receptors of actions. Examples include files, programs, messages, records, terminals, printers, and user- or program-created structures. When a subject is the recipient of an action, such as electronic mail, then that subject is considered an object. Objects may be grouped by type. Object granularity may vary by object type and by environment. For example, database actions may be audited for the database as a whole or at the record level.

• Exception-Condition: Denotes which, if any, exception condition is raised on return.

• Resource-Usage: A list of quantitative elements in which each element gives the amount used of some resource (e.g., number of lines printed or displayed, number of records read or written, processor time, I/O units used, session elapsed time).

• Time-Stamp: Unique time-and-date stamp identifying when the action took place.
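As a minimal sketch, one such record can be modeled in Python as a data class with one attribute per field. The attribute names, the use of None for "no exception," and the dictionary of named resource amounts are representation choices assumed here, not part of [DENN87]; `obj` stands in for "Object" to avoid shadowing Python's built-in `object`.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Dict, Optional


@dataclass
class AuditRecord:
    """One detection-specific audit record with the six fields listed above."""
    subject: str                        # initiator of the action (user or process)
    action: str                         # e.g. "login", "read", "execute"
    obj: str                            # receptor of the action (file, terminal, ...)
    exception_condition: Optional[str]  # exception raised on return, None if none
    resource_usage: Dict[str, float]    # amount used of each resource
    time_stamp: datetime                # when the action took place

    def raised_exception(self) -> bool:
        return self.exception_condition is not None


# Hypothetical example: user "jones" reads 12 records from a file in her home directory.
example = AuditRecord(
    subject="jones",
    action="read",
    obj="/home/jones/notes.txt",
    exception_condition=None,
    resource_usage={"records_read": 12, "cpu_seconds": 0.02},
    time_stamp=datetime(2024, 1, 15, 9, 30, 0),
)
```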

Most user operations are made up of a number of elementary actions. For example, a file copy involves the execution of the user command, which includes doing access validation and setting up the copy, plus the read from one file, plus the write to another file. Consider the command

COPY GAME.EXE TO <Library>GAME.EXE

issued by Smith to copy an executable file GAME from the current directory to the <Library> directory. The following audit records may be generated:

In this case, the copy is aborted because Smith does not have write permission to <Library>.

The decomposition of a user operation into elementary actions has three advantages:

1. Because objects are the protectable entities in a system, the use of elementary actions enables an audit of all behavior affecting an object. Thus, the system can detect attempted subversions of access controls (by noting an abnormality in the number of exception conditions returned) and can detect successful subversions by noting an abnormality in the set of objects accessible to the subject.

2. Single-object, single-action audit records simplify the model and the implementation.

3. Because of the simple, uniform structure of the detection-specific audit records, it may be relatively easy to obtain this information, or at least part of it, by a straightforward mapping from existing native audit records to the detection-specific audit records.
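The mapping mentioned in the third point can be sketched as follows. Everything about the native side is hypothetical: the pipe-separated line format, the system-call names, and the table that maps them onto actions are invented for illustration. Native records for calls the detector does not track are simply dropped.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Dict, Optional


@dataclass
class AuditRecord:
    subject: str
    action: str
    obj: str
    exception_condition: Optional[str]
    resource_usage: Dict[str, float]
    time_stamp: datetime


# Hypothetical native format: "ISO-time|user|syscall|path|errno|cpu_ms".
# The syscall names and this mapping table are illustrative assumptions.
SYSCALL_TO_ACTION = {"open_r": "read", "open_w": "write", "execve": "execute"}


def from_native(line: str) -> Optional[AuditRecord]:
    """Map one native audit line onto the six detection-specific fields.

    Returns None for system calls the detector does not track.
    """
    ts, user, syscall, path, errno, cpu_ms = line.strip().split("|")
    action = SYSCALL_TO_ACTION.get(syscall)
    if action is None:
        return None
    return AuditRecord(
        subject=user,
        action=action,
        obj=path,
        exception_condition=None if errno == "0" else errno,
        resource_usage={"cpu_ms": float(cpu_ms)},
        time_stamp=datetime.fromisoformat(ts),
    )


rec = from_native("2024-01-15T09:30:00|jones|open_w|/usr/lib/app.conf|EACCES|3")
```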

Statistical Anomaly Detection

As was mentioned, statistical anomaly detection techniques fall into two broad categories: threshold detection and profile-based systems. Threshold detection involves counting the number of occurrences of a specific event type over an interval of time. If the count surpasses what is considered a reasonable number that one might expect to occur, then intrusion is assumed.

Threshold analysis, by itself, is a crude and ineffective detector of even moderately sophisticated attacks. Both the threshold and the time interval must be determined. Because of the variability across users, such thresholds are likely to generate either a lot of false positives or a lot of false negatives. However, simple threshold detectors may be useful in conjunction with more sophisticated techniques.
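A minimal sketch of threshold detection, assuming fixed, back-to-back intervals (a sliding window would be an equally valid choice). The event type, interval length, and threshold are hypothetical parameters; note that the same limit applies to every user, which is exactly the crudeness described above.

```python
from collections import Counter
from typing import Iterable, List


def threshold_alerts(event_times: Iterable[int], interval_s: int, threshold: int) -> List[int]:
    """Count events of one type in consecutive fixed-length intervals.

    Returns the start time of every interval whose count exceeds `threshold`.
    """
    counts = Counter(t // interval_s for t in event_times)  # interval index -> count
    return sorted(i * interval_s for i, n in counts.items() if n > threshold)


# Hypothetical data: times (in seconds) of failed logins observed on one host.
failed_logins = [5, 70, 130, 131, 132, 133, 134, 135, 300]
print(threshold_alerts(failed_logins, interval_s=60, threshold=3))  # prints [120]
```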

Profile-based anomaly detection focuses on characterizing the past behavior of individual users or related groups of users and then detecting significant deviations. A profile may consist of a set of parameters, so that deviation on just a single parameter may not be sufficient in itself to signal an alert.

The foundation of this approach is an analysis of audit records. The audit records provide input to the intrusion detection function in two ways. First, the designer must decide on a number of quantitative metrics that can be used to measure user behavior. An analysis of audit records over a period of time can be used to determine the activity profile of the average user. Thus, the audit records serve to define typical behavior. Second, current audit records are the input used to detect intrusion. That is, the intrusion detection model analyzes incoming audit records to determine deviation from average behavior.
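The two roles of the audit records can be sketched as two functions: one summarizes historical sessions into a per-user profile, and the other checks a current session against it. The metric names, the 3-standard-deviation cutoff, and the rule that at least two metrics must deviate before an alert is raised are all illustrative assumptions; the last reflects the point that a deviation on a single parameter may not be enough on its own.

```python
import statistics
from typing import Dict, List, Tuple

Profile = Dict[str, Tuple[float, float]]  # metric name -> (mean, standard deviation)


def build_profile(history: List[Dict[str, float]]) -> Profile:
    """First use of audit data: summarize past sessions (at least two) into a profile."""
    profile = {}
    for metric in history[0]:
        values = [session[metric] for session in history]
        profile[metric] = (statistics.mean(values), statistics.stdev(values))
    return profile


def deviating_metrics(profile: Profile, session: Dict[str, float], z: float = 3.0) -> List[str]:
    """Metrics of the current session lying more than z standard deviations from the mean."""
    return [m for m, (mean, sd) in profile.items()
            if sd > 0 and abs(session[m] - mean) / sd > z]


def is_anomalous(profile: Profile, session: Dict[str, float], min_metrics: int = 2) -> bool:
    """Second use of audit data: raise an alert only if enough metrics deviate."""
    return len(deviating_metrics(profile, session)) >= min_metrics


# Hypothetical per-session metrics derived from one user's past audit records.
history = [
    {"files_accessed": 20, "programs_run": 5, "cpu_s": 10},
    {"files_accessed": 22, "programs_run": 6, "cpu_s": 12},
    {"files_accessed": 18, "programs_run": 4, "cpu_s": 11},
    {"files_accessed": 21, "programs_run": 5, "cpu_s": 9},
    {"files_accessed": 19, "programs_run": 5, "cpu_s": 13},
]
profile = build_profile(history)
single_spike = {"files_accessed": 30, "programs_run": 5, "cpu_s": 11}
broad_shift = {"files_accessed": 30, "programs_run": 9, "cpu_s": 11}
```

Here `single_spike` deviates on one metric only and is not reported, while `broad_shift` deviates on two and is.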

Examples of metrics that are useful for profile-based intrusion detection are the following:

• Counter: A nonnegative integer that may be incremented but not decremented until it is reset by management action. Typically, a count of certain event types is kept over a particular period of time. Examples include the number of logins by a single user during an hour, the number of times a given command is executed during a single user session, and the number of password failures during a minute.

• Gauge: A nonnegative integer that may be incremented or decremented. Typically, a gauge is used to measure the current value of some entity. Examples include the number of logical connections assigned to a user application and the number of outgoing messages queued for a user process.

• Interval timer: The length of time between two related events. An example is the length of time between successive logins to an account.

• Resource utilization: Quantity of resources consumed during a specified period. Examples include the number of pages printed during a user session and total time consumed by a program execution.
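The four metric types differ mainly in which updates they permit. A minimal sketch with hypothetical class names (`CounterMetric` avoids clashing with Python's `collections.Counter`), raising errors on updates that the definitions above do not allow:

```python
from typing import Optional


class CounterMetric:
    """Nonnegative integer; can only grow until reset by management action."""

    def __init__(self) -> None:
        self.value = 0

    def increment(self, n: int = 1) -> None:
        if n < 0:
            raise ValueError("a counter cannot be decremented")
        self.value += n

    def reset(self) -> None:
        self.value = 0


class Gauge:
    """Nonnegative integer tracking a current value; may go up or down."""

    def __init__(self) -> None:
        self.value = 0

    def increment(self, n: int = 1) -> None:
        self.value += n

    def decrement(self, n: int = 1) -> None:
        if n > self.value:
            raise ValueError("a gauge cannot go below zero")
        self.value -= n


class IntervalTimer:
    """Length of time between two related events, e.g. successive logins."""

    def __init__(self) -> None:
        self.last: Optional[float] = None

    def observe(self, t: float) -> Optional[float]:
        """Record an event at time t; return the time since the previous one."""
        gap = None if self.last is None else t - self.last
        self.last = t
        return gap


class ResourceUtilization:
    """Quantity of a resource consumed during a period (e.g. pages printed)."""

    def __init__(self) -> None:
        self.total = 0.0

    def consume(self, amount: float) -> None:
        self.total += amount


# Hypothetical usage.
logins_per_hour = CounterMetric()
for _ in range(3):
    logins_per_hour.increment()
queued_messages = Gauge()
queued_messages.increment(5)
queued_messages.decrement(2)
login_gap = IntervalTimer()
login_gap.observe(1000.0)
gap = login_gap.observe(4600.0)  # one hour after the previous login
```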

Given these general metrics, various tests can be performed to determine whether current activity fits within acceptable limits. [DENN87] lists the following approaches that may be taken:

• Mean and standard deviation

• Multivariate

• Markov process

• Time series

• Operational

The simplest statistical test is to measure the mean and standard deviation of a parameter over some historical period. This gives a reflection of the average behavior and its variability. The use of mean and standard deviation is applicable to a wide variety of counters, timers, and resource measures. But these measures, by themselves, are typically too crude for intrusion detection purposes.
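A minimal sketch of the mean-and-standard-deviation test: an observation is flagged when it falls more than d standard deviations from the historical mean. The data and d = 3 are hypothetical. By Chebyshev's inequality, at most 1/d² of the values of any distribution lie more than d standard deviations from its mean, so the test needs no distributional assumption; but it ignores order, timing, and correlation, which is part of why it is crude.

```python
import statistics
from typing import Sequence


def outside_band(history: Sequence[float], x: float, d: float = 3.0) -> bool:
    """True if x lies more than d sample standard deviations from the historical mean."""
    mean = statistics.mean(history)
    sd = statistics.stdev(history)  # needs at least two historical values
    return abs(x - mean) > d * sd


# Hypothetical history: pages printed per session by one user.
pages_printed = [10, 12, 9, 11, 13, 10, 12, 11]
print(outside_band(pages_printed, 14))  # False: within mean +/- 3 sd
print(outside_band(pages_printed, 40))  # True: far outside
```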

A multivariate model is based on correlations between two or more variables. Intruder behavior may be characterized with greater confidence by considering such correlations (for example, processor time and resource usage, or login frequency and session elapsed time).
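One simple way to exploit such a correlation, sketched below under stated assumptions, is to fit a least-squares line relating two metrics in historical sessions and flag a session whose pair of values lies far from that line, even when each value alone is unremarkable. The linear fit, the cutoff of 3 residual standard deviations, and the data are illustrative choices, not the specific multivariate model of [DENN87].

```python
import math
from typing import List, Tuple

Model = Tuple[float, float, float]  # (intercept, slope, residual standard deviation)


def fit_line(xs: List[float], ys: List[float]) -> Model:
    """Least-squares fit y = a + b*x over historical sessions (needs 3+ points)."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    b = sxy / sxx
    a = mean_y - b * mean_x
    residuals = [y - (a + b * x) for x, y in zip(xs, ys)]
    s = math.sqrt(sum(r * r for r in residuals) / (n - 2))
    return a, b, s


def joint_anomaly(model: Model, x: float, y: float, d: float = 3.0) -> bool:
    """True if (x, y) departs from the historical relationship by more than d*s."""
    a, b, s = model
    return abs(y - (a + b * x)) > d * s


# Hypothetical history: processor seconds vs. I/O units per session.
cpu_s = [1, 2, 3, 4, 5, 6]
io_units = [10.5, 19.5, 30.5, 39.5, 50.5, 59.5]
model = fit_line(cpu_s, io_units)
```

A session with 1 processor-second and 40 I/O units is flagged by `joint_anomaly(model, 1, 40)`: both values lie within their historical ranges, but together they break the usual relationship.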

A Markov process model is used to establish transition probabilities among various states. As an example, this model might be used to look at transitions between certain commands.
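A minimal sketch of that example: treat each command as a state, estimate P(next command | current command) by counting transitions in past sessions, and report transitions in a new session whose estimated probability falls below a cutoff. The sessions, command names, and 0.05 cutoff are hypothetical; transitions never seen in training get probability 0.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

Transitions = Dict[str, Dict[str, float]]


def train(sessions: List[List[str]]) -> Transitions:
    """Estimate P(next | current) from observed command-to-command transitions."""
    counts: Dict[str, Dict[str, int]] = defaultdict(lambda: defaultdict(int))
    for cmds in sessions:
        for cur, nxt in zip(cmds, cmds[1:]):
            counts[cur][nxt] += 1
    probs: Transitions = {}
    for cur, followers in counts.items():
        total = sum(followers.values())
        probs[cur] = {nxt: n / total for nxt, n in followers.items()}
    return probs


def rare_transitions(probs: Transitions, cmds: List[str],
                     min_prob: float = 0.05) -> List[Tuple[str, str]]:
    """Transitions in a new session that the model considers improbable."""
    return [(cur, nxt) for cur, nxt in zip(cmds, cmds[1:])
            if probs.get(cur, {}).get(nxt, 0.0) < min_prob]


history = [
    ["login", "ls", "cd", "ls", "edit", "make", "logout"],
    ["login", "ls", "edit", "make", "make", "logout"],
    ["login", "mail", "ls", "logout"],
]
model = train(history)
print(rare_transitions(model, ["login", "ls", "edit", "make", "logout"]))
# prints []
print(rare_transitions(model, ["login", "ls", "cat", "passwd", "logout"]))
# prints [('ls', 'cat'), ('cat', 'passwd'), ('passwd', 'logout')]
```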

A time series model focuses on time intervals, looking for sequences of events that happen too rapidly or too slowly. A variety of statistical tests can be applied to characterize abnormal timing.
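One such test, sketched here as an assumption rather than a prescribed method, keeps an exponentially weighted moving average (EWMA) of the gaps between successive events, so that order and recent history matter, and flags a gap that is much shorter or much longer than that running average. The smoothing factor and the 0.2x and 5x bounds are hypothetical tuning choices.

```python
from typing import List, Optional, Tuple


def timing_alerts(times: List[float], alpha: float = 0.3,
                  low: float = 0.2, high: float = 5.0) -> List[Tuple[float, str]]:
    """Flag events whose gap from the previous event is far below or far above
    the EWMA of earlier gaps."""
    alerts = []
    avg: Optional[float] = None
    for prev, cur in zip(times, times[1:]):
        gap = cur - prev
        if avg is not None:
            if gap < low * avg:
                alerts.append((cur, "too rapid"))
            elif gap > high * avg:
                alerts.append((cur, "too slow"))
        # Update the running average only after testing the new gap.
        avg = gap if avg is None else alpha * gap + (1 - alpha) * avg
    return alerts


# Hypothetical times (seconds) at which one user issued commands.
command_times = [0, 10, 20, 31, 40, 41, 42, 52, 200]
print(timing_alerts(command_times))
# prints [(41, 'too rapid'), (42, 'too rapid'), (200, 'too slow')]
```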

Finally, an operational model is based on a judgment of what is considered abnormal, rather than an automated analysis of past audit records. Typically, fixed limits are defined and intrusion is suspected for an observation that is outside the limits. This type of approach works best where intruder behavior can be deduced from certain types of activities. For example, a large number of login attempts over a short period suggests an attempted intrusion.

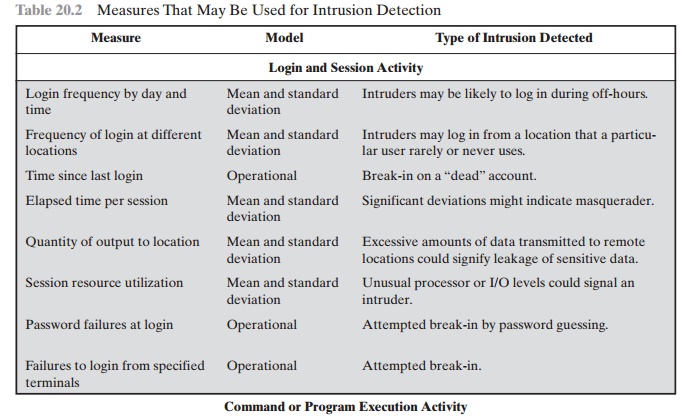

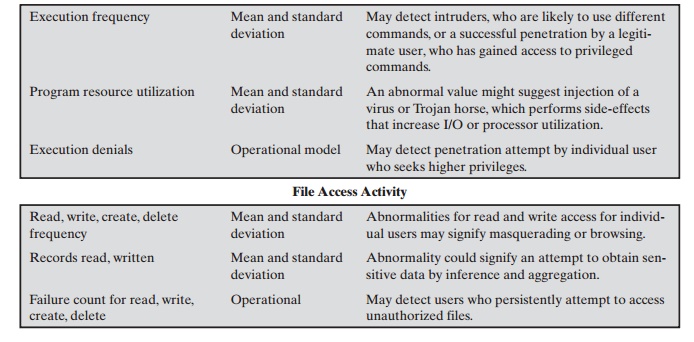

As an example of the use of these various metrics and models, Table 20.2 shows various measures considered or tested for the Stanford Research Institute (SRI) intrusion detection system (IDES) [DENN87, JAVI91, LUNT88].

The main advantage of the use of statistical profiles is that prior knowledge of security flaws is not required. The detector program learns what is “normal” behavior and then looks for deviations. The approach is not based on system-dependent characteristics and vulnerabilities. Thus, it should be readily portable among a variety of systems.

Rule-Based Intrusion Detection

Rule-based techniques detect intrusion by observing events in the system and applying a set of rules that lead to a decision regarding whether a given pattern of activity is or is not suspicious. In very general terms, we can characterize all approaches as focusing on either anomaly detection or penetration identification, although there is some overlap in these approaches.

Rule-based anomaly detection is similar in terms of its approach and strengths to statistical anomaly detection. With the rule-based approach, historical audit records are analyzed to identify usage patterns and to automatically generate rules that describe those patterns. Rules may represent past behavior patterns of users, programs, privileges, time slots, terminals, and so on. Current behavior is then observed, and each transaction is matched against the set of rules to determine if it conforms to any historically observed pattern of behavior.

As with statistical anomaly detection, rule-based anomaly detection does not require knowledge of security vulnerabilities within the system. Rather, the scheme is based on observing past behavior and, in effect, assuming that the future will be like the past. In order for this approach to be effective, a rather large database of rules will be needed. For example, a scheme described in [VACC89] contains anywhere from 10⁴ to 10⁶ rules.
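A toy sketch of the rule-generation idea: each historical transaction (user, program, hour) becomes a rule of the form "this user runs this program in this time slot," and a current transaction is accepted only if some rule matches it. The two coarse time slots and the data are hypothetical; a realistic rule base would also cover privileges, terminals, and other attributes, which is why it grows so large.

```python
from typing import Iterable, Set, Tuple

Rule = Tuple[str, str, str]  # (user, program, time slot)


def time_slot(hour: int) -> str:
    """Hypothetical coarse slots: working hours versus off-hours."""
    return "work" if 8 <= hour < 18 else "off-hours"


def generate_rules(history: Iterable[Tuple[str, str, int]]) -> Set[Rule]:
    """Derive one rule from every distinct historical (user, program, slot)."""
    return {(user, program, time_slot(hour)) for user, program, hour in history}


def conforms(rules: Set[Rule], user: str, program: str, hour: int) -> bool:
    """True if the transaction matches some historically observed pattern."""
    return (user, program, time_slot(hour)) in rules


past = [("alice", "editor", 9), ("alice", "compiler", 14),
        ("alice", "mail", 20), ("bob", "mail", 10)]
rules = generate_rules(past)
print(conforms(rules, "alice", "editor", 11))  # True: seen during working hours
print(conforms(rules, "alice", "editor", 23))  # False: never seen off-hours
```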

Rule-based penetration identification takes a very different approach to intrusion detection. The key feature of such systems is the use of rules for identifying known penetrations or penetrations that would exploit known weaknesses. Rules can also be defined that identify suspicious behavior, even when the behavior is within the bounds of established patterns of usage. Typically, the rules used in these systems are specific to the machine and operating system. The most fruitful approach to developing such rules is to analyze attack tools and scripts collected on the Internet. These rules can be supplemented with rules generated by knowledgeable security personnel. In this latter case, the normal procedure is to interview system administrators and security analysts to collect a suite of known penetration scenarios and key events that threaten the security of the target system.

Table 20.2 Measures That May Be Used for Intrusion Detection

A simple example of the type of rules that can be used is found in NIDX, an early system that used heuristic rules to assign degrees of suspicion to activities [BAUE88]. Example heuristics are the following:

1. Users should not read files in other users’ personal directories.

2. Users must not write other users’ files.

3. Users who log in after hours often access the same files they used earlier.

4. Users do not generally open disk devices directly but rely on higher-level operating system utilities.

5. Users should not be logged in more than once to the same system.

6. Users do not make copies of system programs.

The penetration identification scheme used in IDES is representative of the strategy followed. Audit records are examined as they are generated, and they are matched against the rule base. If a match is found, then the user’s suspicion rating is increased. If enough rules are matched, then the rating will pass a threshold that results in the reporting of an anomaly.
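The sketch below combines the two ideas: a few of the NIDX-style heuristics listed above, written as predicates over audit records, and an IDES-style per-user suspicion rating that is raised on every match and reported once it reaches a threshold. The record layout, path conventions (/home/, /dev/sd, /bin/), weights, and threshold are hypothetical; this is not the actual rule base of either system.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Callable, DefaultDict, List, Tuple


@dataclass
class Record:
    subject: str  # user who performed the action
    action: str   # "read", "write", "open", "copy", ...
    obj: str      # path of the object acted upon
    owner: str    # owner of the object


# (description, predicate, weight); the weights are illustrative only.
RULES: List[Tuple[str, Callable[[Record], bool], int]] = [
    ("read in another user's personal directory",
     lambda r: r.action == "read" and r.obj.startswith("/home/") and r.owner != r.subject, 1),
    ("write to another user's file",
     lambda r: r.action == "write" and r.owner != r.subject, 2),
    ("direct open of a disk device",
     lambda r: r.action == "open" and r.obj.startswith("/dev/sd"), 2),
    ("copy of a system program",
     lambda r: r.action == "copy" and r.obj.startswith("/bin/"), 1),
]
REPORT_THRESHOLD = 3


class SuspicionTracker:
    def __init__(self) -> None:
        self.rating: DefaultDict[str, int] = defaultdict(int)

    def process(self, rec: Record) -> bool:
        """Match one audit record against the rule base as it is generated.

        Returns True once the subject's rating reaches the reporting threshold.
        """
        for _description, matches, weight in RULES:
            if matches(rec):
                self.rating[rec.subject] += weight
        return self.rating[rec.subject] >= REPORT_THRESHOLD


tracker = SuspicionTracker()
stream = [
    Record("mallory", "read", "/home/alice/notes", "alice"),  # +1
    Record("mallory", "copy", "/bin/login", "root"),          # +1
    Record("mallory", "open", "/dev/sda", "root"),            # +2, rating reaches 4
]
reports = [tracker.process(r) for r in stream]
```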

The IDES approach is based on an examination

of audit records. A weak- ness of this plan is its lack of flexibility. For a

given penetration scenario, there may be a number of alternative audit record

sequences that could be produced, each varying from the others slightly or in

subtle ways. It may be difficult to pin down all these variations in explicit

rules. Another method is to develop a higher- level model independent of

specific audit records. An example of this is a state transition model known as

USTAT [ILGU93]. USTAT deals in general actions rather than the detailed specific

actions recorded by the UNIX auditing mecha- nism. USTAT is implemented on a

SunOS system that provides audit records on 239 events. Of these, only 28 are

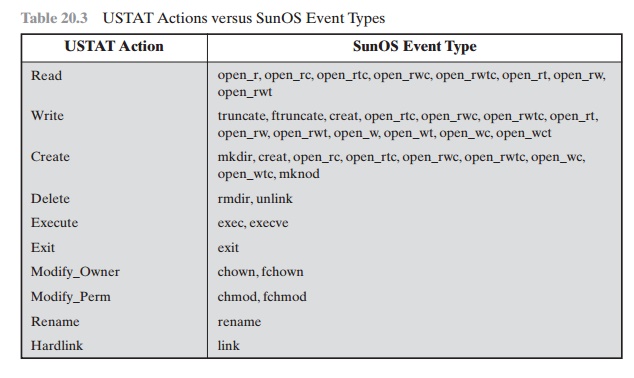

used by a preprocessor, which maps these onto 10 general actions (Table 20.3).

Using just these actions and the parameters that are invoked with each action,

a state transition diagram is developed that charac- terizes suspicious

activity. Because a number of different auditable events map into a smaller

number of actions, the rule-creation process is simpler. Furthermore, the state

transition diagram model is easily modified to accommo- date newly learned

intrusion behaviors.

Table 20.3 USTAT

Actions versus SunOS Event Types
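The benefit of mapping many audit events onto a few general actions can be sketched in a few lines. The event names, the mapping table, and the three-step scenario (create a file under /tmp, change its permissions, then execute it) are hypothetical and are not taken from Table 20.3; the point is that one transition in the diagram covers every event that maps to the same action, for example both chmod and fchmod.

```python
from typing import Dict, Iterable, List, Tuple

# Hypothetical preprocessor table: many low-level events, few general actions.
EVENT_TO_ACTION: Dict[str, str] = {
    "creat": "Create", "open_wc": "Create",
    "open_w": "Write",
    "chmod": "Modify_Perm", "fchmod": "Modify_Perm",
    "execve": "Execute",
}

# State transition diagram for one toy scenario, tracked per file:
# state 0 --Create--> 1 --Modify_Perm--> 2 --Execute--> 3 (compromise state).
NEXT_ACTION: Dict[int, str] = {0: "Create", 1: "Modify_Perm", 2: "Execute"}
FINAL_STATE = 3


def track(events: Iterable[Tuple[str, str]]) -> List[str]:
    """Feed (event name, path) pairs through the diagram; return flagged paths."""
    state: Dict[str, int] = {}
    flagged: List[str] = []
    for event, path in events:
        action = EVENT_TO_ACTION.get(event)
        if action is None or not path.startswith("/tmp/"):
            continue  # event not used by the preprocessor, or out of scope
        current = state.get(path, 0)
        if NEXT_ACTION.get(current) == action:  # other actions leave the state unchanged
            state[path] = current + 1
            if state[path] == FINAL_STATE:
                flagged.append(path)
    return flagged


log = [("creat", "/tmp/x"), ("open_w", "/tmp/x"), ("fchmod", "/tmp/x"),
       ("execve", "/tmp/x"), ("creat", "/tmp/y"), ("execve", "/tmp/y"),
       ("getpid", "-")]
print(track(log))  # prints ['/tmp/x']
```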

The Base-Rate Fallacy

To be of practical use, an intrusion detection system should detect a substantial percentage of intrusions while keeping the false alarm rate at an acceptable level. If only a modest percentage of actual intrusions are detected, the system provides a false sense of security. On the other hand, if the system frequently triggers an alert when there is no intrusion (a false alarm), then either system managers will begin to ignore the alarms, or much time will be wasted analyzing the false alarms.

Unfortunately, because of the nature of the probabilities involved, it is very difficult to meet the standard of a high detection rate with a low false alarm rate. In general, if the actual number of intrusions is low compared to the number of legitimate uses of a system, then the false alarm rate will be high unless the test is extremely discriminating. A study of existing intrusion detection systems, reported in [AXEL00], indicated that current systems have not overcome the problem of the base-rate fallacy. See Appendix 20A for a brief background on the mathematics of this problem.
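The effect follows from Bayes' theorem. The sketch below computes the probability that an alarm corresponds to a real intrusion; the base rate of one intrusive event per 100,000 audited events and the two false alarm rates are hypothetical figures chosen only to show the size of the effect.

```python
def p_intrusion_given_alarm(base_rate: float, detection_rate: float,
                            false_alarm_rate: float) -> float:
    """Bayes' theorem:
    P(I | A) = P(A | I) P(I) / (P(A | I) P(I) + P(A | not I) P(not I))
    """
    true_alarms = detection_rate * base_rate
    false_alarms = false_alarm_rate * (1.0 - base_rate)
    return true_alarms / (true_alarms + false_alarms)


# Even a detector that catches every intrusion is swamped by false alarms
# when intrusions are rare (hypothetical base rate of 1 in 100,000).
for far in (1e-3, 1e-5):
    p = p_intrusion_given_alarm(base_rate=1e-5, detection_rate=1.0, false_alarm_rate=far)
    print(f"false alarm rate {far:g}: P(intrusion | alarm) = {p:.4f}")
```

With a false alarm rate of 0.1%, only about 1 alarm in 100 is real; even at 0.001%, roughly half of the alarms are still false.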

Distributed Intrusion Detection

Until recently, work on intrusion detection systems focused on single-system stand-alone facilities. The typical organization, however, needs to defend a distributed collection of hosts supported by a LAN or internetwork. Although it is possible to mount a defense by using stand-alone intrusion detection systems on each host, a more effective defense can be achieved by coordination and cooperation among intrusion detection systems across the network.

Porras points out the following major issues in the design of a distributed intrusion detection system [PORR92]:

• A distributed intrusion detection system may need to deal with different audit record formats. In a heterogeneous environment, different systems will employ different native audit collection systems and, if using intrusion detection, may employ different formats for security-related audit records.

• One or more nodes in the network will serve as collection and analysis points for the data from the systems on the network. Thus, either raw audit data or summary data must be transmitted across the network. Therefore, there is a requirement to assure the integrity and confidentiality of these data. Integrity is required to prevent an intruder from masking his or her activities by altering the transmitted audit information. Confidentiality is required because the transmitted audit information could be valuable.

• Either a centralized or decentralized architecture can be used. With a centralized architecture, there is a single central point of collection and analysis of all audit data. This eases the task of correlating incoming reports but creates a potential bottleneck and single point of failure. With a decentralized architecture, there are two or more analysis centers, but these must coordinate their activities and exchange information.
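For the integrity requirement in the second point, one standard mechanism is a message authentication code computed over each transmitted record. A minimal sketch using HMAC-SHA256 from Python's standard library, assuming the host and the analysis node already share a secret key (how that key is distributed is outside this sketch). An HMAC does not provide confidentiality; the records would also need to be encrypted in transit, for example over TLS.

```python
import hashlib
import hmac
import json


def seal(record: dict, key: bytes) -> bytes:
    """Serialize an audit record and append an HMAC-SHA256 tag on its own line."""
    body = json.dumps(record, sort_keys=True).encode()  # compact JSON has no raw newlines
    tag = hmac.new(key, body, hashlib.sha256).hexdigest().encode()
    return body + b"\n" + tag


def verify(message: bytes, key: bytes) -> bool:
    """Recompute the tag over the body and compare in constant time."""
    body, _, tag = message.rpartition(b"\n")
    expected = hmac.new(key, body, hashlib.sha256).hexdigest().encode()
    return hmac.compare_digest(tag, expected)


shared_key = b"hypothetical-32-byte-shared-key!"
msg = seal({"subject": "smith", "action": "read", "object": "/etc/passwd"}, shared_key)
tampered = msg.replace(b"/etc/passwd", b"/tmp/harmless")
print(verify(msg, shared_key), verify(tampered, shared_key))  # prints True False
```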

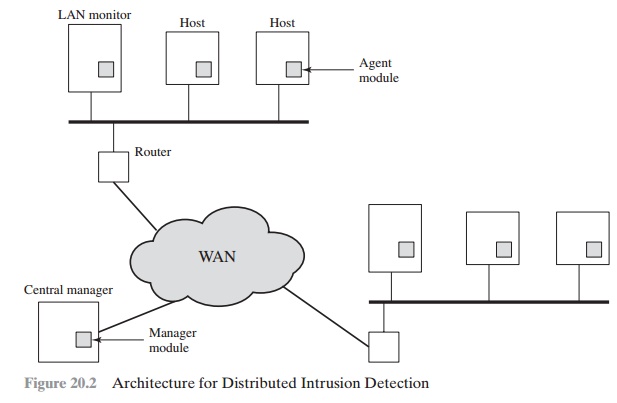

A good example of a distributed intrusion detection system is one developed at the University of California at Davis [HEBE92, SNAP91]. Figure 20.2 shows the overall architecture, which consists of three main components:

• Host agent module: An audit collection module operating as a background process on a monitored system. Its purpose is to collect data on security-related events on the host and transmit these to the central manager.

• LAN monitor agent module: Operates in the same fashion as a host agent module except that it analyzes LAN traffic and reports the results to the central manager.

• Central manager module: Receives reports from LAN monitor and host agents and processes and correlates these reports to detect intrusion.

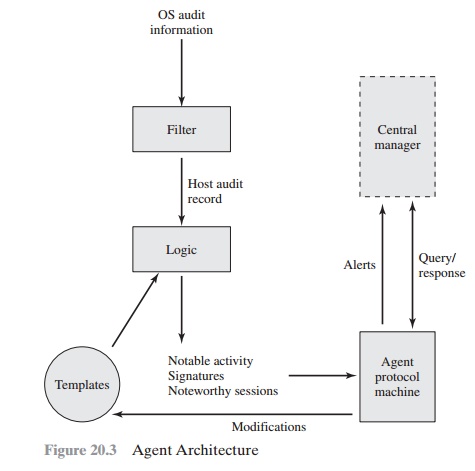

The scheme is designed to be independent of any operating system or system auditing implementation. Figure 20.3 [SNAP91] shows the general approach that is taken.

The agent captures each audit record produced by the native audit collection system. A filter is applied that retains only those records that are of security interest. These records are then reformatted into a standardized format referred to as the host audit record (HAR). Next, a template-driven logic module analyzes the records for suspicious activity. At the lowest level, the agent scans for notable events that are of interest independent of any past events. Examples include failed file accesses, accessing system files, and changing a file’s access control. At the next higher level, the agent looks for sequences of events, such as known attack patterns (signatures). Finally, the agent looks for anomalous behavior of an individual user based on a historical profile of that user, such as number of programs executed, number of files accessed, and the like.

When suspicious activity is detected, an alert is sent to the central manager. The central manager includes an expert system that can draw inferences from received data. The manager may also query individual systems for copies of HARs to correlate with those from other agents.
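The host agent's processing chain (filter, reformat into a HAR, then three levels of analysis) can be sketched as follows. The native record layout, the HAR fields, the signature, and the fixed file-count limit standing in for a learned per-user profile are all hypothetical simplifications; in the real system, alerts would be transmitted to the central manager rather than collected in a list.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class HAR:
    """Hypothetical standardized host audit record."""
    user: str
    action: str
    obj: str
    failed: bool


SECURITY_ACTIONS = {"login", "open", "chmod", "exec"}  # filter: records of security interest
SIGNATURE = ["login", "chmod", "exec"]                 # hypothetical known attack pattern


def to_har(native: dict) -> Optional[HAR]:
    """Filter a native record and reformat it into a HAR (None = discarded)."""
    if native.get("op") not in SECURITY_ACTIONS:
        return None
    return HAR(native["uid"], native["op"], native["path"], native["rc"] != 0)


def notable(h: HAR) -> Optional[str]:
    """Level 1: events of interest regardless of any past events."""
    if h.action == "open" and h.failed:
        return "failed file access"
    if h.obj.startswith("/etc/"):
        return "system file accessed"
    if h.action == "chmod":
        return "access control changed"
    return None


class HostAgent:
    def __init__(self, max_files_per_session: int) -> None:
        self.max_files = max_files_per_session   # stand-in for a learned profile
        self.recent: Dict[str, List[str]] = {}   # last few actions per user
        self.files: Dict[str, int] = {}          # files opened per user
        self.alerts: List[str] = []              # would be sent to the central manager

    def feed(self, native: dict) -> None:
        h = to_har(native)
        if h is None:
            return
        reason = notable(h)
        if reason:
            self.alerts.append(f"{h.user}: {reason}")
        # Level 2: sequences of events matching a known signature.
        seq = self.recent.setdefault(h.user, [])
        seq.append(h.action)
        del seq[:-len(SIGNATURE)]
        if seq == SIGNATURE:
            self.alerts.append(f"{h.user}: signature {SIGNATURE} matched")
        # Level 3: deviation from the user's (here, fixed) profile.
        if h.action == "open":
            self.files[h.user] = self.files.get(h.user, 0) + 1
            if self.files[h.user] == self.max_files + 1:
                self.alerts.append(f"{h.user}: unusual number of files accessed")


agent = HostAgent(max_files_per_session=2)
for rec in [
    {"uid": "eve", "op": "login", "path": "-", "rc": 0},
    {"uid": "eve", "op": "chmod", "path": "/tmp/x", "rc": 0},
    {"uid": "eve", "op": "exec", "path": "/tmp/x", "rc": 0},
    {"uid": "eve", "op": "getpid", "path": "-", "rc": 0},
    {"uid": "eve", "op": "open", "path": "/etc/shadow", "rc": 13},
    {"uid": "eve", "op": "open", "path": "/home/eve/a", "rc": 0},
    {"uid": "eve", "op": "open", "path": "/home/eve/b", "rc": 0},
]:
    agent.feed(rec)
print(agent.alerts)
```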

The LAN monitor agent also supplies information to the central manager. The LAN monitor agent audits host-host connections, services used, and volume of traffic. It searches for significant events, such as sudden changes in network load, the use of security-related services, and network activities such as rlogin.

The architecture depicted in Figures 20.2 and 20.3 is quite general and flexible. It offers a foundation for a machine-independent approach that can expand from stand-alone intrusion detection to a system that is able to correlate activity from a number of sites and networks to detect suspicious activity that would otherwise remain undetected.

Honeypots

A relatively recent innovation in intrusion detection technology is the honeypot. Honeypots are decoy systems that are designed to lure a potential attacker away from critical systems. Honeypots are designed to

• divert an attacker from accessing critical systems

• collect information about the attacker’s activity

• encourage the attacker to stay on the system long enough for administrators to respond

These systems are filled with fabricated information designed to appear valuable but that a legitimate user of the system wouldn’t access. Thus, any access to the honeypot is suspect. The system is instrumented with sensitive monitors and event loggers that detect these accesses and collect information about the attacker’s activities. Because any attack against the honeypot is made to seem successful, administrators have time to mobilize and log and track the attacker without ever exposing productive systems.

Initial efforts involved a single honeypot computer with IP addresses designed to attract hackers. More recent research has focused on building entire honeypot networks that emulate an enterprise, possibly with actual or simulated traffic and data. Once hackers are within the network, administrators can observe their behavior in detail and figure out defenses.

Intrusion Detection Exchange Format

To facilitate the development of distributed intrusion detection systems that can function across a wide range of platforms and environments, standards are needed to support interoperability. Such standards are the focus of the IETF Intrusion Detection Working Group. The purpose of the working group is to define data formats and exchange procedures for sharing information of interest to intrusion detection and response systems and to management systems that may need to interact with them. The outputs of this working group include:

1. A requirements document, which describes the high-level functional requirements for communication between intrusion detection systems and with management systems, including the rationale for those requirements. Scenarios will be used to illustrate the requirements.

2. A common intrusion language specification, which describes data formats that satisfy the requirements.

3. A framework document, which identifies existing protocols best used for communication between intrusion detection systems, and describes how the devised data formats relate to them.

As of this writing, all of these documents are in an Internet-draft document stage.
