Chapter: Software Engineering : Project Management

Estimation – FP Based, LOC Based, Make/Buy Decision, COCOMO II

A function point is a unit of measurement that expresses the amount of business functionality an information system (as a product) provides to a user. Function points measure software size. The cost (in dollars or hours) of a single function point is calculated from past projects.

Using function points instead of lines of code addresses several issues:

· The risk of "inflation" of the created lines of code, which reduces the value of the measurement system, if developers are incentivized to be more productive. FP advocates refer to this as measuring the size of the solution instead of the size of the problem.

· Lines of code (LOC) measures reward low-level languages, because more lines of code are needed to deliver a similar amount of functionality than in a higher-level language. C. Jones offers a method of correcting this in his work.

· LOC measures are not useful during early project phases, when estimating the number of lines of code that will be delivered is challenging. Function points, however, can be derived from requirements and are therefore useful in methods such as estimation by proxy.
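The idea of pricing a single function point from past projects can be sketched as follows. This is a minimal illustration, not a standard counting procedure: the `PastProject` record, the function names, and the history figures are all hypothetical, and a plain average over past projects is assumed.

```python
from dataclasses import dataclass


@dataclass
class PastProject:
    """A completed project with a known size and cost (hypothetical record)."""
    function_points: int
    cost_hours: float


def cost_per_function_point(history: list[PastProject]) -> float:
    """Average cost of one function point across past projects."""
    total_fp = sum(p.function_points for p in history)
    total_hours = sum(p.cost_hours for p in history)
    if total_fp == 0:
        raise ValueError("history must contain at least one function point")
    return total_hours / total_fp


def estimate_cost(new_project_fp: int, history: list[PastProject]) -> float:
    """Estimated cost of a new project whose size is given in function points."""
    return new_project_fp * cost_per_function_point(history)


# Made-up history for illustration: (function points, hours spent).
history = [
    PastProject(120, 960.0),
    PastProject(200, 1500.0),
    PastProject(80, 740.0),
]
rate = cost_per_function_point(history)
estimate = estimate_cost(150, history)
print(f"{rate:.2f} hours per function point, {estimate:.0f} hours estimated")
```

The same calculation works with dollars instead of hours; only the unit of the recorded cost changes.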

LOC:

Source lines of code (SLOC), also known as lines of code (LOC), is a software metric used to measure the size of a computer program by counting the number of lines in the text of the program's source code. SLOC is typically used to predict the amount of effort that will be required to develop a program, as well as to estimate programming productivity or maintainability once the software is produced.
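As a rough illustration of counting lines in source text, the sketch below counts physical lines that are neither blank nor whole-line comments. The function name `count_sloc` and the counting rule are assumptions made for this example; real tools differ in how they treat comments, blank lines, and statements that span several lines.

```python
def count_sloc(source: str, comment_prefix: str = "#") -> int:
    """Count physical source lines: non-blank lines that are not pure comments."""
    count = 0
    for line in source.splitlines():
        stripped = line.strip()
        if not stripped:
            continue  # skip blank lines
        if stripped.startswith(comment_prefix):
            continue  # skip whole-line comments
        count += 1
    return count


sample = """\
# compute a total
def total(values):

    result = 0
    for v in values:
        result += v  # a trailing comment still counts as a code line
    return result
"""
print(count_sloc(sample))
```

Changing the rule (for example, counting comment lines too) changes the number, which is one reason SLOC figures from different tools are hard to compare.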

SLOC measures are somewhat controversial, particularly in the way that they are sometimes misused. Experiments have repeatedly confirmed that effort is highly correlated with SLOC, that is, programs with larger SLOC values take more time to develop. Thus, SLOC can be very effective in estimating effort. However, functionality is less well correlated with SLOC: skilled developers may be able to develop the same functionality with far less code, so one program with fewer SLOC may exhibit more functionality than another similar program.

In particular, SLOC is a poor productivity measure of individuals, since a developer can develop only a few lines and yet be far more productive in terms of functionality than a developer who ends up creating more lines (and generally spending more effort). Good developers may merge multiple code modules into a single module, improving the system yet appearing to have negative productivity because they remove code. Also, especially skilled developers tend to be assigned the most difficult tasks, and thus may sometimes appear less "productive" than other developers on a task by this measure. Furthermore, inexperienced developers often resort to code duplication, which is highly discouraged as it is more bug-prone and costly to maintain, but it results in higher SLOC.

Make/buy decision:

A make-or-buy decision is the act of choosing between manufacturing a product in-house and purchasing it from an external supplier.

In a make-or-buy decision, the two most important factors to consider are cost and the availability of production capacity.

An enterprise may decide to purchase the product rather than produce it if it is cheaper to buy than to make, or if it does not have sufficient production capacity to produce it in-house. With the phenomenal surge in global outsourcing over the past decades, the make-or-buy decision is one that managers have to grapple with very frequently.

Investopedia explains 'make-or-buy decision'

Factors that may influence a firm's decision to buy a part rather than produce it internally include lack of in-house expertise, small volume requirements, desire for multiple sourcing, and the fact that the item may not be critical to its strategy. Similarly, factors that may tilt a firm towards making an item in-house include existing idle production capacity, better quality control or proprietary technology that needs to be protected.
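The two main factors named above, cost and production capacity, can be turned into a very small decision rule. The sketch below is only an illustration: the function name, its parameters, and the order of the checks are assumptions. It deliberately ignores the qualitative factors (expertise, quality control, proprietary technology), which a real decision would also weigh.

```python
def make_or_buy(make_cost_per_unit: float,
                buy_cost_per_unit: float,
                units_needed: int,
                spare_capacity_units: int) -> str:
    """Return "make" or "buy" based only on unit cost and in-house capacity."""
    if units_needed > spare_capacity_units:
        return "buy"   # not enough production capacity to make it in-house
    if buy_cost_per_unit < make_cost_per_unit:
        return "buy"   # cheaper to purchase than to produce
    return "make"


# Made-up figures for illustration.
print(make_or_buy(10.0, 12.0, units_needed=100, spare_capacity_units=500))
print(make_or_buy(10.0, 8.0, units_needed=100, spare_capacity_units=500))
print(make_or_buy(10.0, 12.0, units_needed=1000, spare_capacity_units=500))
```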

COCOMO II

COnstructive COst MOdel II (COCOMO® II) is a model that allows one to estimate the cost, effort, and schedule when planning a new software development activity. COCOMO® II is the latest major extension to the original COCOMO® (COCOMO® 81) model published in 1981. It consists of three submodels, each one offering increased fidelity the further along one is in the project planning and design process. Listed in increasing fidelity, these submodels are called the Applications Composition, Early Design, and Post-architecture models.

COCOMO® II can be used for the following major decision situations:

· Making investment or other financial decisions involving a software development effort

· Setting project budgets and schedules as a basis for planning and control

· Deciding on or negotiating tradeoffs among software cost, schedule, functionality, performance or quality factors

· Making software cost and schedule risk management decisions

· Deciding which parts of a software system to develop, reuse, lease, or purchase

· Making legacy software inventory decisions: what parts to modify, phase out, outsource, etc.

· Setting mixed investment strategies to improve an organization's software capability, via reuse, tools, process maturity, outsourcing, etc.

· Deciding how to implement a process improvement strategy, such as that provided in the SEI CMM

The original COCOMO® model was first published by Dr. Barry Boehm in 1981, and reflected the software development practices of the day. In the ensuing decade and a half, software development techniques changed dramatically. These changes included a move away from mainframe overnight batch processing to desktop-based real-time turnaround; a greatly increased emphasis on reusing existing software and building new systems using off-the-shelf software components; and spending as much effort to design and manage the software development process as was once spent creating the software product.

These changes and others began to make applying the original COCOMO® model problematic. The solution to the problem was to reinvent the model for the 1990s. After several years and the combined efforts of USC-CSSE, ISR at UC Irvine, and the COCOMO® II Project Affiliate Organizations, the result is COCOMO® II, a revised cost estimation model reflecting the changes in professional software development practice that have come about since the 1970s. This new, improved COCOMO® is now ready to assist professional software cost estimators for many years to come.

About the Nomenclature

The original model published in 1981 went by the simple name of COCOMO®. This is an acronym derived from the first two letters of each word in the longer phrase COnstructive COst MOdel. The word constructive refers to the fact that the model helps an estimator better understand the complexities of the software job to be done, and by its openness permits the estimator to know exactly why the model gives the estimate it does. Not surprisingly, the new model (composed of all three submodels) was initially given the name COCOMO® 2.0. However, after some confusion in how to designate subsequent releases of the software implementation of the new model, the name was permanently changed to COCOMO® II. To further avoid confusion, the original COCOMO® model was also then redesignated COCOMO® 81. All references to COCOMO® found in books and literature published before 1995 refer to what is now called COCOMO® 81. Most references to COCOMO® published from 1995 onward refer to what is now called COCOMO® II.

If in examining a reference you are still unsure as to which model is being discussed, there are a few obvious clues. If in the context of discussing COCOMO® these terms are used: Basic, Intermediate, or Detailed for model names; Organic, Semidetached, or Embedded for development mode, then the model being discussed is COCOMO® 81. However, if the model names mentioned are Application Composition, Early Design, or Post-architecture; or if there is mention of scale factors Precedentedness (PREC), Development Flexibility (FLEX), Architecture/Risk Resolution (RESL), Team Cohesion (TEAM), or Process Maturity (PMAT), then the model being discussed is COCOMO® II.

Intermediate COCOMO computes software development effort as a function of program size and a set of "cost drivers" that include subjective assessment of product, hardware, personnel and project attributes. This extension considers a set of four "cost drivers", each with a number of subsidiary attributes:

· Product attributes

o Required software reliability

o Size of application database

o Complexity of the product

· Hardware attributes

o Run-time performance constraints

o Memory constraints

o Volatility of the virtual machine environment

o Required turnaround time

· Personnel attributes

o Analyst capability

o Software engineering capability

o Applications experience

o Virtual machine experience

o Programming language experience

· Project attributes

o Use of software tools

o Application of software engineering methods

o Required development schedule

Each of the 15 attributes receives a rating on a six-point scale that ranges from "very low" to "extra high" (in importance or value). An effort multiplier from the table below applies to the rating. The product of all effort multipliers results in an effort adjustment factor (EAF). Typical values for EAF range from 0.9 to 1.4.
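Since the EAF is simply the product of the effort multipliers, it can be computed in a few lines. In the sketch below the function name and the multiplier values are placeholders chosen for illustration, not values taken from the rating table. A driver rated nominal would contribute a multiplier of 1.00 and can be left out.

```python
import math

# Hypothetical multipliers for a few rated cost drivers (illustrative values only).
effort_multipliers = {
    "required software reliability": 1.15,
    "complexity of the product": 1.30,
    "analyst capability": 0.86,
    "use of software tools": 0.91,
}


def effort_adjustment_factor(multipliers: dict[str, float]) -> float:
    """EAF is the product of the effort multipliers of all rated cost drivers."""
    return math.prod(multipliers.values())


eaf = effort_adjustment_factor(effort_multipliers)
print(f"EAF = {eaf:.3f}")
```

Multipliers above 1 raise the estimate and multipliers below 1 lower it, so strong personnel ratings can partly offset a demanding product.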

The Intermediate Cocomo

formula now takes the form:

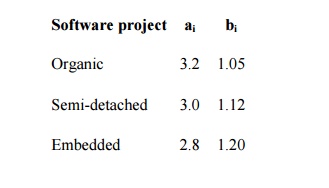

E=ai(KLoC)(bi).EAF

where E is the effort applied in person-months, KLoC is the estimated number of thousands of delivered lines of code for the project, and EAF is the effort adjustment factor calculated above. The coefficient $a_i$ and the exponent $b_i$ are given in the next table.

The development time D is calculated from E in the same way as in Basic COCOMO.
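The effort formula can be tried out with a short sketch. The coefficients below are the commonly published COCOMO 81 values for the three development modes; they stand in for the table the text refers to and should be checked against it. The development time is assumed to follow the Basic COCOMO form D = c · E^d. The names `COEFFICIENTS` and `intermediate_cocomo` are made up for this example.

```python
# mode: (a_i, b_i, c_i, d_i); assumed from commonly published COCOMO 81 tables.
COEFFICIENTS = {
    "organic": (3.2, 1.05, 2.5, 0.38),
    "semidetached": (3.0, 1.12, 2.5, 0.35),
    "embedded": (2.8, 1.20, 2.5, 0.32),
}


def intermediate_cocomo(kloc: float, mode: str, eaf: float = 1.0) -> tuple[float, float]:
    """Return (effort in person-months, development time in months)."""
    a, b, c, d = COEFFICIENTS[mode]
    effort = a * kloc ** b * eaf   # E = a_i * KLoC^(b_i) * EAF
    duration = c * effort ** d     # D = c_i * E^(d_i), as in Basic COCOMO
    return effort, duration


# Illustrative project: 32 KLoC, organic mode, all drivers nominal (EAF = 1.0).
effort, duration = intermediate_cocomo(32, "organic")
print(f"effort = {effort:.1f} person-months, duration = {duration:.1f} months")
```

Because EAF multiplies the whole expression, doubling it doubles the effort, while the development time grows more slowly since the exponent d is below 1.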

DETAILED COCOMO

Detailed COCOMO incorporates all characteristics of the intermediate version, together with an assessment of each cost driver's impact on each step (analysis, design, etc.) of the software engineering process.

The detailed model uses different effort multipliers for each cost driver attribute. These phase-sensitive effort multipliers are each used to determine the amount of effort required to complete each phase. In Detailed COCOMO, the whole software is divided into modules; COCOMO is applied to each module to estimate its effort, and the efforts are then summed.

In Detailed COCOMO, the effort is calculated as a function of program size and a set of cost drivers given according to each phase of the software life cycle.
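The module-by-module approach described above can be pictured with a rough sketch. It applies the effort formula E = a · KLoC^b · EAF to each module and sums the results. The module names, sizes, and EAF values are invented, the default coefficients are the commonly published organic-mode values, and the phase-sensitive multipliers are left out for brevity, so this is a simplification rather than the full detailed model.

```python
from dataclasses import dataclass


@dataclass
class Module:
    """One module of the system (hypothetical record for this sketch)."""
    name: str
    kloc: float   # estimated size in thousands of lines of code
    eaf: float    # effort adjustment factor assessed for this module


def module_effort(module: Module, a: float = 3.2, b: float = 1.05) -> float:
    """Effort in person-months for one module: a * KLoC^b * EAF."""
    return a * module.kloc ** b * module.eaf


def total_effort(modules: list[Module]) -> float:
    """Sum of the per-module effort estimates."""
    return sum(module_effort(m) for m in modules)


# Made-up decomposition for illustration.
modules = [
    Module("user interface", kloc=12.0, eaf=0.95),
    Module("business rules", kloc=15.0, eaf=1.20),
    Module("reporting", kloc=5.0, eaf=1.00),
]
for m in modules:
    print(f"{m.name}: {module_effort(m):.1f} person-months")
print(f"total: {total_effort(modules):.1f} person-months")
```

Because the exponent b is greater than 1, summing per-module estimates gives a different figure than applying the formula once to the combined size, so the choice of decomposition affects the result.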

A detailed project schedule is never static.

The five phases of Detailed COCOMO are:

· Plan and requirements

· System design

· Detailed design

· Module code and test

· Integration and test
