Chapter: Graphics and Multimedia : Hypermedia

Multimedia Authoring and User Interface

Multimedia Authoring Systems

Multimedia authoring systems are designed with two primary groups of target users:

(i) Professionals who prepare documents, audio or sound tracks, and full-motion video clips for wide distribution.

(ii) Average business users who prepare documents, audio recordings, or full-motion video clips for stored messages or presentations.

The authoring system covers the user interface. It also spans issues such as data access, storage structures for individual components embedded in a document, and the user's ability to browse through stored objects.

Most authoring systems are managed by a control application.

Design Issues for Multimedia Authoring

Enterprise-wide standards should be set up to ensure that user requirements are fulfilled with good quality and that objects are transferable from one system to another.

Standards must therefore be set for a number of design issues:

1. Display resolution

2. Data formats for capturing data

3. Compression algorithms

4. Network interfaces

5. Storage formats

Display resolution

A number of design issues must be considered for handling different display outputs:

(a) Level of standardization on display resolutions.

(b) Display protocol standardization.

(c) Corporate norms for service degradations.

(d) Corporate norms for network traffic degradations as they relate to resolution issues.

Setting norms is easy if the number of different workstation types, window managers, and monitor resolutions is limited. If there are many of them, setting norms is difficult.

Another consideration is selecting the protocols to use, because a number of protocols have emerged, including AVI, Indeo, and QuickTime. There should be some level of convergence that allows these three display protocols to exchange data and allows files in other formats to be viewed.

File Format and Data Compression Issues

There is a variety of data formats available for image, audio, and full-motion video objects.

Because the variety is so large, controlling it becomes difficult, and standardizing on a single format is not advisable. Instead, we should select a set of formats for which reliable conversion tools are available.

Another key design issue is to standardize on one or two compression formats for each type of data object. For example, for facsimile documents, CCITT Group 3 and Group 4 should be included in the selected standard. Similarly, for full-motion video, the selected standard should include MPEG and its derivatives such as MPEG-2.

When storing an object, it is useful to have some information about the object (attribute information) available outside the object itself, so that a user can decide whether they need to access the object data. Examples of such attribute information are:

(i) Compression type

(ii) Size of the object

(iii) Object orientation

(iv) Date and time of creation

(v) Source file name

(vi) Version number (if any)

(vii) Software application required to display or play back the object
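The attribute list above can be pictured as a small record kept alongside the object. The following is only a minimal sketch: the class name, field names, sample values, and the `worth_fetching` helper are all hypothetical and chosen for illustration, not taken from any particular authoring system.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Optional


@dataclass(frozen=True)
class ObjectAttributes:
    """Attribute record stored outside the multimedia object itself."""
    compression_type: str          # e.g. "CCITT Group 4" or "MPEG-2"
    size_bytes: int
    orientation: str               # e.g. "portrait" or "landscape"
    created: datetime
    source_file_name: str
    required_application: str      # software needed to display or play back
    version: Optional[int] = None  # version number, if any


def worth_fetching(attrs: ObjectAttributes, max_bytes: int, installed: set) -> bool:
    """Decide from the attributes alone whether to retrieve the object data."""
    return attrs.size_bytes <= max_bytes and attrs.required_application in installed


# Illustrative values only.
clip = ObjectAttributes(
    compression_type="MPEG-2",
    size_bytes=48_000_000,
    orientation="landscape",
    created=datetime(1995, 3, 1, 9, 30),
    source_file_name="intro.mpg",
    required_application="video-player",
    version=2,
)
```

Because the decision uses only the attribute record, the (possibly large) object data never has to be transferred just to find out that it cannot be displayed.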

Service degradation policies: Setting up corporate norms for network traffic degradation is difficult because they relate to resolution issues.

To address these design issues, several policies are possible:

1. Decline further requests with a message to try later.

2. Provide the playback service, but at a lower resolution.

3. Provide the playback service at full resolution but, in the case of sound and full-motion video, drop intermediate frames.
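One way to picture how a server might choose among these policies is sketched below. The bandwidth-based rule, the `min_fraction` threshold, and all names are assumptions made for illustration; the text does not prescribe how the choice is made.

```python
from enum import Enum


class Policy(Enum):
    DECLINE = "decline the request with a message to try later"
    LOWER_RESOLUTION = "play back at a lower resolution"
    DROP_FRAMES = "play back at full resolution, dropping intermediate frames"
    FULL_SERVICE = "play back at full quality"


def choose_policy(required_kbps: float, available_kbps: float,
                  prefer_resolution: bool = True,
                  min_fraction: float = 0.25) -> Policy:
    """Pick a degradation policy from the bandwidth a new request would get.

    min_fraction is an assumed cutoff: below that share of the required
    bandwidth, the request is declined rather than degraded.
    """
    if available_kbps >= required_kbps:
        return Policy.FULL_SERVICE
    if available_kbps < required_kbps * min_fraction:
        return Policy.DECLINE
    return Policy.DROP_FRAMES if prefer_resolution else Policy.LOWER_RESOLUTION
```

A corporate norm would, in effect, fix the values of `min_fraction` and `prefer_resolution` for each class of user or application.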

Design Approach to Authoring

Designing an authoring system spans a number of design issues. They include hypermedia application design specifics, user interface aspects, embedding or linking streams of objects to a main document or presentation, storage of and access to multimedia objects, and playing back combined streams in a synchronized manner.

A good user interface design is crucial to the success of hypermedia applications.

Types of Multimedia Authoring Systems

Authoring systems vary in complexity. For example, a dedicated authoring system that handles only one kind of object for a single user is simple, whereas programmable systems are the most complex.

Dedicated Authoring Systems

Dedicated authoring systems are designed for a single user and generally for single streams.

Designing this type of authoring system is simple, but it becomes complex if the system must be capable of combining even two object streams. Authoring is performed on objects captured by a local video camera or image scanner, or on objects stored in some form of multimedia object library. Users of a dedicated authoring system need not be experts in multimedia or professional artists. The system must therefore provide user interfaces that are extremely intuitive and follow real-world metaphors.

A structured design approach is useful for isolating the visual and procedural design components.

Timeline-based authoring

In a timeline-based authoring system, objects are placed along a timeline. The timeline can be drawn graphically in a window on the screen, or it can be created using a script in a manner similar to a project plan. In either case, the user must specify a resource object and position it on the timeline.

On playback, the object starts playing at that point in the time scale.

Fig: Timeline-based authoring

In most timeline-based approaches, once a multimedia object has been captured in a timeline, it is fixed in location and cannot be manipulated easily. A single timeline therefore loses information about the relative timelines of the individual objects.
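A scripted timeline of this kind can be sketched as a list of entries, each naming a resource object and its position. The structure and the file names below are hypothetical and meant only to make the idea concrete.

```python
from dataclasses import dataclass


@dataclass
class TimelineEntry:
    resource: str      # name of the resource object (hypothetical identifier)
    start_s: float     # position on the timeline, in seconds
    duration_s: float


def active_at(timeline, t_s):
    """Return the resources that are playing at time t_s."""
    return [e.resource for e in timeline
            if e.start_s <= t_s < e.start_s + e.duration_s]


timeline = [
    TimelineEntry("title.bmp", 0.0, 5.0),
    TimelineEntry("narration.wav", 2.0, 20.0),
    TimelineEntry("demo.avi", 5.0, 15.0),
]
```

Each entry stores only an absolute start time, so moving one object does not move the objects that were meant to follow it. This is the loss of relative timing information described above.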

Structured Multimedia Authoring

A structured multimedia authoring approach was presented by Hardman. It is an evolutionary approach based on structured, object-level construction of complex presentations. The approach consists of two stages:

(i) Construction of the structure of a presentation.

(ii) Assignment of detailed timing constraints.

A successful structured authoring system must provide the following capabilities for navigating through the structure of a presentation:

1. Ability to view the complete structure.

2. Maintain a hierarchy of objects.

3. Capability to zoom down to any specific component.

4. View specific components in part or from start to finish.

5. Provide a running status of the percentage full of the designated length of the presentation.

6. Clearly show the timing relations between the various components.

7. Ability to address all multimedia types, including text, image, audio, video, and frame-based digital images.

The author must ensure that there is a good fit within each object hierarchy level. The navigation design of the authoring system should allow the author to view the overall structure while examining a specific object segment more closely.

Programmable Authoring Systems

Early structured authoring tools did not allow authors to express automatic functions for handling certain routine tasks. Programmable authoring systems improve on this by providing powerful functions based on image processing and analysis, and by embedding program interpreters that use these image-processing functions.

The capability of such an authoring system is enhanced by building user programmability into the authoring tool, so that it can perform the analysis and manipulate the stream based on the analysis results. Programmability allows the following tasks to be performed through the program interpreter rather than manually:

* Return the time stamp of the next frame.

* Delete a specified movie segment.

* Copy or cut a specified movie segment to the clipboard.

* Replace the current segment with the clipboard contents.
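The four scripted tasks can be illustrated with a toy stream object. This is a sketch under simplifying assumptions: frames are plain list items, segments are addressed by frame index, the frame rate is constant, and the class and method names are invented for the example.

```python
class MovieStream:
    """A toy movie stream: a list of frames played at a constant frame rate."""

    def __init__(self, frames, fps=10.0):
        self.frames = list(frames)
        self.fps = fps
        self.clipboard = []

    def next_timestamp(self, index):
        """Time stamp in seconds of the frame after `index`, or None at the end."""
        nxt = index + 1
        return nxt / self.fps if nxt < len(self.frames) else None

    def delete(self, start, end):
        """Delete the segment frames[start:end]."""
        del self.frames[start:end]

    def copy(self, start, end):
        """Copy the segment frames[start:end] to the clipboard."""
        self.clipboard = self.frames[start:end]

    def cut(self, start, end):
        """Copy the segment to the clipboard, then delete it from the stream."""
        self.copy(start, end)
        self.delete(start, end)

    def replace(self, start, end):
        """Replace the segment frames[start:end] with the clipboard contents."""
        self.frames[start:end] = self.clipboard
```

An interpreter embedded in the authoring tool would expose operations like these to user scripts, so that a routine edit can be expressed once and repeated automatically.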

Multisource Multi-user Authoring Systems

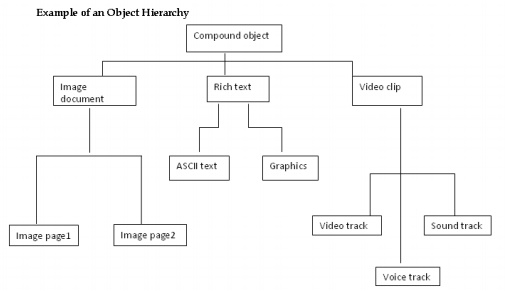

We can have an object hierarchy in a geographic plane; that is, some objects may be linked to other objects by position, while others may be independent and fixed in position.

We need the object data and information on composing it. Composing means locating the object in reference to other objects in time as well as space.

Once the object is rendered (displayed on the screen), the author can manipulate it and change its rendering information. The object data and the rendering information must be available at the same time for display. If there were no limits on network bandwidth and server performance, it would be possible to assemble the required components on cue at the right time to be rendered.

In addition to the multi-user compositing function, a multi-user authoring system must provide resource allocation and scheduling of multimedia objects.

Telephone Authoring systems

There are applications in which the phone is linked into a multimedia electronic mail application:

1. The telephone can be used as a reading device by providing full text-to-speech synthesis capability, so that a user on the road can have electronic mail messages read out over the telephone.

2. The phone can be used for voice command input for setting up and managing voice mail messages. Digitized voice clips are captured via the phone and embedded in electronic mail messages.

3. As the capability to recognize continuous speech is deployed, phones can be used to create electronic mail messages, with the voice converted to ASCII text on the fly by high-performance voice recognition engines.

Phones provide a means of using voice where the alternative of text on a screen is not available. A phone can be used to provide interactive access to electronic mail, calendar information databases, public information databases and news reports, electronic newspapers, and a variety of other applications. Integrating all these applications in a common authoring tool requires great skill in planning.

Telephone authoring systems support different kinds of applications. Some of them are:

1. Workstation controls for phone mail.

2. Voice command controls for phone mail.

3. Embedding of phone mail in electronic mail.

Hypermedia Application Design Consideration

The user interface must be highly intuitive so that users can learn the tools quickly and use them effectively. In addition, the user interface should be designed to cater to the needs of both experienced and inexperienced users.

In addition to control of their desktop environments, users also need control of their system environment. This control should include some of the following:

* The ability to specify a primary server for each object class within a domain specified by the system administrator. A domain can be viewed as a list of servers to which the user has unrestricted access.

* The ability to specify whether all multimedia objects or only references should be replicated.

* The ability to specify whether a multimedia object should be retrieved immediately for display or only after a signal to "play" the object. This is more significant if the object must be retrieved from a remote server.

* Display resolution defaults for each type of graphics or video object.

The following are essential for good hypermedia design:

1. Determining the type of hypermedia application.

2. Structuring the information.

3. Determining the navigation throughout the application.

4. Methodologies for accessing the information.

5. Designing the user interface.

Integration of Applications

The computer may be called upon to run a diverse set of applications, including some combination of the following:

1. Electronic mail.

2. Word processing or technical publishing.

3. Graphics and formal presentation preparation software.

4. Spreadsheet or some other decision support software.

5. Access to a relational or object-oriented database.

6. Customized applications directly related to job function:

* Billing

* Portfolio management

* Others

Integration of these applications consists of two major themes: the appearance of the applications and the ability of the applications to exchange data.

Common UI and Application Integration

Microsoft Windows has standardized the user interface for a large number of applications by providing standardization of the overall visual look and feel of the application windows.

This level of standardization makes it easier for the user to interact with applications designed for the Microsoft Windows operating environment. Standardization is also being provided for Object Linking and Embedding (OLE), Dynamic Data Exchange (DDE), and the Remote Procedure Call (RPC).

Data Exchange

The Microsoft Windows Clipboard allows data to be exchanged in any format, so it can also be used to exchange multimedia objects. We can cut or copy a multimedia object in one document and paste it into another. These documents can be open under different applications. The Windows Clipboard allows the following formats to be stored:

* Text

* Bitmap

* Image

* Sound

* Video (AVI format)

Distributed Data Access

Application integration succeeds only if all the applications required for a compound object can access the subobjects that they manipulate.

Fully distributed data access implies that any application at any client workstation in the enterprise-wide WAN must be able to access any data object as if it were local. The underlying data management software should provide transport mechanisms to achieve this transparency for the application.

Hypermedia Application Design

Hypermedia applications are applications consisting of compound objects that include multimedia objects. An authoring application may use existing multimedia objects or call upon a media editor to create new objects.

Structuring the Information

A good information structure should consist of the following modeling primitives:

* Object types and object hierarchies.

* Object representations.

* Object connections.

* Derived connections and representations.

The goal of information structuring is to identify the information objects and to develop an information model that defines the relationships among these objects.

Types and Object Hierarchies

Object types are associated with various attributes and representations of the objects. The nature of the information structure determines the functions that can be performed on that information set. The object hierarchy defines a contained-in relationship between objects. The manner in which this hierarchy is approached depends on whether the document is being created or played back.

Users need the ability to search for an object while knowing very little about it. Hypermedia application design should allow for such searches.

The user interface of the application depends on the design of the application, particularly the navigation options provided for the user.

Object representations

Multimedia objects have a variety of different object representations. A hypermedia object is a compound object consisting of information elements including data, text, image, and video.

Since each of these multimedia objects may have its own subobjects, the design must consider the representation of objects.

An object representation may require controls that allow the user to alter the rendering of the object dynamically. The controls required for each object representation must be specified with the object.

Object connection

In the relational model, connections are achieved through joins; in object-oriented models, they are achieved through pointers hidden inside objects. Some means of describing explicit connections is required for hypermedia design, to define the relationships among objects more clearly and to help in establishing the navigation.

Derived Connections and Representations

Modeling of a hypermedia

system should attempt to take derived objects into consideration for

establishing connection guidelines.

User Interface Design

Multimedia applications involve user interface design. There are four kinds of user interface development tools:

1. Media editors

2. An authoring application

3. Hypermedia object creation

4. Multimedia object locator and browser

A media editor is an application responsible for the creation and editing of a specific multimedia object such as an image, voice, or video object. Any application that allows the user to edit a multimedia object contains a media editor. Whether the object is text, voice, or full-motion video, the basic functions provided by the editor are the same: create, delete, cut, copy, paste, move, and merge.

Navigation through the application

Navigation refers to the sequence in which the application progresses and objects are created, searched, and used.

Navigation can be in one of three modes:

(i) Direct mode: Navigation is completely predefined. In this case, the user needs to know what to expect with successive navigation actions.

(ii) Free-form mode: The user determines the next sequence of actions.

(iii) Browse mode: The user does not know the precise question and wants to get general information about a particular topic. This is a very common mode in applications based on large volumes of non-symbolic data. It allows a user to explore the databases to support a hypothesis.

Designing user Interfaces

The user interface should be designed in a structured way, following these design guidelines:

1. Planning the overall structure of the application

2. Planning the content of the application

3. Planning the interactive behavior

4. Planning the look and feel of the application

A good user interface must be efficient and must feel intuitive to most users.

The interactive behavior of the application determines how the user interacts with the application. A number of issues are determined at this level:

* Data entry dialog boxes

* Application-designed sequence of operation, depicted by graying or enabling specific menu items

* Context-sensitive operation of buttons

* Active icons that perform ad hoc tasks (ad hoc means created for a particular purpose only)

The look and feel of the application depends on a combination of the metaphor being used to simulate real-life interfaces, Windows guidelines, ease of use, and aesthetic appeal.

Special Metaphors for Multimedia Applications

In this section let us look

at a few key multimedia user interface metaphors.

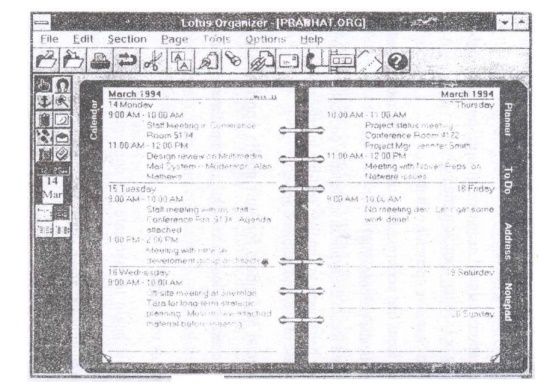

The organizer metaphor

One must begin to associate the concept of embedding multimedia objects in the appointment diary or notepad to get an obvious view of the multimedia aspects of the organizer.

Another use of multimedia objects in an organizer is to associate maps or voice mail directions with addresses in address books.

The Lotus Organizer was the first to use a screen representation of the office diary type of organizer.

Telephone Metaphor: The role of the telephone was changed by the advent of voice mail systems. Voice mail servers convert the analog voice and store it in digital form. With standards for voice mail file formats and digital storage of sound for computers, computer systems are now used to manage the phone system. The two essential components of a phone system are speakers and microphones, and both are included in most personal computers.

Figure 5.5 shows how a telephone can be created on a screen to make it a good user interface.

The telephone keypad on the screen allows the interface to be used just as a telephone keypad is used. Push buttons in dialog boxes and function selections in menus duplicate the functions provided by the keypad. Push buttons, radio buttons, list boxes, data entry fields, and menu selections allow a wider range of functionality than can be achieved by the telephone.

Aural User Interface: An aural user interface (AUI) allows computer systems to accept speech as direct input and to provide an oral response to user actions. Speech enabling is an important feature of this UI. To design an AUI system, we first have to create an aural desktop that substitutes voice and ear for the keyboard and display, and that is able to mix and match them. Aural cues should be able to represent the icons, voice, menus, and windows of a graphical user interface.

AUI design involves human perception, cognitive science, and psychoacoustic theory. AUI systems need to be learning systems so that they can perform routine functions without the user's feedback. An AUI must be temporal and use time-based metaphors.

An AUI has to address the following issues:

1. Recent user memory

2. Attention span

3. Rhythms

4. Quick return to missed oral cues

The VCR metaphor: The user interface metaphor for a VCR is to draw a TV on the screen and provide live buttons on it for selecting or changing channels and increasing the sound volume.

The user interface for functions such as video capture, channel play, and stored video playback emulates the camera, television, and VCR on the screen. Fig 5.6 shows all the functions of a typical video camera when it is in video capture mode.

Audio/Video Indexing Functions

Index marking allows users to mark a location on tape, for both audio and video, to which they may wish to fast forward or rewind.

Another form of index marking is time based. In this form, the tape counter shows play time in hours, minutes, and seconds from the time the counter was reset.

Three paradigms for indexing audio and video tapes are:

* Counters identify tape locations, and the user maintains the index listing.

* Special events are used as index markers.

* Users can specify locations for index markings, and the system maintains the index.

Indexing is useful only if the video is stored. Unless live video is stored, indexing information is lost, since the video cannot be repeated. In most systems where video is stored, the sound and video streams are decompressed and managed separately, so synchronization for playback is important. The indexing information must be stored on a permanent basis.
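The time-based counter and the third paradigm (user-specified marks with a system-maintained index) can be sketched as follows. The class, its methods, and the labels used are hypothetical; persistent storage of the index is left out of the sketch.

```python
def format_counter(seconds_since_reset: int) -> str:
    """Show play time as H:MM:SS, counted from the last counter reset."""
    hours, rest = divmod(seconds_since_reset, 3600)
    minutes, seconds = divmod(rest, 60)
    return f"{hours}:{minutes:02d}:{seconds:02d}"


class MarkIndex:
    """System-maintained index: the user names a location, the system stores it."""

    def __init__(self):
        self._marks = {}  # label -> position in seconds

    def mark(self, label: str, position_s: int) -> None:
        self._marks[label] = position_s

    def seek_target(self, label: str) -> int:
        """Position to fast forward or rewind to for a given mark."""
        return self._marks[label]

    def listing(self):
        """Index listing ordered by position in the recording."""
        ordered = sorted(self._marks.items(), key=lambda m: m[1])
        return [(label, format_counter(pos)) for label, pos in ordered]
```

In a real system the dictionary would be written to permanent storage together with the stored video, since the marks are meaningless once the video they refer to is gone.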

Information Access:

The access structure defines the way objects can be accessed and how navigation takes place through the information objects.

The common forms of navigation for information access are:

Direct: Direct information access is completely predefined. The user must have knowledge about the object that needs to be accessed. That information includes object representations in a compound object.

Indexed: Indexed access abstracts the real object from the access to the object. If the object ID of the object is an index entry that resolves to a filename on a specific server and disk partition, then the information access mechanism is an indexed mechanism.

Random Selection: In this form, the user can pick one of several possible items. The items need not be arranged in any logical sequence, and they need not be displayed sequentially. The user need not have much knowledge about the information; they simply browse through it.

Path selection or Guided tour: In a guided tour, the application guides the user through a predefined path across a number of objects and operations. The user may pause to examine the objects at any stage, but the overall access is controlled by the application. Guided tours can also be used for operations such as controlling the timing for discrete media, such as a slide show, or for controlling a sound track or a video clip.

Browsing: Browsing is useful when the user does not have enough knowledge about the object to access it directly.
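Of the access forms above, indexed access is the easiest to show in a few lines: an object ID resolves through an index to a server, disk partition, and filename. The IDs, server names, and file names below are invented for illustration.

```python
from typing import NamedTuple


class Location(NamedTuple):
    server: str
    partition: str
    filename: str


# Hypothetical index: object ID -> physical location of the object.
OBJECT_INDEX = {
    "obj-0001": Location("media-srv-1", "part-a", "welcome.avi"),
    "obj-0002": Location("media-srv-2", "part-c", "memo.wav"),
}


def resolve(object_id: str, index=OBJECT_INDEX) -> Location:
    """Resolve an object ID to its location; callers hold only the ID."""
    try:
        return index[object_id]
    except KeyError:
        raise LookupError(f"unknown object ID: {object_id}") from None
```

Because documents refer to the object only by its ID, moving the object to another server requires changing one index entry rather than every document that embeds it.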

Object Display Playback Issues: Users expect some common features apart from the basic functions of authoring systems. To provide users with some special controls over the display and playback of these objects, designers have to address a number of issues for image, audio, and video objects.

Image Display Issues

Scaling: Image scaling is performed on the fly after decompression. The image is scaled to fit in an application-defined window at the full pixel rate for the window. The image may be scaled by using factors. For example, an image of 3600 x 4400 pixels can be scaled by a factor of 6 x 10, i.e., to 600 x 440 pixels (a 60-fold reduction in pixel count).

Zooming: Zooming allows the user to see more detail for a specific area of the image. Users can zoom by defining a zoom factor (e.g., 2:1, 5:1, or 10:1). These are set up as preselected zoom values.

Rubber banding: This is another form of zooming. In this case, the user uses a mouse to define two corners of a rectangle. The selected area can be copied to the clipboard, cut, moved, or zoomed.

Panning: If the image window is unable to display the full image at the selected display resolution, the image can be panned left to right or right to left, as well as top to bottom or bottom to top. Panning is useful for finding detail that is not visible in the full image.
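The geometry behind scaling, rubber banding, and panning is simple arithmetic, sketched below. The function names are made up for the example, and the functions compute only coordinates and sizes; resampling the pixels themselves is not shown.

```python
def scaled_size(width, height, factor_x, factor_y):
    """Size of an image after reducing it by integer factors on each axis."""
    return width // factor_x, height // factor_y


def rubber_band(corner_a, corner_b):
    """Normalize two mouse-selected corners into (left, top, right, bottom)."""
    (xa, ya), (xb, yb) = corner_a, corner_b
    return min(xa, xb), min(ya, yb), max(xa, xb), max(ya, yb)


def pan(offset, step, image_len, window_len):
    """Move the window along one axis, clamped so it stays inside the image."""
    return max(0, min(offset + step, image_len - window_len))
```

With the scaling example from the text, `scaled_size(3600, 4400, 6, 10)` gives 600 x 440, which holds one sixtieth of the original pixels.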

Audio Quality: Audio files are stored in one of a number of formats, including WAVE and AVI. Playing back audio requires that the audio file server be capable of playing back data at a rate of 480 kbytes/min uncompressed, or 48 kbytes/min for compressed 8-bit sound and 96 kbytes/min for compressed 16-bit sound.

The calculation is based on an 8 kHz sampling rate (8,000 one-byte samples per second is 480 kbytes/min) and ADPCM compression with an estimated compression ratio. 32-bit audio will need to be supported to get concert hall quality in stored audio. Audio files can be very long: a 20-minute audio clip is over 1 MB long. When played back from the server, it must be transferred either completely in one burst or in a controlled manner.
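The data rates quoted above can be reproduced with a short calculation. Two assumptions are made here that the text does not state outright: the audio is mono, and the estimated ADPCM compression ratio is 10:1, which is the ratio the quoted figures imply.

```python
def kbytes_per_minute(sample_rate_hz, bits_per_sample, compression_ratio=1.0):
    """Data rate of mono audio in kbytes/min (1 kbyte = 1000 bytes here)."""
    bytes_per_second = sample_rate_hz * bits_per_sample / 8
    return bytes_per_second * 60 / 1000 / compression_ratio


uncompressed_8bit = kbytes_per_minute(8000, 8)       # 8 kHz, 8-bit samples
compressed_8bit = kbytes_per_minute(8000, 8, 10)     # assumed 10:1 ratio
compressed_16bit = kbytes_per_minute(8000, 16, 10)   # assumed 10:1 ratio
```

These evaluate to 480, 48, and 96 kbytes/min respectively, matching the figures in the text.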

Special features for video playback: Before looking at the features of video playback, let us define isochronous playback. Playback at a constant rate to ensure proper cadence (the rise and fall in pitch of a person's voice) is known as isochronous playback. Isochronous playback is more complex with video than it is for sound.

If the video consists of multiple clips of video and multiple soundtracks that are retrieved from different servers and combined for playback by accurately synchronizing them, the problem becomes more complex. To achieve isochronous playback, most video storage systems use frame interleaving concepts.

Video Frame Interleaving: Frame interleaving defines the structure of the video file in terms of the layout of sound and video components.

Programmed Degradation: Programmed degradation occurs when the client workstation is unable to keep up with the incoming data. Most video servers are designed to transfer data from storage to the client at constant rates. The video server reads the file from storage, separates the sound and video components, and feeds them as separate streams over the network to the client workstations. Unless specified otherwise by the user, the video server defaults to favoring sound and degrades video playback by dropping frames. Sound can therefore be heard on a constant basis, but the video loses its smooth motion and starts looking shaky because intermediate frames are not seen.

The user can force the ratio of sound to video degradation by changing the interleaving factor for playback; i.e., the video server holds back sound until the required video frames are transferred. This problem becomes more complex when multiple streams of video and audio are being played back from multiple source servers.
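The default behavior, keeping sound intact while dropping intermediate video frames, can be sketched as an even thinning of the frame sequence. The function below is an illustrative assumption about how frames might be selected, using integer frame rates; real servers may choose frames differently (for example, by frame type).

```python
def drop_frames(frame_indices, source_fps, deliverable_fps):
    """Keep an evenly spaced subset of frames that the client can keep up with.

    Audio is assumed to be sent in full; only video frames are dropped.
    Frame rates are integers so the accumulation below is exact.
    """
    if deliverable_fps >= source_fps:
        return list(frame_indices)
    kept, credit = [], 0
    for index in frame_indices:
        credit += deliverable_fps
        if credit >= source_fps:   # enough budget accumulated to send a frame
            kept.append(index)
            credit -= source_fps
    return kept
```

For a 30 frames/s clip delivered to a client that can handle only 10 frames/s, every third frame is kept, which is why motion starts to look shaky while the sound continues uninterrupted.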

Scene change Frame Detection: The scene we see changes every few seconds or minutes and is replaced by a new image. Even within the same scene, there may be constant motion of some objects.

Reason for scene change detection: Automating scene change detection is very useful for browsing through very large video clips to find the exact frame sequence of interest. Spontaneous scene change detection provides an automatic indexing mechanism that can be very useful in browsing. A user can scan a complete video clip very rapidly if the key frame for each new scene is displayed in an iconic (poster frame) form in a slide-sorter type of display. The user can then click on a specific icon to see a particular scene. This saves the user a significant amount of time and effort and reduces resource load by decompressing and displaying only the specific scene of interest rather than the entire video.

Scene change detection is of real advantage if it can be performed without decompressing the video object. Let us take a closer look at potential techniques that can be employed for this purpose.

Techniques:

(i) Histogram Generation: Within a scene, the histogram changes as the subject of the scene moves. For example, if a person is running and the camera pans the scene, a large part of the scene is duplicated with a little shift. But if the scene changes from a field to a room, the histogram changes quite substantially. That is, when a scene cuts over to a new scene, the histogram changes rapidly. Normal histograms require decompressing the video for the successive scenes to allow the optical flow of pixels to be plotted on a histogram. The fact that the video has to be decompressed does help in that the user can jump from one scene to the next. However, showing a slide-sorter view requires the entire video to be decompressed, so this solution does not really do the job.

Since MPEG- and JPEG-encoded video uses DCT coefficients, DCT quantization analysis performed directly on the still-compressed video or audio provides the best alternative for scene change detection without decompressing the video.

The efficiency can be managed by determining the frame interval for checks and by deciding on the regions within the frame that are being checked. A new cut in a scene or a scene change can be detected by concentrating on a very small portion of the frame.

As scene change detection technology improves, as is the case with video compression devices as well as devices that can process compressed video, implementations of scene change detection can be significantly enhanced.
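The histogram technique can be illustrated on already-decompressed frames. This sketch covers only the "normal histogram" approach, not the DCT-based analysis of compressed video. Frames are assumed to be flat lists of 8-bit intensity values, and the bin count, threshold, and function names are arbitrary choices for the example.

```python
def histogram(frame, bins=8, max_value=256):
    """Count pixel intensities (0..max_value-1) into equal-width bins."""
    counts = [0] * bins
    for pixel in frame:
        counts[pixel * bins // max_value] += 1
    return counts


def histogram_distance(h1, h2):
    """Normalized L1 distance between two histograms, in the range 0..1."""
    total = sum(h1) + sum(h2)
    return sum(abs(a - b) for a, b in zip(h1, h2)) / total if total else 0.0


def scene_changes(frames, threshold=0.5, interval=1):
    """Indices of checked frames whose histogram differs sharply from the previous check."""
    cuts, previous = [], None
    for i in range(0, len(frames), interval):
        current = histogram(frames[i])
        if previous is not None and histogram_distance(previous, current) > threshold:
            cuts.append(i)
        previous = current
    return cuts
```

The `interval` parameter corresponds to the frame interval for checks mentioned above: checking every nth frame lowers the cost at the price of locating the cut less precisely. Restricting `frame` to a small region of each image would correspond to checking only a portion of the frame.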

Video scaling, Panning and Zooming:

Scaling: Scaling is an expected feature, since users are used to changing window sizes. When the size of the video window is changed, scaling takes place.

Panning: Panning allows the user to move to other parts of the window. Panning is useful in combination with zooming. Panning is useful only if the video is being displayed at full resolution and the video window is not capable of displaying the entire image. Panning is therefore useful only for video captured using very high resolution cameras.

Zooming: Zooming implies that the stored number of pixels is greater than the number that can be displayed in the video window. In that case, a video scaled to show the complete image in the video window can be paused, and an area can be selected to be shown at a higher resolution within the same video window. The video can then be played again from that point, either in the zoomed mode or in the scaled-to-fit-window mode.

Three Dimensional Object Display and VR (Virtual Reality)

A number of 3D effects are used in home entertainment and in advanced systems used for specialized applications to achieve fine results.

Let us review the approaches in use to determine the impact on multimedia display system design due to these advanced systems.

Planar Imaging Technique: The planar imaging technique, used in computer-aided tomography (CAT scan) systems, displays a two-dimensional (2D) cut of X-ray images through multidimensional data. Specialized display techniques try to project a 3D image constructed from the 2D data. An important design issue is the volume of data being displayed (based on the image resolution and sampling rate) and the rate at which 3D renderings need to be constructed to ensure a proper time sequence for the changes in the data.

Computed tomography has a high range of pixel density and can be used for a variety of applications. Magnetic resonance imaging, on the other hand, is not as fast, nor does it provide as high a pixel density as CT. Ultrasound is the third technique used for 3D imaging in the medical and other fields.
