Chapter: Computer Architecture : Parallelism

Hardware in Parallelism

HARDWARE

Exploiting Thread-Level Parallelism within a Processor

· Multithreading allows multiple threads to share the functional units of a single processor in an overlapping fashion.

· To permit this sharing, the processor must duplicate the independent state of each thread.

· For example, a separate copy of the register file, a separate PC, and a separate page table are required for each thread.

There are two main approaches to multithreading.

1. Fine-grained multithreading switches between threads on each instruction, causing the execution of multiple threads to be interleaved. This interleaving is often done in a round-robin fashion, skipping any threads that are stalled at that time.

2. Coarse-grained multithreading was invented as an alternative to fine-grained multithreading. It switches threads only on costly stalls, such as level-two cache misses. This change relieves the need to have thread switching be essentially free and is much less likely to slow the processor down, since instructions from other threads are issued only when a thread encounters a costly stall.
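The two switching policies above can be compared with a small simulation. This is a minimal sketch under stated assumptions, not a model of any real processor: the pipeline issues one instruction per cycle, each instruction carries a made-up stall length, and the names `Thread`, `demo_threads`, `costly`, and `switch_penalty` are hypothetical. The coarse-grained version charges a fixed switch penalty to reflect that switching is not free in that scheme.

```python
from dataclasses import dataclass


@dataclass
class Thread:
    """Simplified per-thread state that the hardware would duplicate."""
    name: str
    stalls: list       # stalls[i] = cycles the thread is blocked after instruction i
    pc: int = 0        # private program counter (index into stalls)
    ready_at: int = 0  # first cycle at which the thread may issue again

    def done(self):
        return self.pc >= len(self.stalls)

    def ready(self, cycle):
        return not self.done() and cycle >= self.ready_at

    def issue(self, cycle):
        stall = self.stalls[self.pc]
        self.pc += 1
        self.ready_at = cycle + 1 + stall
        return stall


def fine_grained(threads):
    """Pick a thread every cycle, round-robin, skipping stalled threads."""
    trace, cycle, last = [], 0, -1
    n = len(threads)
    while not all(t.done() for t in threads):
        chosen = None
        for step in range(1, n + 1):
            i = (last + step) % n
            if threads[i].ready(cycle):
                chosen = i
                break
        if chosen is None:
            trace.append("-")  # every thread is stalled: idle cycle
        else:
            threads[chosen].issue(cycle)
            trace.append(threads[chosen].name)
            last = chosen
        cycle += 1
    return "".join(trace)


def coarse_grained(threads, costly=4, switch_penalty=2):
    """Stay on one thread; switch only after a stall of at least `costly` cycles."""
    trace, cycle, cur = [], 0, 0
    n = len(threads)
    while not all(t.done() for t in threads):
        t = threads[cur]
        if not t.ready(cycle):
            trace.append("-")  # wait out a cheap stall on the current thread
            cycle += 1
            continue
        stall = t.issue(cycle)
        trace.append(t.name)
        cycle += 1
        if stall < costly and not t.done():
            continue
        # Costly stall (or thread finished): move to the next unfinished thread.
        others = [(cur + s) % n for s in range(1, n)
                  if not threads[(cur + s) % n].done()]
        if others:
            cur = others[0]
            trace.extend("-" * switch_penalty)  # assumed pipeline refill bubble
            cycle += switch_penalty
    return "".join(trace)


def demo_threads():
    # Hypothetical workload: each thread has one long (6-cycle) stall.
    return [Thread("A", [0, 0, 6, 0]), Thread("B", [0, 6, 0, 0])]


if __name__ == "__main__":
    print("fine-grained:  ", fine_grained(demo_threads()))
    print("coarse-grained:", coarse_grained(demo_threads()))
```

In the printed traces each letter is the thread that issued in that cycle and `-` is an idle cycle. The fine-grained trace alternates threads every cycle, while the coarse-grained trace shows runs of one thread separated by switch bubbles.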

Simultaneous Multithreading:

· Converting Thread-Level Parallelism into Instruction-Level Parallelism.

· Simultaneous multithreading (SMT) is a variation on multithreading that uses the resources of a multiple-issue, dynamically scheduled processor to exploit TLP at the same time it exploits ILP.

· The key insight that motivates SMT is that modern multiple-issue processors often have more functional-unit parallelism available than a single thread can effectively use.

· Furthermore, with register renaming and dynamic scheduling, multiple instructions from independent threads can be issued without regard to the dependences among them; the resolution of the dependences can be handled by the dynamic scheduling capability.

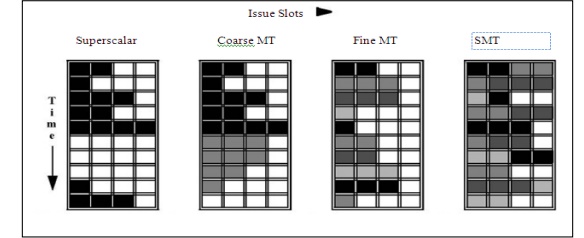

The figure above illustrates the differences in a processor's ability to exploit the resources of a superscalar for the following processor configurations:

· a superscalar with no multithreading support,

· a superscalar with coarse-grained multithreading,

· a superscalar with fine-grained multithreading, and

· a superscalar with simultaneous multithreading.

· In the superscalar without multithreading support, the use of issue slots is limited by a lack of ILP.

· In the coarse-grained multithreaded superscalar, the long stalls are partially hidden by switching to another thread that uses the resources of the processor.

· In the fine-grained case, the interleaving of threads eliminates fully empty slots. Because only one thread issues instructions in a given clock cycle, however, ILP limitations still leave idle slots within individual clock cycles.

· In the SMT case, thread-level parallelism (TLP) and instruction-level parallelism (ILP) are exploited simultaneously, with multiple threads using the issue slots in a single clock cycle.

· Although the figure greatly simplifies the real operation of these processors, it does illustrate the potential performance advantages of multithreading in general and SMT in particular.
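The issue-slot comparison described above can be reproduced with a toy count. This is a sketch under strong assumptions and not a processor model: the issue width of 4, the table `READY`, and all function names are invented for illustration. `READY[t][c]` is the number of independent instructions thread `t` could offer in cycle `c`, and is treated as fixed regardless of what was issued earlier.

```python
WIDTH = 4  # assumed number of issue slots per cycle

# Hypothetical demand table: READY[thread][cycle]; 0 means the thread is stalled.
READY = [
    [2, 1, 0, 0, 0, 0, 2, 1],
    [1, 2, 2, 1, 2, 1, 0, 2],
    [3, 0, 1, 2, 0, 0, 1, 1],
]


def slots_superscalar(ready, width=WIDTH):
    """No multithreading: only thread 0 runs, so its lack of ILP leaves slots empty."""
    return sum(min(width, r) for r in ready[0])


def slots_coarse(ready, width=WIDTH):
    """Stay on one thread; when it has nothing to issue, spend the cycle switching."""
    used, cur = 0, 0
    for cycle in range(len(ready[0])):
        if ready[cur][cycle] == 0:
            cur = (cur + 1) % len(ready)  # this cycle's slots are lost to the switch
        else:
            used += min(width, ready[cur][cycle])
    return used


def slots_fine(ready, width=WIDTH):
    """One thread per cycle, round-robin, skipping threads with nothing ready."""
    used, last, n = 0, -1, len(ready)
    for cycle in range(len(ready[0])):
        for step in range(1, n + 1):
            t = (last + step) % n
            if ready[t][cycle] > 0:
                used += min(width, ready[t][cycle])
                last = t
                break
    return used


def slots_smt(ready, width=WIDTH):
    """SMT: fill each cycle's slots with instructions from all threads together."""
    return sum(min(width, sum(column)) for column in zip(*ready))


if __name__ == "__main__":
    total = WIDTH * len(READY[0])
    for name, fn in [("superscalar", slots_superscalar), ("coarse-grained", slots_coarse),
                     ("fine-grained", slots_fine), ("SMT", slots_smt)]:
        print(f"{name:15s} {fn(READY):2d} of {total} issue slots used")
```

With this particular table the number of used slots rises from the plain superscalar through coarse-grained and fine-grained multithreading to SMT, which mirrors the qualitative ordering in the figure. Other tables can give different gaps, so the numbers themselves carry no weight.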

Motivation for Multi-core

· Exploits smaller feature sizes and the resulting increase in transistor density

· Increases functional units per chip (spatial efficiency)

· Limits energy consumption per operation

· Constrains growth in processor complexity

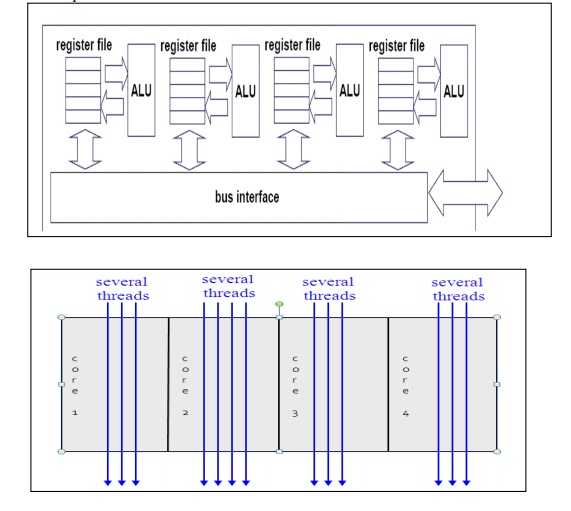

· A multi-core processor is a processing system composed of two or more independent cores (or CPUs). The cores are typically integrated onto a single integrated circuit die (known as a chip multiprocessor or CMP), or they may be integrated onto multiple dies in a single chip package.

· A many-core processor is one in which the number of cores is large enough that traditional multiprocessor techniques are no longer efficient (this threshold is somewhere in the range of several tens of cores) and that likely requires a network on chip.

· A multi-core processor implements multiprocessing in a single physical package. Cores in a multi-core device may be coupled together tightly or loosely. For example, cores may or may not share caches, and they may implement message-passing or shared-memory inter-core communication methods. Common network topologies to interconnect cores include bus, ring, 2-dimensional mesh, and crossbar.

· All cores are identical in symmetric multi-core systems, and they are not identical in asymmetric multi-core systems. Just as with single-processor systems, cores in multi-core systems may implement architectures such as superscalar, vector processing, or multithreading.

· Multi-core processors are widely used across many application domains, including general-purpose, embedded, network, digital signal processing, and graphics.

· The amount of performance gained by the use of a multi-core processor is strongly dependent on the software algorithms and implementation.

· Multi-core processing is a growing industry trend as single-core processors rapidly reach the physical limits of possible complexity and speed.

· Companies that have produced or are working on multi-core products include AMD, ARM, Broadcom, Intel, and VIA.

· With a shared on-chip cache memory, communication events can be reduced to just a handful of processor cycles.

· With such low latencies, communication delays have a much smaller impact on overall performance.

· Threads can also be much smaller and still be effective.

· Automatic parallelization becomes more feasible.
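The earlier point that the performance gain depends strongly on the software can be made concrete with Amdahl's law, which the text above does not name and is brought in here only as one common way to estimate the effect. The sketch assumes a program in which a fraction `p` of the work parallelizes perfectly across `n` cores and the rest runs serially; the function name `amdahl_speedup` is hypothetical.

```python
def amdahl_speedup(p, n):
    """Estimated speedup on n cores when a fraction p of the work is parallel.

    Amdahl's law: speedup = 1 / ((1 - p) + p / n).
    Ignores communication, synchronization, and memory effects.
    """
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be between 0 and 1")
    if n < 1:
        raise ValueError("n must be at least 1")
    return 1.0 / ((1.0 - p) + p / n)


if __name__ == "__main__":
    for p in (0.5, 0.9, 0.99):
        row = ", ".join(f"{n} cores: {amdahl_speedup(p, n):.2f}x" for n in (2, 8, 64))
        print(f"parallel fraction {p:.2f} -> {row}")
```

Under this estimate the serial fraction dominates quickly as cores are added, which is one reason thread-level parallelism in the application matters more than the raw core count.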

Multiple cores run in parallel

Properties of Multi-core systems

· Cores will be shared with a wide range of other applications dynamically.

· Load can no longer be considered symmetric across the cores.

· Cores will likely not be symmetric as accelerators become common for scientific hardware.

· Source code will often be unavailable, preventing compilation against the specific hardware configuration.

Applications that benefit from multi-core

· Database servers

· Web servers

· Telecommunication markets

· Multimedia applications

· Scientific applications

· In general, applications with thread-level parallelism (as opposed to instruction-level parallelism)
