Chapter: Fundamentals of Database Systems : Query Processing and Optimization, and Database Tuning : Physical Database Design and Tuning

Physical Database Design in Relational Databases

Physical Database Design in Relational

Databases

In this section, we begin by

discussing the physical design factors that affect the performance of

applications and transactions, and then we comment on the specific guidelines

for RDBMSs.

1. Factors That Influence Physical Database

Design

Physical design is an activity where the goal

is not only to create the appropriate structuring of data in storage, but also

to do so in a way that guarantees good performance. For a given conceptual schema,

there are many physical design alternatives in a given DBMS. It is not

possible to make meaningful physical design decisions and performance analyses

until the database designer knows the mix of queries, transactions, and

applications that are expected to run on the database. This is called the job mix for the particular set of

database system applications. The database administrators/designers must

analyze these applications, their expected frequencies of invocation, any

timing constraints on their execution speed, the expected frequency of update

operations, and any unique constraints on attributes. We discuss each of these

factors next.

A. Analyzing the Database Queries and

Transactions. Before undertaking the physical database design, we must have a good idea of the

intended use of the database by defining in a high-level form the queries and

transactions that are expected to run on the database. For each retrieval query, the following

information about the query would be needed:

The

files that will be accessed by the query.

The

attributes on which any selection conditions for the query are specified.

Whether

the selection condition is an equality, inequality, or a range condi-tion.

The

attributes on which any join conditions or conditions to link multiple tables

or objects for the query are specified.

The

attributes whose values will be retrieved by the query.

The attributes listed in items 2 and 4 above

are candidates for the definition of access structures, such as indexes, hash

keys, or sorting of the file.

For each update

operation or update transaction,

the following information would be needed:

The

files that will be updated.

The type

of operation on each file (insert, update, or delete).

The

attributes on which selection conditions for a delete or update are spec-ified.

The

attributes whose values will be changed by an update operation.

Again, the attributes listed in item 3 are

candidates for access structures on the files, because they would be used to

locate the records that will be updated or deleted. On the other hand, the

attributes listed in item 4 are candidates for avoiding an access structure,

since modifying them will require updating the access structures.

B. Analyzing the Expected Frequency of

Invocation of Queries and Transactions. Besides identifying the characteristics of expected retrieval

queries and update transactions, we must consider their expected rates of

invocation. This frequency information, along with the attribute information

collected on each query and transaction, is used to compile a cumulative list

of the expected fre-quency of use for all queries and transactions. This is

expressed as the expected fre-quency of using each attribute in each file as a

selection attribute or a join attribute, over all the queries and transactions.

Generally, for large volumes of processing, the informal 80–20 rule can be used: approximately 80 percent of the processing

is accounted for by only 20 percent of the queries and transactions. Therefore,

in prac-tical situations, it is rarely necessary to collect exhaustive

statistics and invocation rates on all the queries and transactions; it is

sufficient to determine the 20 percent or so most important ones.

Analyzing the Time Constraints of Queries and

Transactions. Some queries and transactions may have stringent performance

constraints. For example, a transaction may have the constraint that it should

terminate within 5 seconds on 95 percent of the occasions when it is invoked,

and that it should never take more than 20 seconds. Such timing constraints

place further priorities on the attributes that are candidates for access

paths. The selection attributes used by queries and transactions with time

constraints become higher-priority candidates for primary access structures for

the files, because the primary access structures are generally the most

efficient for locating records in a file.

Analyzing the Expected Frequencies of Update

Operations. A minimum number of access paths should be specified for a file that is

frequently updated, because updating the access paths themselves slows down the

update operations. For example, if a file that has frequent record insertions

has 10 indexes on 10 different attributes, each of these indexes must be

updated whenever a new record is inserted. The overhead for updating 10 indexes

can slow down the insert operations.

Analyzing the Uniqueness Constraints on

Attributes. Access paths should be specified on all candidate

key attributes—or sets of attributes—that are either the primary key of a

file or unique attributes. The existence of an index (or other access path)

makes it sufficient to only search the index when checking this uniqueness

constraint, since all values of the attribute will exist in the leaf nodes of

the index. For example, when inserting a new record, if a key attribute value

of the new record already exists in the

index, the insertion of the new record should be rejected, since it would violate the uniqueness constraint

on the attribute.

Once the preceding information is compiled, it

is possible to address the physical database design decisions, which consist

mainly of deciding on the storage structures and access paths for the database

files.

2. Physical Database Design Decisions

Most relational systems represent each base

relation as a physical database file. The access path options include

specifying the type of primary file organization for each relation and the

attributes of which indexes that should be defined. At most, one of the indexes

on each file may be a primary or a clustering index. Any number of additional

secondary indexes can be created.

Design Decisions about Indexing. The attributes whose values are required in equality or range conditions (selection operation) are those that

are keys or that participate in join conditions (join operation) requiring

access paths, such as indexes.

The performance of queries largely depends upon

what indexes or hashing schemes exist to expedite the processing of selections

and joins. On the other hand, during insert, delete, or update operations, the

existence of indexes adds to the overhead. This overhead must be justified in

terms of the gain in efficiency by expediting queries and transactions.

The physical design decisions for indexing fall

into the following categories:

Whether to index an attribute. The general rules for creating an index on an attribute are that the attribute must

either be a key (unique), or there must be some query that uses that attribute

either in a selection condition (equal-ity or range of values) or in a join

condition. One reason for creating multi-ple indexes is that some operations

can be processed by just scanning the indexes, without having to access the

actual data file (see Section 19.5).

What attribute or attributes to index on. An index can be constructed on a single attribute, or on more than one

attribute if it is a composite index. If multiple attributes from one relation

are involved together in several queries, (for example, (Garment_style_#, Color) in a garment inventory database), a

multiattribute (composite) index is warranted. The ordering of attributes

within a multiattribute index must correspond to the queries. For instance, the

above index assumes that queries would be based on an ordering of col-ors

within a Garment_style_# rather than vice versa.

Whether to set up a clustered index. At most, one index per table can be a primary or clustering index, because

this implies that the file be physically

ordered on that attribute. In most RDBMSs, this

is specified by the keyword CLUSTER. (If the attribute is a key, a primary index is created, whereas a clustering

index is created if the attribute

is not a key—see Section 18.1.) If a table requires several indexes, the

decision about which one should be the primary or clustering index depends upon

whether keeping the table ordered on that attribute is needed. Range queries

benefit a great deal from clustering. If several attributes require range

queries, relative benefits must be evaluated before deciding which attribute to

cluster on. If a query is to be answered by doing an index search only (without

retrieving data records), the corresponding index should not be clustered, since the main benefit of clustering is achieved

when retrieving the records themselves. A clustering index may be set up as a

multiattribute index if range retrieval by that composite key is useful in

report creation (for example, an index on Zip_code, Store_id, and

Product_id may be a clustering index for

sales data).

Whether

to use a hash index over a tree index. In general, RDBMSs use B+-trees for indexing. However,

ISAM and hash indexes are also provided in some systems (see Chapter 18). B+-trees

support both equality and range queries on the attribute used as the search

key. Hash indexes work well with equality conditions, particularly during joins

to find a matching record(s), but they do not support range queries.

Whether to use dynamic hashing for the file. For files that are very volatile—that is, those that grow and shrink continuously—one of the

dynamic hashing schemes discussed in Section 17.9 would be suitable. Currently,

they are not offered by many commercial RDBMSs.

How to Create an Index. Many RDBMSs have a similar type of command for creating an index, although it is not part of the SQL standard. The

general form of this command is:

CREATE [ UNIQUE ] INDEX <index name>

ON <table name> ( <column

name> [ <order> ] { , <column

name> [ <order> ] } ) [ CLUSTER ] ;

The keywords UNIQUE and CLUSTER are optional. The keyword CLUSTER is used when the index to be created should also sort the data

file records on the indexing attribute. Thus, specifying CLUSTER on a key (unique) attribute would create some variation of a

primary index, whereas specifying CLUSTER on a nonkey (nonunique) attribute would create

some variation of a clustering index. The value for <order> can be either ASC (ascending) or DESC (descending), and

specifies whether the data file should be ordered in ascending or descending

values of the indexing attribute. The default is ASC. For example, the

following would create a clustering (ascending) index on the nonkey attribute Dno of the EMPLOYEE file:

CREATE

INDEX DnoIndex

ON EMPLOYEE (Dno)

CLUSTER ;

Denormalization as a Design Decision for

Speeding Up Queries. The

ulti-mate goal during normalization (see Chapters 15 and 16) is to separate

attributes into tables to minimize redundancy, and thereby avoid the update

anomalies that lead to an extra processing overhead to maintain consistency in

the database. The ideals that are typically followed are the third or

Boyce-Codd normal forms (see Chapter 15).

The above ideals are sometimes sacrificed in

favor of faster execution of frequently occurring queries and transactions.

This process of storing the logical database design (which may be in BCNF or

4NF) in a weaker normal form, say 2NF or 1NF, is called denormalization. Typically, the designer includes certain

attributes from a table S into

another table R. The reason is that

the attributes from S that are

included in R are frequently

needed—along with other attributes in R—for

answer-ing queries or producing reports. By including these attributes, a join

of R with S is avoided for these frequently occurring queries and reports.

This reintroduces redundancy in the

base tables by including the same attributes in both tables R and S. A partial

functional dependency or a transitive dependency now exists in the table R, thereby creating the associated

redundancy problems (see Chapter 15). A tradeoff exists between the additional updating needed for maintaining

consistency of redundant attributes versus the effort needed to perform a join

to incorporate the additional attributes needed in the result. For example,

consider the following rela-tion:

ASSIGN (Emp_id, Proj_id,

Emp_name, Emp_job_title,

Percent_assigned, Proj_name,

Proj_mgr_id, Proj_mgr_name),

which corresponds exactly to the headers in a

report called The Employee Assignment Roster.

This relation is only in 1NF because of the

following functional dependencies:

Proj_id → Proj_name,

Proj_mgr_id

Proj_mgr_id → Proj_mgr_name

Emp_id → Emp_name, Emp_job_title

This relation may be preferred over the design

in 2NF (and 3NF) consisting of the following three relations:

EMP (Emp_id, Emp_name, Emp_job_title)

PROJ (Proj_id, Proj_name, Proj_mgr_id)

EMP_PROJ (Emp_id, Proj_id, Percent_assigned)

This is because to produce the The Employee Assignment Roster report

(with all fields shown in ASSIGN above), the latter multirelation design

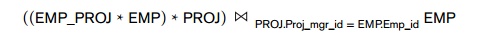

requires two NATURAL JOIN (indicated with *) operations (between EMP and EMP_PROJ, and between PROJ and EMP_PROJ), plus a final JOIN between PROJ and EMP to retrieve the Proj_mgr_name from the Proj_mgr_id. Thus the following JOINs would be needed (the final join would also require renaming

(aliasing) of the last EMP table, which is not shown):

It is also possible to create a view for the ASSIGN table. This does not mean that the join operations will be

avoided, but that the user need not specify the joins. If the view table is

materialized, the joins would be avoided, but if the virtual view table is not

stored as a materialized file, the join computations would still be necessary.

Other forms of denormalization consist of storing extra tables to maintain

original functional dependencies that are lost during BCNF decomposition. For

example, Figure 15.14 shows the TEACH(Student, Course, Instructor) relation with the func-tional dependencies {{Student, Course} → Instructor, Instructor → Course}. A lossless decomposition of TEACH into T1(Student, Instructor) and T2(Instructor, Course) does not

allow queries of the form what course

did student Smith take from instructor Navathe to be answered without

joining T1 and T2. Therefore, storing T1, T2, and TEACH may be a possible solution, which reduces the design from BCNF to

3NF. Here, TEACH is a materialized join of the other two

tables, representing an extreme redundancy. Any updates to T1 and T2 would have to be applied to TEACH. An alter-nate strategy is to create T1 and T2 as updatable base tables, and to create TEACH as a view (virtual table) on T1 and T2 that can only be queried.

Related Topics