Chapter: Multicore Application Programming For Windows, Linux, and Oracle Solaris : Using Automatic Parallelization and OpenMP

An Example of Parallelization

An

Example of Parallelization

As an example of automatic parallelization and parallelization using

OpenMP, we will consider a short code that determines whether each point in a

matrix is in or out of the Mandelbrot set. Listing 7.63 shows the code.

Listing 7.63 Code

to Determine Whether a Point Is in the Mandelbrot Set

int inSet( double ix, double iy )

{

int iterations = 0;

double x = ix, y = iy;

double x2 = x*x, y2

= y*y;

while ( (x2 + y2 < 4) && (iterations < 1000)

) /* Line 9 */

{

y = 2*x*y + iy;

x = x2 – y2

+ ix;

x2= x*x;

y2= y*y;

iterations++;

}

return iterations;

}

The code in Listing 7.63 contains a single loop.

This loop is not suitable for automatic parallelization for two reasons:

n Each iteration of the loop depends on the previous iteration. It is not

possible for the calculation of the next iteration to start until the previous

iteration has com-pleted. This fact means that the loop can be calculated only

in serial.

n The loop iterates until the point either escapes a circle of radius 2

centered around the origin or the maximum iteration count is exceeded. Since

the trip count is unknown until the loop is executed, it is not possible to

assign the work to multi-ple threads because it is not known how much work

there is to perform.

Listing 7.64 shows the main code, including OpenMP

parallelization directives. This allocates a large matrix to hold the results

of the calculations and then computes for every point in the matrix whether it

is in or out of the Mandelbrot set. The final loop in the code is purely there

to ensure that the compiler does not eliminate the main loops because the

values are not used.

Listing 7.64 Main

Loop for Mandelbrot Code

#define

SIZE 4000

int

main()

{

int

*matrix[SIZE];

for

( int i=0; i<SIZE; i++ ) /* Line 24 */

{

matrix[i]

= (int*)malloc( SIZE*sizeof(int) );

}

#pragma

omp parallel for

for

( int x=0; x<SIZE; x++ ) /* Line 30 */

for

( int y=0; y<SIZE; y++ ) /* Line 31 */

{

double

xv = ( (double)x - (SIZE/2) ) / (SIZE/4);

double

yv = ( (double)y - (SIZE/2) ) / (SIZE/4);

matrix[x][y]

= inSet(xv,yv);

}

for

( int x=0; x<SIZE; x++ ) /* Line 38 */

for

( int y=0; y<SIZE; y++ ) /* Line 39 */

if

( matrix[x][y] == -7 ) { printf(""); }

}

To get automatic parallelization to work, the routine inSet must be inlined so that the compiler can determine that parallelization

is safe. Solaris Studio compilers require an optimization level of at least -xO4. Listing 7.65 shows the result of compiling the code. The compiler has

parallelized the inlined call to inSet, but it has only been able to parallelize the innermost loop. The

outermost loop iterates over an array of pointers, and multiples of those

pointers could point to the same memory address; hence, the compiler cannot

parallelize the outer loop because of aliasing issues.

Listing 7.65 Results

of Using Automatic Parallelization on the Mandelbrot Example

$ cc -xO4 -xautopar -xloopinfo

mandle.c

"mandle.c", line 9: not parallelized, loop has multiple exits

"mandle.c", line 9: not parallelized,

loop has multiple exits (inlined loop) "mandle.c", line 24: not

parallelized, call may be unsafe

"mandle.c", line 30: not parallelized, unsafe dependence

"mandle.c", line 31:

PARALLELIZED

"mandle.c", line 38: not parallelized,

call may be unsafe "mandle.c", line 39: not parallelized, call may be

unsafe

The source code in Listing 7.64 already includes an OpenMP directive to

make the outermost loop parallel. Listing 7.66 shows the result of compiling

and running the OpenMP code with various numbers of threads.

Listing 7.66 Performance

of Code Parallelized with OpenMP

% export OMP_NUM_THREADS=1

% timex ./a.out

real 28.83

user 28.69

sys 0.07

% export OMP_NUM_THREADS=2

% timex ./a.out

real 20.92

user 28.69

sys 0.07

% export OMP_NUM_THREADS=3

% timex ./a.out

real 23.35

user 28.69

sys 0.06

The code takes about 29 seconds of wall time to run with a single

thread, roughly 21 seconds with two threads and just over 23 seconds with three

threads. This is far from ideal scaling, which would be that the runtime

decreased in proportion to the number of running threads. Notice that the user

time for the code remains constant. This means that the same amount of work is performed, regardless of the number of

threads used. This indicates that the poor scaling is not the result of the

threads having to perform an increasing amount of work. If the amount of work

is not increasing, it suggests that the scaling problems are the result of that

work being poorly distributed between the threads.

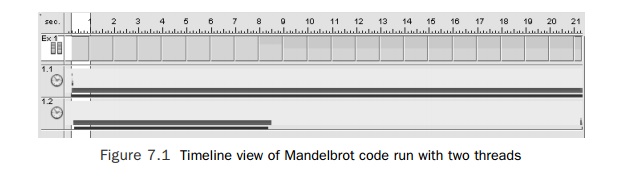

Figure 7.1 shows the timeline view from the Solaris Studio Performance

Analyzer running the code with two threads. This view shows the activity of the

two threads over the entire duration of the run. There are three lines shown.

The top line shows the activ-ity of all the threads in the application. This

shows that initially both threads were run-ning entirely in user mode, and

around 8 seconds into the run, only one of the two threads was in user mode

while the other thread was idle.

The other two lines indicate the activity of the

two threads. The top one shows that the master thread was active over the

entire duration of the run, as is to be expected by the OpenMP execution model.

The second thread was active only during the first 8.5 seconds of the run. This

confirms that the poor scaling is because of an unequal distribu-tion of work

between the two threads.

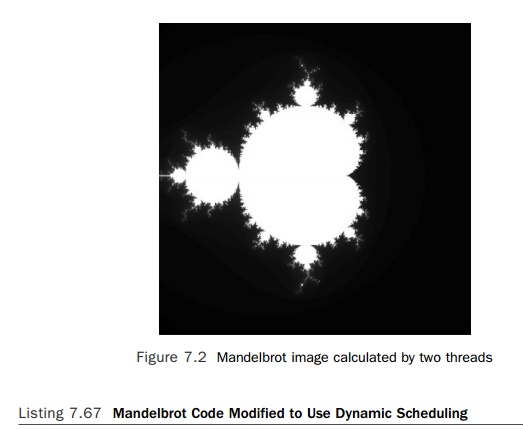

It is easy to understand where this workload

imbalance comes from when the actual image being computed is viewed. Figure 7.2

shows the image that is being computed. One thread is computing the left half,

the other the right half. For a large number of the points, colored black in

the image, it takes only a few iterations to determine that the point is not in

the set. However, it takes the maximum limit on the iteration count to

determine that a point is, or might be, in the set; these points are colored

white.

The areas that are shaded black in the figure take

relatively few iterations and are computed quickly. The areas that are shaded

white take many iterations and therefore take some time to compute. Comparing

the left and right halves of the image, it is apparent that the right half

contains more black pixels than the left half. This means that the thread

computing the right half will take less time than the thread computing the left

half. This is the source of the workload imbalance between the two threads.

Fixing the workload imbalance is relatively easy since it is just a

matter of changing the scheduling clause for the parallel code. Either dynamic

or guided scheduling could be used. Listing 7.67 shows the code modified to use

dynamic scheduling.

Listing 7.67 Mandelbrot

Code Modified to Use Dynamic Scheduling

#define SIZE 4000

int main()

{

int *matrix[SIZE];

for (int i=0; i<SIZE; i++)

{

matrix[i]=(int*)malloc(SIZE*sizeof(int));

}

#pragma omp

parallel for schedule( dynamic )

for (int x=0; x<SIZE; x++) for (int y=0;

y<SIZE; y++)

{

double xv = ((double)x-(SIZE/2))/(SIZE/4); double

yv = ((double)y-(SIZE/2))/(SIZE/4); matrix[x][y]=inSet(xv,yv);

}

for (int x=0; x<SIZE; x++) for (int y=0;

y<SIZE; y++)

if (matrix[x][y]==-7){printf("");}

}

Listing 7.68 shows the resulting scaling from this

code. With dynamic scheduling, the work is evenly distributed across the

threads, leading to nearly linear performance gains as the number of threads

increases.

Listing 7.68 Scaling

of Dynamically Scheduled Code

% setenv OMP_NUM_THREADS 1

% timex ./a.out

real 28.84

user 28.69

sys 0.07

% setenv OMP_NUM_THREADS 2

% timex ./a.out

real 15.42

user 28.69

sys 0.07

% setenv OMP_NUM_THREADS 3

% timex ./a.out

real 10.49

user 28.70

sys 0.08

Related Topics